Bridging the gap between AI/ML model development and DevSecOps

Store, secure, govern, and manage AI components with confidence

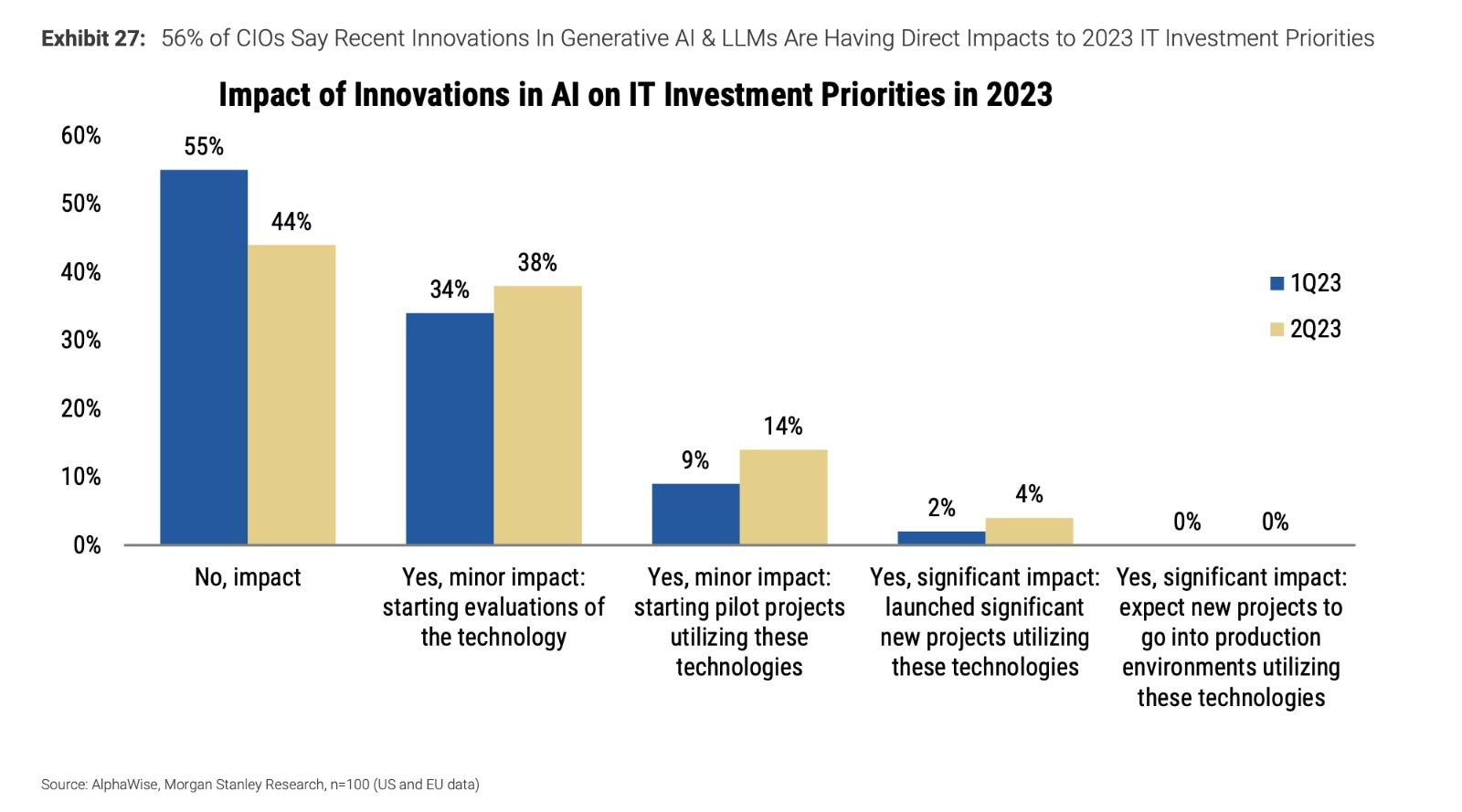

Artificial intelligence (AI) and machine learning (ML) have hit the mainstream: the tools people use every day, from making restaurant reservations to shopping online, are increasingly powered by machine learning. In fact, according to Morgan Stanley, 56% of CIOs say that recent innovations in AI are having a direct impact on investment priorities. It's no surprise, then, that the ML Engineer role is one of the fastest-growing jobs.

At the core of every AI-enabled application is the model powering it. And that model is just another binary that needs to be secured, managed, tracked, and deployed as part of a quality software application. The challenge for organizations is that model development is still a relatively new domain, largely occurs in isolation, and lacks transparency and integration with broader, more established software development practices.

With all signs indicating that the use of ML models will only continue to increase in the future, it’s imperative that DevOps and Security practitioners act to serve the MLOps needs of their organizations. This means establishing:

- Local repos for proprietary and internally augmented ML and GenAI models that scale to support massive binary files

- Remote repos for proxying the public model hubs organizations rely on to quickly build and bring new AI-enabled features to market

- A single source of truth that facilitates automation of the development, management, and security of models

- Full control and governance over which models are brought into and leveraged by the ML/data science organization in anticipation of inevitable regulations to come

- The ability to manage models alongside other binaries to seamlessly bundle and distribute ML models as part of any software release

Introducing Machine Learning Model Management from JFrog

JFrog’s ML Model Management makes it easy for DevOps and Security teams to leverage their existing JFrog solution to meet their organization’s MLOps needs, integrating seamlessly into the workflows of ML Engineers and Data Scientists. In doing so, organizations can apply their existing practices and policies towards ML model development, extending their secure software supply chain.

Now in Open Beta for JFrog SaaS instances, with full hybrid support on the way, ML Model Management lets JFrog users leverage Artifactory to manage their proprietary models as well as proxy Hugging Face (a leading model hub) for the third-party models they bring into their organization. Once inside Artifactory, users can include models as part of immutable Release Bundles for maturation towards release and distribution.

Additionally, by using JFrog Xray’s industry-first ML security capabilities, organizations can detect and block malicious models and those with non-compliant licenses.
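The post doesn't say how Xray identifies a malicious model, so what follows is only a generic illustration of one well-known risk, not Xray's method. Many model files are saved with Python's pickle format, and unpickling can import and call arbitrary functions. This minimal sketch uses the standard library's `pickletools` to list the imports a pickle would perform without ever loading it; the deny-list `SUSPICIOUS` and the helper names are hypothetical.

```python
import os
import pickle
import pickletools

# Hypothetical deny-list of callables a model file has no business importing.
SUSPICIOUS = {
    ("os", "system"), ("posix", "system"), ("nt", "system"),
    ("subprocess", "Popen"), ("builtins", "eval"), ("builtins", "exec"),
}
STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def imported_globals(data: bytes) -> set:
    """Collect (module, name) pairs a pickle would import, without unpickling it."""
    found = set()
    recent_strings = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            # Protocols 0-3 encode the import inline as "module name".
            module, name = arg.split(" ", 1)
            found.add((module, name))
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Protocol 4+ pushes module and name as strings just before STACK_GLOBAL.
            # Naive: strings re-fetched from the memo (BINGET) are not tracked.
            found.add((recent_strings[-2], recent_strings[-1]))
        elif opcode.name in STRING_OPS:
            recent_strings.append(arg)
    return found


def flag_pickle(data: bytes) -> set:
    """Return the suspicious imports found in a pickle, if any."""
    return imported_globals(data) & SUSPICIOUS


class Payload:
    def __reduce__(self):
        # Unpickling this object would run a shell command.
        return (os.system, ("echo pwned",))


malicious = pickle.dumps(Payload())  # dumps only records the call; nothing is executed
benign = pickle.dumps({"weights": [0.1, 0.2]})
print(flag_pickle(malicious))  # {('posix', 'system')} on Linux/macOS
print(flag_pickle(benign))     # set()
```

Scanning opcodes instead of calling `pickle.loads` is the point of the design: the payload never runs. The sketch is deliberately naive, so treat it as a teaching aid rather than a scanner.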

ML Model Management benefits organizations by providing a single place to manage ALL software binaries, bringing DevOps best practices to ML development, and allowing organizations to ensure the integrity and security of ML models, all while leveraging a solution they already have in place.

Let’s explore how JFrog’s ML Model Management works with a fun example you can try for yourself.

Use your existing JFrog Cloud instance or start a trial to follow along.

Machine learning in action: detecting an imposter

One of the most legendary JFrog traditions is the end-of-year holiday party. All the Frogs at each global swamp (aka all JFrog employees in every office location around the world) gather to celebrate a year of hard work and accomplishment. Last year’s event was epic, but something strange happened… the food disappeared too fast, and the venue said we exceeded our headcount.

We asked the venue to check the security cameras so we could understand what happened and learn for future events. After reviewing the footage, something didn’t seem right. Did we have an imposter at our party?

It was hard to be sure! Luckily, I had just been working with our data science team to integrate their work into JFrog’s SDLC (Software Development Lifecycle) and I knew they had experience working with machine learning models.

Setting up my environment

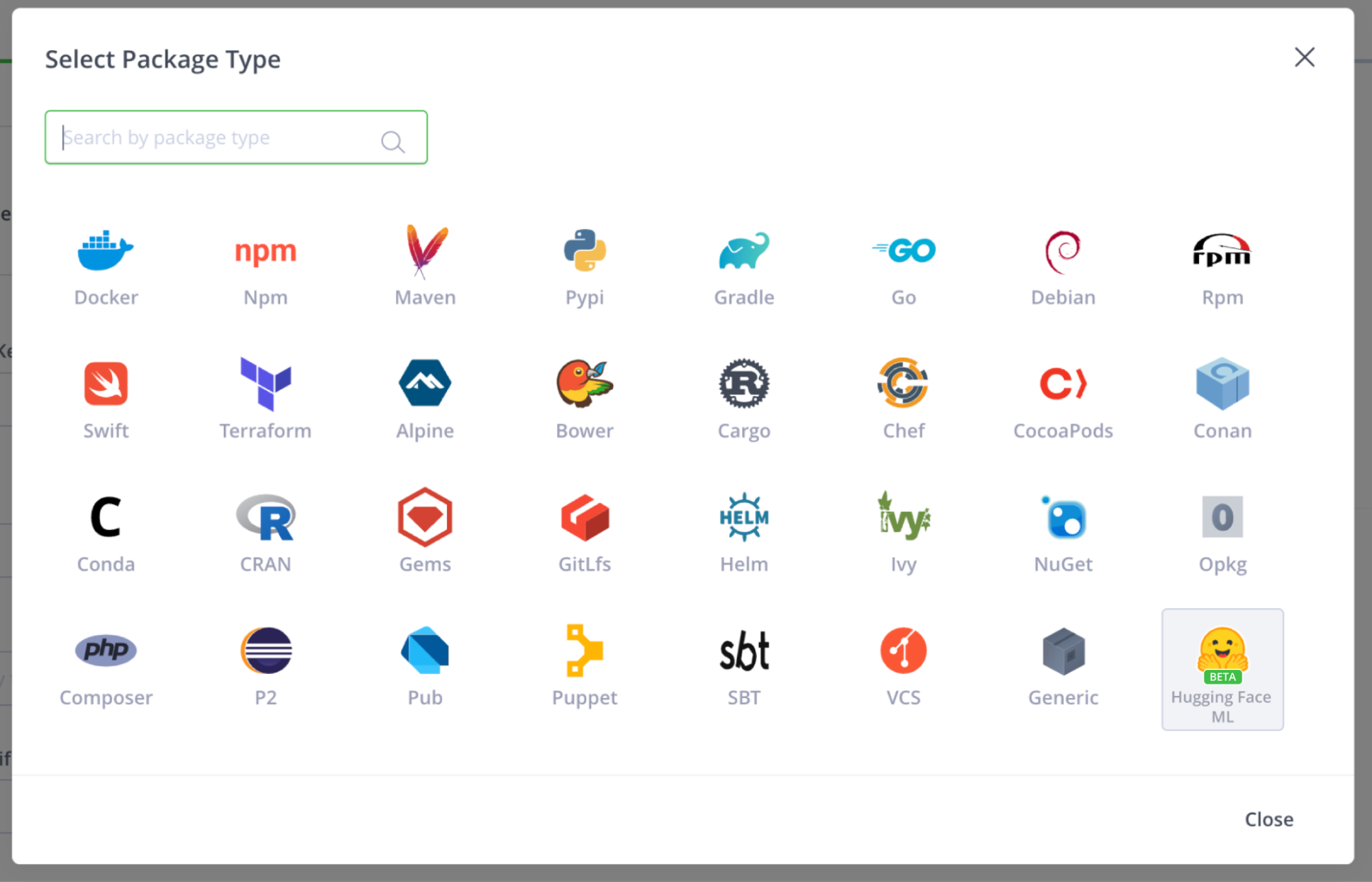

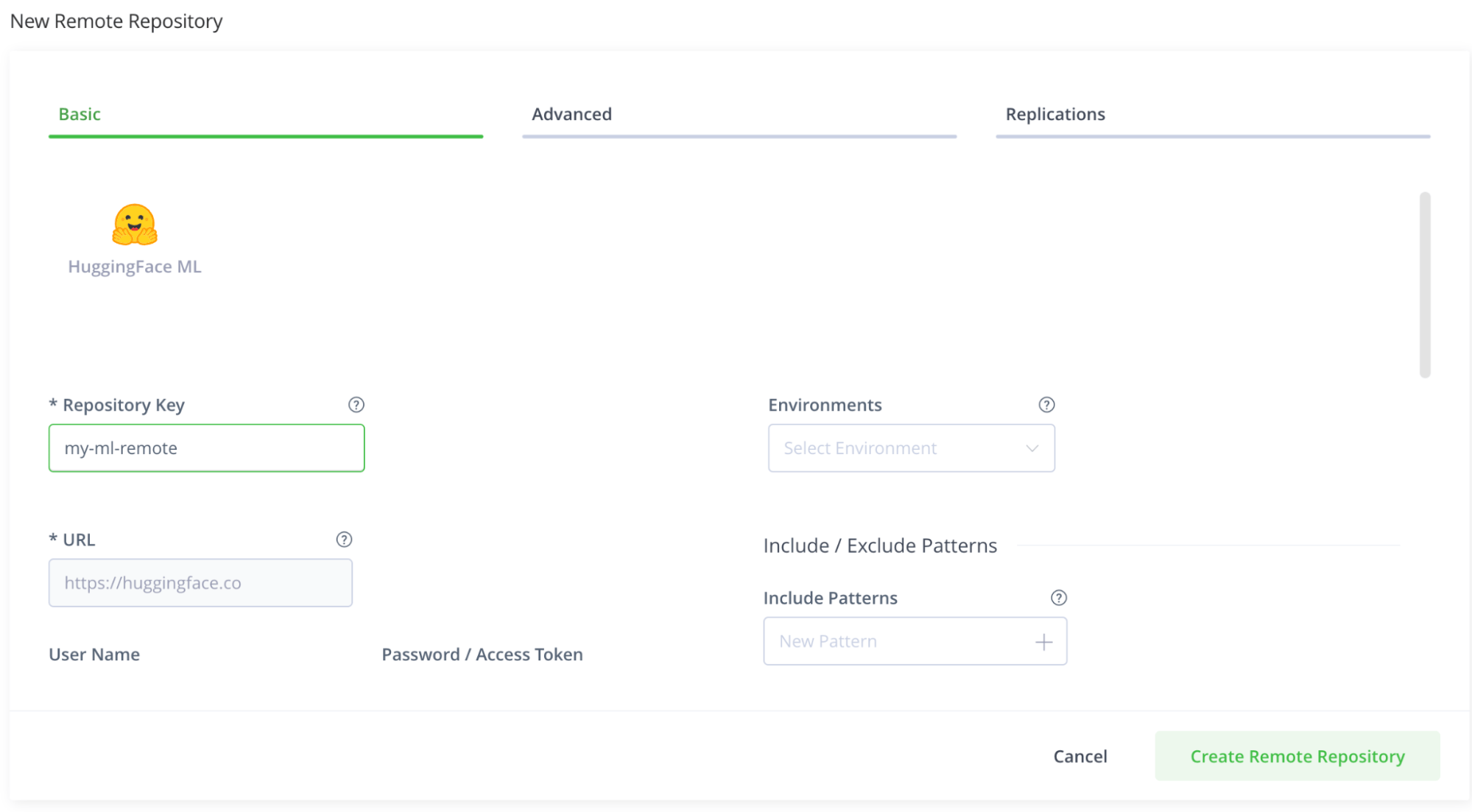

First, I created a remote repository for my open source Python packages and called it "my-python-remote". Then I created a Hugging Face ML model remote repository named "my-ml-remote" to make managing my work easy. Following JFrog's company procedures, I connected these repositories to the Xray policies that enforce our approved licensing.
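The repositories above can be created in the JFrog UI. For teams that prefer automation, the same setup could be scripted against Artifactory's REST API. The sketch below only builds `PUT /artifactory/api/repositories/{repoKey}` requests and does not send them; the host, the token, the `huggingfaceml` package type value, and the upstream URLs are assumptions to verify against your instance's documentation.

```python
import json
import urllib.request


def remote_repo_request(base_url: str, token: str, key: str,
                        package_type: str, upstream_url: str) -> urllib.request.Request:
    """Build (but do not send) a Create Repository request for a remote repository."""
    payload = {
        "key": key,
        "rclass": "remote",           # proxies an external registry
        "packageType": package_type,  # assumed values: "pypi", "huggingfaceml"
        "url": upstream_url,          # the registry being proxied (assumed URLs below)
    }
    return urllib.request.Request(
        url=f"{base_url}/artifactory/api/repositories/{key}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="PUT",
    )


# Hypothetical instance and token.
BASE_URL = "https://example.jfrog.io"
TOKEN = "my-access-token"
requests_to_send = [
    remote_repo_request(BASE_URL, TOKEN, "my-python-remote", "pypi", "https://files.pythonhosted.org"),
    remote_repo_request(BASE_URL, TOKEN, "my-ml-remote", "huggingfaceml", "https://huggingface.co"),
]
# To actually create them, pass each request to urllib.request.urlopen().
```

Keeping repository creation in code makes the setup repeatable across instances; connecting the repositories to Xray policies is a separate step that this sketch does not cover.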

Then, I opened a Jupyter Notebook and started typing. Using JFrog’s Set Me Up, I created the environment variables required to connect the Hugging Face client and pip to Artifactory. Having one tool to manage all my ML-oriented packages always makes development much simpler.
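Set Me Up generates the exact values for your instance. As a rough sketch, assuming the common conventions (`HF_ENDPOINT` and `HF_TOKEN`, which `huggingface_hub` reads, plus pip's `PIP_INDEX_URL`) and Artifactory's usual `api/huggingfaceml` and `api/pypi` paths, the notebook setup might look like this. The host, user, and token are hypothetical.

```python
import os
from urllib.parse import quote


def artifactory_env(host: str, user: str, token: str, hf_repo: str, pypi_repo: str) -> dict:
    """Build environment variables that route Hugging Face and pip traffic through Artifactory."""
    base = f"https://{host}/artifactory"
    # Credentials embedded in a URL must be percent-encoded (e.g. "@" in an email user name).
    creds = f"{quote(user, safe='')}:{quote(token, safe='')}"
    return {
        "HF_ENDPOINT": f"{base}/api/huggingfaceml/{hf_repo}",  # assumed path format
        "HF_TOKEN": token,
        "PIP_INDEX_URL": f"https://{creds}@{host}/artifactory/api/pypi/{pypi_repo}/simple",
    }


# Hypothetical instance and credentials; copy the real values from Set Me Up.
env = artifactory_env("example.jfrog.io", "frog@example.com", "my-access-token",
                      hf_repo="my-ml-remote", pypi_repo="my-python-remote")
# huggingface_hub reads HF_ENDPOINT when it is first imported, so set it before importing.
# pip run from this notebook (e.g. %pip install) inherits PIP_INDEX_URL from the environment.
os.environ.update(env)
```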

Starting to code

With my environment set up, I could then start coding. I decided to try using a Sentiment Analysis algorithm first – I had heard many good things about a model called “bertweet-base-sentiment-analysis” from a friend who had experimented with it before.

I wrote the following code to start using the model:

```python
from huggingface_hub import snapshot_download
from huggingface_hub.utils import HfHubHTTPError

try:
    # etag_timeout (seconds) is raised far above the default so the metadata request doesn't time out
    snapshot_download(repo_id="finiteautomata/bertweet-base-sentiment-analysis", etag_timeout=1500000000)
except HfHubHTTPError as e:
    # This demo treats any HTTP error from Artifactory as an Xray policy block
    print("\n\n\U0001F6A8\U0001F6A8\U0001F6A8\U0001F6A8 Xray blocked model download on 'not approved license' policy.\U0001F6A8\U0001F6A8\U0001F6A8\U0001F6A8\n\n")
```

But when I ran it, this message popped up:

Apparently, the model has no license definition, and JFrog policy forbids using unlicensed assets. I needed a new idea!
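Xray evaluates this on the Artifactory side, so the notebook needs no extra code. To make the rule concrete, here is a toy version of the decision, assuming a hypothetical allow-list of SPDX-style license IDs; it illustrates the policy logic and is not Xray's implementation.

```python
from typing import Optional

# Hypothetical allow-list; real policies are configured in Xray, not in code.
APPROVED_LICENSES = {"apache-2.0", "mit", "bsd-3-clause"}


def license_decision(license_id: Optional[str], approved: set) -> str:
    """Return "allow" or a "block: ..." reason for a model's declared license."""
    if not license_id:
        # No license metadata at all: treated as unlicensed, so it is blocked
        return "block: no license declared"
    if license_id.lower() not in approved:
        return f"block: license '{license_id}' is not approved"
    return "allow"


print(license_decision(None, APPROVED_LICENSES))          # block: no license declared
print(license_decision("Apache-2.0", APPROVED_LICENSES))  # allow
```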

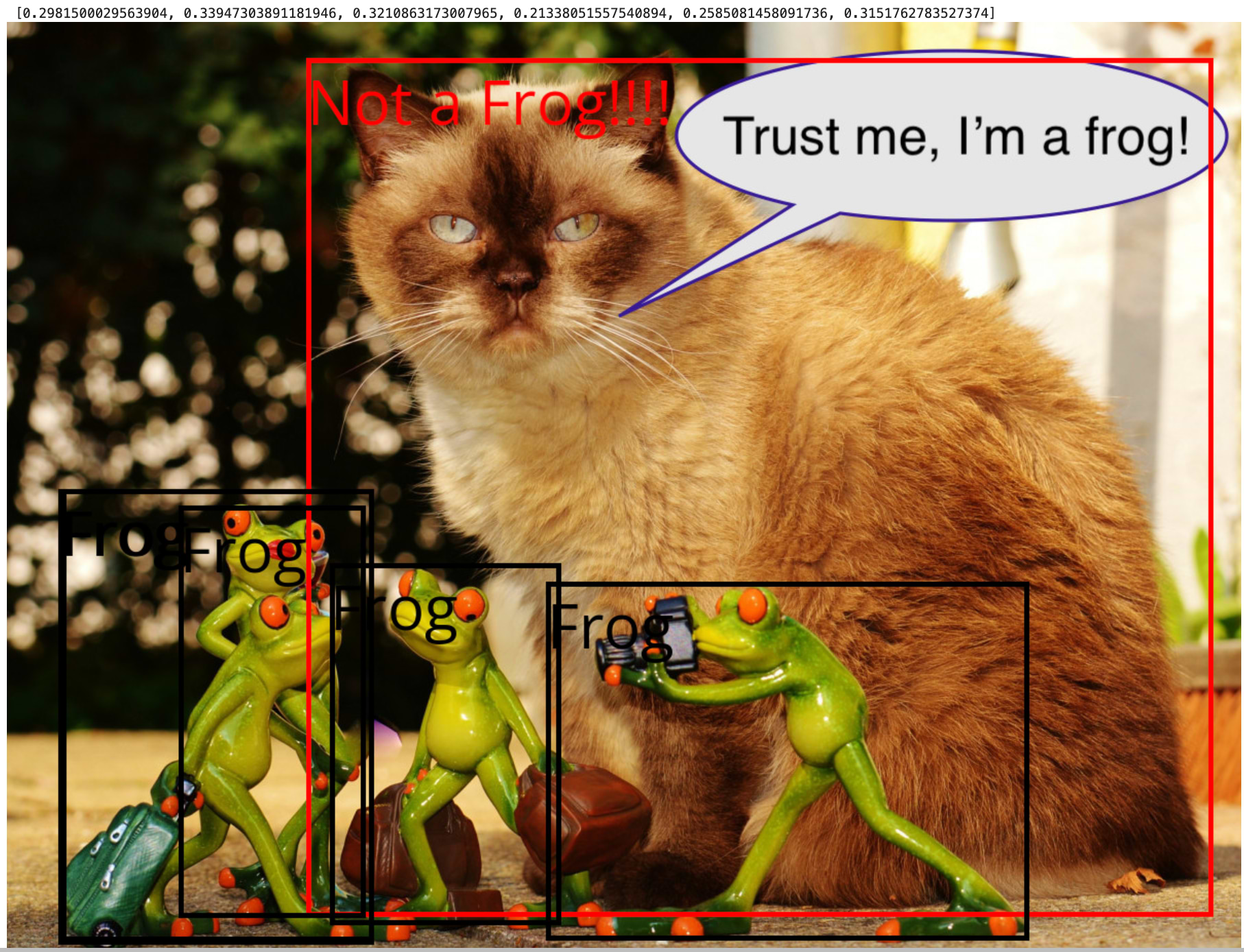

I remembered reading about an interesting image processing technology called "zero-shot-object-detection". After doing some research, I found an article and a reference to a recommended model, which worked great and had an approved license. With the assistance of the model's examples page, I wrote some code to use the model and identify frogs.

I started by loading the model:

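```python
from transformers import AutoModelForZeroShotObjectDetection, AutoProcessor, pipeline

checkpoint = "google/owlvit-base-patch32"
# High-level pipeline: handy for quick experiments
detector = pipeline(model=checkpoint, task="zero-shot-object-detection")
# Lower-level model and processor: used below to post-process and draw the detections
model = AutoModelForZeroShotObjectDetection.from_pretrained(checkpoint)
processor = AutoProcessor.from_pretrained(checkpoint)
```

Then, I added some more code to identify the objects and draw a marking square around them with the needed classification (the full, usable code sample can be found here):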

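```python
import torch
from PIL import Image, ImageDraw

im = Image.open("party.jpg")  # hypothetical file name: a frame from the security footage
text_queries = ["Frog", "Cat"]
inputs = processor(text=text_queries, images=im, return_tensors="pt")
draw = ImageDraw.Draw(im)

with torch.no_grad():
    outputs = model(**inputs)

# PIL reports (width, height); post-processing expects (height, width)
target_sizes = torch.tensor([im.size[::-1]])
results = processor.post_process_object_detection(outputs, threshold=0.2, target_sizes=target_sizes)[0]

# Draw a labeled box around each detection that passed the threshold
for box, score, label in zip(results["boxes"], results["scores"], results["labels"]):
    xmin, ymin, xmax, ymax = box.tolist()
    draw.rectangle((xmin, ymin, xmax, ymax), outline="red", width=1)
    draw.text((xmin, ymin), f"{text_queries[label]}: {round(score.item(), 2)}", fill="white")
```

After a short wait for the model to load and the code to run (these models can be quite big), we found our imposter!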
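The `threshold=0.2` and `target_sizes` arguments in the detection snippet are easier to reason about with the post-processing spelled out. This pure-Python sketch mirrors what OWL-ViT's post-processing is generally understood to do: take each predicted box's best-matching text query, turn its logit into a score with a sigmoid, drop scores at or below the threshold, and scale normalized center-format boxes to pixel corners. It is a simplified illustration, not the `transformers` source, and the toy numbers are made up.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def post_process(logits, pred_boxes, target_size, threshold):
    """logits: one list of raw scores (one per text query) for each predicted box.
    pred_boxes: (cx, cy, w, h) per box, normalized to [0, 1].
    target_size: (height, width) in pixels, the same order as target_sizes above."""
    height, width = target_size
    detections = []
    for query_logits, (cx, cy, w, h) in zip(logits, pred_boxes):
        # Best-matching text query for this box
        label = max(range(len(query_logits)), key=lambda i: query_logits[i])
        score = sigmoid(query_logits[label])
        if score <= threshold:
            continue
        # Center format -> corner format, scaled to pixels
        box = ((cx - w / 2) * width, (cy - h / 2) * height,
               (cx + w / 2) * width, (cy + h / 2) * height)
        detections.append({"label": label, "score": score, "box": box})
    return detections


text_queries = ["Frog", "Cat"]
logits = [[2.0, -1.0], [-3.0, -4.0]]
boxes = [(0.5, 0.5, 0.2, 0.4), (0.1, 0.1, 0.1, 0.1)]
for det in post_process(logits, boxes, target_size=(100, 200), threshold=0.2):
    print(text_queries[det["label"]], round(det["score"], 2), [round(v) for v in det["box"]])
# Frog 0.88 [80, 30, 120, 70]
```

The second toy box scores about 0.05 (a sigmoid of -3), so the threshold removes it; raising or lowering `threshold` trades missed frogs against false alarms.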

As you can see from this hypothetical (yet totally plausible) example, setting up JFrog to manage your ML models and integrate with your data scientists' and ML Engineers' workflows is as simple as writing a few lines of code, yet it brings an organization immense benefits in visibility and integrity.

Next steps for using ML Model Management with JFrog

This seamless experience will bring DevOps best practices to ML development, allow organizations to manage all of their software artifacts in one place, and ensure the integrity and security of the ML models they use.

Best of all, JFrog customers can start using these capabilities immediately. If you’re not a JFrog customer yet, start your trial here.