Building a DevOps Path in IBM Hybrid Cloud

Sometimes you don’t want to choose between two great places; you want to combine them and get the best of each. That’s the principle behind IBM’s hybrid cloud offering, where a private cloud and a public cloud work together to deliver an ideal blend of availability and security.

But how can you best execute DevOps in this hybrid environment? There are some effective models for this, and JFrog Artifactory can help bridge those distinct cloud environments so that your applications stage smoothly and safely from code to production.

To get you started, JFrog now provides you with easy ways to install Artifactory to both IBM private and public clouds through convenient Helm charts.

So let’s take a look at how this can work.

Why a hybrid cloud?

For a growing number of enterprises, operating anytime, from anywhere “in the cloud” makes increasing sense. But which cloud?

A public cloud like IBM Cloud, available to anyone through an internet connection, offers immediate infrastructure without the capital investment, letting you pay based on usage and scale on demand.

A private cloud like IBM Cloud Private (ICP), however, is one that you control. It runs on your own on-premises infrastructure with regulated access, so it is less exposed to internet security risks. It may be more appropriate for sensitive or regulated business-critical data that must stay protected behind your firewall.

Luckily, adopting cloud computing isn’t an all-or-nothing decision. A hybrid model blends elements of each, to offer the best of both options. There are many use cases. A common one has a private cloud at the core while using a public cloud when and where it makes sense. Adding public space as needs mount, while keeping sensitive material private, can provide world-class computing in a cost-effective way.

Hybrid cloud DevOps

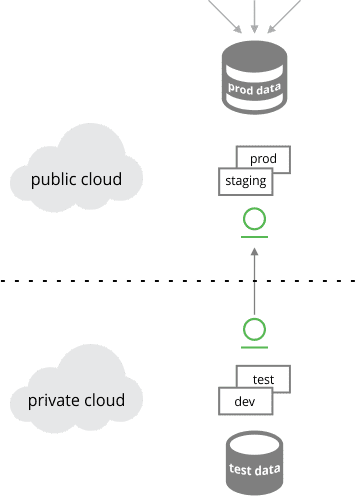

So how can DevOps work in a hybrid cloud? One commonly followed model is to keep everything sensitive in the private cloud, then deliver production components to the public cloud where they will be deployed at scale for wide use.

This means keeping all of your development processes, including your code repository and intermediate artifacts, in the private cloud. Here is where your continuous integration processes run: where the CI server (such as Jenkins) executes and binaries are staged for testing and promotion.

Using a private cloud like ICP, you can design, develop, deploy, and manage your processes on premises, producing containerized cloud applications behind your firewall.

When applications are complete and ready to be used in production, you can then promote them for release into repositories in a public cloud like IBM Cloud. From here, where they are more broadly accessible, your containerized cloud applications can be deployed at scale to the world.

Hybrid cloud configuration: Test in private cloud, promote to production in public cloud

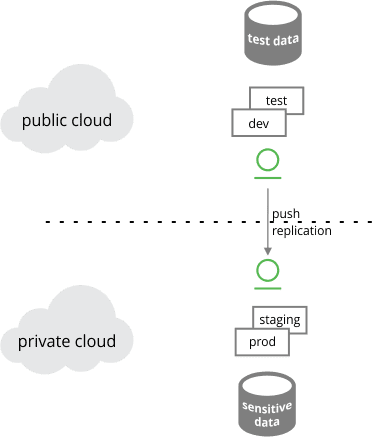

An alternative, but perhaps equally common, model is the opposite: development and testing are performed in the public cloud using generic test data, which provides flexibility in the cost of maintaining development infrastructure. Production applications are then promoted to an on-prem private cloud, where they have permissioned access to sensitive data secured behind a firewall (as may be required for regulatory compliance).

Hybrid cloud configuration: Test in public cloud, promote to production in private cloud

Artifactory Enterprise helps DevOps pipelines hop

In both the private and public portions of your hybrid cloud, Artifactory instances are your trusted source of binaries. Since binaries are what you actually run, they require careful management. In this capacity, Artifactory can act as your Kubernetes Docker registry of safely deployable containers.

In one cloud (such as the ICP private cloud), Artifactory manages all of the artifacts used in and generated by your continuous integration process, including your local and remote dependencies from package managers, the binaries generated, Docker images, and Helm charts.

Here, Artifactory can help give you greater speed and reliability in your builds by caching your dependencies in its own repositories, helping to secure against outages of remote resources or network instability. Artifactory provides built-in support for many popular package managers, such as npm and Maven, making it easy to integrate this feature into your CI process.
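
To make the caching idea concrete, here is a toy model in Python of a cache-through repository. It is only a sketch of the general technique, not Artifactory's implementation; the class name `CachingRemoteRepo`, the `fake_upstream` function, and the package path are all hypothetical and exist only for illustration.

```python
class CachingRemoteRepo:
    """Toy cache-through proxy: fetch from upstream once, then serve locally."""

    def __init__(self, fetch_upstream):
        # fetch_upstream is any callable taking a path and returning bytes;
        # it may raise if the upstream registry is unreachable.
        self._fetch_upstream = fetch_upstream
        self._cache = {}

    def get(self, path):
        # Serve from the local cache when possible, so a later upstream
        # outage does not affect artifacts that were already resolved.
        if path in self._cache:
            return self._cache[path]
        data = self._fetch_upstream(path)
        self._cache[path] = data
        return data


# Simulated upstream registry (hypothetical) that can be switched "off".
state = {"up": True}
upstream_calls = []


def fake_upstream(path):
    upstream_calls.append(path)
    if not state["up"]:
        raise ConnectionError("upstream registry unreachable")
    return ("contents of " + path).encode("utf-8")


repo = CachingRemoteRepo(fake_upstream)
package = "left-pad/-/left-pad-1.3.0.tgz"

first = repo.get(package)    # goes to upstream and is cached
state["up"] = False          # simulate a network outage
second = repo.get(package)   # still served, from the cache
```

In this model a build that needs an already-cached dependency keeps working during the outage, while a request for a package that was never cached would still fail.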

Artifactory provides visibility into your builds through the metadata it attaches to each artifact. In this way, you can trace your container images back to their source, so you always know what’s in your builds.

Artifactory repositories support the staging and promotion of binaries through your CI/CD process in the private cloud, helping you to advance your builds through testing and validation to production-quality release.
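
As a rough illustration of what a promotion step in a pipeline might look like, the sketch below prepares a request for Artifactory's build promotion REST endpoint without sending it. The host name, build name, and repository keys are hypothetical placeholders, and authentication is left out; check the endpoint and field names against the REST API reference for your Artifactory version before relying on them.

```python
import json
from urllib.parse import quote
from urllib.request import Request


def build_promotion_request(base_url, build_name, build_number,
                            source_repo, target_repo,
                            status="released", dry_run=True):
    """Prepare (but do not send) a build promotion request."""
    url = "{}/api/build/promote/{}/{}".format(
        base_url.rstrip("/"),
        quote(build_name, safe=""),
        quote(str(build_number), safe=""),
    )
    payload = {
        "status": status,            # label recorded for the promoted build
        "sourceRepo": source_repo,   # where the build's artifacts are staged
        "targetRepo": target_repo,   # where they should end up
        "copy": False,               # move rather than copy the artifacts
        "dependencies": False,       # leave the build's dependencies in place
        "dryRun": dry_run,           # default to a dry run in this sketch
    }
    return Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# All values below are hypothetical placeholders.
req = build_promotion_request(
    "https://artifactory.example.internal/artifactory",
    "my-app", 42,
    "docker-staging-local", "docker-release-local",
)
print(req.get_method(), req.full_url)
```

A CI job could send this with `urllib.request.urlopen(req)` after adding credentials, typically once the build has passed its tests in the staging repository.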

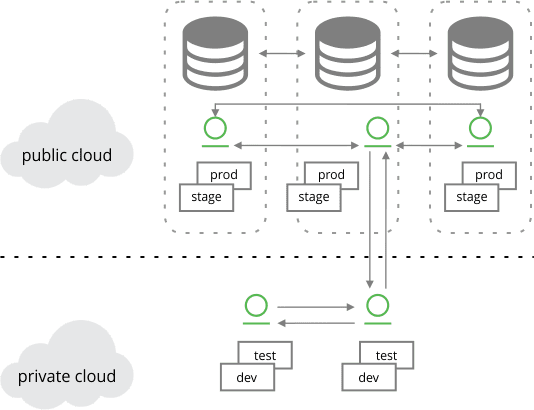

Once those container images are fully validated for release, you can promote them, along with their Helm charts and any other supporting artifacts, to an instance of Artifactory in the second cloud (such as the public IBM Cloud), which acts as your Kubernetes registry. From here, your K8s orchestrator (such as IBM Cloud Kubernetes Service) can pull these artifacts to deliver them to clusters.

Artifactory Enterprise also provides high availability through multiple instances of Artifactory synchronized with the same deployable images. This can provide redundancy to guard against outages, or regional proximity to keep delivery fast across a global network.

Hybrid cloud configuration: High availability test to production

Charting the path

Artifactory enables you to have the right packages in the right cloud for your use case. Moreover, Artifactory’s replication facilities support locality in a variety of network topologies and enable the scheduling strategies you need. Using Artifactory for replication is covered in depth in this white paper.

In subsequent blog posts, we’ll explain how to install Artifactory on both IBM Cloud and IBM Cloud Private, so you can put these features to use.