The 3 Kubernetes Essentials: Cluster, Pipeline, and Registry

This blog post is co-authored by Kit Merker of JFrog and Raziel Tabib of Codefresh, and is co-posted on the Codefresh blog.

OK, so you’re adopting Kubernetes. Good choice. There is a dizzying array of good options to get a Kubernetes cluster up and running. But once it’s there, how do you actually get your code into the cluster in a repeatable, reliable way? And how do you make sure that only the right version of your app makes it to production?

This blog post describes the 3 essential components that combine to let you run your application with Kubernetes.

- Kubernetes Cluster – this is the orchestration infrastructure where your containerized application runs.

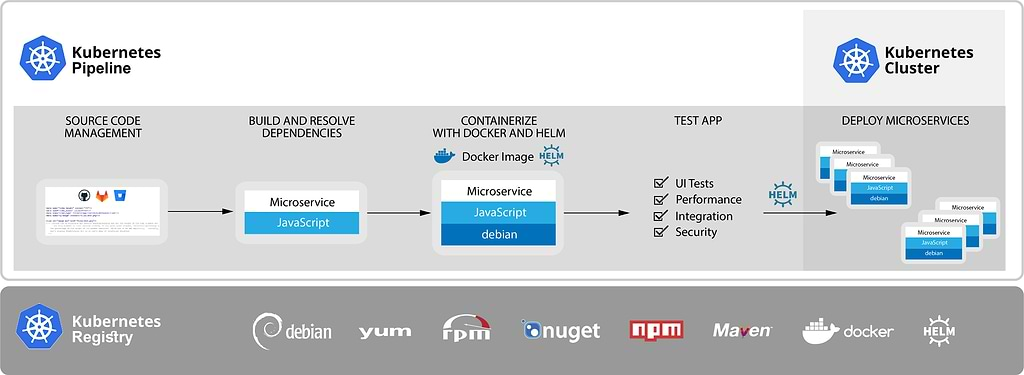

- Kubernetes Pipeline – this is the CI/CD pipeline that runs in Kubernetes and automates the process, starting from the source code and external packages to deploying your application in a Kubernetes cluster.

- Kubernetes Registry – this is the artifact repository that stores and tracks all your build components that are processed by the pipeline, including both local and remote dependencies.

Note that beyond these 3 essentials, you can add other components, such as monitoring and service discovery, to this infrastructure according to your needs.

1. Kubernetes Cluster

The cluster is the piece that gets the most coverage, so we will not dwell on it too long. First, decide whether you want to manage it yourself. Then decide whether you want it from a cloud provider. Then decide how big you want it. Fortunately, Kubernetes clusters are designed to behave pretty much the same everywhere, sometimes with provider-specific flavors or optimizations thrown in.

Check out the list of 32 (and growing) certified cluster providers from CNCF.

2. Kubernetes Pipeline

Kubernetes pipelines automate the process of building, testing and deploying containerized applications to Kubernetes.

Containerized applications are composed of multiple microservices. To minimize potential risks that may arise when making changes, every proposed change, whether to a single microservice or more, is:

- Tested in the full application context before being deployed to production.

- Gradually exposed to end users once deployed to production, for example through a canary deployment.
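As a rough illustration of gradual exposure, the sketch below computes a doubling traffic schedule for a canary and stops at the first failed health check. The function names, the starting percentage, and the doubling factor are assumptions made for this example, not part of any particular pipeline product.

```python
from typing import Callable, List, Tuple


def canary_steps(start_percent: int = 5, factor: int = 2) -> List[int]:
    """Return traffic percentages for a simple multiplicative canary rollout."""
    if not 0 < start_percent <= 100:
        raise ValueError("start_percent must be in (0, 100]")
    if factor < 2:
        raise ValueError("factor must be at least 2")
    steps = []
    percent = start_percent
    while percent < 100:
        steps.append(percent)
        percent *= factor
    steps.append(100)  # the final step always sends all traffic to the new version
    return steps


def rollout(steps: List[int], is_healthy: Callable[[int], bool]) -> Tuple[int, bool]:
    """Walk through the steps; roll back to 0% at the first unhealthy step.

    is_healthy is a hypothetical check supplied by the caller, for example
    one that compares error rates at the given traffic percentage.
    """
    for percent in steps:
        if not is_healthy(percent):
            return 0, False
    return 100, True


if __name__ == "__main__":
    plan = canary_steps()
    print(plan)
    print(rollout(plan, lambda percent: True))
```

In a real cluster the percentages would be applied through whatever traffic-splitting mechanism you use; the point here is only that exposure grows in steps and any failed check reverts the change.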

A staging/integration environment where every change runs against the full application has trouble scaling and frequently becomes a bottleneck. To solve this, Kubernetes pipelines must be ‘application aware’.

Application Aware

Kubernetes pipelines are ‘application aware’, meaning they are natively capable of dynamically provisioning a full containerized application stack (generally composed of multiple services, deployments, replica sets, secrets, ConfigMaps, etc.). Every change to the application context, whether to code, a base layer, an image, or configuration, will in turn trigger a pipeline.
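One way to picture the "every change triggers a pipeline" idea is a lookup from a changed artifact to the services built from it. The sketch below is a minimal illustration; the service names, the artifact identifiers, and the `SERVICE_INPUTS` table are all hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical application: each service lists the artifacts it is built from
# (source repository, base image, and configuration).
SERVICE_INPUTS: Dict[str, Set[str]] = {
    "frontend": {"repo:frontend", "image:node-base", "config:frontend"},
    "orders": {"repo:orders", "image:python-base", "config:orders"},
    "billing": {"repo:billing", "image:python-base", "config:billing"},
}


def services_to_rebuild(
    changed_artifact: str,
    service_inputs: Dict[str, Set[str]] = SERVICE_INPUTS,
) -> List[str]:
    """Return the services whose pipeline should run for the changed artifact."""
    return sorted(
        name for name, inputs in service_inputs.items() if changed_artifact in inputs
    )


if __name__ == "__main__":
    # A base-image change fans out to every service built on that image.
    print(services_to_rebuild("image:python-base"))
    # A code change only affects the service that owns the repository.
    print(services_to_rebuild("repo:frontend"))
```

The design choice worth noting is that the trigger is keyed on the artifact, not on a git commit alone, so a base-layer or configuration change is treated the same way as a code change.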

To summarize, you can:

- Dynamically provision the full application and comprehensively test any change.

- Integrate with the Kubernetes registry, to both:

  - Store all application artifacts and enrich them with metadata.

  - Set triggers that will invoke the pipeline upon change to any artifact.

- Run Kubernetes native pipelines on Kubernetes clusters, just like your application.

- Use the pipelines' native integration with Kubernetes entities and networking to facilitate advanced deployments like canary, blue/green, etc.

3. Kubernetes Registry

To ensure that the flow of your application from code to cluster is smooth, reliable, and safe, you need to have a clear view of exactly what you are building and releasing. You might think of your application as all about the code you write, but in reality there is a whole stack of code and configurations that come from others, both in source and binary form, that will travel with your application into your Kubernetes cluster.

You can think of a Docker registry as a minimal version of a Kubernetes registry. However, there are a few critical pieces that can’t be stored individually in a Docker registry that are part of your Kubernetes application, such as: OS packages, language packages, application packages, and Kubernetes manifests.

Consider the operating system and related packages. These are sourced from a distro provider (like Canonical Ubuntu or SUSE), or from Microsoft in the case of Windows containers, and you may have OS-level packages that need to be installed to make your application work. The beauty of a Kubernetes cluster is that it can run applications that are written in different languages with different operating system versions side by side without any operational difference.

Once you have all the OS and language dependencies figured out (along with open source frameworks), you can containerize your application using Docker build and create Docker images. These are stored in a Kubernetes Docker registry, and can be pulled directly by Kubernetes. On top of this, you have to store and manage Kubernetes YAML files, which can be packaged as Helm charts. To trust the software you’re deploying into Kubernetes, you need a Kubernetes registry that understands the structure of all these dependencies, their formats and what’s in them.
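To make the "whole stack" idea concrete, the sketch below models a release as a list of artifacts of different kinds and flags any that are not pinned to a specific version. The `Artifact` type, the kind labels, and the sample names and versions are assumptions for illustration only; a real registry tracks far richer metadata.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Artifact:
    kind: str     # e.g. "os-package", "language-package", "docker-image", "helm-chart"
    name: str
    version: str  # an empty string or "latest" is treated as unpinned


def unpinned(artifacts: List[Artifact]) -> List[str]:
    """Return 'kind:name' for every artifact without a fixed version."""
    return [f"{a.kind}:{a.name}" for a in artifacts if a.version in ("", "latest")]


if __name__ == "__main__":
    # Hypothetical release contents; names and versions are made up.
    release = [
        Artifact("os-package", "openssl", "1.1.1"),
        Artifact("language-package", "requests", "2.31.0"),
        Artifact("docker-image", "orders", "latest"),
        Artifact("helm-chart", "orders", "0.3.0"),
    ]
    print(unpinned(release))
```

A check like this is one small example of what becomes possible once every layer of the application, not just the Docker image, is recorded in one place.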

From Code to Cluster

JFrog Artifactory is the Kubernetes registry that gives you visibility and universal support for all these different technologies and dependencies. Try out the Artifactory free trial.

Codefresh offers an ‘application-aware’ Kubernetes pipeline that can run on your Kubernetes clusters and streamline the testing and deployment of your k8s apps.