JFrog & Qwak: Accelerating Models Into Production – The DevOps Way

We are collectively thrilled to share some exciting news: Qwak will be joining the JFrog family! Nearly four years ago, Qwak was founded with the vision to empower Machine Learning (ML) engineers to drive real impact with their ML-based products and achieve meaningful business results. Our mission has always been to accelerate, scale, and secure the delivery of ML applications.

This new chapter with JFrog will mark a significant milestone in the Qwak journey, and we couldn’t be more excited to move forward together. Building ML models is complex and time-intensive, and many data scientists struggle to turn concepts into production-ready models, let alone get them to production themselves. Bridging the gap between MLOps and DevSecOps workflows is critical to streamlining this process.

What is an ML Platform?

Building machine learning models is a complex and multifaceted task. It involves several critical stages, including model development, hyperparameter configuration, and the execution of numerous “experiments” to optimize performance. Once a satisfactory model is obtained, the next step is to create a deployable artifact, which can be integrated into production systems for real-world applications. This process requires not only technical expertise, but also a deep understanding of the problem domain, careful planning, and rigorous testing to ensure the model’s reliability and efficiency in a live environment.

This task has become even more complicated with the advent of Generative AI (GenAI) and applications involving Large Language Models (LLMs). Traditional ML models typically focus on specific tasks such as classification, regression, or clustering based on structured or semi-structured data. In contrast, LLMs, like those used in GenAI, are designed to understand and generate human-like text, making them suitable for a wide range of applications such as natural language processing, conversational agents, and automated content creation. The rapid rise of ChatGPT and similar technologies, along with the myriad chatbots and “assistant” products, shows how broad that range has become. The scale of operations and the dynamic nature of interactions in GenAI applications significantly increase the demands on the development and deployment processes, making it essential to have specialized tools and strategies to handle these challenges effectively.

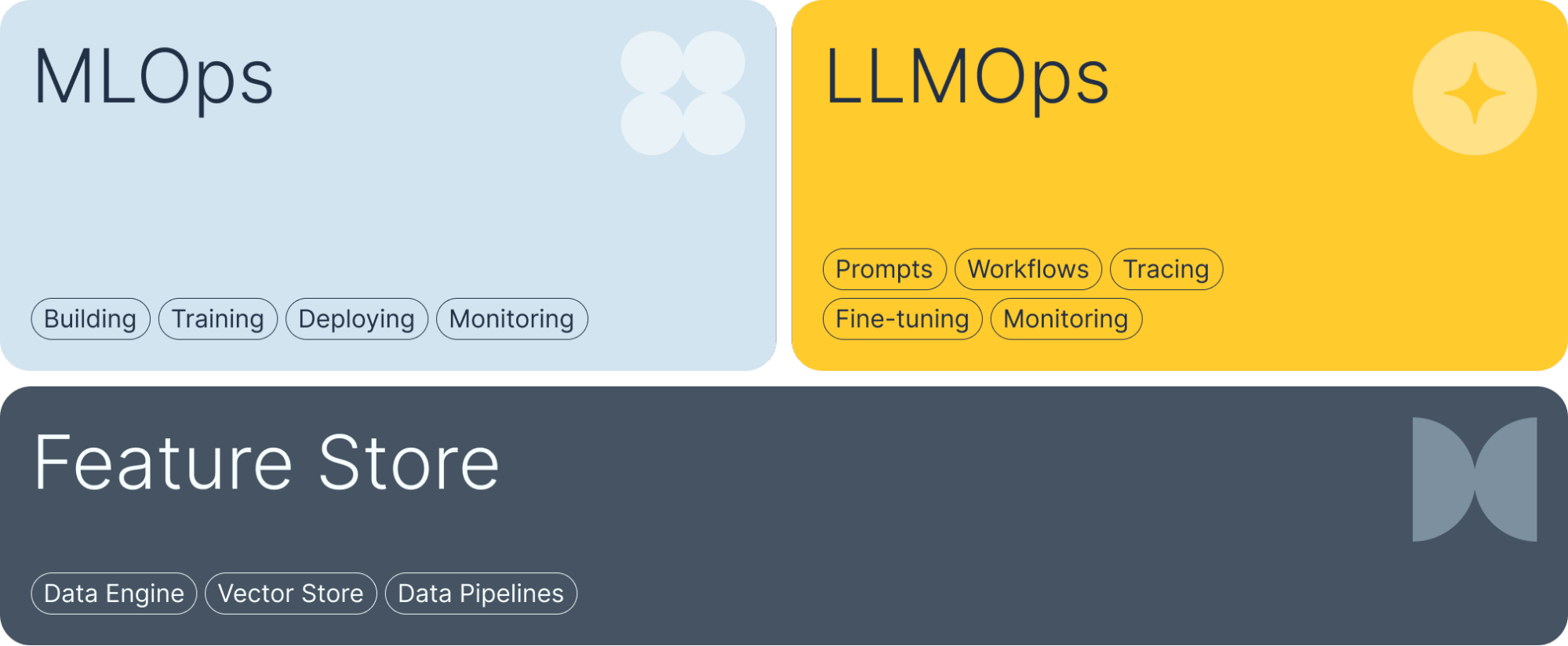

ML Platforms such as Qwak address three critical areas within these challenges to help organizations overcome complexities when delivering machine learning and AI applications:

- MLOps: Comprehensive solutions for building, training, and deploying machine learning models. From experiment management to production deployment, this area covers the entire lifecycle of ML models, ensuring efficiency and scalability.

- LLMOps: Specialized solutions to tackle the unique challenges of large language model-based applications. This includes prompt management, handling complex architectures, and implementing advanced monitoring techniques to ensure optimal performance and reliability.

- Feature Store: A robust data engine that supports the needs of data scientists, including feature pipelines, feature serving, and the ability to create and store vectors. This component ensures that the right data is readily available for model development and deployment.

Why JFrog & Qwak Together

While MLOps platforms make many aspects of data scientists’ lives easier when bringing models to production, there are many areas they may not address on their own. Following our initial integration, and as we work together towards more fully integrated solutions, JFrog and Qwak complement one another to help individuals and companies deliver on the value of AI faster and with greater trust. This boils down to three key areas:

- Accelerated Development: By integrating Qwak’s managed ML platform with JFrog’s DevSecOps tools, development teams will be able to streamline their workflows, reducing the time it takes to get models from concept to production.

- Enhanced Security: JFrog’s comprehensive security suite ensures that ML models are secure at every stage of the lifecycle, from development through deployment. This integration will help identify vulnerabilities and mitigate risks early, across both traditional components and the ML models themselves.

- Improved Collaboration: This integration will foster better collaboration between data scientists, developers, and security teams. By using a common platform, these teams can work together more effectively, leading to higher-quality software releases and less time spent addressing issues across toolsets and departments.

TIP: To get started with JFrog and Qwak solutions right away, please see these step-by-step instructions.

Both ML Engineering/Data Science teams and DevSecOps professionals will recognize key technical features that benefit both sides, driving simplicity and speed:

- Unified Pipeline: A single, cohesive software supply chain pipeline for ML model management and software development ensures smoother transitions between stages, reducing friction and errors.

- Version Control for ML Assets: Both Qwak and JFrog offer robust version control for all model assets and related dependencies, allowing teams to track changes, manage versions, and roll back if necessary – a key capability if any issues are discovered.

- Compliance and Auditability: The integration provides visibility into the security and compliance status of all models, fostering organizational trust in open source machine learning models.

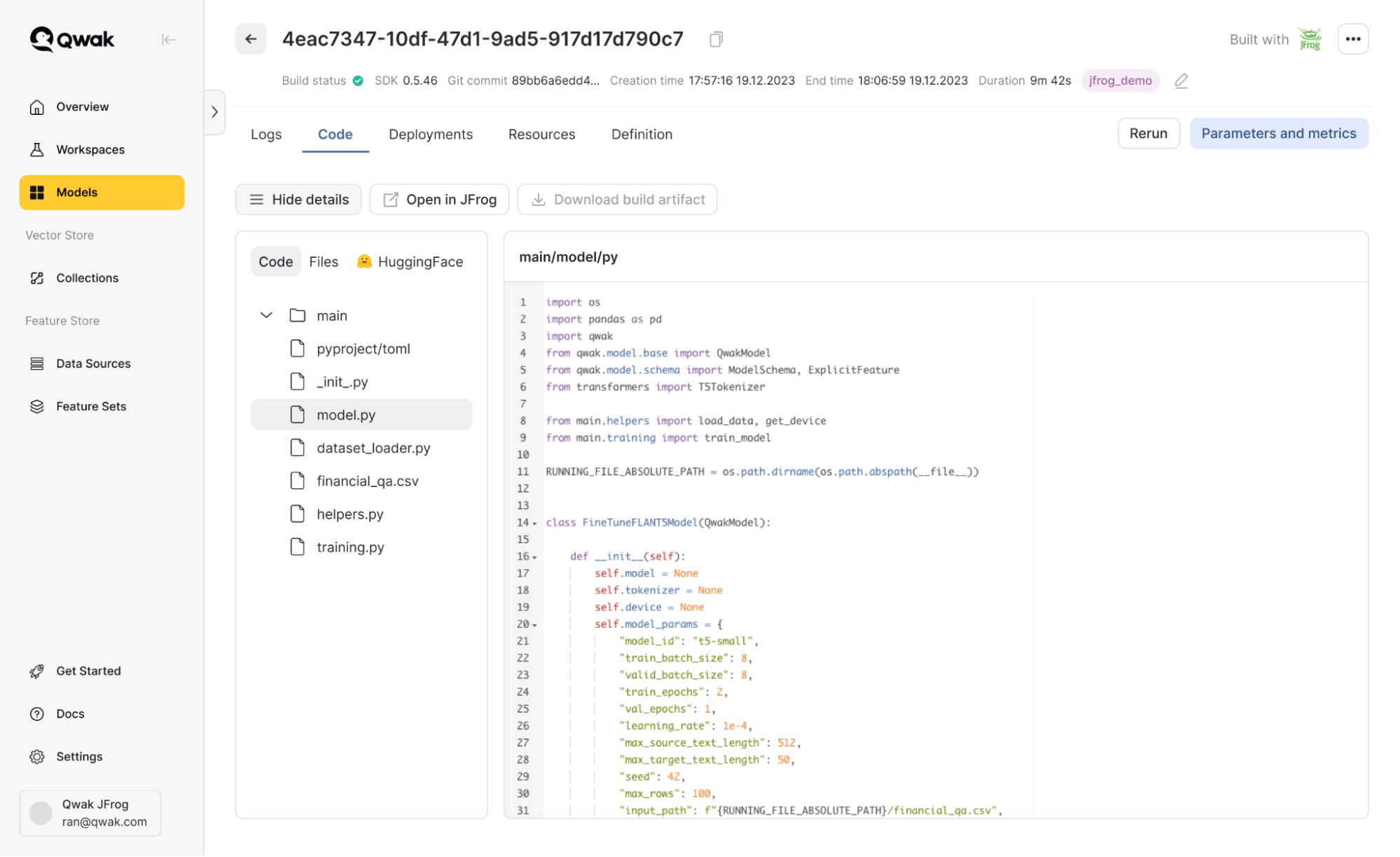

Let’s take an example: say I fine-tuned a Flan-T5 model, which is available via Hugging Face, on the Qwak platform. With the integrated features:

- I downloaded the model directly from the JFrog virtual Hugging Face repository, leveraging JFrog’s caching and compliance capabilities.

- I trained my model on Qwak, utilizing its build system and infrastructure management features.

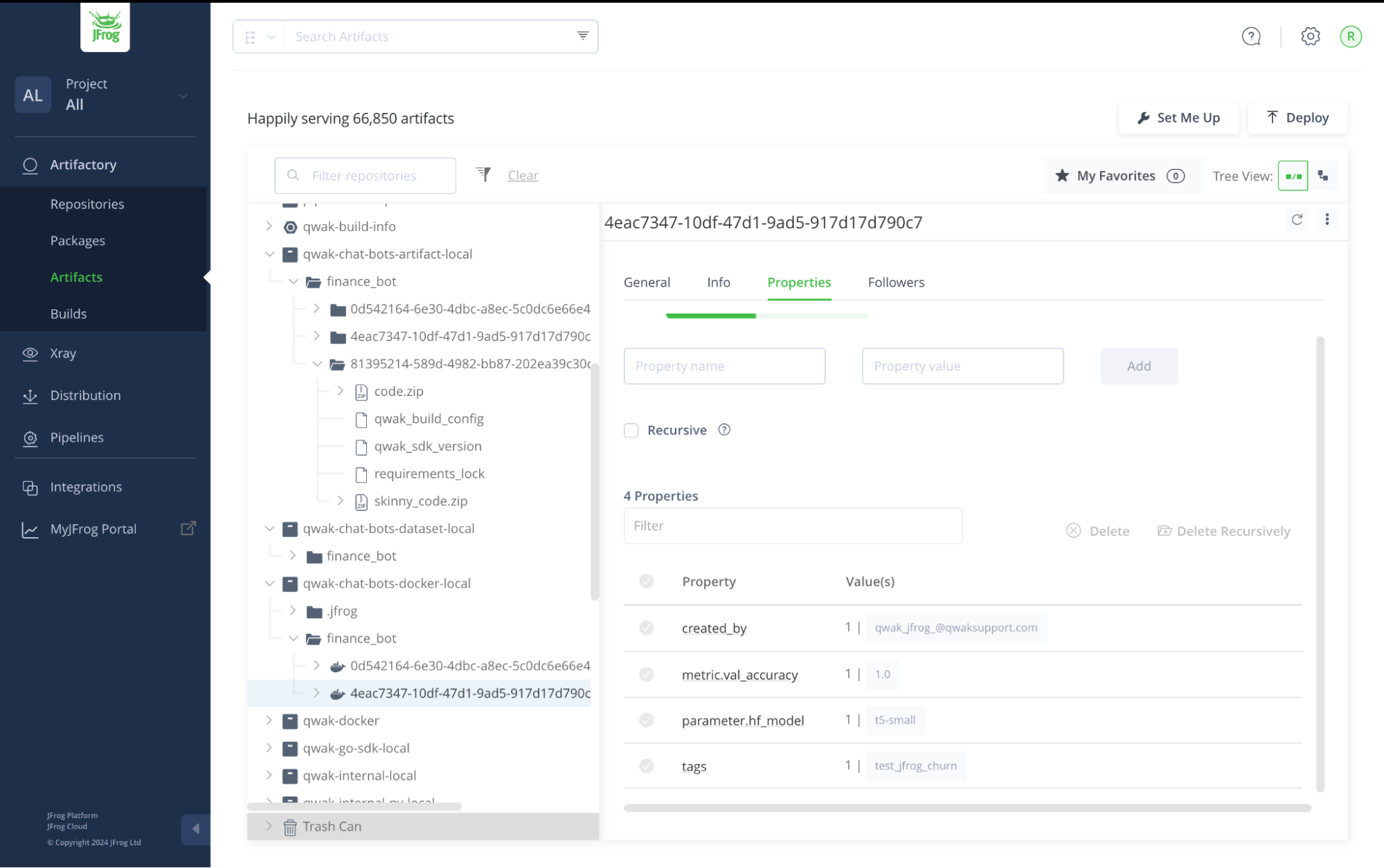

- All artifacts, including the trained model, deployable Docker container, and hyperparameters, were saved in my JFrog account.

Fine-tuning a Flan-T5 model in the Qwak platform

All model artifacts saved in JFrog Artifactory
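To make the first step of this example more concrete, here is a minimal sketch of how a client could be pointed at a Hugging Face repository in Artifactory instead of the public Hub. It assumes the `HF_ENDPOINT` and `HF_TOKEN` environment variables read by the `huggingface_hub` library, and a URL layout of `/artifactory/api/huggingfaceml/<repo>`, which you should verify against your own JFrog instance. The host name, the repository name `hf-virtual`, the token, and the specific model ID `google/flan-t5-small` are all placeholders chosen for illustration, not values from the Qwak integration itself.

```python
import os


def artifactory_hf_endpoint(base_url: str, repo_name: str) -> str:
    """Build the endpoint URL for a Hugging Face repository in Artifactory.

    The path layout is an assumption; confirm it for your instance.
    """
    return f"{base_url.rstrip('/')}/artifactory/api/huggingfaceml/{repo_name}"


def configure_hf_environment(base_url: str, repo_name: str, token: str, env=None):
    """Set the variables that huggingface_hub reads to locate the Hub.

    Pass a plain dict as `env` to inspect the result without touching
    the real process environment.
    """
    env = os.environ if env is None else env
    env["HF_ENDPOINT"] = artifactory_hf_endpoint(base_url, repo_name)
    env["HF_TOKEN"] = token
    return env


def download_model(repo_id: str = "google/flan-t5-small") -> str:
    """Download a model snapshot through the configured endpoint.

    huggingface_hub is imported here, after the environment is set,
    because it reads HF_ENDPOINT when it is first imported.
    """
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id)
```

In practice, you would call `configure_hf_environment(...)` once at the start of the training script and then `download_model()`, so that every model request passes through the Artifactory cache rather than going directly to Hugging Face.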

Moving from MLOps to MLSecOps

During the build process in the above example, Qwak retrieves Hugging Face models through Artifactory. Each model is cached in the remote repository and scanned by JFrog Xray and JFrog Advanced Security, which not only check for vulnerabilities but also examine the licensing of the model. This comprehensive scan ensures that every model meets high security standards. Moreover, any Xray policies and watches configured in JFrog are respected, ensuring consistent policy enforcement and a clean security posture.

This integration will soon extend beyond traditional MLOps by incorporating more advanced security and compliance measures:

- Real-time Analysis of Dependencies: JFrog Xray continuously scans dependencies for vulnerabilities, providing real-time insights into potential security risks.

- Continuous Control and Compliance: JFrog’s tools enforce compliance policies, ensuring all artifacts and models meet industry and regulatory standards. Any policies and watches configured in JFrog are respected, maintaining a consistent security posture.

- Model Curation: Non-compliant components are automatically blocked, ensuring only secure and compliant models are deployed to production.

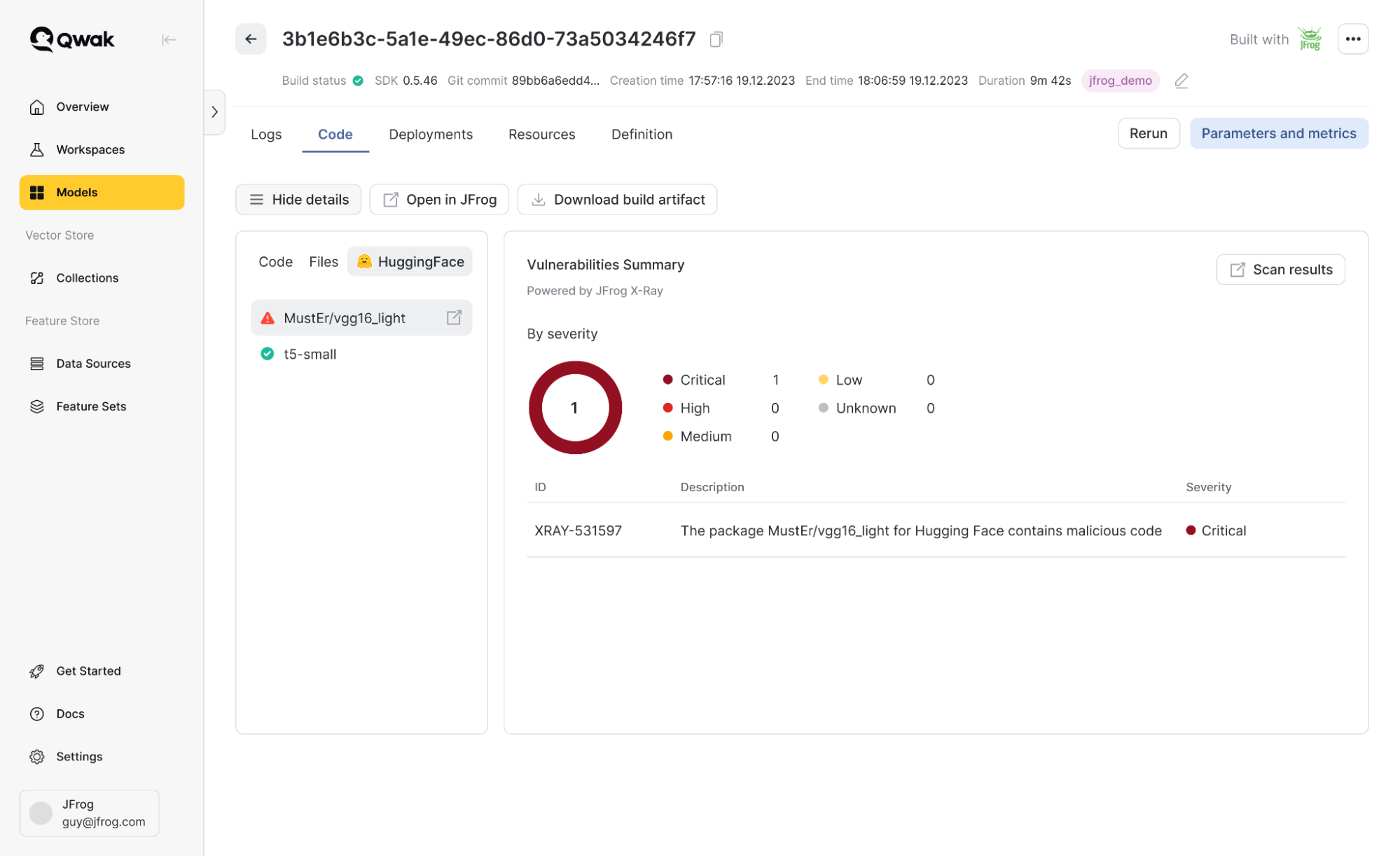

Returning to the example above, let’s consider a scenario where one of my models contains malicious code. JFrog automatically scans my models; if a vulnerability is detected, I can view the details and use my existing JFrog policies to manage model usage. For instance, I could automatically block any model found to have critical vulnerabilities.

Model vulnerabilities detected by JFrog Xray
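The blocking rule in this scenario can be sketched in a few lines. This is only an illustration of the logic, not the JFrog Xray API: real policies and watches are configured in JFrog itself, and the `Severity`, `Finding`, `blocked_models`, and `is_deployable` names below, along with the sample model names and issue IDs, are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Ordered severity levels, so findings can be compared to a threshold."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Finding:
    """One scan result for a model (hypothetical shape, for illustration)."""
    model: str
    issue_id: str
    severity: Severity


def blocked_models(findings, threshold=Severity.CRITICAL):
    """Return the set of models with at least one finding at or above threshold."""
    return {f.model for f in findings if f.severity >= threshold}


def is_deployable(model, findings, threshold=Severity.CRITICAL):
    """A model is deployable only if no finding reaches the threshold."""
    return model not in blocked_models(findings, threshold)


# Sample data: one model with a critical finding, one with a medium finding.
findings = [
    Finding("flan-t5-finetuned", "ISSUE-1", Severity.CRITICAL),
    Finding("sentiment-classifier", "ISSUE-2", Severity.MEDIUM),
]
```

With this sample data, `is_deployable("flan-t5-finetuned", findings)` is false while `is_deployable("sentiment-classifier", findings)` is true. Lowering the threshold to `Severity.MEDIUM` would block both, which mirrors how a stricter policy tightens what reaches production.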

Why We’re “Better Together”

JFrog’s vision is to enable a trusted and seamless software supply chain from developer to device by managing and securing the full lifecycle of software releases. As ML models and AI applications become an integral part of most software products, JFrog and Qwak aim to enhance AI/ML-based software releases, ensuring we stay ahead of market demands.

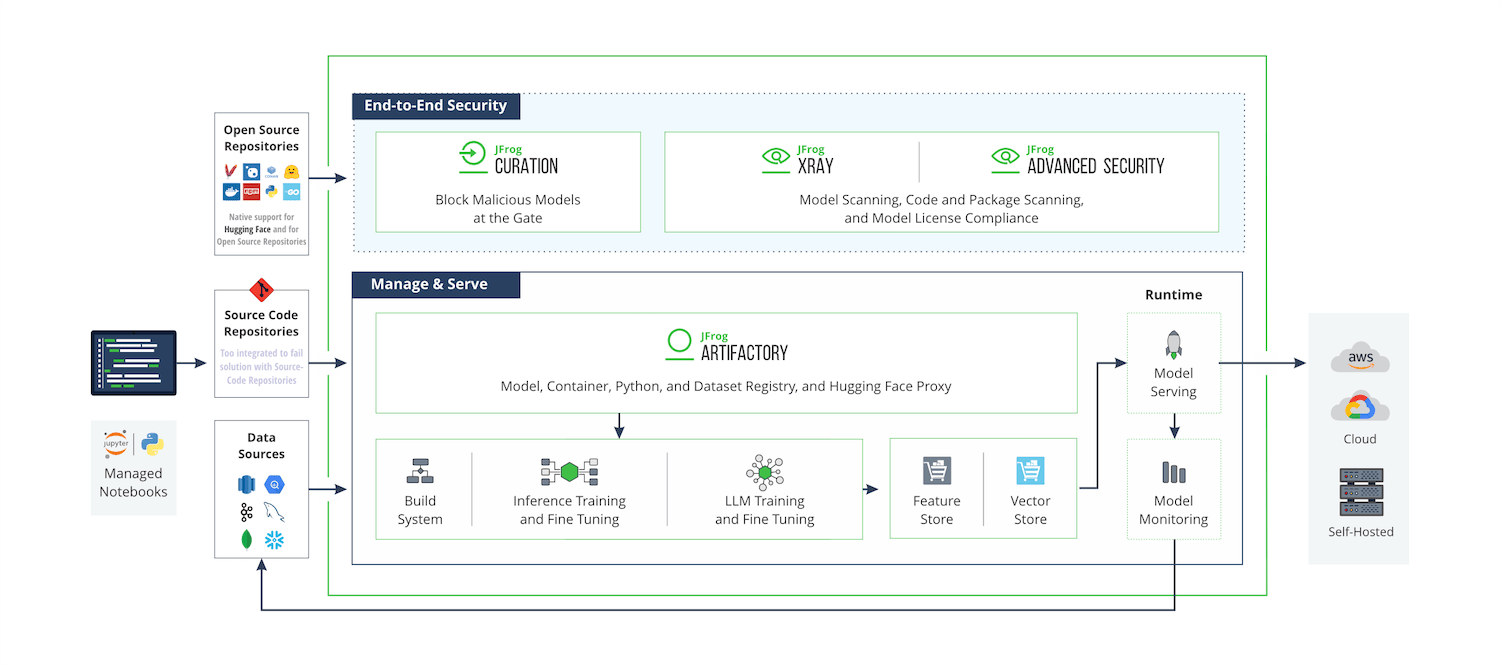

Platform diagram: planned JFrog and Qwak merger technical outcomes

It should be apparent that the only efficient way to streamline getting models into production is a solution that is not just “integrated” but wholly unified, spanning the end-to-end pipeline of ML models, AI components, and traditional software. As a mission-critical piece of customers’ software infrastructure, the JFrog Platform fulfills this vision, with the addition of Qwak’s ML Platform bringing the AI delivery technology required to expand customer solutions across their AI initiatives.

Together, JFrog and Qwak will help you establish governance, transparency, visibility, and security in every facet of the development and deployment lifecycle for ML models. With a “model as a package” approach, we’ll provide a simple, trusted software supply chain for ML and AI-powered applications, making moving models to production a fully automated process that can scale for any organization. From managing dependencies to ensuring compliance and optimizing storage, this integration empowers your organization to embrace machine learning with confidence and efficiency. It also bridges the gap between ML engineers and the rest of the development teams, which matters all the more with the advent of GenAI applications, making collaboration more natural and seamless for an entire organization.

What’s Next?

On July 22, 2024, we hosted a deeper-dive webinar where we explored real-world use cases to enhance your MLOps journey. If you couldn’t attend, don’t worry: you can watch the webinar on demand. As JFrog always notes, may the Frog be with you, now with some ‘duck power’ as well!