Ensure your models flow with the JFrog plugin for MLflow

Mastering Model Chaos: Simplifying Artifact Management with MLflow to Artifactory Integration

Just a few years back, developing AI/ML (machine learning) models was a secluded endeavor, primarily undertaken by small teams of developers and data scientists away from public scrutiny. However, with the surge in GenAI/LLMs, open-source models, and ML development tools, model creation has been significantly democratized, and more developers and organizations are building ML models than ever before. Yet despite this accessibility, challenges persist, from security concerns to version control issues and beyond.

JFrog’s latest open-source plugin for MLflow offers a solution to store and manage your ML model experiments in a single source of truth, while ensuring the secure deployment of ML models into your production environments.

What is MLflow anyway?

If you’re a data scientist or an AI developer, you’re familiar with the iterative nature of model training: adjusting parameters, conducting multiple runs, and striving for the desired results before pushing the model to production. Notably, a staggering 80 percent of models never reach production, underscoring the importance of optimizing the training process. A robust tool that manages this workflow efficiently and systematically is therefore essential. This is where MLflow steps in, providing the framework to streamline model development and deployment.

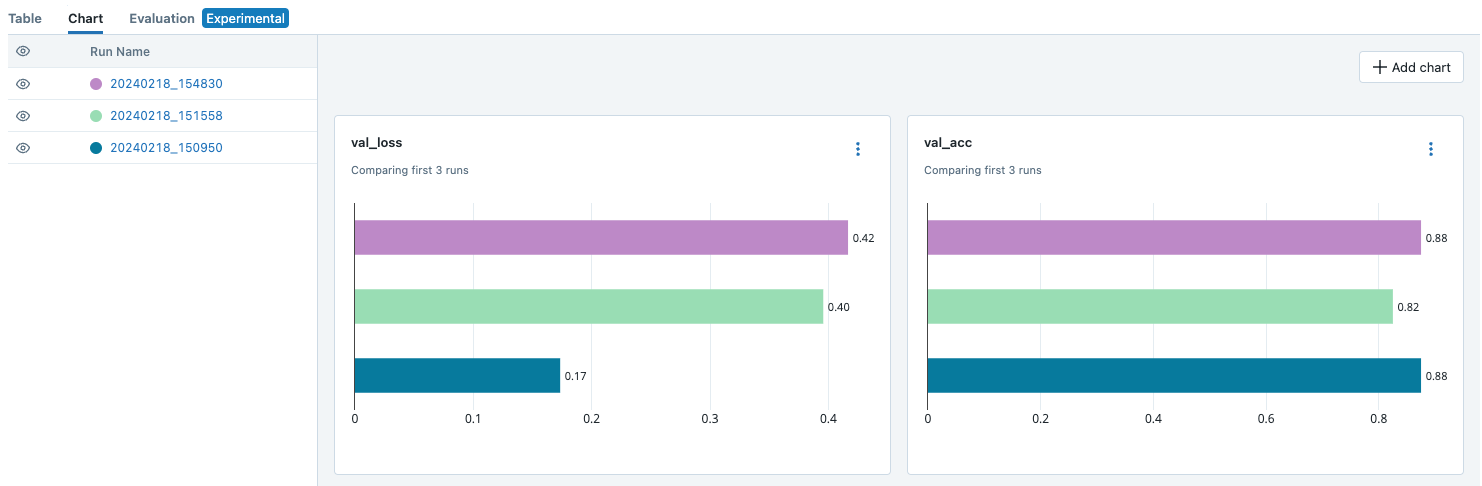

MLflow is a popular open-source solution that streamlines the management and tracking of machine learning experiments, enhances collaboration, and offers tools for a smooth progression from model development to deployment. It also enables effective comparison between experiments, so you can select the model best suited to your use case based on the training results. Finally, it can save and serve models, so you can test a model and query it via an API.

(Measuring Val Loss and Val Accuracy)
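To make the serving point concrete, here is a minimal sketch of how a client might build a query for a model served locally with `mlflow models serve`. It assumes MLflow 2.x's default scoring endpoint (`/invocations` on port 5000) and its `dataframe_split` JSON input format; the function name and feature names are hypothetical. It only builds the request with Python's standard library, so you can inspect it before sending it to a running server.

```python
import json
import urllib.request


def build_invocation_request(columns, rows, host="127.0.0.1", port=5000):
    """Build, but do not send, a scoring request for a locally served MLflow model.

    Assumption: the model is served with `mlflow models serve` (default port 5000)
    and accepts MLflow 2.x's "dataframe_split" JSON input format.
    """
    payload = {"dataframe_split": {"columns": list(columns), "data": [list(row) for row in rows]}}
    return urllib.request.Request(
        url=f"http://{host}:{port}/invocations",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical feature names, for illustration only.
    request = build_invocation_request(["feature_a", "feature_b"], [[1.0, 2.0], [3.0, 4.0]])
    print(request.get_method(), request.full_url)
    # Against a running server, you could send it and read the predictions:
    # with urllib.request.urlopen(request) as response:
    #     print(json.loads(response.read()))
```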

The JFrog MLflow Plugin

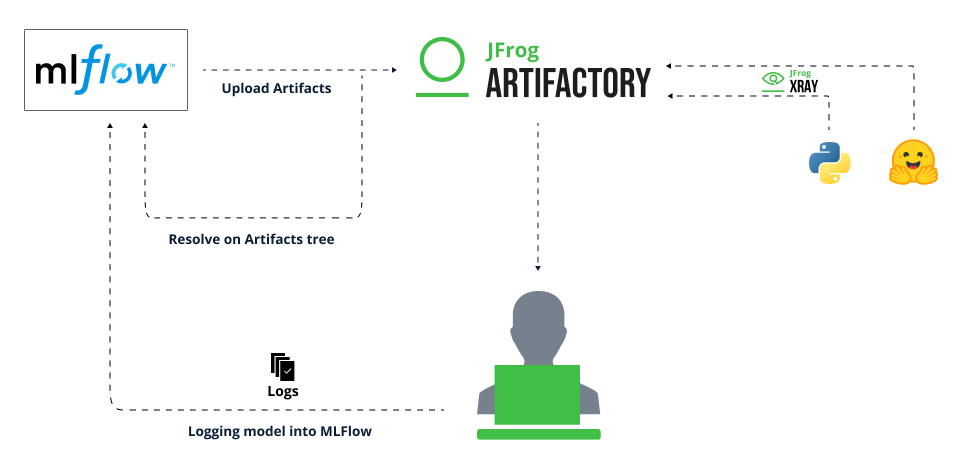

The JFrog plugin makes it easy to share and receive ML artifacts as you work on your models with MLflow. It helps you grab what you need, like dependencies and pre-trained models, while also letting you stash and check out your final model. Everything is kept organized in one reliable place: JFrog Artifactory.

Let’s talk about the flow. As shown in the diagram, the LLM developer first develops the model and fetches dependencies from PyPI and the pre-trained model from Hugging Face. The dependencies are scanned by JFrog Xray, cached in Artifactory, and proxied back to the developer. The developer then logs the model and its parameters into MLflow, and MLflow uploads all artifacts to the configured repository in Artifactory. When the developer later opens the MLflow UI or queries the model via MLflow, MLflow fetches the artifacts from Artifactory, where they were originally stored.
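On the developer side, the fetch step in this flow usually amounts to pointing pip and the Hugging Face client at Artifactory remote repositories. The sketch below assumes Artifactory's PyPI endpoint format (`/api/pypi/<repo>/simple`) and Hugging Face endpoint format (`/api/huggingfaceml/<repo>`), which you should confirm in the JFrog documentation for your version. `PIP_INDEX_URL` and `HF_ENDPOINT` are the standard environment variables read by pip and `huggingface_hub`; the helper name and repository names are hypothetical, and authentication is left out.

```python
import os


def artifactory_proxy_env(jfrog_host, pypi_repo, hf_repo, base_env=None):
    """Return a copy of the environment that routes pip and Hugging Face
    downloads through Artifactory remote repositories.

    Assumptions: Artifactory's PyPI endpoint is /api/pypi/<repo>/simple and its
    Hugging Face endpoint is /api/huggingfaceml/<repo>. Credentials are omitted.
    """
    env = dict(os.environ if base_env is None else base_env)
    api = f"https://{jfrog_host}/artifactory/api"
    # pip reads PIP_INDEX_URL as the default for its --index-url option.
    env["PIP_INDEX_URL"] = f"{api}/pypi/{pypi_repo}/simple"
    # huggingface_hub reads HF_ENDPOINT to override the default https://huggingface.co.
    env["HF_ENDPOINT"] = f"{api}/huggingfaceml/{hf_repo}"
    return env


if __name__ == "__main__":
    # Hypothetical host and repository names.
    env = artifactory_proxy_env("example.jfrog.io", "pypi-remote", "hf-remote", base_env={})
    print(env["PIP_INDEX_URL"])
    print(env["HF_ENDPOINT"])
```

You could then pass the returned dictionary as `env=` to `subprocess.run` when invoking `pip install` or a training script, leaving the parent environment untouched.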

Caveats

When working with this integration, note that some functionality can only be configured and accessed from within the JFrog Platform:

- Creating your MLflow repositories (prerequisite – see step-by-step below)

- Viewing your security and compliance scan results and alerts

- Creating and configuring the proxies for your dependencies, such as PyPI, Docker, and Hugging Face remote repositories (manual configuration)

Alternatively, you may be interested in learning more about JFrog MLOps, which unifies ML development, artifact management, and security in a single platform.

Governing and Securing Your ML Models

The JFrog MLflow plugin provides an out-of-the-box integration that combines the capabilities of JFrog and MLflow. It streamlines running experiments and retrieving models and their packages, all stored in a pre-configured repository on the JFrog Platform.

Securing your open source dependencies

The vast majority of ML projects use open-source models and dependencies and then fine-tune the pre-trained models. According to the latest findings from the JFrog Security Research team, there are more than 100 malicious models on Hugging Face today.

Most ML development projects start by selecting the components to use. With JFrog Advanced Security, you can download open-source models securely and confidently block any malicious ones, helping ensure the integrity and security of your AI ecosystem.

Governing your user access

With this integration, your repository resides on the JFrog Platform, where access to its artifacts is restricted to authorized users.

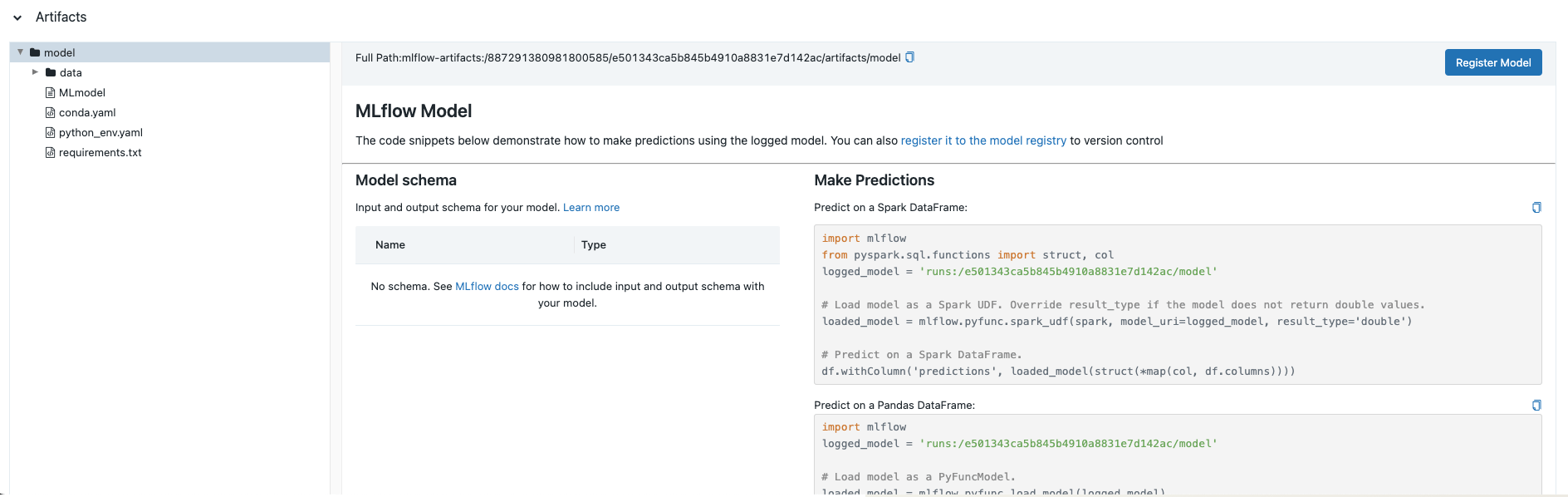

Training a model is an ongoing process that produces a large number of artifacts, and those artifacts must be kept orderly and safe for several reasons: they need to be retrieved quickly, and you need to control which entities can access them. This integration simplifies these operations by uploading artifacts automatically to your Artifactory repository. It also lets you share those artifacts and promote them to production-level repositories when they are ready.
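Promotion itself happens on the Artifactory side. As one possible approach, not necessarily the one the plugin uses, the sketch below builds a request to Artifactory's Copy Item REST endpoint (`POST /api/copy/{srcRepoKey}/{srcFilePath}?to=/{targetRepoKey}/{targetFilePath}`) to copy a run's artifacts from a development repository to a production one. The repository names, the run path, and the helper name are hypothetical; the request defaults to a dry run (`dry=1`) and is built but never sent.

```python
import urllib.parse
import urllib.request


def build_promotion_request(base_url, token, src_repo, src_path, dst_repo, dst_path=None, dry_run=True):
    """Build, but do not send, an Artifactory "Copy Item" request that copies
    artifacts from one repository (e.g. development) to another (e.g. production).

    base_url is the Artifactory root, e.g. https://<your_jfrog_host>/artifactory.
    """
    dst_path = src_path if dst_path is None else dst_path
    # Percent-encode each path but keep the "/" separators intact.
    src = urllib.parse.quote(f"{src_repo}/{src_path.strip('/')}", safe="/")
    dst = urllib.parse.quote(f"/{dst_repo}/{dst_path.strip('/')}", safe="/")
    url = f"{base_url.rstrip('/')}/api/copy/{src}?to={dst}"
    if dry_run:
        url += "&dry=1"  # ask Artifactory to validate the copy without performing it
    return urllib.request.Request(url, method="POST", headers={"Authorization": f"Bearer {token}"})


if __name__ == "__main__":
    # Hypothetical repositories and run folder (experiment 1, run abc123).
    request = build_promotion_request(
        "https://example.jfrog.io/artifactory", "<token>",
        "mlflow-dev", "1/abc123/artifacts/model", "mlflow-prod",
    )
    print(request.get_method(), request.full_url)
```

Keeping the dry run as the default means a CI job has to opt in explicitly before anything is copied into a production repository.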

Securing and controlling your model development process is a must. The MLflow JFrog integration helps you implement security and access control for your model artifacts and keep track of them, leading to a more efficient and safer process.

Here are three main benefits of the JFrog MLflow integration:

- Version control for your ML artifacts across their many versions.

- Access control for your ML artifacts, with user-specific permissions that regulate who can access them.

- CI/CD pipeline integration that streamlines the handling of your MLflow artifacts and keeps workflows consistent and efficient.

Get started in 5 easy steps

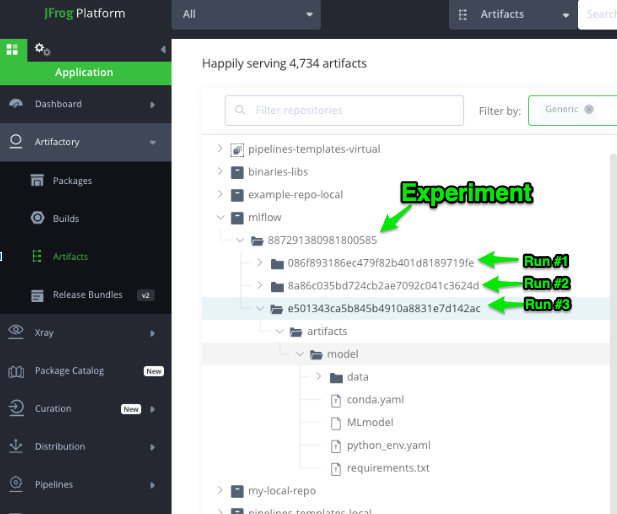

Prerequisite: Create your MLflow repository as a Generic repository type. This is where your experiment artifacts will be stored, with each experiment run stored in a separate folder.
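To find a given run's folder in that repository, it helps to know how MLflow lays out proxied artifacts. The sketch below assumes MLflow's default layout when the server is started with `--artifacts-destination`, namely `<destination>/<experiment_id>/<run_id>/artifacts/<artifact_path>`; experiments created with a custom artifact location will not follow it, and the helper name is hypothetical.

```python
def run_artifact_path(repo_name, experiment_id, run_id, artifact_path=""):
    """Return where a run's artifacts are expected inside the repository.

    Assumption: MLflow's default proxied layout
    <artifacts-destination>/<experiment_id>/<run_id>/artifacts/<artifact_path>.
    """
    parts = [repo_name.strip("/"), str(experiment_id), run_id, "artifacts"]
    if artifact_path.strip("/"):
        parts.append(artifact_path.strip("/"))
    return "/".join(parts)


if __name__ == "__main__":
    # Hypothetical repository name and run ID.
    print(run_artifact_path("mlflow-repo", 1, "abc123", "model/MLmodel"))
```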

Step 1. Install the JFrog plugin in the environment where your MLflow server will run.

pip install mlflow-jfrog-plugin

Step 2. Export the token that MLflow will use to publish and fetch the experiment’s artifacts: ARTIFACTORY_AUTH_TOKEN (mandatory).

export ARTIFACTORY_AUTH_TOKEN=<your_access_token>

Step 3. (Optional) Log the debug output of the JFrog MLflow plugin by setting ARTIFACTORY_DEBUG, which is false by default.

export ARTIFACTORY_DEBUG=true

Step 4. By default, when an experiment is deleted, its artifacts are also deleted from the corresponding JFrog Artifactory repository once MLflow’s garbage collector (mlflow gc) runs, which helps you manage storage resources. To keep the artifacts in Artifactory instead, set ARTIFACTORY_ARTIFACTS_DELETE_SKIP (false by default) to true.

export ARTIFACTORY_ARTIFACTS_DELETE_SKIP=true

Step 5. Run your MLflow server.

mlflow server --host 127.0.0.1 --port 8080 --gunicorn-opts '--timeout 180' --artifacts-destination artifactory://<your_jfrog_host>/artifactory/<repo_name>
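If you start the server from a script, a small preflight check can catch a missing token before MLflow tries to reach Artifactory. This is a minimal sketch with a hypothetical helper name: it verifies only that the mandatory `ARTIFACTORY_AUTH_TOKEN` is set and rebuilds the Step 5 command as an argument list; `example.jfrog.io` and `mlflow-repo` are placeholder values.

```python
import os
import shlex


def build_mlflow_server_command(jfrog_host, repo_name, host="127.0.0.1", port=8080, env=None):
    """Check the plugin's mandatory setting and return the Step 5 command as a list."""
    env = os.environ if env is None else env
    if not env.get("ARTIFACTORY_AUTH_TOKEN"):
        raise RuntimeError("ARTIFACTORY_AUTH_TOKEN is mandatory; export it before starting MLflow")
    destination = f"artifactory://{jfrog_host}/artifactory/{repo_name}"
    return [
        "mlflow", "server",
        "--host", host,
        "--port", str(port),
        "--gunicorn-opts", "--timeout 180",
        "--artifacts-destination", destination,
    ]


if __name__ == "__main__":
    # Placeholder host and repository; a dummy token stands in for the real one.
    cmd = build_mlflow_server_command("example.jfrog.io", "mlflow-repo", env={"ARTIFACTORY_AUTH_TOKEN": "dummy"})
    print(shlex.join(cmd))
    # subprocess.run(cmd, check=True) would start the server; the child process
    # inherits the current environment, including the variables from Steps 2 to 4.
```

Passing the command as a list rather than a single string avoids shell quoting issues with the `--gunicorn-opts '--timeout 180'` argument.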