Integrating JFrog Artifactory with Amazon SageMaker

Today, we’re excited to announce a new integration with Amazon SageMaker! SageMaker helps companies build, train, and deploy machine learning (ML) models for any use case with fully managed infrastructure, tools, and workflows. By leveraging JFrog Artifactory and Amazon SageMaker together, ML models can be delivered alongside all other software development components in a modern DevSecOps workflow, making each model immutable, traceable, secure, and validated as it matures for release. In this blog post, we’ll dive into customer use cases, benefits, and how to get started using this new integration.

Table of Contents:

- What is Artifactory?

- What is SageMaker?

- Why do I need Artifactory for my AI/ML development?

- What are the advantages of the Artifactory and SageMaker combo?

- What tools does JFrog provide for AI/ML model development?

- Where does it make sense to integrate Artifactory with SageMaker?

- Example workflows within Artifactory and SageMaker

- Final thoughts and conclusion

What is Artifactory?

JFrog Artifactory is the single solution for housing and managing all the artifacts, binaries, packages, files, containers, and components for use throughout your software supply chain. It’s more than just inventory management — Artifactory provides sophisticated access control to stored resources, enterprise scalability with support for a mature high availability architecture, and a wealth of automation tools to support the software development lifecycle (SDLC). It’s just part of the JFrog Software Supply Chain Platform, a comprehensive toolset for DevSecOps that includes tools to ensure the safety, traceability, and efficient delivery of software components for development and production environments.

What is SageMaker?

Amazon SageMaker is a fully managed service that brings together a broad set of tools to enable high-performance, low-cost machine learning (ML) for any use case. With SageMaker, you can build, train, and deploy ML models at scale using tools like notebooks, debuggers, profilers, pipelines, MLOps, and more – all in one integrated development environment (IDE).

The environments that support the artificial intelligence (AI) and machine learning (ML) lifecycles vary with an organization’s maturity, its existing processes and resources, and the tools its teams already know and prefer. Often this means designing systems composed of multiple components, both to provide flexibility and to meet the specific requirements of developer, infrastructure, and security teams.

Given the focus of SageMaker on improving the development lifecycle of AI/ML, and the flexibility and maturity of Artifactory to protect and manage software binaries, integrating these two powerful solutions is a pragmatic approach for any AI/ML development.

Why do I need Artifactory for my AI/ML development?

Models are large, complex binaries consisting of multiple parts — and just as it is for other common software components and artifacts, Artifactory is an ideal place to host, manage, version, trace, and secure models.

It’s crucial to understand what goes into AI/ML development. Just like in other types of software development, third-party software packages are frequently used to accelerate development by avoiding writing a lot of code from scratch. These packages compose the beginning building blocks of many applications and frameworks. Third-party resources can come into development environments in various ways: from pre-installed packages or frameworks, through public repositories such as PyPI, Docker Hub, and Hugging Face, or from private repositories containing proprietary code or other artifacts that require authentication.

The inventory of dependencies that your project relies on can grow and change over time, and effectively managing them involves more than knowing the first level of dependencies that often come in the form of import statements in your code. What about the dependencies of these dependencies? What about the versions of all of these dependencies? Tracking these items is imperative to both the stability and reliability of software development projects — including AI/ML projects.
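As a rough illustration of why version tracking matters, the sketch below flags entries in a requirements file that are not pinned to one exact version. The function name `find_unpinned` and the sample requirements are hypothetical, and the check is deliberately simple: it only looks at direct dependencies, so it is a starting point rather than a substitute for a full dependency inventory.

```python
import re

# Matches an exact pin such as "numpy==1.26.4" (optionally with extras).
# Wildcard pins like "==1.4.*" are intentionally NOT treated as exact.
_PINNED = re.compile(r"[A-Za-z0-9._-]+(\[[^\]]+\])?\s*==\s*[^\s*]+")


def find_unpinned(requirements_text: str) -> list[str]:
    """Return requirement lines that are not pinned to one exact version."""
    unpinned = []
    for raw in requirements_text.splitlines():
        line = raw.split("#", 1)[0]           # drop trailing comments
        line = line.split(";", 1)[0].strip()  # drop environment markers
        if not line or line.startswith("-"):  # skip blanks and pip options
            continue
        if not _PINNED.fullmatch(line):
            unpinned.append(line)
    return unpinned


# Hypothetical requirements for a training script.
example = """
# direct dependencies
torch==2.2.1
transformers>=4.38
pandas
scikit-learn==1.4.*
"""
print(find_unpinned(example))
# ['transformers>=4.38', 'pandas', 'scikit-learn==1.4.*']
```

A check like this could run in CI, but it says nothing about transitive dependencies, which is where a repository manager that retains every resolved version becomes useful.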

Consider the following scenarios:

The latest version of a public Python package you rely on has been imported into your project, but one of its dependencies has been modified in a way that’s incompatible with your current code, causing new development to come to a halt. Unfortunately, your developers have always just downloaded the latest version and the last working version of the offending dependency isn’t available from the original source. How will you recover?

A security vulnerability is discovered in the Docker image you regularly use to put your ML model into production. Unfortunately, the vulnerability was in an old version of a base image pulled from Docker Hub, and although it was a known CVE, it wasn’t discovered until it was exploited in your production system. Could this have been prevented?

It’s discovered that an ML model is licensed in a way that prohibits your organization’s current use. Fortunately, this was caught before it was released into production. Unfortunately, much of the code base relies on this particular model’s functionality. How much time will it take to find an alternative and potentially rewrite the related code?

Any one of these scenarios can throw a wrench in your production pipeline. Practical management of the inventory of all the artifacts used and produced during AI/ML development will help in recovery and reduce downtime. An enterprise-level artifact management system like Artifactory provides the foundation for effective troubleshooting, recovery, and error prevention. Combined with other components of the JFrog Platform, such as JFrog Xray, it also helps address more serious regulatory compliance and security issues, including licensing problems and known vulnerabilities.

What are the advantages of the Artifactory and SageMaker combo?

SageMaker already provides a ton of resources for AI/ML development workflows. You can choose from available models and images that already include the most popular distributions, Python libraries, and ML frameworks and algorithms.

But what if you are in the business of building and producing custom and proprietary artifacts?

Built-in resources are generally intended to satisfy a majority of use cases, which usually means the most commonly used packages, libraries, and models are provided. Having these available is helpful for exploring and comparing feature sets, learning the basics of the platform or a specific framework, or building proof-of-concept type material. Over time, more specialized use cases might be addressed and more built-in resources will be provided, but this will likely be decided by popular demand over an individual organization’s immediate needs.

There will undoubtedly be cases where built-in resources can only provide a starting place, including but not limited to the following scenarios:

- Proprietary Code or Models

Model developers may need to access proprietary resources for use in research, training, and testing.

- Standardized Development Environments

An organization’s platform or infrastructure team may wish to standardize development environments to improve efficiency and to meet safety and security regulations required for production deployment.

- Lean Production Artifacts

In some cases, it might be necessary to eliminate automatically supplied packages and frameworks that are superfluous and unused in the final product.

In all of these use cases, the ability to customize will become essential to your organization when developing production-grade custom models and the applications that use them.

What tools does JFrog provide for AI/ML model development?

JFrog is already a powerhouse in the context of artifact management and security. JFrog and AWS cooperate to provide toolsets that further the agenda to improve quality, speed up iterative development, and provide safe and secure environments for AI/ML development workflows.

Just as artifact management systems are essential for software projects in other industries, proper artifact management addresses many of the same problems that exist in AI/ML model development. AI/ML development can greatly benefit from utilizing existing software development processes that improve quality by adhering to established DevOps best practices and by taking advantage of existing infrastructure and tooling that meets organizational regulatory compliance requirements.

JFrog Artifactory provides the foundation for managing software artifacts in a centralized location. The following are just some of the features of Artifactory and other commonly used tools that benefit AI/ML development:

Hugging Face Repositories: Most recently, in a significant contribution to the AI/ML ecosystem, JFrog introduced Hugging Face repositories to Artifactory: remote repositories to proxy models stored in the Hugging Face Hub, and local repositories to store custom and proprietary models. We also recently announced new capabilities with the general availability release of ML Model Management, including ML model versioning.

Container Image Registry: Artifactory already provides a Container Image Registry to store images used for model training and deployment as well as proxy images from popular public registries such as Docker Hub.

Python Repositories: Python repositories in Artifactory are available to store proprietary Python packages or to proxy from public indexes such as PyPI.

NOTE: Artifactory provides repositories for managing 30+ other package types in addition to PyPI packages!

JFrog CLI: A robust command line tool to communicate with and operate an Artifactory instance and other components within the JFrog Platform (e.g. JFrog Xray).

All of these can (and should!) be leveraged in SageMaker environments.

Where does it make sense to integrate Artifactory with SageMaker?

There are many ways to use Artifactory with SageMaker, but the primary objective is always the same: give the SageMaker service authorized access to an Artifactory instance at the appropriate points during development, so that artifacts can be retrieved from Artifactory (including proxied requests for artifacts from remote repositories) and all artifacts needed for a project can be stored there.

But first, secure the Amazon SageMaker environment!

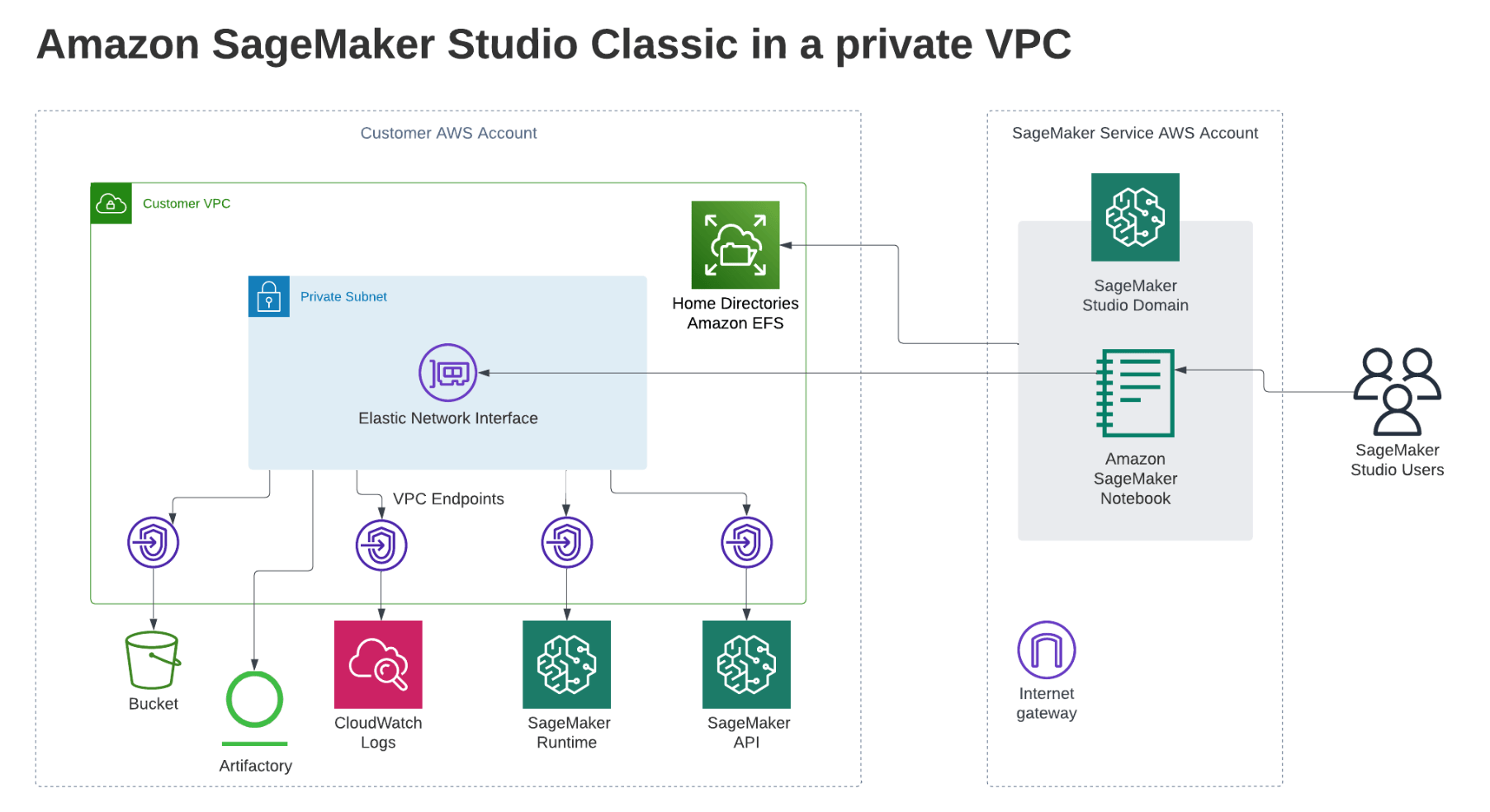

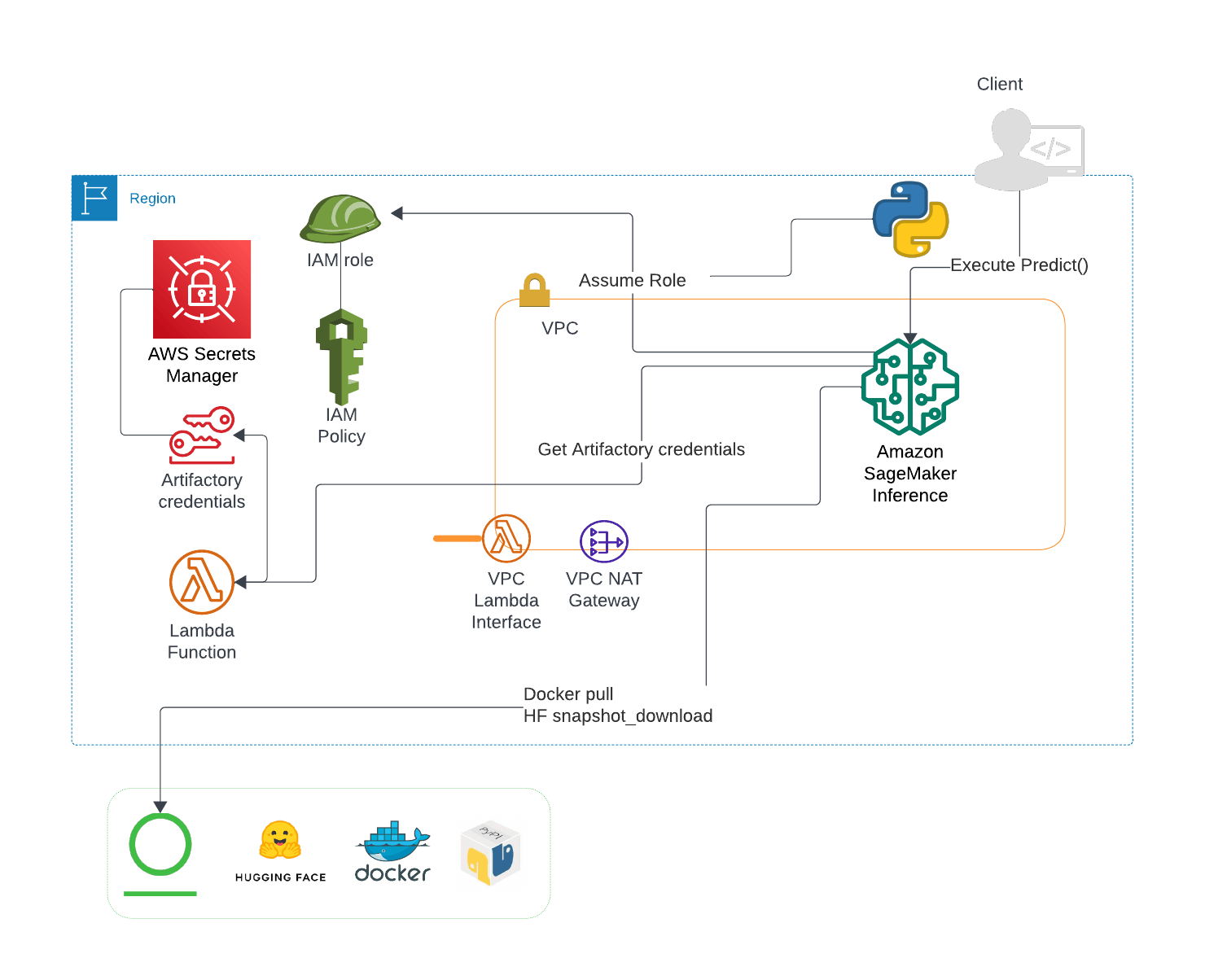

The recommended approach for using any of the resources in SageMaker along with Artifactory is to operate SageMaker in VPC-only mode, with a SageMaker domain set up within the VPC. Learn more about getting started with SageMaker and SageMaker domains here, and about VPC-only setup here.

Example workflows within Artifactory and SageMaker

Beginning with an environment including a private VPC and a SageMaker domain with existing users, the following examples are potential workflows for training and deploying models using SageMaker services, and working within SageMaker notebooks and Notebook Instances. Artifactory fits nicely at each place in the workflow that requires either retrieval or storage of packages, dependencies, and other development artifacts.

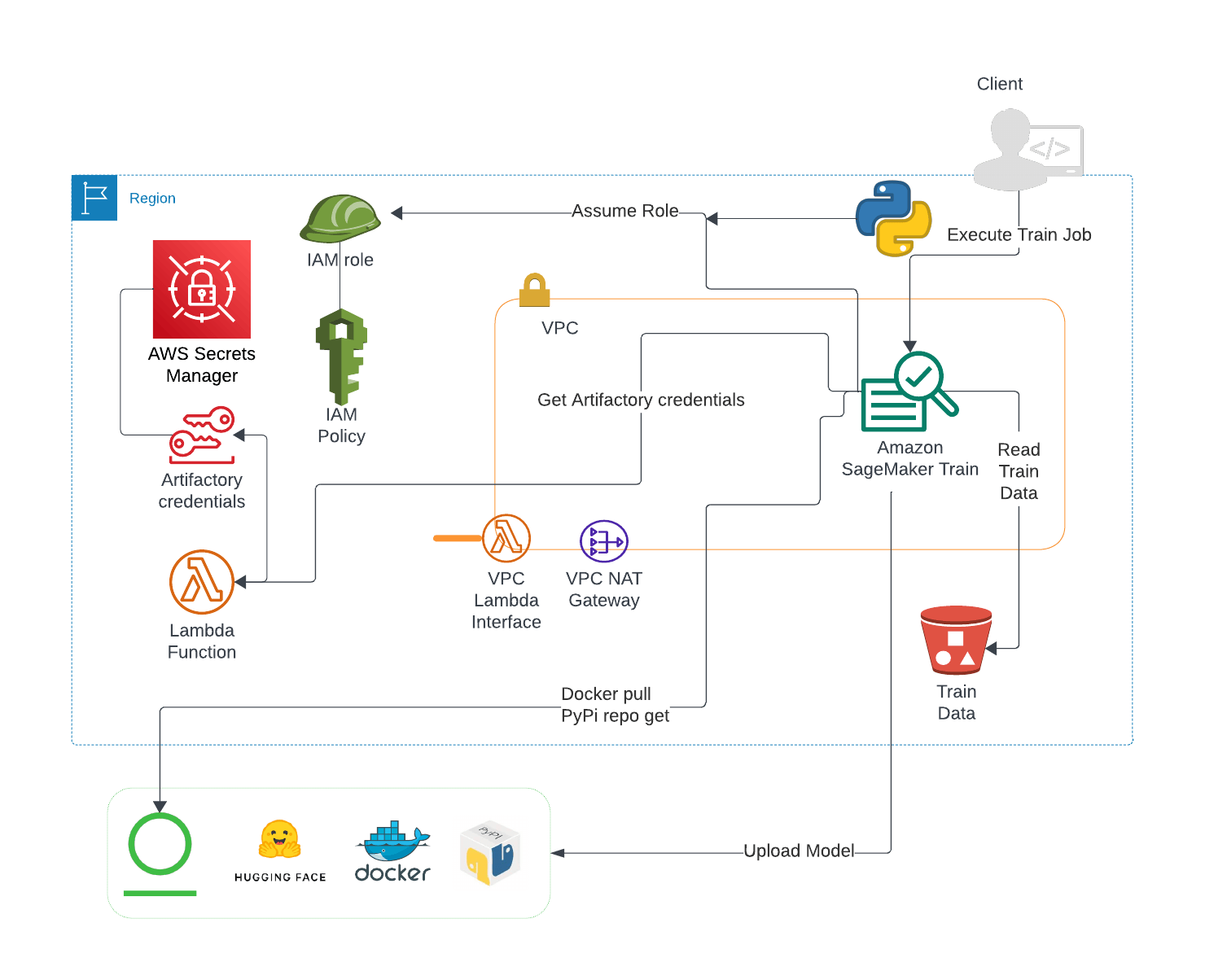

Because training and deploying ML models are such integral tasks of ML model development, let’s start with workflows that use an existing development environment on a developer’s machine but still allow for launching SageMaker training jobs and deploying ML models to a SageMaker endpoint to make predictions (inference).

Training an ML model

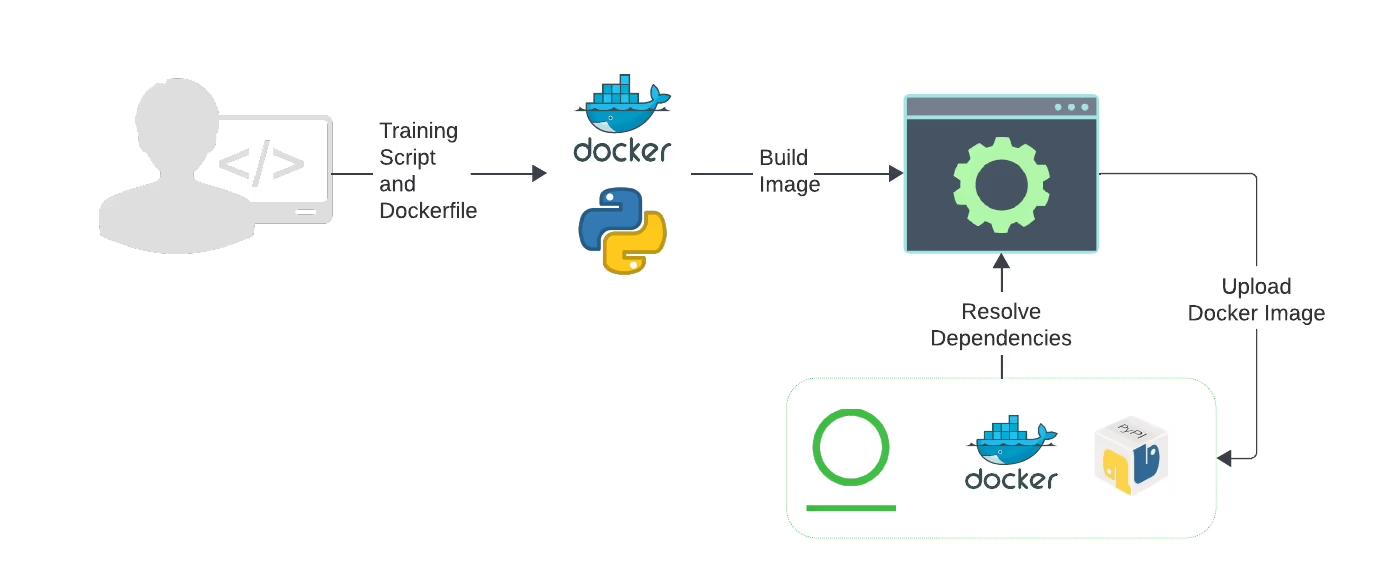

One of the key undertakings of a model developer is to train a particular model on a set of prepared data. The following workflow takes a developer from the creation of a container image required by the SageMaker service for the purpose of launching a SageMaker training job, to storing a trained model version in a private Hugging Face repository in Artifactory.

The first order of business is to create a Docker container image that will encapsulate a script to be used for training a model on a dataset from an Amazon S3 bucket. The SageMaker Python SDK and base images readily available on Docker Hub include popular machine learning frameworks and algorithms, and provide many of the required training utilities.

In this case, the work of writing the training script and building the Docker image is accomplished on a developer’s machine, which is configured to resolve artifacts from Artifactory. Another option for a continuous integration environment is to commit changes made to a Dockerfile and/or training script to a code repo that triggers a build of the Docker image on a build server, which then delivers the resulting image to Artifactory. Note that in either case, requests for the base image, Python packages, or other dependencies to be included in the Docker image are also resolved from Artifactory.

Once the training Docker image is built, the image is pushed to a Docker repository in Artifactory, to be accessed later by the SageMaker service.
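To make the build-and-push step concrete, here is a minimal sketch that assembles the image reference and the Docker commands. The host `mycompany.jfrog.io`, the repository `ml-docker-local`, and the image name are hypothetical, and the `<host>/<repo>/<image>:<tag>` layout assumes the Docker repository is addressed by repository path; an instance configured differently would need a different reference format.

```python
import shlex


def artifactory_image_ref(registry_host: str, docker_repo: str, image: str, tag: str) -> str:
    """Build '<host>/<repo>/<image>:<tag>' (assumed repository-path naming)."""
    return f"{registry_host}/{docker_repo}/{image}:{tag}"


def build_and_push_commands(image_ref: str, context_dir: str = ".") -> list[list[str]]:
    """Return the docker commands that build and then push the image."""
    return [
        ["docker", "build", "--tag", image_ref, context_dir],
        ["docker", "push", image_ref],
    ]


# All names below are placeholders, not values from a real environment.
ref = artifactory_image_ref("mycompany.jfrog.io", "ml-docker-local", "sm-train", "1.0.0")
for cmd in build_and_push_commands(ref):
    print(shlex.join(cmd))
```

Each command list could be passed to `subprocess.run(cmd, check=True)` on a machine that has already authenticated with `docker login` against the same host.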

The next step is to launch a SageMaker training job. This can also be done from a local development machine utilizing the SageMaker Python SDK. The training job is executed, and the resulting trained model version can then be uploaded to a local Hugging Face repository in Artifactory.
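Launching the job from a local machine might look roughly like the following sketch. It assumes SageMaker pulls the training image from a private registry inside the VPC, which the SageMaker Python SDK exposes through the `training_repository_access_mode` and `training_repository_credentials_provider_arn` arguments of `Estimator`. Every ARN, subnet, bucket, and image name is a placeholder, and the instance type is an arbitrary choice.

```python
def training_estimator_kwargs(image_uri, role_arn, subnets, security_group_ids,
                              output_s3_uri, credentials_lambda_arn=None):
    """Collect Estimator arguments for a training image hosted outside ECR."""
    kwargs = {
        "image_uri": image_uri,              # image stored in Artifactory
        "role": role_arn,                    # SageMaker execution role
        "instance_count": 1,
        "instance_type": "ml.m5.xlarge",     # arbitrary example size
        "output_path": output_s3_uri,        # where model artifacts are written
        "subnets": subnets,                  # VPC subnets that can reach Artifactory
        "security_group_ids": security_group_ids,
        "training_repository_access_mode": "Vpc",
    }
    if credentials_lambda_arn:
        # Lambda function that returns registry credentials to SageMaker.
        kwargs["training_repository_credentials_provider_arn"] = credentials_lambda_arn
    return kwargs


def launch_training_job(kwargs, train_s3_uri):
    """Start the job; needs the sagemaker package and AWS credentials."""
    from sagemaker.estimator import Estimator  # imported lazily on purpose

    estimator = Estimator(**kwargs)
    estimator.fit({"training": train_s3_uri})
    return estimator


# Placeholder values only.
kwargs = training_estimator_kwargs(
    image_uri="mycompany.jfrog.io/ml-docker-local/sm-train:1.0.0",
    role_arn="arn:aws:iam::111122223333:role/ExampleSageMakerRole",
    subnets=["subnet-0example"],
    security_group_ids=["sg-0example"],
    output_s3_uri="s3://example-bucket/model-output/",
)
print(sorted(kwargs))
```

Keeping the argument assembly separate from the SDK call makes the configuration easy to review or unit test without touching AWS. Uploading the resulting model version to a Hugging Face repository in Artifactory is a separate step not shown here.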

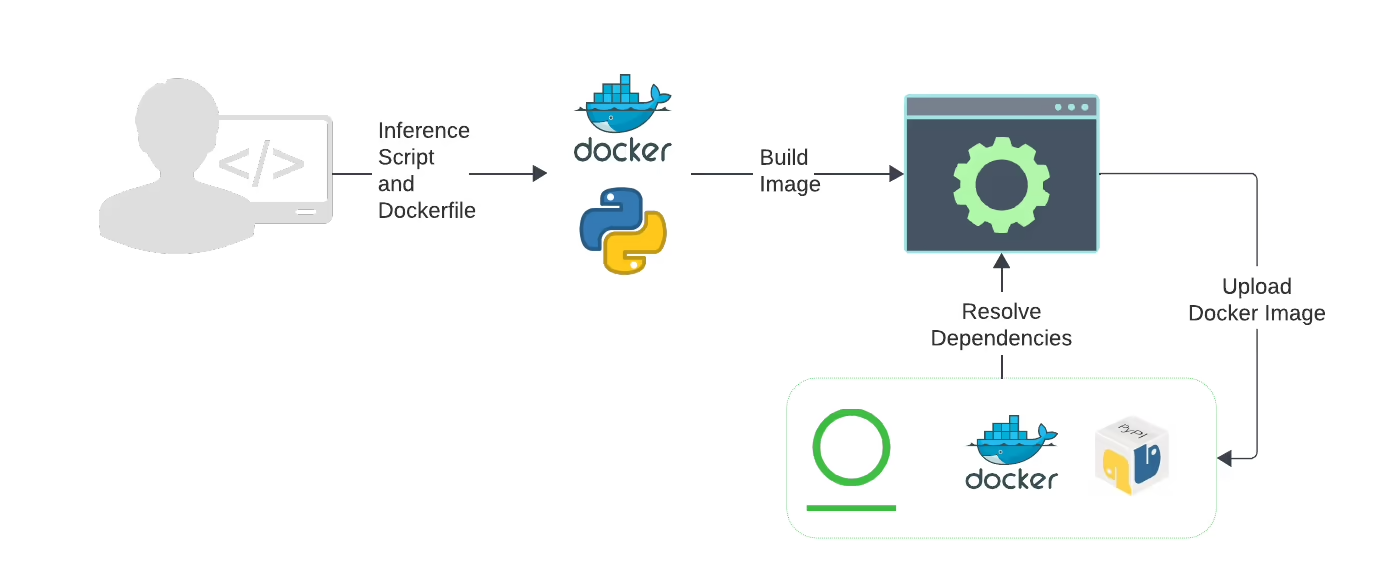

Deploying an ML model for Inference

Once a custom, trained model has been uploaded to Artifactory, this model can now be deployed to the SageMaker Inference service and tested using a SageMaker endpoint. The following workflow takes a developer from the creation of a container image required by the SageMaker Inference service to the deployment of a model to run predictions against.

Similar to the process of training an ML model, begin with the creation of a custom Docker container that includes the necessary configuration and scripts for running predictions against a given model with SageMaker Inference. All packages and artifacts needed for this task are resolved from Artifactory, and the resulting custom Docker image is pushed to a Docker repository in Artifactory.

Now that the custom image is available in Artifactory, a Python script utilizing the SageMaker Python SDK can deploy the model to SageMaker Inference. All of the necessary configuration parameters required by SageMaker Inference as well as the location of the custom Docker image and the Hugging Face model in Artifactory can be provided using the SageMaker Python SDK Model initialization and methods.

Once the model is deployed and a SageMaker endpoint is active, it will be possible to run predictions utilizing the SageMaker Python SDK Predictor.
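The deployment and prediction steps can be sketched as follows, again under stated assumptions: the image is pulled from a private registry in the VPC via the `image_config` argument of `Model`, and the container's inference script reads the model location from environment variables. `HF_ENDPOINT` is a real `huggingface_hub` setting, but using `HF_MODEL_ID` to tell a custom container which model to load is a hypothetical convention here, as are all names and ARNs.

```python
def inference_model_kwargs(image_uri, role_arn, subnets, security_group_ids,
                           hf_endpoint, model_id, credentials_lambda_arn=None):
    """Collect Model arguments for an inference image hosted outside ECR."""
    image_config = {"RepositoryAccessMode": "Vpc"}
    if credentials_lambda_arn:
        # Lambda function that returns registry credentials to SageMaker.
        image_config["RepositoryAuthConfig"] = {
            "RepositoryCredentialsProviderArn": credentials_lambda_arn
        }
    return {
        "image_uri": image_uri,
        "role": role_arn,
        "image_config": image_config,
        "vpc_config": {"Subnets": subnets, "SecurityGroupIds": security_group_ids},
        # Read by the (hypothetical) inference script inside the container.
        "env": {"HF_ENDPOINT": hf_endpoint, "HF_MODEL_ID": model_id},
    }


def deploy_and_predict(kwargs, payload: bytes):
    """Deploy, send one request, then delete the endpoint. Needs sagemaker."""
    from sagemaker.model import Model          # imported lazily on purpose
    from sagemaker.predictor import Predictor

    model = Model(predictor_cls=Predictor, **kwargs)
    predictor = model.deploy(initial_instance_count=1, instance_type="ml.m5.xlarge")
    try:
        return predictor.predict(payload)
    finally:
        predictor.delete_endpoint()  # avoid paying for an idle endpoint


# Placeholder values only.
model_kwargs = inference_model_kwargs(
    image_uri="mycompany.jfrog.io/ml-docker-local/sm-inference:1.0.0",
    role_arn="arn:aws:iam::111122223333:role/ExampleSageMakerRole",
    subnets=["subnet-0example"],
    security_group_ids=["sg-0example"],
    hf_endpoint="https://mycompany.jfrog.io/artifactory/api/huggingfaceml/hf-local",
    model_id="example-org/example-model",
)
print(model_kwargs["image_config"])
```

Deleting the endpoint in a `finally` block is a choice for quick tests; a long-lived endpoint would instead be created once and queried through `Predictor(endpoint_name=...)`.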

Working in Notebook Instances, SageMaker Studio Classic Notebooks, and other SageMaker resources

The sections on training and deploying ML models assumed a development environment separate from SageMaker, and the use of an IDE on an individual development machine. But what about using SageMaker tools like Studio Classic Notebooks and Notebook Instances?

One of the primary focuses of SageMaker is to provide full-featured environments to code, build, and run everything needed for AI/ML development. The primary advantage of this approach is improved efficiency for developers by avoiding context switching in and out of SageMaker. It’s also possible to customize these environments to provide only the software and tools approved for use by an individual organization to meet safety and security regulations.

Organizations that rely on the enterprise features of Artifactory (and the JFrog Platform) can now resolve artifacts from Artifactory, and store artifacts in it, directly from SageMaker Studio Classic Notebooks and Notebook Instances.

One option is to standardize the use of Artifactory within SageMaker Studio Classic Notebooks and Notebook Instances by attaching a lifecycle configuration script. For example, consider using a lifecycle configuration script that accesses sensitive authentication and configuration information from an AWS secret, and performs the following actions with that information:

- Install & Configure JFrog CLI

- Configure pip for virtual PyPI repository in Artifactory

- Configure Hugging Face SDK to work with Artifactory

As a result, each time a user launches a Notebook Instance, creates a SageMaker Studio Classic Notebook, or launches a code console or image terminal, the setup needed to communicate with an instance of Artifactory is completed in a standardized and secure way. Without any additional effort, users will be able to resolve any Hugging Face Hub or PyPI dependencies required for their workflow through Artifactory.
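As a sketch of what the pip and Hugging Face parts of such a script need to produce, the helper below turns a secret into a `pip.conf` body and a set of environment variables. The secret's JSON keys (`host`, `user`, `token`) and the repository names are hypothetical. The URL layouts follow Artifactory's `api/pypi/<repo>/simple` and `api/huggingfaceml/<repo>` conventions and should be checked against your own instance. Installing and configuring the JFrog CLI is not covered.

```python
import json
from urllib.parse import quote


def artifactory_client_config(secret_json: str, pypi_repo: str, hf_repo: str) -> dict:
    """Derive pip and Hugging Face client settings from a JSON secret."""
    secret = json.loads(secret_json)  # hypothetical keys: host, user, token
    host, user, token = secret["host"], secret["user"], secret["token"]
    base = f"https://{host}/artifactory"

    # Percent-encode credentials so characters like '@' survive in the URL.
    credentials = f"{quote(user, safe='')}:{quote(token, safe='')}"
    pip_conf = (
        "[global]\n"
        f"index-url = https://{credentials}@{host}/artifactory/api/pypi/{pypi_repo}/simple\n"
    )
    hf_env = {
        "HF_ENDPOINT": f"{base}/api/huggingfaceml/{hf_repo}",
        "HF_TOKEN": token,
        "HF_HUB_ETAG_TIMEOUT": "86400",  # generous timeout for proxied lookups
    }
    return {"pip_conf": pip_conf, "hf_env": hf_env}


# Placeholder secret; a real script would read it from AWS Secrets Manager.
demo_secret = json.dumps(
    {"host": "mycompany.jfrog.io", "user": "dev@example.com", "token": "example-token"}
)
config = artifactory_client_config(demo_secret, "pypi-virtual", "hf-remote")
print(config["hf_env"]["HF_ENDPOINT"])
```

A lifecycle script could write `pip_conf` to the user's pip configuration file and export the `hf_env` entries in the shell profile, so that notebooks and terminals pick them up automatically.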

To see an example of a lifecycle configuration script and a step-by-step example of how to use it with SageMaker Studio Classic Notebooks and Notebook Instances, check out this Knowledge Base Article.

Final thoughts and conclusion

Bringing the enterprise-level management of JFrog Artifactory to Amazon SageMaker is recommended in all stages of the AI/ML lifecycle. The combination of JFrog and AWS helps to alleviate the complexity and cost concerns of iterative ML model development, testing, and deployment.

JFrog Artifactory is widely used to establish best DevOps practices for artifact storage and management. Ensuring that all development artifacts are sourced through JFrog Artifactory allows for more than just basic accounting and software inventory management — this practice will ensure an organization is set up for success when it comes to regulatory compliance, licensing, and safety concerns when using third-party software packages.

The initial setup and configuration of SageMaker and Artifactory, and the customization of the environment, can be tricky if you’re unfamiliar with AWS VPC networking, SageMaker domains, AWS IAM roles and permissions, or building Docker images. For detailed documentation on how to incorporate Artifactory in your SageMaker environments, check out the JFrog Knowledge Base for a working demo along with detailed step-by-step guides and explanations.

Plus, join me and the team at AWS to learn more about this integration along with new ML capabilities from JFrog in our webinar, now available on-demand: Building for the future: DevSecOps in the era of AI/ML model development.