From MLOps to MLOops: Exposing the Attack Surface of Machine Learning Platforms

NOTE: This research was recently presented at Black Hat USA 2024, under the title “From MLOps to MLOops – Exposing the Attack Surface of Machine Learning Platforms”.

The JFrog Security Research team recently dedicated its efforts to exploring the various attacks that could be mounted on open source machine learning (MLOps) platforms used inside organizational networks.

Our research culminated in more than 20 disclosed CVEs to various ML vendors, and a deeper understanding of how real-world attacks can be launched against deployed MLOps platforms.

In this blog post, we will tackle –

- Core features of MLOps platforms

- How each MLOps feature can be attacked

- Best practices for deploying MLOps platforms

- What can MLOps do for you

- Inherent vs. Implementation Vulnerabilities

- Inherent Vulnerabilities in MLOps Platforms

- Implementation Vulnerabilities in MLOps Platforms

- How would an attacker chain these vulnerabilities together?

- Mapping MLOps features to possible attacks

- Mitigating some of the attack surface

- Takeaways for deploying MLOps platforms in your organization

What can MLOps do for you

Before we list the various MLOps platform attacks, let’s familiarize ourselves with some basic MLOps concepts.

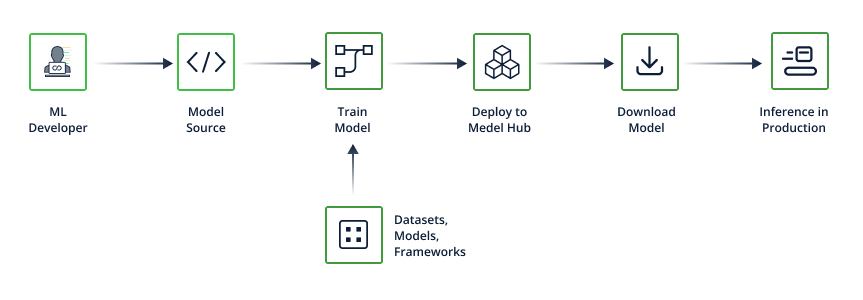

The common lifecycle of building and deploying a machine learning model involves –

- Choosing a machine learning algorithm (ex. SVM or Decision Tree)

- Feeding a dataset to the algorithm (“Training” the model)

- This produces a “pretrained” model which can be queried

- Optionally – Deploying the pretrained model to a model registry

- Deploying the pretrained model to production, either by –

- Embedding it in an application

- Deploying it to an Inference server (“Model Serving” or “Model as a Service”)

Let’s take a deeper look into each of these steps.

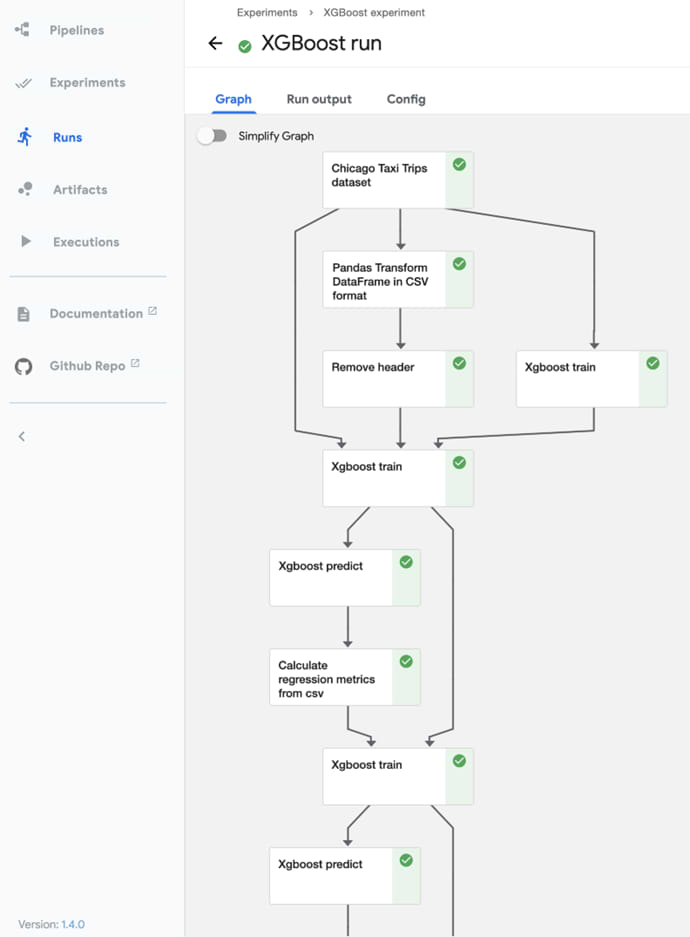

MLOps Pipeline

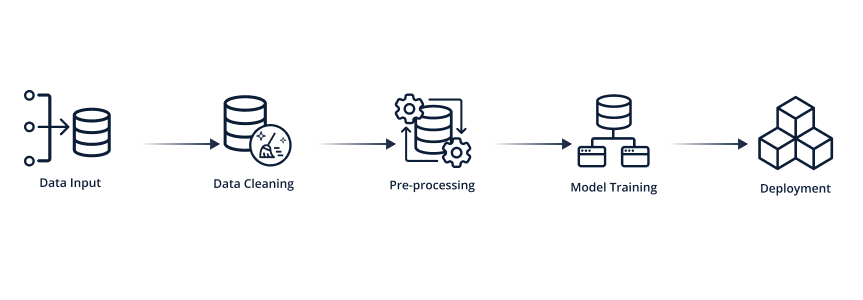

As mentioned, MLOps platforms provide the ability to construct and run an ML model pipeline. The idea is to automate the various stages of model development and deployment.

An MLOps pipeline is similar to a traditional DevOps pipeline.

For example, in a DevOps pipeline we might perform a nightly build based on source code changes, but in an MLOps pipeline we might perform a nightly model training based on dataset changes.

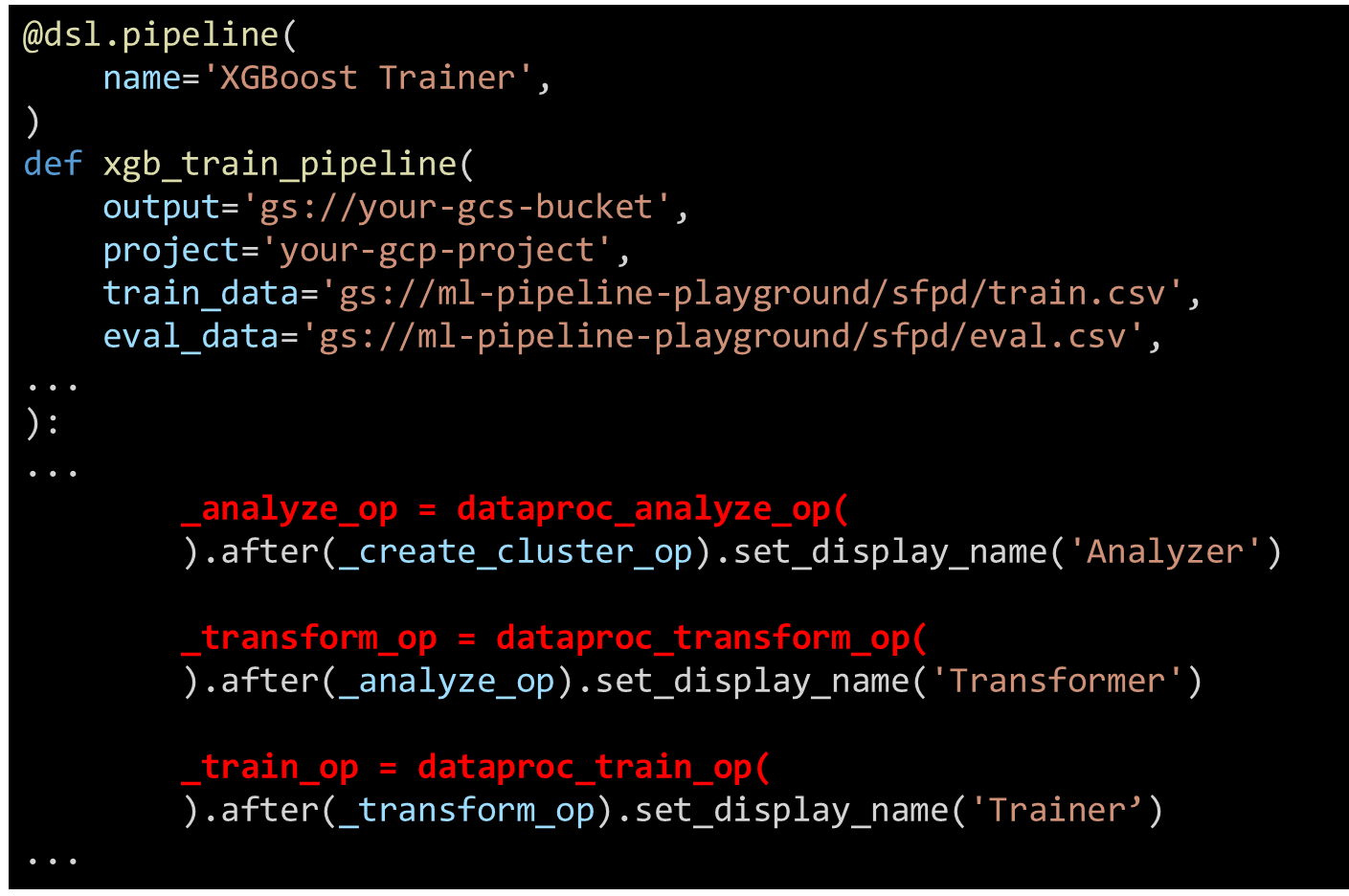

ML Pipelines are usually defined through Python code, the pipeline code monitors for changes in either the dataset or model parameters, and then trains a new model, evaluates it and if it passes the evaluation – deploys it to production.

For example – we can see abbreviated Python code from the popular Kubeflow platform that defines an MLOps pipeline which analyzes, transforms, trains and evaluates a machine learning model, based on datasets stored in (Google) cloud storage that can be constantly updated

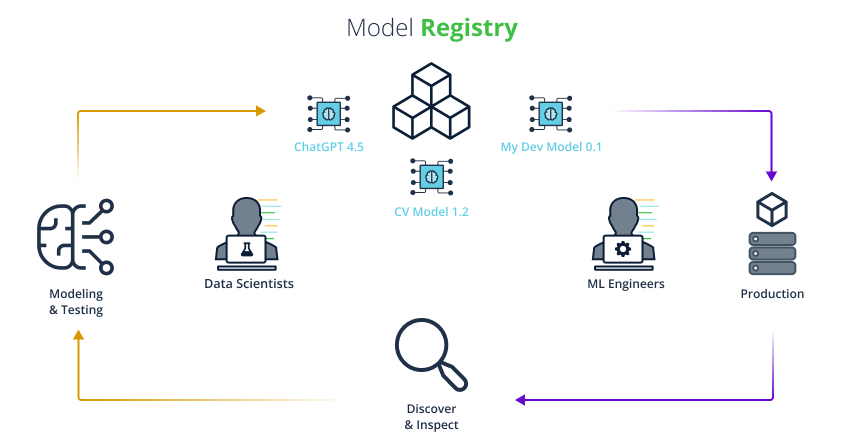

Model Registry

After training a model, either manually or by running an ML pipeline, the most robust way for tracking the pre-trained models is by using a Model Registry.

The Model registry acts as a version control mechanism for ML models. It is the single source of truth for an organization’s ML models and allows for easy fetching of specific model versions, aliasing, tagging and more.

Data scientists use training data to create the models, and then collaborate and iterate on different models and different versions of the models.

ML engineers can then promote some of these models to production machines where the models will be served to clients that will be able to query them.

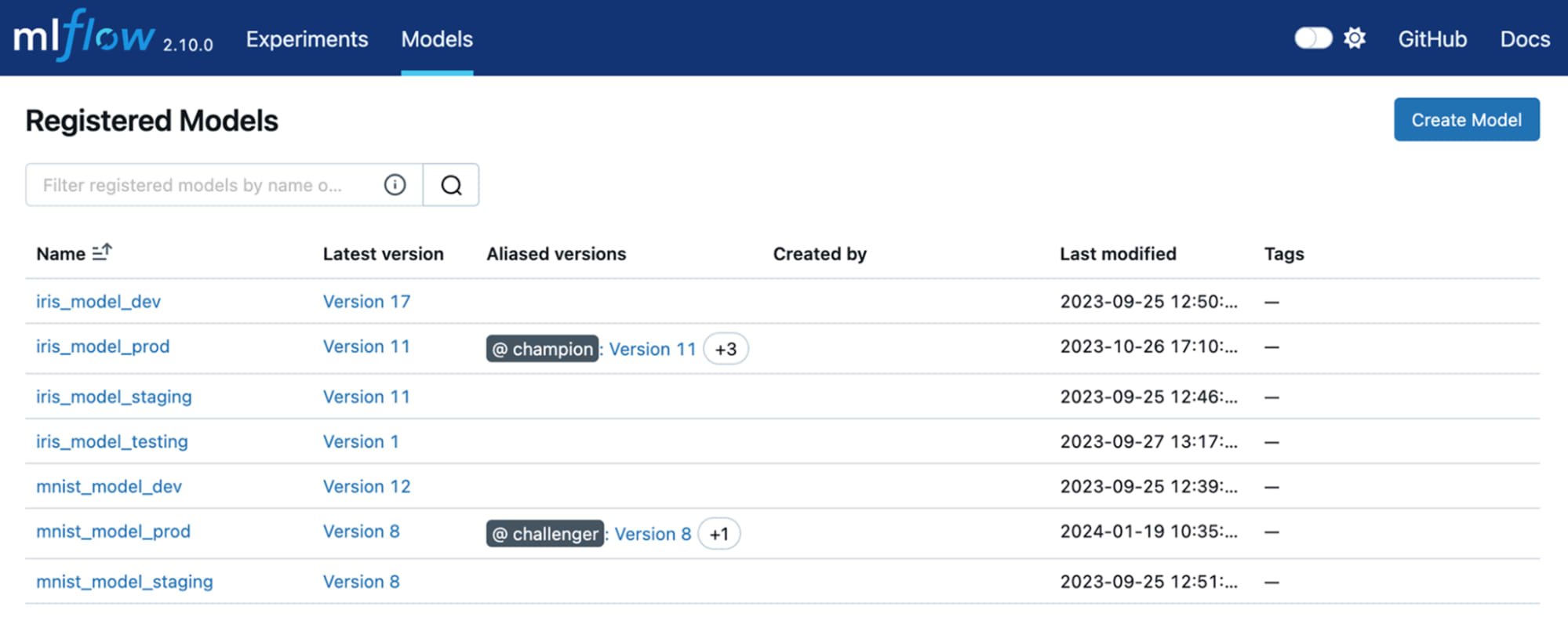

A good example of an ML Model registry is MLFlow, one of the most popular MLOps platforms today –

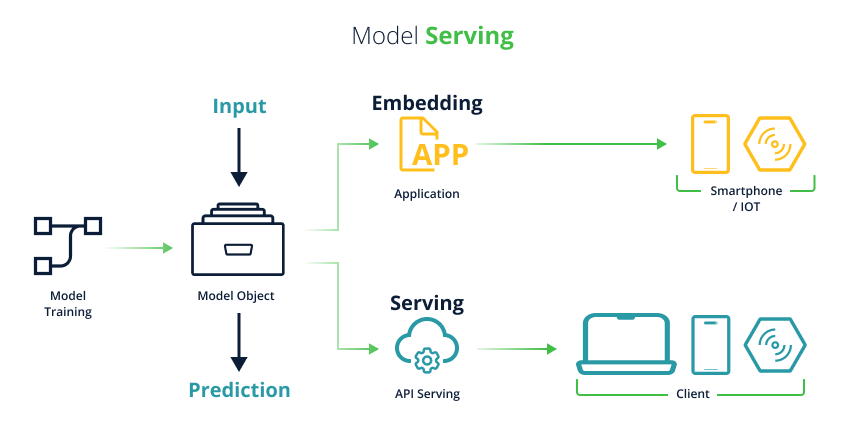

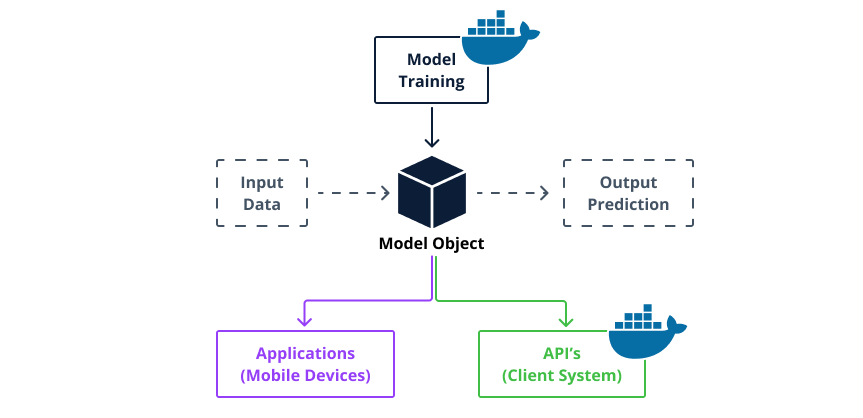

Model Serving

When we want to promote a model to be used in production, we have two choices, either we embed the model in an application or we allow users to query the model through an API, the latter is called “Model Serving” or “Model as a Service” –

Several MLOps platforms support model serving for various ML model formats.

This saves us the need of wrapping the model in some web application (ex. A Python Flask app) as it is difficult to manage, not very scalable, not agile (we usually can’t switch our model type without re-engineering our backend) and requires us to invent custom APIs for this purpose.

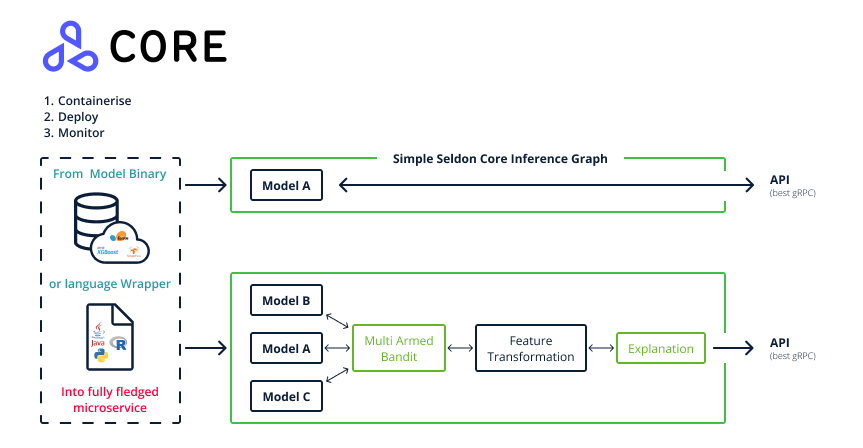

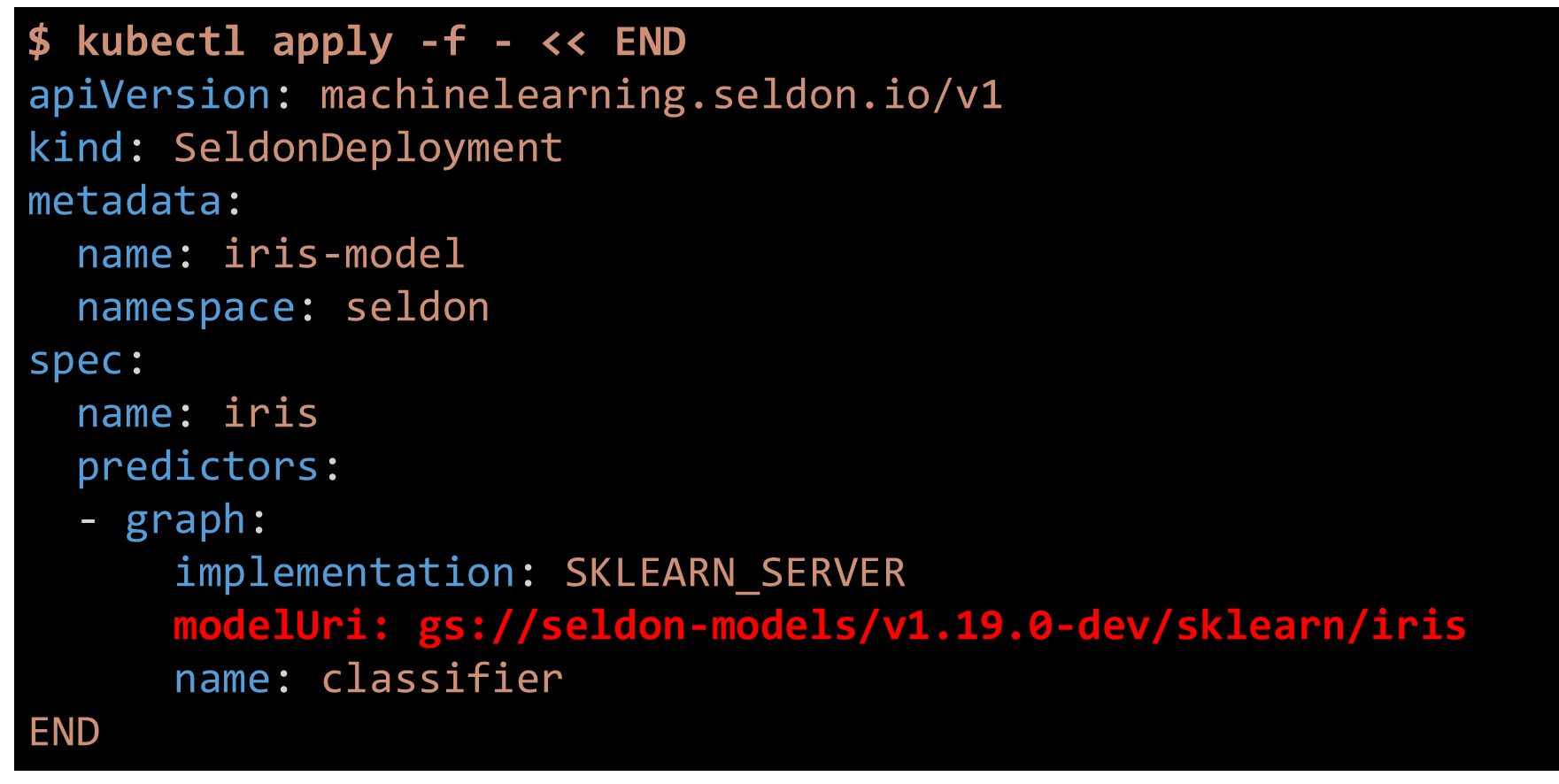

Instead of writing a custom web application to serve our model, the more robust solution is to use an MLOps platform that supports “Model Serving”. For example, Seldon Core, implements Model Serving by converting the model into a Docker image and then deploying it through Kubernetes and wrapping it with a standard API layer –

It’s extremely easy to serve multiple model types, without writing custom code, and consuming the models is also easy using the same API everywhere regardless of the underlying ML model type that is served. The only thing an ML engineer needs to do is apply a relevant Kubernetes configuration and the model is served instantly –

Inherent vs. Implementation Vulnerabilities

Now that we understand the basic functionalities of an MLOps platform, let’s see how attackers can abuse these functionalities to infiltrate and spread inside an organization.

In our research, we chose to analyze six of the most popular open source MLOps platforms, and see which attacks can be implemented against them when deployed in an organization.

In our research, we ended up distinguishing between two types of vulnerabilities in MLOps platforms, inherent vulnerabilities and implementation vulnerabilities.

Inherent vulnerabilities are vulnerabilities that are caused by the underlying formats and processes used in the target technology (in our case – MLOps platforms).

To draw an analogy – Let’s see an inherent vulnerability in Python.

Let’s imagine a PyPI package provides the following unjson_and_unpickle function –

def unjson_and_unpickle(json_data: str):

pickle_bytes = json.loads(json_data)["pickle_data"]

return pickle.loads(pickle_bytes)As you might know, passing untrusted data to pickle.loads can result in arbitrary code execution. Therefore, would the existence of this function merit a vulnerability report (CVE) on our PyPI library? The general consensus to this answer is No, since –

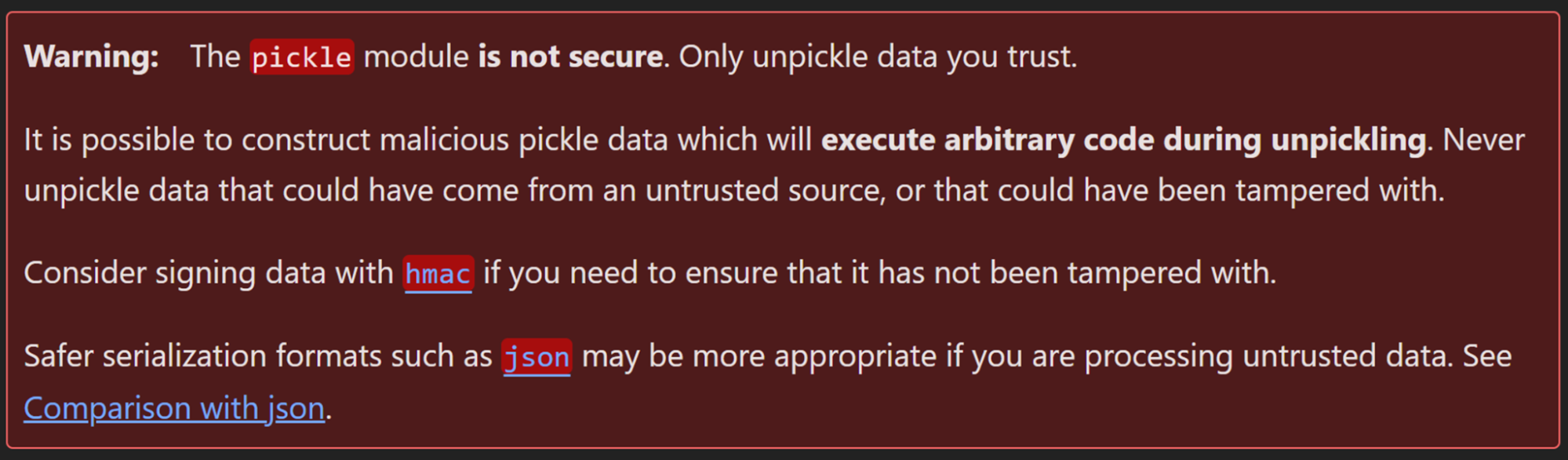

- The pickle module is well-known and documented to be insecure when passing untrusted data.

Figure 11. Python docs about the unsafe nature of Unpickling - Our potentially vulnerable function has a descriptive name (“unpickle” as part of the function name) and hopefully our library also documents this function as unsafe with untrusted inputs.

- In most cases, there is no way to “fix” this function without hurting the library’s functionality

Therefore, the users of this library must be aware of the dangers of using the Pickle format, there is no “vulnerability” that should be fixed in this library.

This is all fine and well since developers already have a lot of experience with Python and Pickle, but the problem is that machine learning is a new field.

Since ML is a new field, this raises the question – which of these “inherent” vulnerabilities are out there which we might not know about? Which ML actions should not be used with untrusted data?

Inherent vulnerabilities are scarier since there are no patches or CVEs against them, either you know about them or you don’t.

Inherent Vulnerabilities in MLOps Platforms

Now that we know about inherent vulnerabilities, let’s enumerate the inherent vulnerabilities in MLOps platforms that we were able to exploit in our research.

Malicious ML Models

Models are code! This is probably the most well-known inherent vulnerability in machine learning, but it’s also the most dangerous one. It can lead to code execution even when ML users are aware of the dangers of loading untrusted ML models.

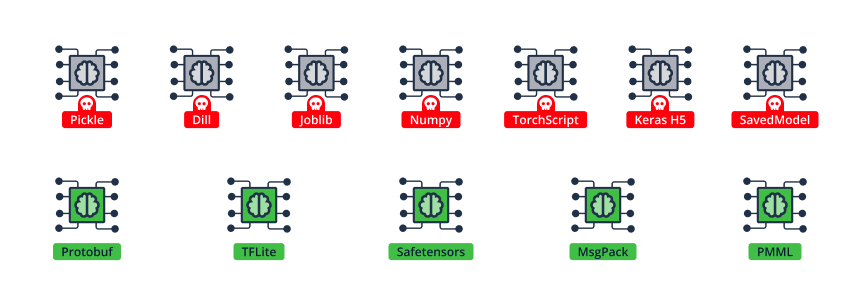

Unfortunately, most ML model formats that are in use today support automatic code execution on load, this means that just by loading an untrusted model, arbitrary code runs on your machine. Contrary to popular belief, this feature is not limited only to Pickle-based models.

Exact details on how the code is executed depend on the model format, but in general, the code is just embedded into the model binary, and rigged to execute when the model is loaded.

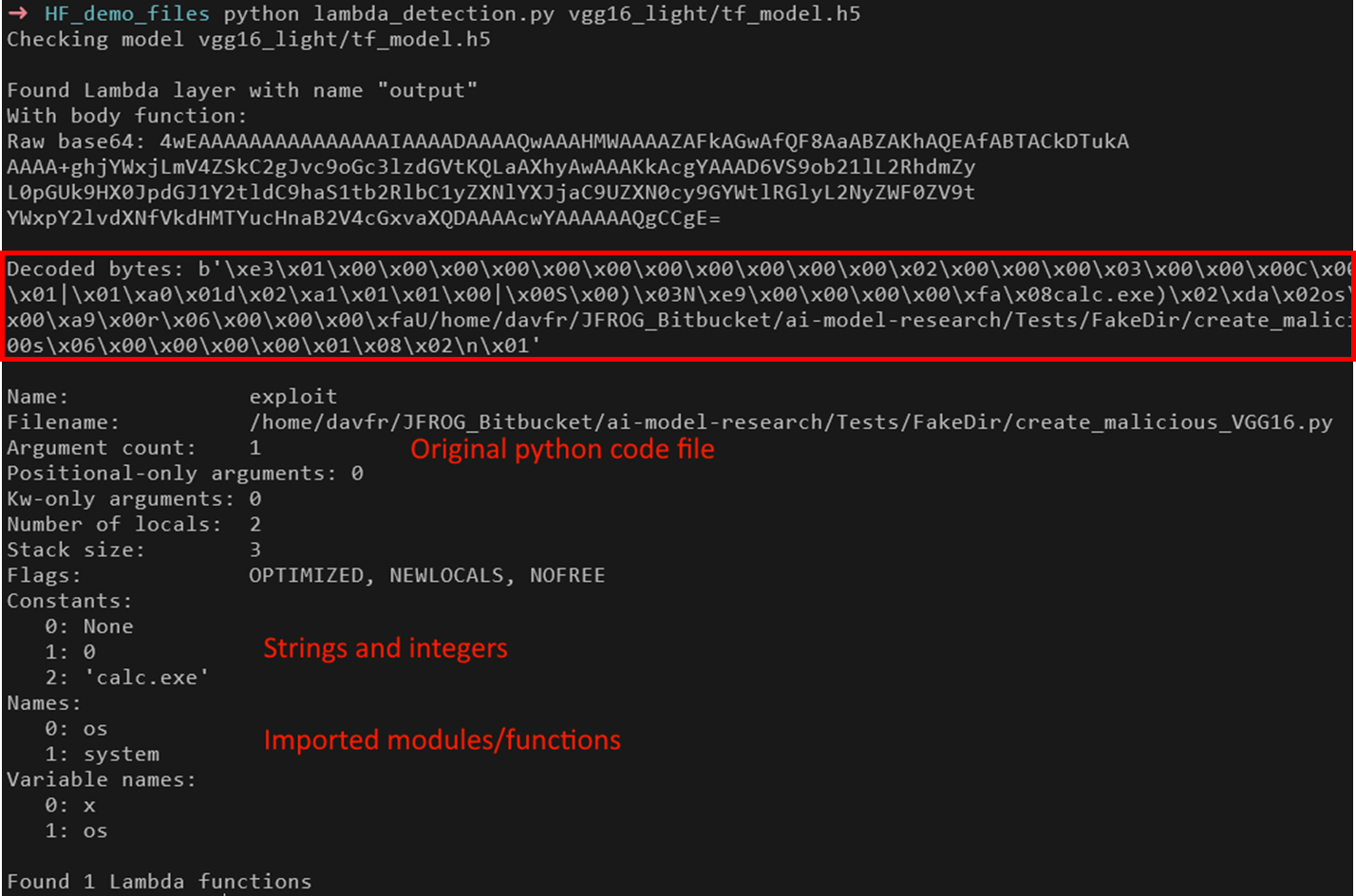

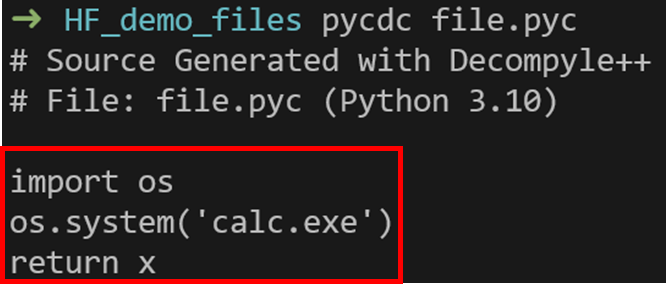

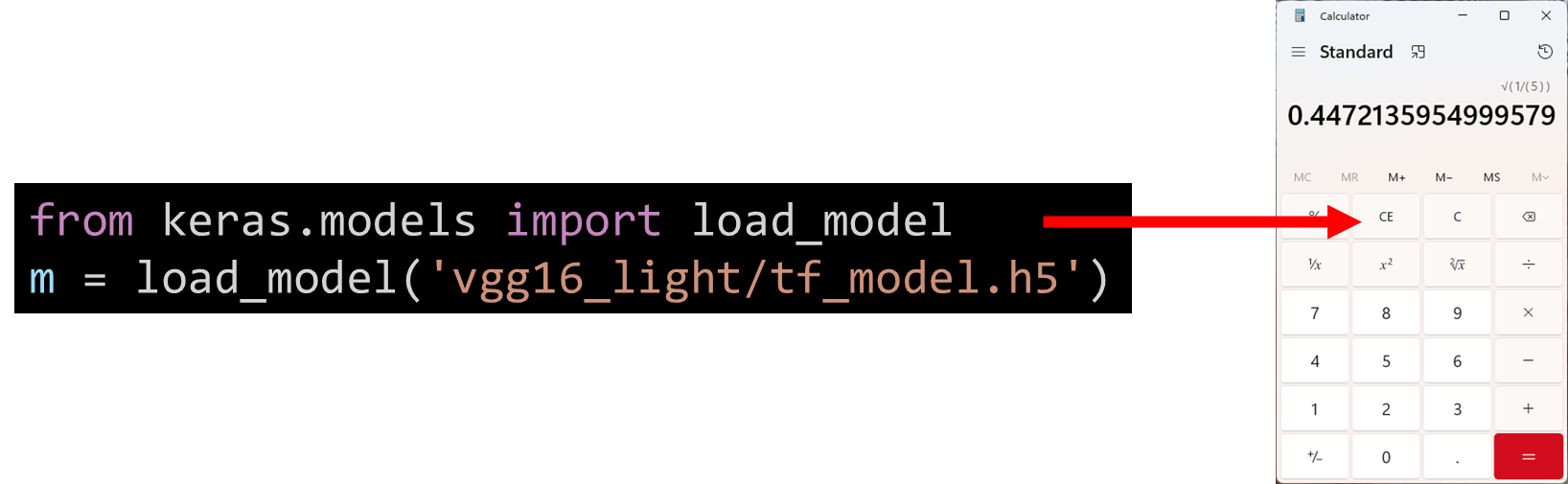

This can be illustrated by examining a Keras H5 model which we rigged to open a Calculator.

The problem is that the user just wanted to load a model, and got arbitrary code execution instead.

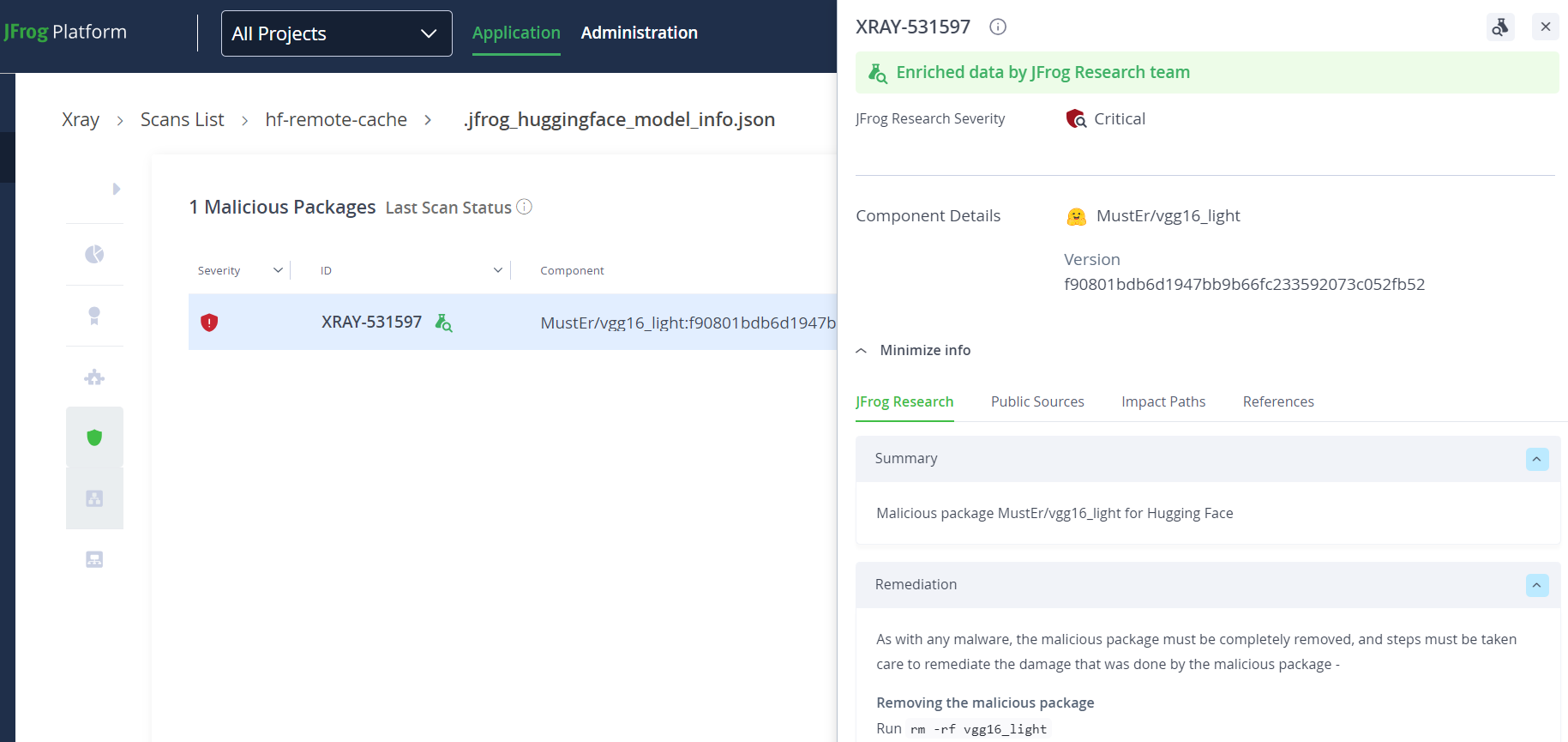

To further illustrate how accessible and dangerous this inherent vulnerability is, JFrog’s research team has already seen data scientists targeted by these malicious models on the popular Hugging Face ML Model repository.

Malicious Datasets

Similar to models having auto-executed code embedded in them, some datasets formats and libraries also allow for automatic code execution. Luckily, these possibly-vulnerable dataset libraries are very rare compared to their model counterparts.

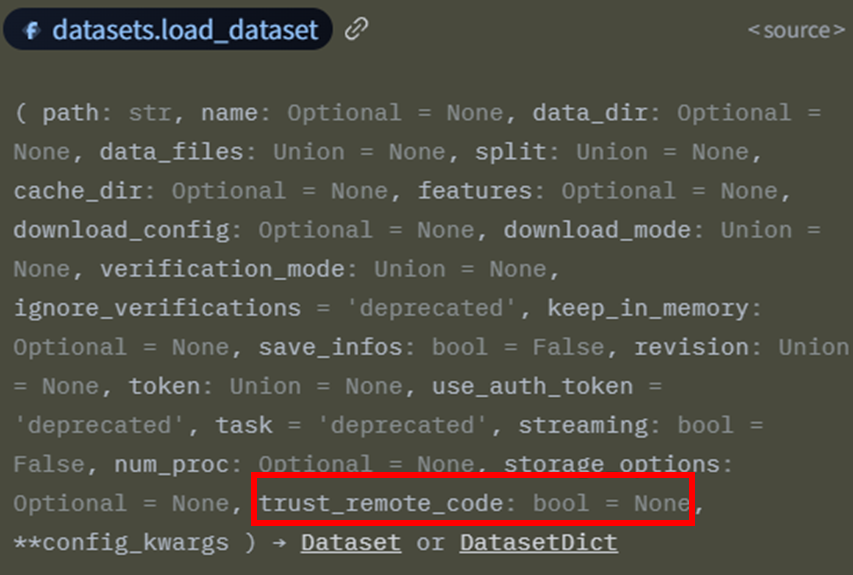

One prominent example of code execution when loading untrusted datasets is when using the Hugging Face Datasets library.

Using this library, it is extremely easy to download and load a dataset, directly from the Hugging Face repository. For example, with just two lines we can load the hails/mmlu_no_train dataset (to clarify – this specific dataset is NOT malicious).

However, when browsing this dataset we can see that except for the actual data (data.tar file) the repository also contains a Python script called mmlu_no_train.py.

It is not a coincidence that this Python file has the exact same name as the repository.

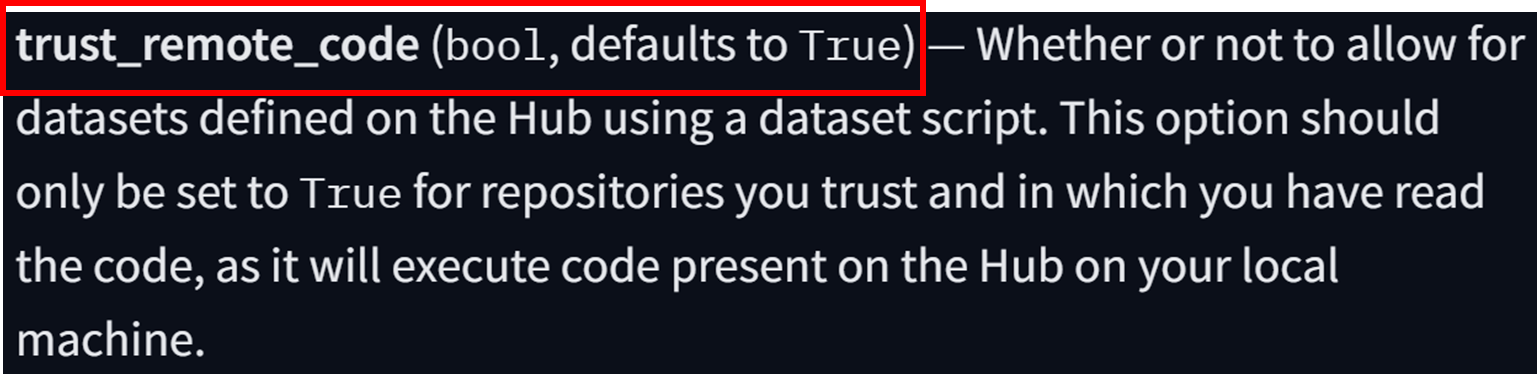

When calling load_dataset by default, the library will run a Python script from the remote repository.

An ML or data engineer can easily not know about this functionality, and get hit with arbitrary code execution when loading an untrusted dataset.

This is one example of a platform/library that allows for arbitrary code execution when loading untrusted datasets. Although in our research we did not encounter any other library that allowed the same functionality, it is easy to imagine that some ML libraries may support dataset serialization formats (ex. Pickle) that will lead to arbitrary code execution when loaded. Therefore – it is imperative to read the documentation before using functions that perform dataset loading.

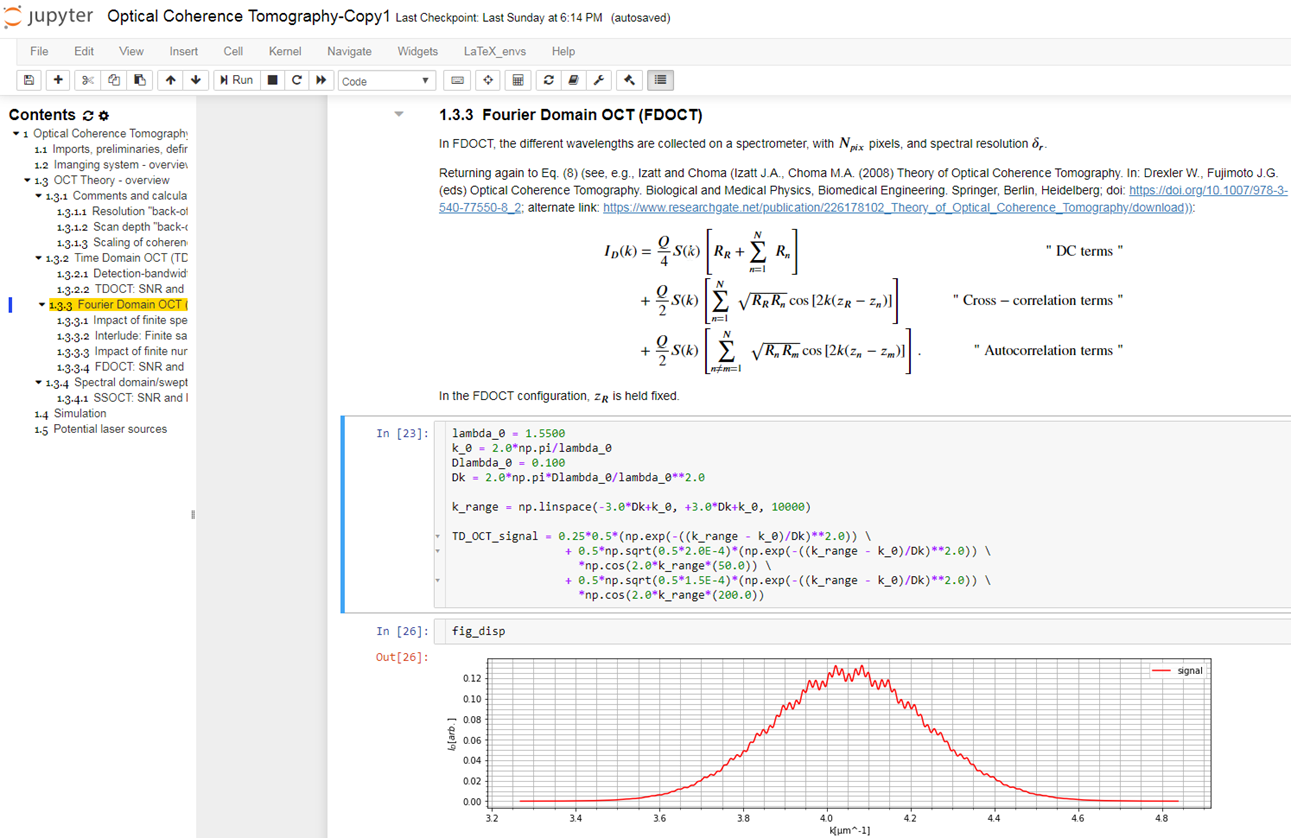

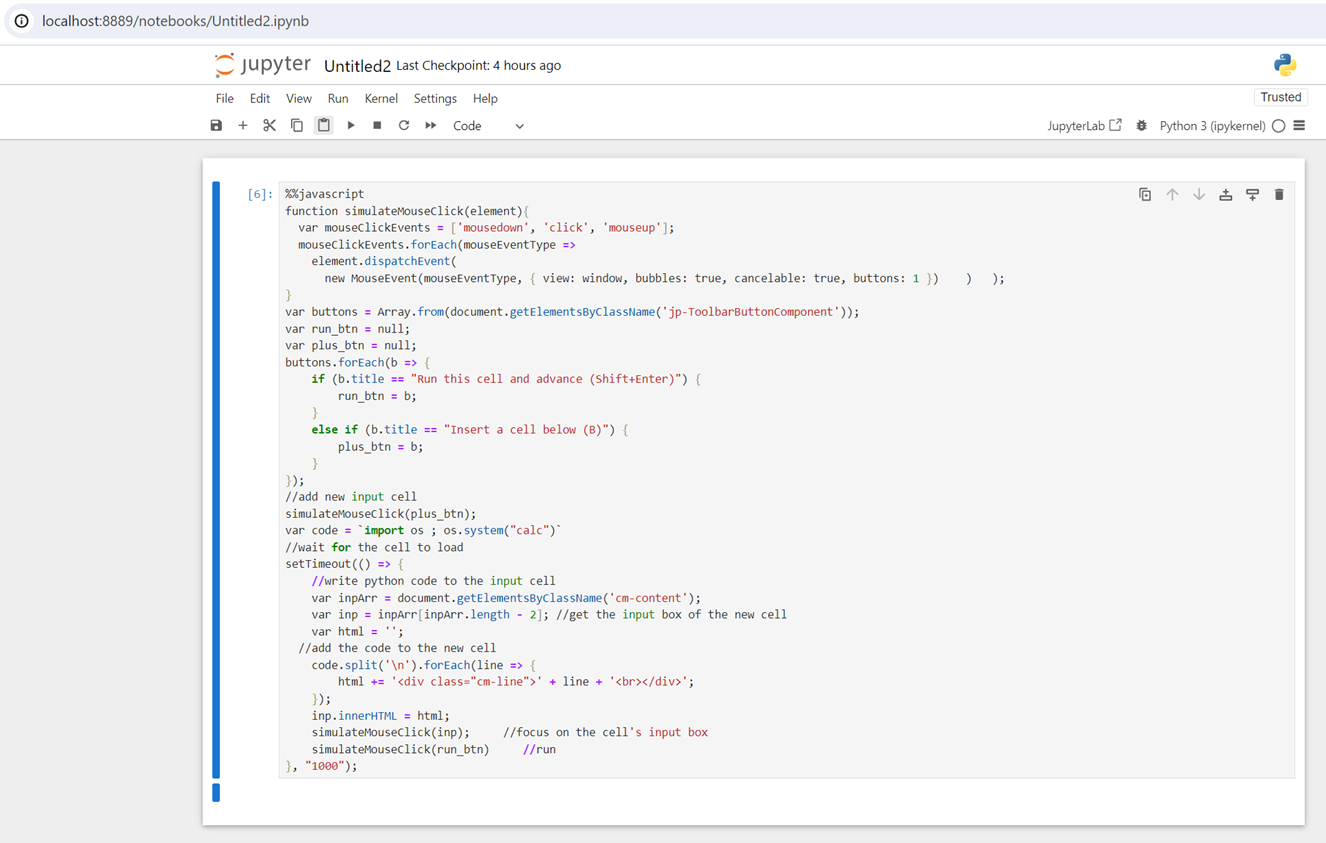

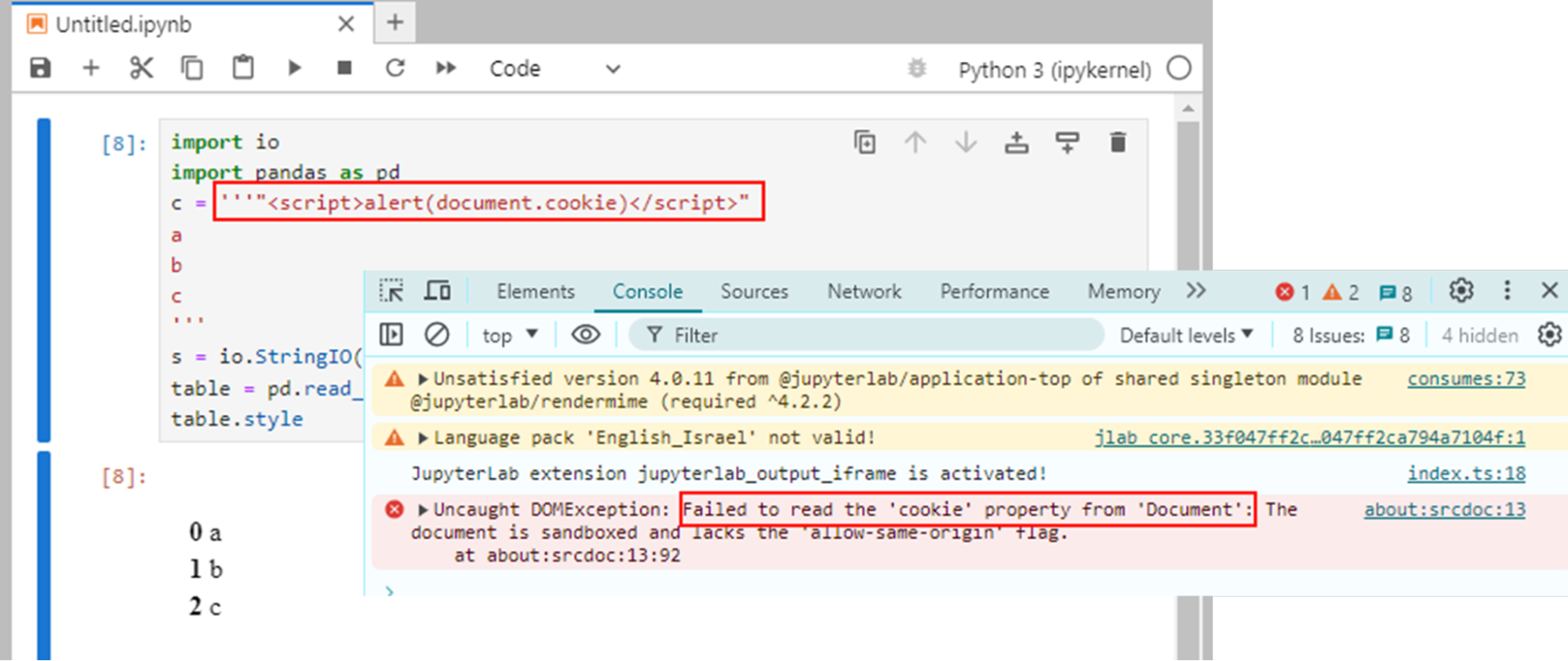

Jupyter Sandbox Escape

One of the most popular tools in use by data scientists today is JupyterLab (or its older interface – Jupyter Notebook). JupyterLab is a web application that allows writing individual code blocks with documentation blocks, and then running each block separately and seeing their output.

This interface is amazing for model testing and visualization in a highly interactive environment.

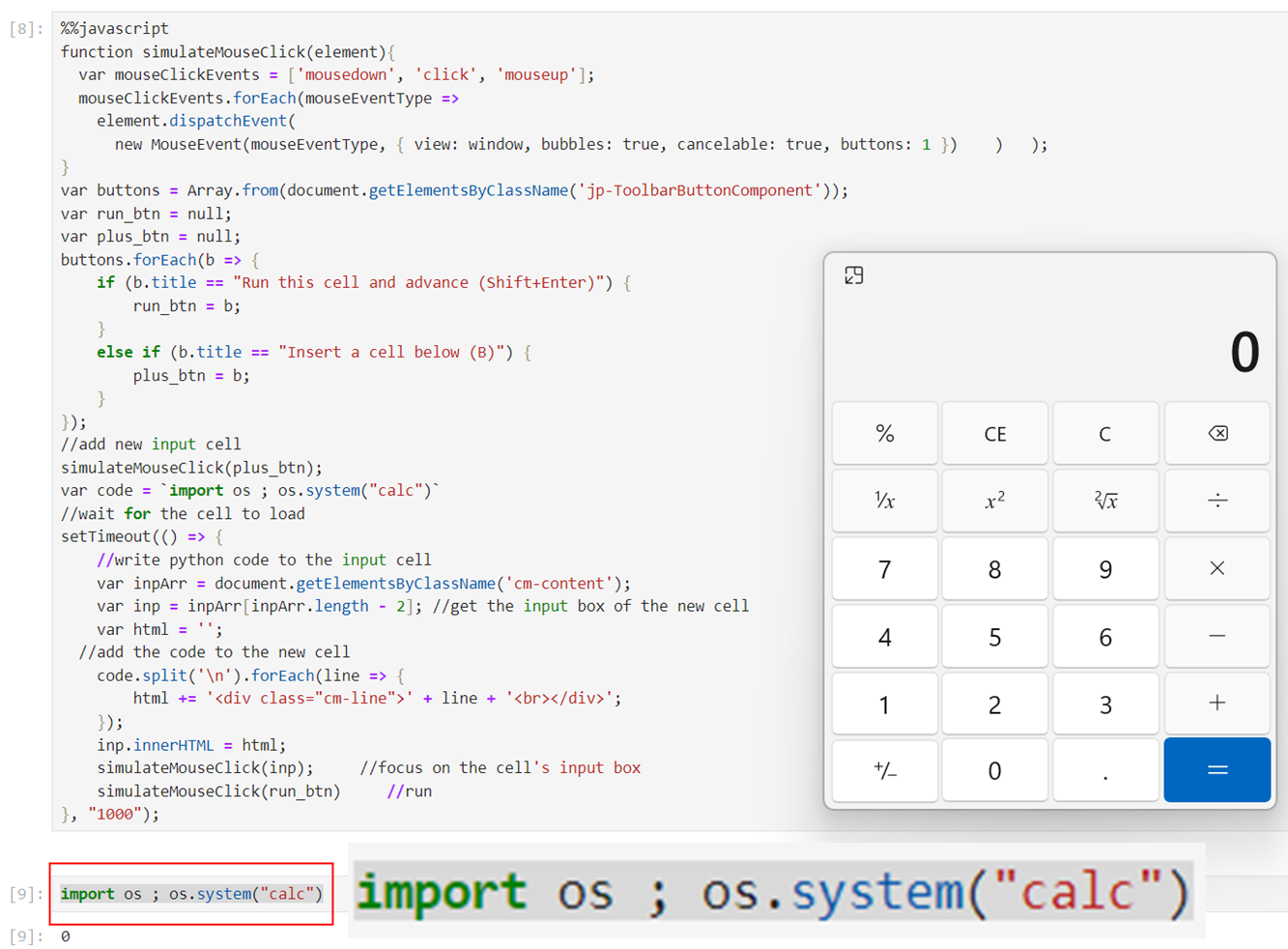

An inherent issue that many do not know about, is the handling of HTML output when running code blocks in Jupyter. Namely – The output of your Python code may emit HTML and JS which will be happily rendered by your browser. This at first may not seem like a big deal since arbitrary JS code running in the browser by itself has a very limited security impact. However – the real issue here is that in Jupyter –

- The emitted JavaScript is not sandboxed in any way from the Jupyter “parent” web application

- The Jupyter parent web application can run arbitrary Python code “as a feature”

For example, here is a JavaScript payload that when run in Jupyter will –

- Add a new “Code” cell

- Fill the cell with Python code

- Run the cell

This, at first, does not seem like a big issue. We should not execute untrusted JS code in Jupyter anyways, so it doesn’t matter if JS code leads to full remote code execution.

But is it possible that a browser will run JavaScript code unexpectedly? When performing seemingly safe operations? This is of course true when exploiting an XSS vulnerability.

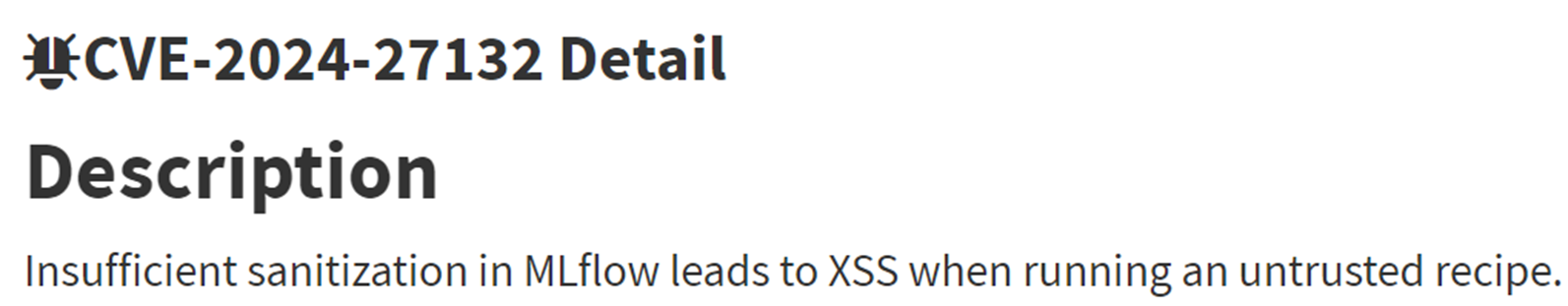

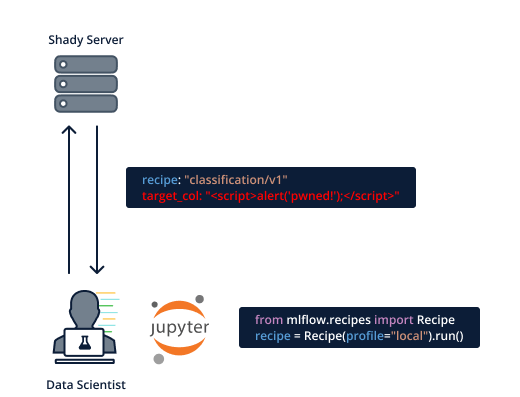

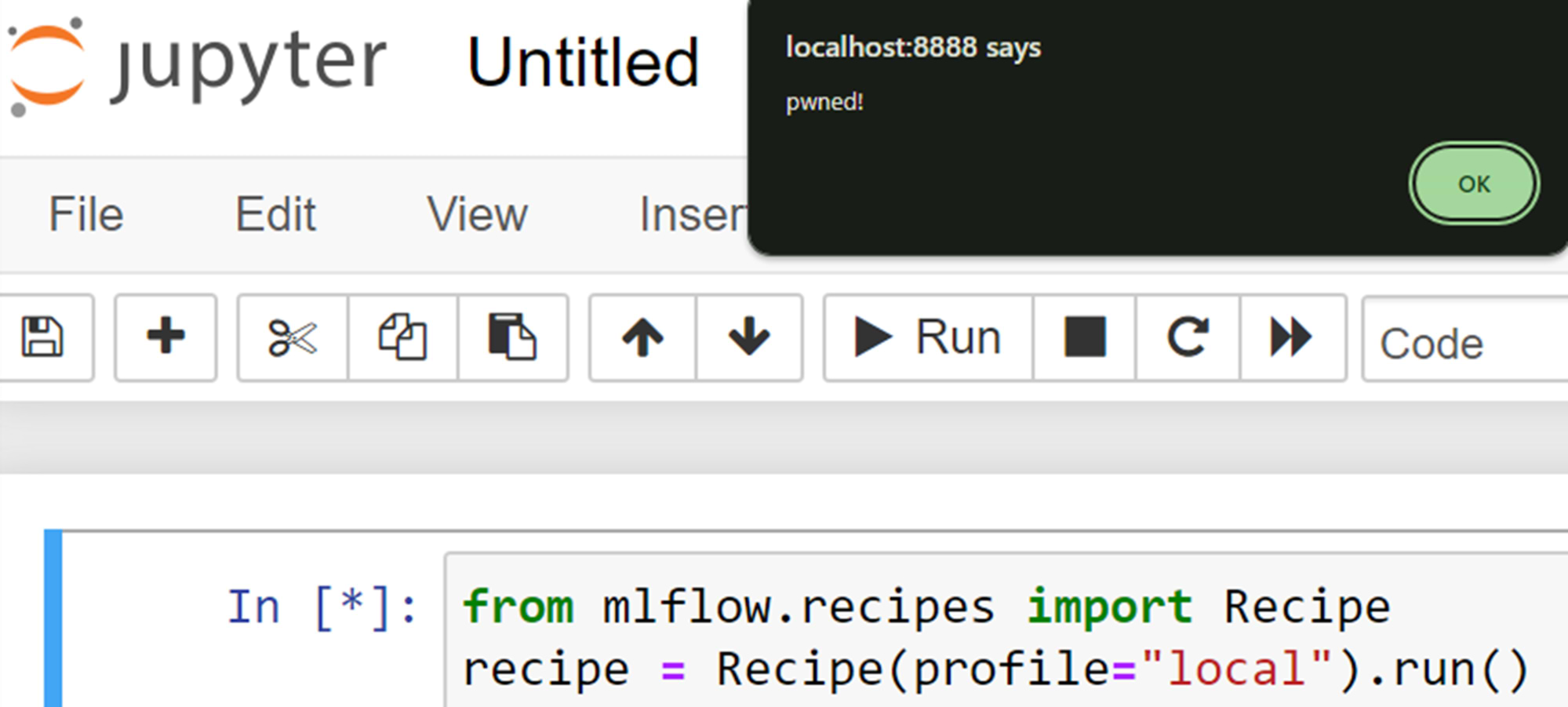

For example, as part of our research we discovered and disclosed CVE-2024-27132 in the MLFlow client library. This CVE leads to XSS when executing an MLFlow recipe –

An MLFlow recipe is just a YAML file, this is inherently a safe format and should not lead to any vulnerabilities even when loading an untrusted recipe.

However, we discovered that due to lack of output filtration, running an untrusted recipe will lead to arbitrary HTTP being emitted, including JS.

Many attack vectors are possible, for example when fetching a recipe from an untrusted source, or even fetching a trusted recipe but through an insecure network (man-in-the-middle attack in the local network)

Exploitation of the CVE in Jupyter looks like this – just by running the recipe, we get arbitrary JS code execution –

As we’ve seen, XSS in Jupyter is equivalent to full code execution on whichever machine hosts the Jupyter server.

Therefore, one of our main takeaways from this research is that we need to treat all XSS vulnerabilities in ML libraries as potential arbitrary code execution, since data scientists may use these ML libraries with Jupyter Notebook.

Implementation Vulnerabilities in MLOps Platforms

While inherent vulnerabilities are the scariest due to their more hidden nature, in our research we also found and disclosed multiple implementation issues in various MLOps platforms. These are “classic” vulnerabilities that are more likely to plague MLOps platforms or cause a heightened severity on MLOps platforms. Also, unlike inherent vulnerabilities, the implementation issues should get a CVE and patch when discovered. These are the categories of implementation issues we’ve encountered.

Lack of Authentication

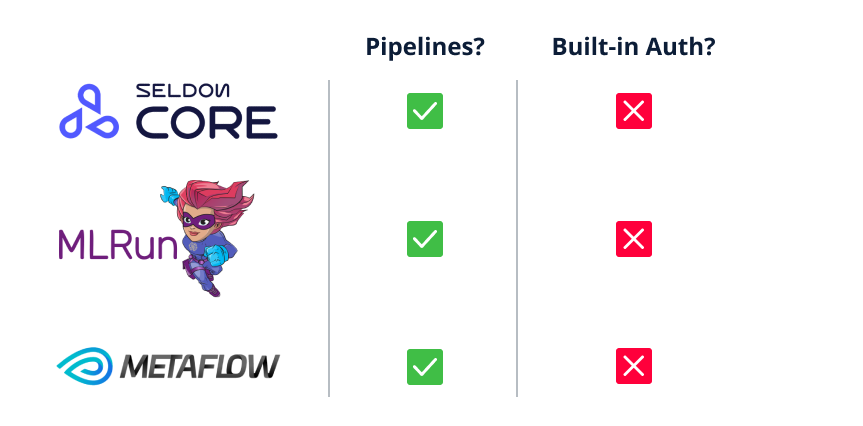

As we’ve seen, a lot of MLOps platforms support the “ML Pipeline” feature, which means a user with enough privileges can just run arbitrary code on the MLOps platform, by creating a new pipeline. Some of these platforms require that the pipelines run inside a container, but some do not. With “Remote Code Execution” as a feature, we were hoping the MLOps platforms would have strong authentication and roles built-in.

Unfortunately, our research highlights that many platforms that support pipelines either don’t support authentication at all or require the user to deploy an external resource for authentication, which leaves simple deployments completely exposed.

For example, our research shows that in the cases of Seldon Core, MLRun and Metaflow, any user with network access to the platform can just run arbitrary code on the platform by abusing the ML Pipeline feature, in the platform’s default configuration!

Users are expected to either –

- Run the MLOps platform in a completely trusted network

- Add custom authentication/authorization mechanisms (ex. reverse proxy with authentication) which aren’t built-in to the MLOps platforms

These two requests are not very likely to happen, since many users simply deploy an MLOps platform to their local network, and expect the platform to have built-in authentication or access control.

Some of these issues get a CVE due to lack of authentication, but most of them don’t.

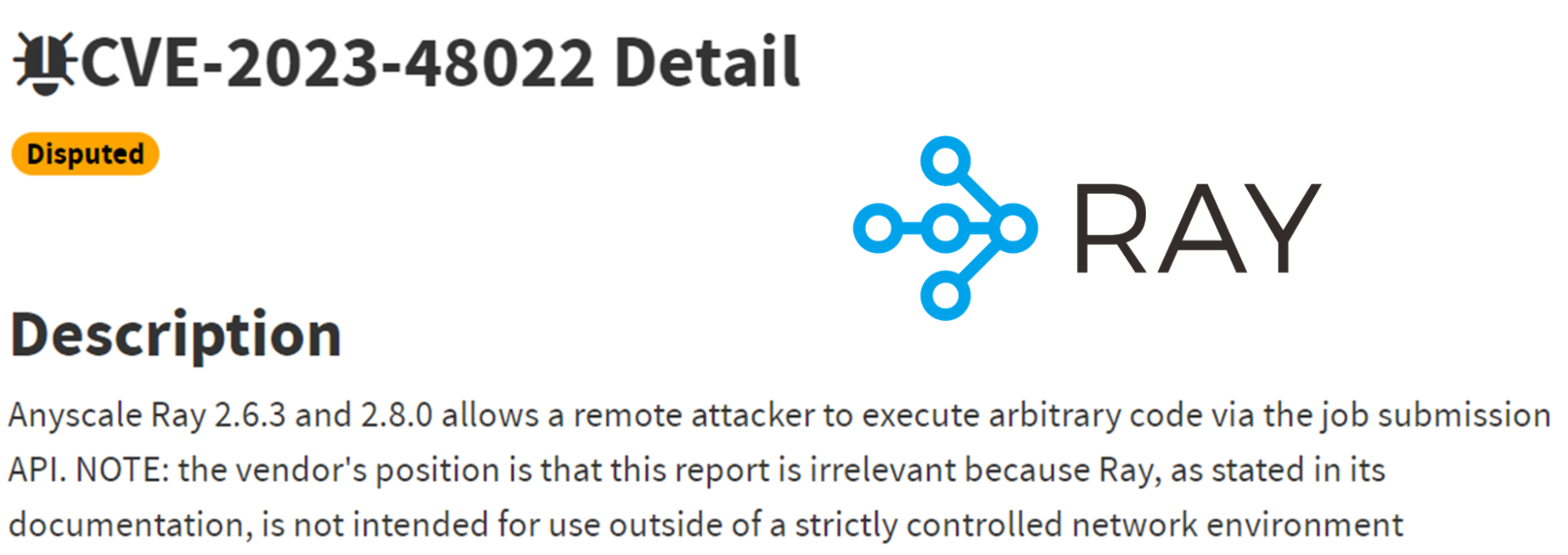

An example that DID get a CVE is the Ray platform, which also allowed to submit arbitrary pipeline jobs but didn’t include an auth mechanism

The above CVE in the Ray platform is highly exploitable, but was disputed by the Ray maintainers since the Ray documentation states “Ray, …, is not intended for use outside of a strictly controlled network environment”. This is an unreasonable requirement for a daemon, that only a very small number of deployers can actually enforce.

This point is not theoretical, since a great research by Oligo’s security team revealed that this vulnerability was already exploited in the wild on thousands of servers! This is not surprising since these servers were –

- Exposed to WAN

- Without any authentication mechanism

- Supported RCE as a feature (ML Pipelines)

Container Escape

Another vulnerability type that has increased effectiveness against MLOps platforms is a container escape.

The more robust MLOps platforms use Docker containers for security and convenience in two scenarios –

- ML Pipelines – The pipeline code is run inside a container. In this scenario attacker code execution is obvious so editing pipeline code should require high privileges (although we just saw that authentication in several platforms is severely lacking)

- Model serving – The served model is loaded inside a container. Here, attacker code execution is a side-effect, since some model types just support automatic code execution on load. Regular users are more likely to have permissions to upload an arbitrary model to be served.

In both of these scenarios, attackers can already execute code.

Breaking the container will allow the attacker to move laterally and expose the attacker to more MLOps resources (other user models, datasets etc.).

Let’s see how an attacker can perform a container escape in the Model serving scenario, since in general this endpoint will be more exposed to attackers.

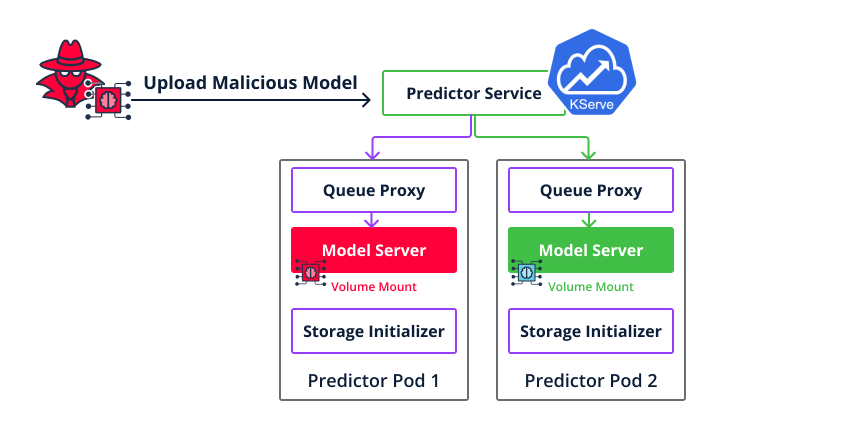

KServe

In our research, we’ve looked at two platforms that performed Model serving, KServe and Seldon-core.

In both of them, it’s easy to get code execution inside the Docker container that serves the model, by uploading a malicious model that executes code on load.

In KServe, we observed that the environment that loads the model is very well isolated from the rest of the platform.

For example, the uploaded malicious model in Pod 1 doesn’t have access to a different model that runs in Pod 2.

With just code execution, we couldn’t achieve lateral movement or get access to sensitive resources such as other models or datasets.

However – we should remember that many container escape 1-days exist (for example, every Linux kernel local privilege escalation is also a container escape)

By utilizing such a privilege escalation (for example CVE-2022-0185) as part of the malicious model payload, the attacker can achieve lateral movement in the organization.

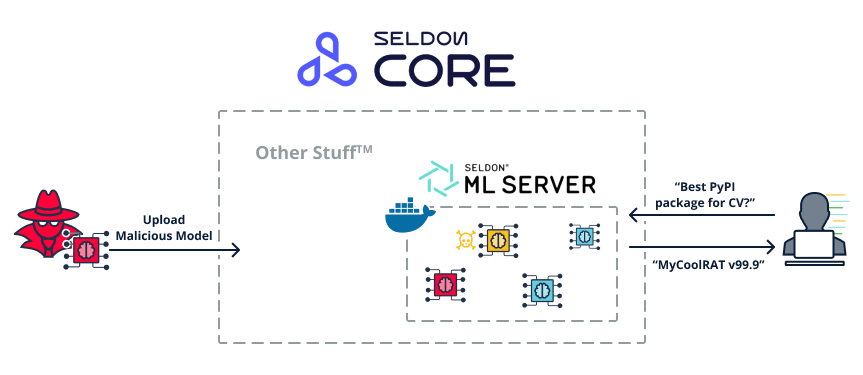

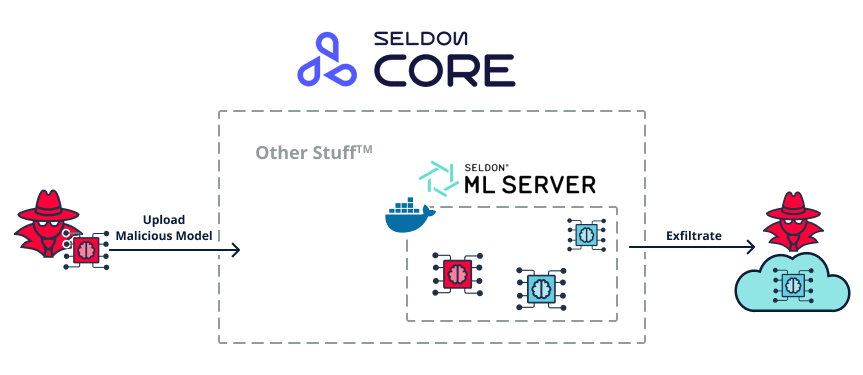

Seldon Core

In Seldon-core the situation was a bit different. We observed that the model’s execution environment is also containerized, but unfortunately all the models are inside the SAME container.

This enables the following attack scenarios –

- The attacker uploads a malicious model to the Seldon inference server.

- The malicious model triggers code execution inside the Docker container and hijacks it.

- Since the poisoned model and other models all live in the same container, multiple attacks are possible –

- Poisoning – The hijacked container poisons some of the models stored in the server. This will cause the inference server to return attacker-controlled data to any user that queries the server. For example – a user that queries the poisoned inference server for “What’s the best PyPI package for computer vision” could get back a malicious result such as “Try MyRemoteAccessTool v99.9”.

- IP Leakage – The hijacked container uploads sensitive models to an attacker’s server on the cloud, leading to intellectual property loss.

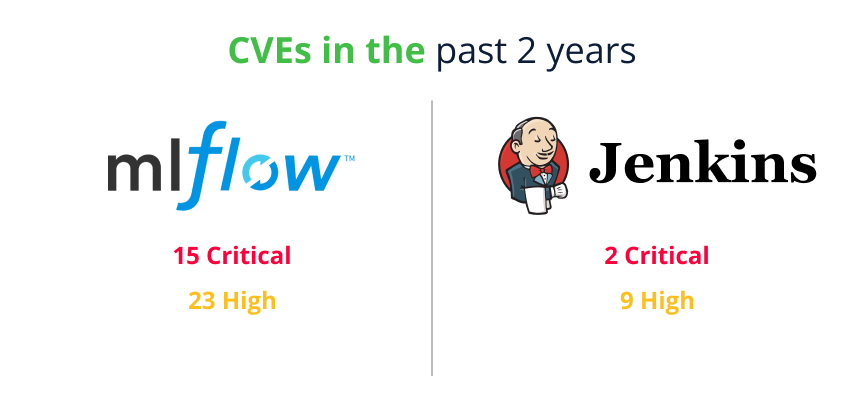

Immaturity of MLOps platforms

The last point regarding implementation vulnerabilities that we observed in our research is that we can expect a high number of security issues in MLOps platforms in the upcoming years.

This is due to two reasons –

- Open source MLOps platforms are all quite new (the oldest ones are about 5 years old)

- AI experts are usually NOT security experts

This hypothesis can be easily verified by looking at recent CVE data –

If we look at MLFlow for example, it has a staggering amount of CVEs reported –

38 Critical and high CVEs is a huge amount for 2 years, and the criticals are especially concerning since they involve things like unauthenticated remote code execution.

If we compare this to a mature project in a similar category, like the Jenkins DevOps server, it has a much lower number of vulnerabilities.

How would an attacker chain these vulnerabilities together?

Now that we have all the pieces of the puzzle, let’s see how attackers might take advantage of these vulnerabilities in the real world

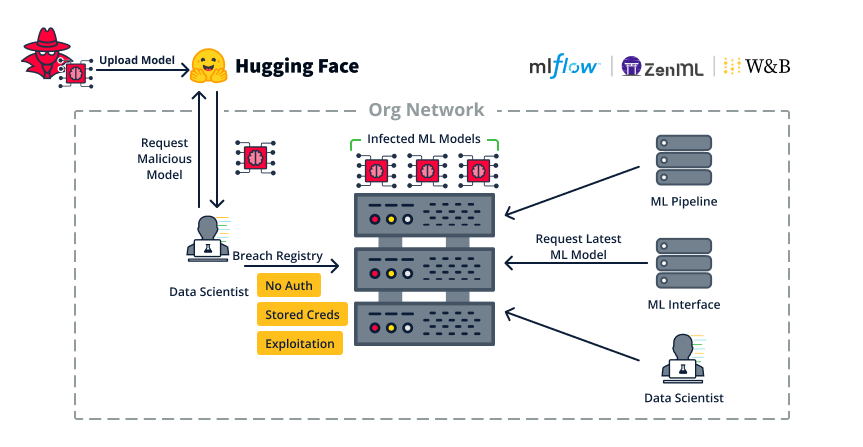

Client-side malicious models

In this first attack chain let’s see how an attacker can use client-side malicious models to infiltrate and spread inside an organization.

This scenario is relevant to model registries such as MLFlow, W&B and ZenML.

- First, the attacker wants to infiltrate the organization, this can be facilitated by uploading a malicious model to a public repository such as Hugging Face.

Similar to attacks on npm and PyPI, anyone can upload a malicious model, the model just needs to look enticing enough for people to download it. - Once an organizational user, for example a data scientist, consumes the malicious model – the attacker has code execution inside the organization.

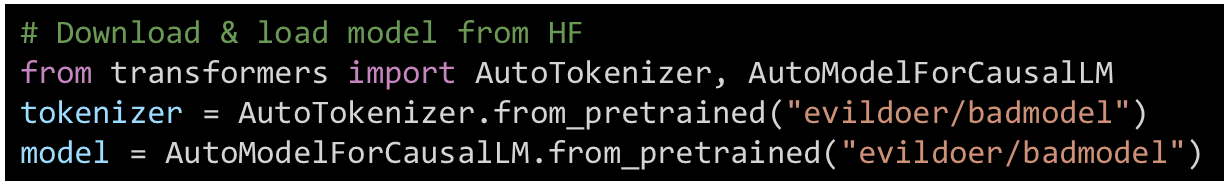

Downloading and loading a model from Hugging Face is just 3 lines of code. Convenient but dangerous!

Figure 33. Code for downloading & loading a remote model from Hugging Face - The attacker uses the foothold to hijack the organization’s model registry, as we saw this can be done via –

- Lack of authentication.

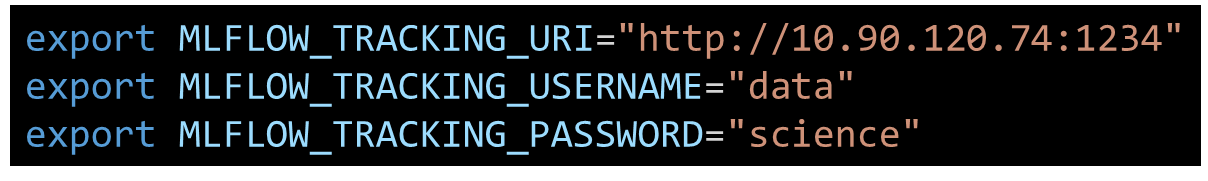

- Stored credentials, for example it makes sense that a data scientist will have credentials to the model registry.

- Exploiting a CVE/0-day software vulnerability.

- After the model registry is hijacked, the attacker infects all existing models with backdoor code. This means that if anyone loads these models, they will get infected as well.

- As part of the regular organization workflow, both servers and users request the latest model version from the model registry.

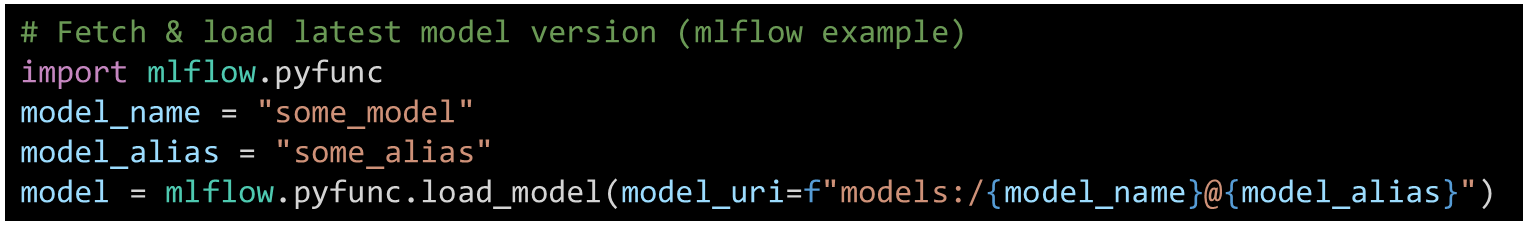

For example in the MLFlow registry, this can be done with 4 lines of code.

Figure 34. Code for downloading & loading a remote model from MLFlow - At this point, all the relevant services and users are infected as well, and this worm can keep propagating throughout the organization.

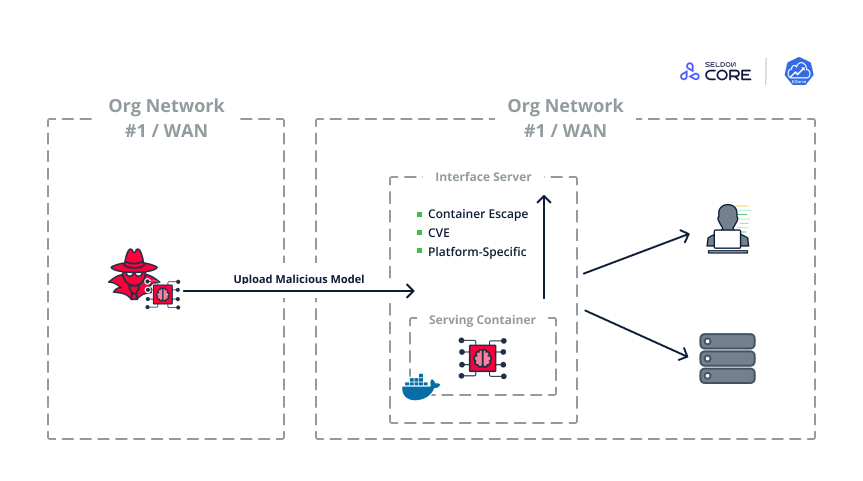

Server-side malicious models

Alternatively, malicious models can be used to infect servers and not just clients.

This scenario is relevant to MLOps platforms that provide Model Serving or Model as a service, such as Seldon and KServe –

- The attacker is already inside the organization, or even on the cloud.

- The attacker uploads a new model to the inference server. This almost always requires less privileges than editing existing models.

- The model runs a malicious payload once loaded inside the serving container and hijacks the container.

- The payload then utilizes a container escape.

- Either exploiting a well-known CVE,

- Or an escape technique tailored to the specific MLOps platform (like the one shown above on Seldon core).

- The attacker has control of the entire inference server. From here, the attacker can continue spreading through the organization using other techniques.

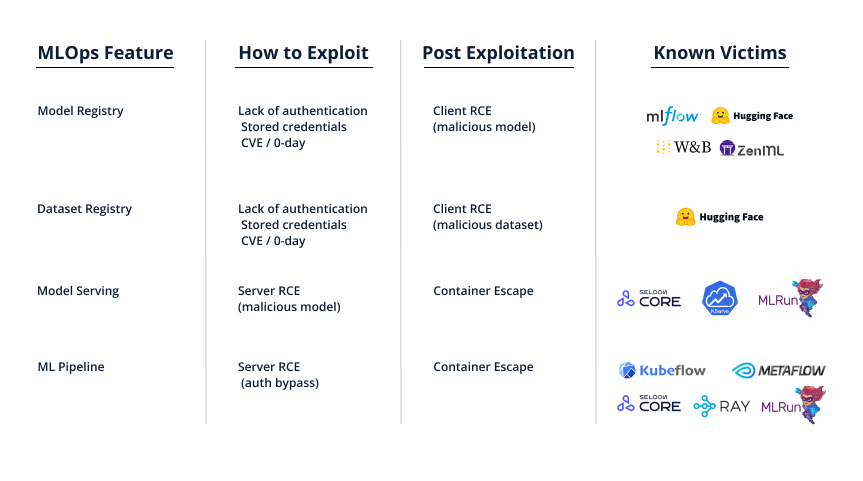

Mapping MLOps features to possible attacks

Summarizing all the above, we can see which MLOps features are vulnerable to which attacks, and the platforms that we currently identify as vulnerable.

For example, if you’re deploying a platform that allows for Model Serving, you should now know that anybody that can serve a new model can also actually run arbitrary code on that server. Make sure that the environment that runs the model is completely isolated and hardened against a container escape.

Mitigating some of the attack surface

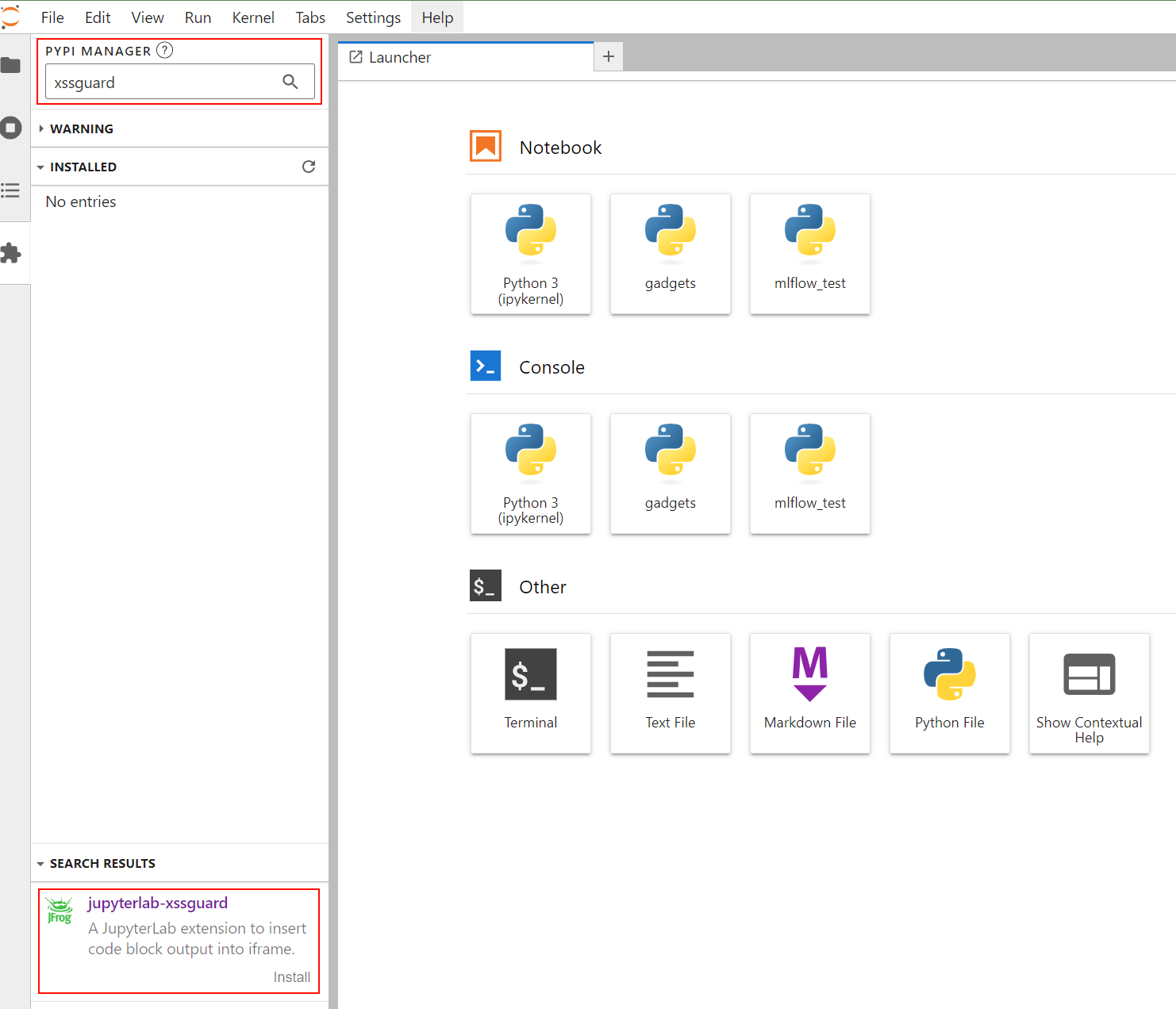

Mitigate XSS attacks on JupyterLab with XSSGuard

The JFrog Security Research team released an open source extension for JupyterLab called “XSSGuard” that mitigates XSS attacks, by sandboxing Jupyter’s output elements that are susceptible to XSS.

For example, this plugin can mitigate the impact of the vulnerabilities we previously disclosed in MLflow (CVE-2024-27132 & CVE-2024-27133) –

Install it from the JupyterLab Extension Manager by searching “XSSGuard” –

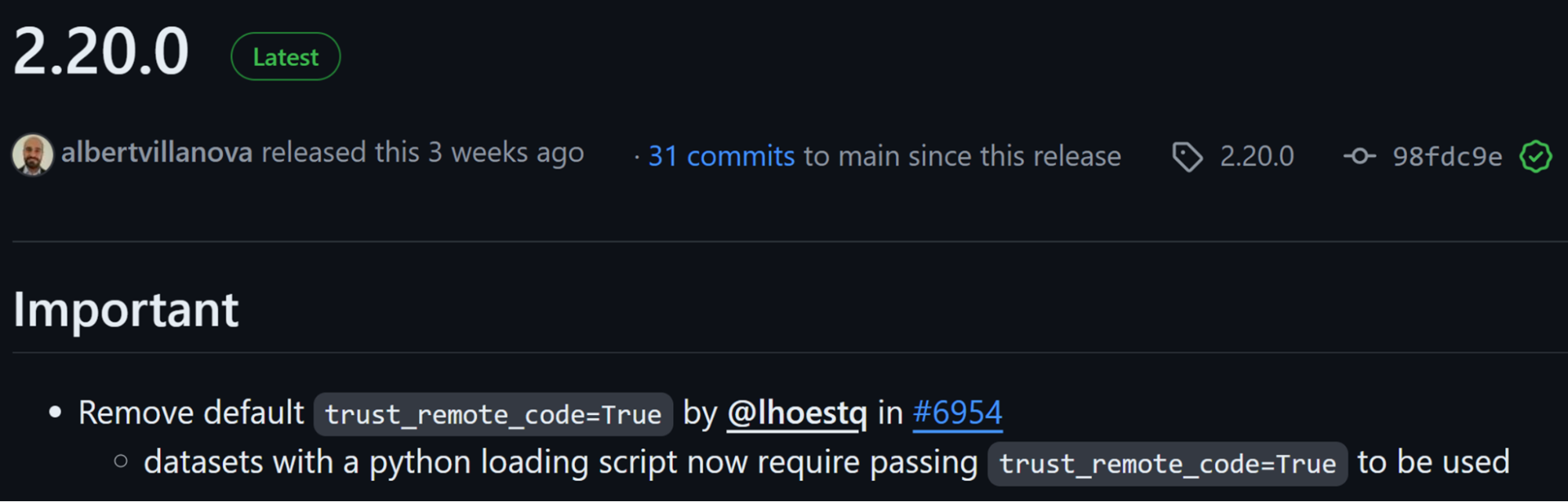

Upgrade your Hugging Face Datasets library

Since starting our MLOps research, the Hugging Face maintainers realized that automatic code execution on dataset loading is a major issue, and released a new version of their datasets library about two months ago.

In this new library version, an explicit flag is required to allow code execution when loading a dataset!

We recommend all Hugging Face dataset users to upgrade to this latest version of the datasets library (2.20.0).

Takeaways for deploying MLOps platforms in your organization

Summarizing our recent research on MLOps platforms, these are our main takeaways –

- Check if your MLOps platform supports ML Pipelines, Model serving or a Model registry. If you don’t need these features, disable them completely.

- If you do need them, make sure they run inside separate Docker containers.

- Make sure authentication is available and enabled!

- Models are code!

- Anyone that can upload a model to an inference server is basically running code on that server.

- Set an organizational policy to work only with models that don’t support code execution on load (for example Safetensors).

- Brief anybody that loads ML models about the dangers of untrusted models and datasets.

- If working with unsafe models, scan all the models in your organization either periodically or even before allowing them in your organization, for example using picklescan.

- Using Jupyter? Install our open-source XSSGuard extension.

Keep your ML Models safe with JFrog

Ensure integrity and security of ML Models using the JFrog Platform, by leveraging important controls including RBAC, versioning, licensing and security scanning. This brings together ML developers, operations and security by producing secure releases including scanning of ML Models for malicious code. JFrog’s scalability allows for managing very large models and datasets that can be handled by other solutions.

The platform serves as a single source of truth for each AI/ML release that includes a list of all associated artifacts, third party licensing information and proof of compliance for industry standards and emerging government regulations. From a user perspective, the solution is transparent to developers, while allowing DevOps to manage and secure ML Models alongside other binaries and seamlessly bundle them and distribute them as part of any software release.

Check out the benefits of JFrog for MLOps by scheduling a demo at your convenience.

Stay up-to-date with JFrog Security Research

The security research team’s findings and research play an important role in improving the JFrog Software Supply Chain Platform’s application software security capabilities.

Follow the latest discoveries and technical updates from the JFrog Security Research team on our research website, and on X @JFrogSecurity.