Container Optimized Workflow for Tectonic by CoreOS (Now Red Hat)

Following our recent blog on Artifactory’s integration with OpenShift, you can now deploy your binaries hosted on Artifactory to Tectonic, another major enterprise-ready Kubernetes platform that specializes in running containerized microservices more securely.

Artifactory integrates with Tectonic to support end-to-end binary management that overcomes the complexity of working with different software package management systems, such as Docker, npm, Conan (C++), and RPM, providing consistency to your CI/CD workflow. In this blog, we will show you how to deploy your binaries on Artifactory to the Tectonic platform.

With the increase of polyglot programming in enterprises, a substantial amount of valuable metadata is emitted during the CI process and is captured by Artifactory. One of the major advantages of leveraging this metadata (build info) is traceability from the docker-manifest all the way down to the application tier. For example, starting from the Docker build object (docker-manifest), it is easy to trace the CI job responsible for producing the application tier (such as a WAR file) that is part of the Docker image layer referenced by the docker-manifest. Artifactory achieves this by using Docker build info. Artifactory also supports multi-site replication.

Deploying Your Docker Images on Artifactory to Tectonic

Prerequisite

Validate that the Tectonic instance is active.
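One way to check this from the command line is sketched below. It assumes kubectl is already configured with the kubeconfig for your Tectonic cluster; the helper name `cluster_is_active` is ours and is not part of Tectonic or kubectl.

```shell
# Hypothetical helper: succeeds only if the API server responds
# and the cluster nodes can be listed.
cluster_is_active() {
  kubectl cluster-info >/dev/null && kubectl get nodes
}
```

Run `cluster_is_active` and check that every node reports `Ready` in the STATUS column before continuing.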

The following steps show how to deploy Docker images hosted on JFrog Artifactory as your Kubernetes Docker registry to Tectonic. In this example, we have created an example-deployment.yaml file to deploy a Docker image that is part of Artifactory’s Docker virtual repository to Tectonic.

- Create a secret with a Docker Config to hold your Artifactory authentication token in your Docker registry project, by running the following command:

kubectl create secret docker-registry rt-registrykey --docker-server=docker-virtual.artifactory.com --docker-username=${USERNAME} --docker-password=${PASSWORD} --docker-email=test@test
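To confirm what the secret holds, you can decode it again. The sketch below assumes the secret stores its payload under the `.dockerconfigjson` key, which is what recent kubectl versions produce; older versions may use a different key. The helper name `show_registry_secret` is illustrative.

```shell
# Hypothetical helper: print the Docker config stored in a docker-registry secret.
# Assumes the payload lives under the .dockerconfigjson key.
show_registry_secret() {
  kubectl get secret "${1:-rt-registrykey}" \
    --output="jsonpath={.data.\.dockerconfigjson}" | base64 --decode
}
```

Running `show_registry_secret` should print a JSON document whose `auths` entry names docker-virtual.artifactory.com. Treat the output as sensitive, since it contains your credentials.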

- Deploy the containerized microservice to Tectonic by applying example-deployment.yaml with the following command:

kubectl create -f example-deployment.yaml

The following example-deployment.yaml file is a customized template that can be edited according to your needs. Note that we have added the following references:

– The Deployment object points to the Docker image that is part of Artifactory’s Docker virtual repository.

image: docker-virtual.artifactory.com/nginx:1.12

– The ImagePullSecrets is set as follows:

    imagePullSecrets:
      - name: rt-registrykey

example-deployment.yaml sample

    apiVersion: extensions/v1beta1
    kind: Deployment
    metadata:
      name: example-deployment
      namespace: default
      labels:
        k8s-app: example
    spec:
      replicas: 3
      template:
        metadata:
          labels:
            k8s-app: example
        spec:
          containers:
            - name: nginx
              image: docker-virtual.artifactory.com/nginx:1.12
              ports:
                - name: http
                  containerPort: 80
          imagePullSecrets:
            - name: rt-registrykey

Following successful authentication, the image is pulled from the Artifactory Docker virtual repository.

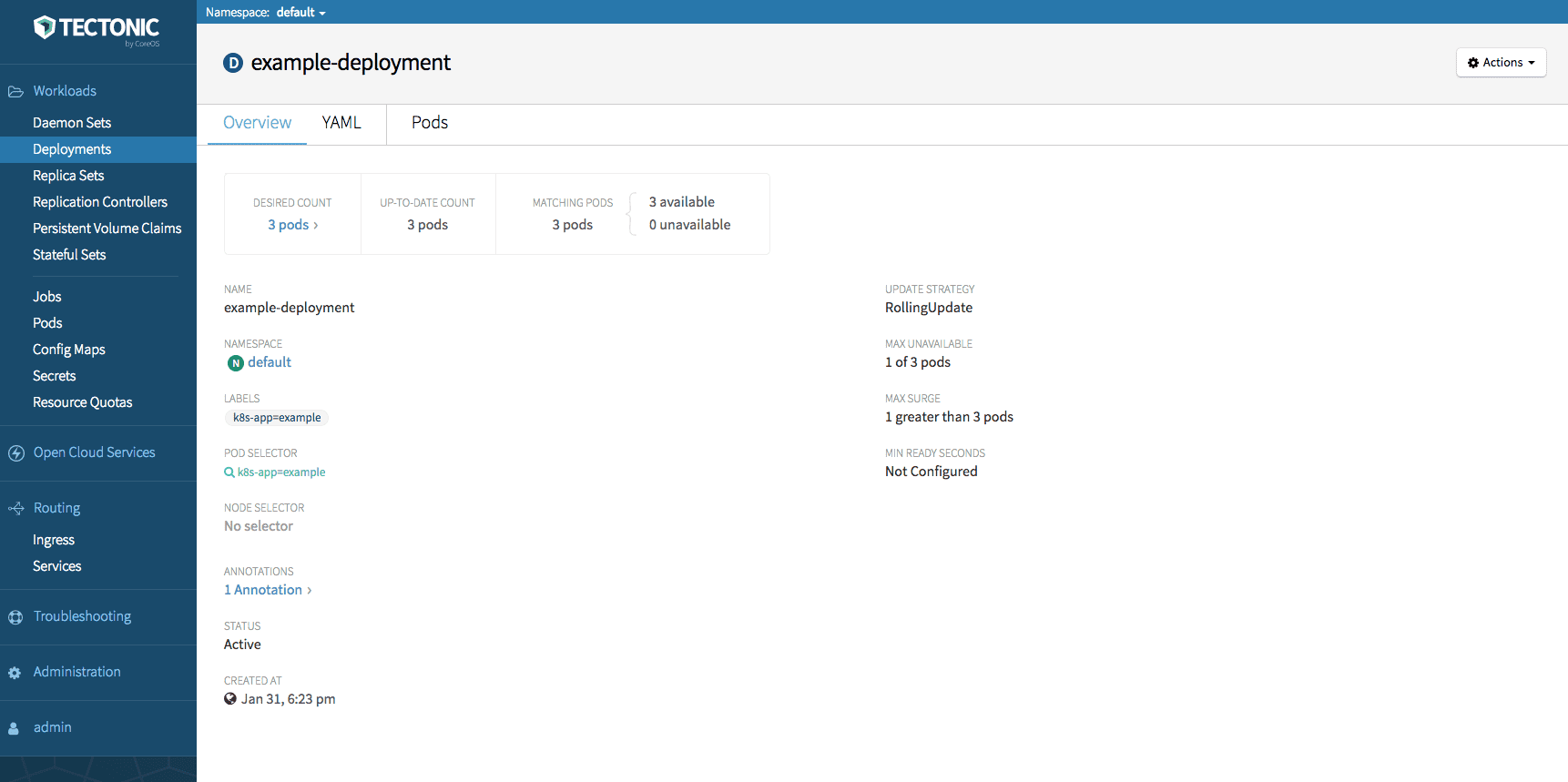

- Log in to the Tectonic UI and go to Deployments to view the Docker deployment status.
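The same status can be checked from the command line. This is a minimal sketch that assumes the deployment name, namespace, and `k8s-app: example` label from the sample file; the helper name `wait_for_deployment` is hypothetical.

```shell
# Hypothetical helper: wait for the rollout to finish, then list its pods.
# $1 = deployment name, $2 = namespace (defaults match the sample file).
wait_for_deployment() {
  kubectl rollout status "deployment/${1:-example-deployment}" \
    --namespace "${2:-default}" &&
    kubectl get pods --namespace "${2:-default}" --selector k8s-app=example
}
```

If a pod shows `ErrImagePull` or `ImagePullBackOff`, the registry credentials in rt-registrykey are the first thing to recheck.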

- Create service and ingress objects to access the nginx service using the following example:

    kind: Service
    apiVersion: v1
    metadata:
      name: example-service
      namespace: default
    spec:
      selector:
        k8s-app: example
      ports:
        - protocol: TCP
          port: 80
      type: NodePort
    ---
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: example-ingress
      namespace: default
      annotations:
        kubernetes.io/ingress.class: "tectonic"
        ingress.kubernetes.io/rewrite-target: /
        ingress.kubernetes.io/ssl-redirect: "true"
        ingress.kubernetes.io/use-port-in-redirects: "true"
    spec:
      rules:
        - host: console.tectonicsandbox.com
          http:
            paths:
              - path: /example-deployment
                backend:
                  serviceName: example-service
                  servicePort: 80


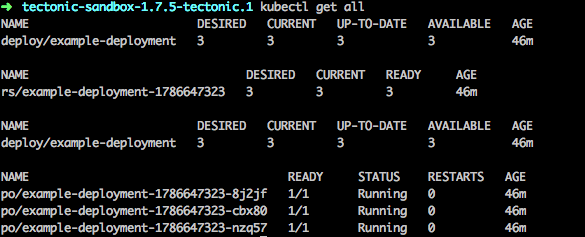

- Access the Tectonic console and run the kubectl get all command to view the status of the service and ingress objects.
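Depending on your kubectl version, `get all` may not list Ingress objects, so querying both objects by name is a useful cross-check. The sketch below assumes the names and namespace from the example above; the helper name `show_service_and_ingress` is ours.

```shell
# Hypothetical helper: query the Service and the Ingress by name.
show_service_and_ingress() {
  kubectl get service example-service --namespace default &&
    kubectl get ingress example-ingress --namespace default
}
```

If the ingress host resolves from your machine, a request such as `curl -k https://console.tectonicsandbox.com/example-deployment` should return the nginx welcome page. The `-k` flag is our assumption that the sandbox uses a self-signed certificate.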