JFrog Cloud: Architected for Performance at Scale

How JFrog’s managed offering leverages the power of Kubernetes and managed cloud provider services to deliver mission-critical development infrastructure for enterprise organizations.

Petabytes of monthly data transfer.

Thousands of concurrent requests per customer.

Hundreds of thousands of requests per minute per customer.

The JFrog Platform is a mission-critical piece of software development and delivery infrastructure for companies that require performance at scale. When you’re supporting thousands of developers, even a minute of downtime or delay can mean millions of dollars in lost productivity. We take that responsibility seriously, especially for the more than 4,000 companies relying on JFrog’s managed offering: JFrog Cloud.

In this post, we pull back the curtain on how we deliver that performance and scale for JFrog Cloud customers.

JFrog’s metamorphosis to Kubernetes-only cloud

JFrog is a cloud-first company. In August 2009, just a year after going to market with Artifactory as an open-source offering, we launched JFrog Cloud, bringing Artifactory to the cloud as a managed service. Back then, we deployed Sun servers, VMs, multi-tenant Tomcat, and a whole slew of technologies to deliver our managed Artifactory. It wasn’t until later in 2009 that Artifactory Pro was released for on-prem.

At that time, despite our vision for the cloud, the industry at large was still heavily invested in self-hosting infrastructure software on-prem in clusters configured for high availability and redundancy. Undaunted, we recognized that the future of software development and delivery was moving towards the cloud, and in 2011 we moved to AWS for global distribution with US East, US West, and EU Central regions. We leveraged EC2 instances and were even one of the first companies to use RDS, which was still in beta at the time. Then in 2016 we added GCP support, with Azure support following the next year.

In 2017, JFrog was one of the very first companies to move all our production services to Kubernetes (K8s), which was no easy feat at the time, as those who have worked on such a migration can attest. This multi-year project involved a full re-architecture to harness the power of the Kubernetes runtime, a change that required us to find better ways to use cloud providers’ managed services, such as databases and object storage. That effort laid the foundation for the modern SaaS offering we have today, available on your choice of AWS, GCP, or Azure, in your preferred region.

Architecting for scalable performance in the cloud? Keep it simple, stupid.

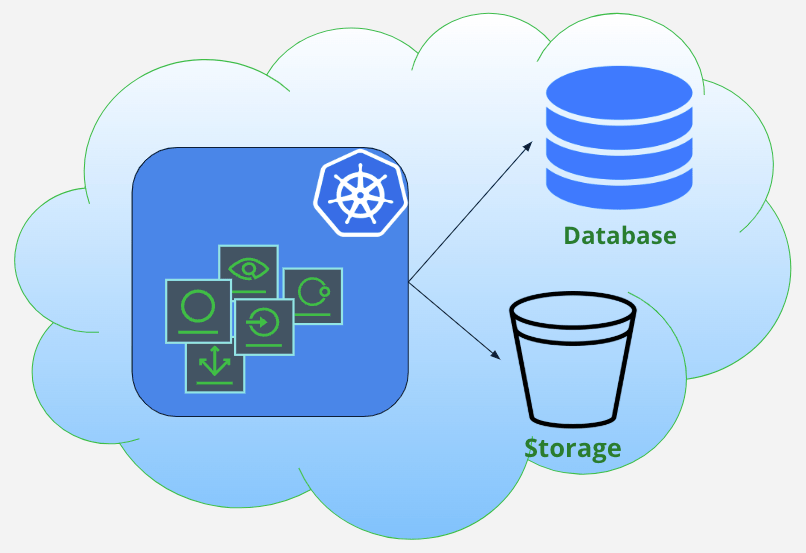

JFrog Cloud is a modern cloud-native microservice application that meets the highest standards of availability, durability, performance, and security. We’re able to deliver such scale by keeping the architecture of the solution relatively simple, leveraging horizontal and vertical autoscaling, and taking advantage of the managed services offered by the cloud providers. These include managed Kubernetes, managed PostgreSQL, managed object storage, built-in networking features, and all the security and protection layers provided by the cloud providers, such as encryption at rest for all storage used. In AWS, for example, this translates to RDS, S3, EKS, ELB, Route 53, IAM, etc.

JFrog Cloud Simple Architecture

With the scalability factor addressed, the other must-have element for our customers is resilience of the system. We developed a very robust deployment approach in order to achieve high availability, resiliency, and redundancy throughout the stack. Here we leveraged common best practices for cloud native architecture, including:

- Stateless microservices as containers in Kubernetes

- Services installed with multiple replicas across multiple availability zones

- Databases deployed with multizone read replicas

- Automatic failover facilitated by the cloud provider
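
To make the multi-zone point concrete, here is a minimal sketch of why spreading replicas across availability zones matters. It is not JFrog's deployment code: the zone names, replica count, and helper functions are hypothetical, and in a real cluster the Kubernetes scheduler handles placement.

```python
from collections import Counter
from itertools import cycle


def spread_replicas(replicas: int, zones: list[str]) -> Counter:
    """Assign replicas to zones round-robin so zone counts differ by at most one."""
    placement = Counter({zone: 0 for zone in zones})
    for _, zone in zip(range(replicas), cycle(zones)):
        placement[zone] += 1
    return placement


def surviving_replicas(placement: Counter, failed_zone: str) -> int:
    """Count the replicas still serving traffic if one whole zone goes down."""
    return sum(count for zone, count in placement.items() if zone != failed_zone)


# Hypothetical service: 5 replicas over 3 zones.
placement = spread_replicas(replicas=5, zones=["zone-a", "zone-b", "zone-c"])

# Worst case across all single-zone failures.
worst_case = min(surviving_replicas(placement, zone) for zone in placement)
```

With an even spread, losing any single zone removes at most two of the five replicas, so the stateless service keeps serving while the provider fails over.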

The system leverages K8s cluster autoscalers and smart pod autoscaling with KEDA to take advantage of our thousands of Kubernetes nodes and adjust itself automatically. This enables us to avoid declared limits in the system, even if you’re on a lower subscription tier.
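
For readers unfamiliar with pod autoscaling, the sketch below shows the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler, which KEDA drives with its own metrics. It is a simplified illustration, not our production configuration: the metric (requests per second per pod), the replica bounds, and the function name are assumptions.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 100, tolerance: float = 0.1) -> int:
    """Approximate the HPA rule: desired = ceil(current * metric / target).

    The tolerance band (10% by default in Kubernetes) avoids scaling on
    small fluctuations around the target.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target, do nothing
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (hypothetical values here).
    return max(min_replicas, min(max_replicas, wanted))


# Traffic triples against a target of 300 requests/s per pod.
scaled_up = desired_replicas(current_replicas=4, current_metric=900, target_metric=300)

# Traffic drops off, so the service scales back in.
scaled_down = desired_replicas(current_replicas=10, current_metric=50, target_metric=300)
```

The cluster autoscaler then adds or removes nodes so the requested pods have somewhere to run.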

And while we don’t have declared limits, we do have some guardrails in place to ensure a customer doesn’t accidentally kill the system, say by running an extremely complex query on their millions of managed artifacts. For example, by applying smart throttling and load balancing internally, we protect the integrity of the system while still serving hundreds of thousands of requests per minute per customer.
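
One common way to build this kind of guardrail is a token bucket, sketched below. This is a generic technique shown for illustration, not a description of the throttling JFrog Cloud actually uses; the class name, rates, and per-request costs are hypothetical.

```python
import time


class TokenBucket:
    """Allow short bursts while capping the sustained request rate."""

    def __init__(self, rate_per_sec: float, burst: float, clock=time.monotonic):
        self.rate = rate_per_sec      # tokens added per second
        self.capacity = burst         # maximum tokens the bucket can hold
        self.tokens = float(burst)    # start full so bursts are served
        self.clock = clock            # injectable clock, handy for testing
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        """Refill based on elapsed time, then spend `cost` tokens if available."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


# One bucket per tenant; an expensive query can be charged a higher cost.
buckets = {"tenant-a": TokenBucket(rate_per_sec=100, burst=200)}
accepted = buckets["tenant-a"].allow(cost=50)  # a heavy query spends 50 tokens
```

Charging heavier operations a higher cost lets ordinary traffic flow freely while a runaway query exhausts only its own tenant's budget.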

Single-tenancy, multi-tenancy, or both

At JFrog, we leverage a combination of single- and multi-tenancy between the services. We find this enables us to offer an ideal balance of performance, cost, and security. For example, there is a very clear data separation between the different tenants and different customers. Everyone has their own storage and we’re not sharing binaries or database entries between customers. This prevents scaling issues in the database caused by a single noisy neighbor, and provides an extra layer of security that doesn’t depend on code-level filtering.
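
The difference between resource-level separation and code-level filtering can be sketched as follows. This is an illustrative model only: the registry class, tenant IDs, and connection strings are hypothetical, and it assumes each tenant maps to its own database and storage bucket.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TenantResources:
    """Dedicated backing resources for one tenant (hypothetical fields)."""
    database_url: str
    storage_bucket: str


class TenantRegistry:
    """Resolve a tenant to its own resources instead of filtering shared ones."""

    def __init__(self) -> None:
        self._resources: dict[str, TenantResources] = {}

    def register(self, tenant_id: str, resources: TenantResources) -> None:
        if tenant_id in self._resources:
            raise ValueError(f"tenant already registered: {tenant_id}")
        self._resources[tenant_id] = resources

    def resolve(self, tenant_id: str) -> TenantResources:
        try:
            return self._resources[tenant_id]
        except KeyError:
            raise LookupError(f"unknown tenant: {tenant_id}") from None


registry = TenantRegistry()
registry.register("acme", TenantResources("postgresql://db-acme.example/acme", "bucket-acme"))
registry.register("globex", TenantResources("postgresql://db-globex.example/globex", "bucket-globex"))

# A request for "acme" can only ever be handed acme's database and bucket.
acme = registry.resolve("acme")
```

Because a request is routed to the tenant's own resources up front, a missing `WHERE tenant_id = ...` clause cannot leak another customer's data, and a heavy workload in one database does not slow queries in another.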

SaaS or self-hosted, and the freedom to choose

The JFrog Platform is available both self-hosted and as a managed service. While the code bases for our self-hosted and SaaS offerings are very similar, how they are deployed and configured is rather different. And because JFrog is a cloud-first company, JFrog Cloud customers are the first to receive new features and fixes, which are continuously deployed to production with zero downtime.

By maintaining similar code bases, instances of the JFrog Platform work together seamlessly regardless of how or where they’re deployed. For many organizations this is a huge benefit for a number of reasons, such as:

- Facilitating cloud migration

- Regulatory concerns

- Optimizing cost of workloads

- Avoiding cloud lock-in

- Disaster recovery and resiliency

Enabling migration to the cloud at scale

The JFrog Platform underpins software factories at the largest organizations in the world. Given the early and wide adoption of our on-prem offering, we knew we needed to be ready to help organizations move to the cloud when they were interested in offloading the cost and responsibility of managing their deployments. To date, we’ve helped hundreds of organizations migrate to JFrog Cloud, including organizations building dozens of applications across thousands of developers with petabytes of artifacts.

We’ve applied that experience to make migration very easy, with self-service tools, optional professional services, and the ability to use your own CNAME to point to your JFrog Cloud instance, so nothing has to change for developers or in CI and CD pipelines. To help take the anxiety out of migrating, JFrog Cloud can run in parallel with self-hosted deployments, allowing you to decide when to switch over.

We also understand that organizations moving from self-hosted solutions to managed solutions don’t want to fully give up the control and visibility they’re used to having with self-hosting. Two of the ways we’ve addressed these concerns are MyJFrog and Workers. MyJFrog allows teams to monitor consumption, establish DNS routing policies, turn on log streaming, and more. With JFrog Workers, admins can create and run code that extends JFrog Platform functionality via a built-in serverless execution environment.

Cloud-native artifact management, unbeatable scale

Hopefully, you can now see why JFrog Cloud is trusted by some of the largest and most successful companies in the world. While we proudly stand behind what we’ve built today, we’re also continuously evaluating emerging technologies and approaches to help future-proof our customers and their technology organizations.

If you still have questions about the architecture of JFrog Cloud, our team is happy to do a group or 1:1 technical deep dive with you.