Accelerating Enterprise AI Development: A Guide to the JFrog-NVIDIA NIM Integration

Enterprises are racing to integrate AI into applications, yet moving from prototype to production remains difficult: ML models must be managed efficiently without compromising security or governance. JFrog’s integration with NVIDIA NIM addresses these issues by applying enterprise-grade DevSecOps practices to AI development. Before exploring the solution, let’s examine the core MLOps challenges it solves.

The Technical Challenge: Bridging the MLOps Gap

Unlike traditional software development, ML model pipelines introduce unique complexities:

- Specialized artifact management for versioning and storage

- Precise dependency management between models and runtime environments

- Optimized GPU resource allocation for efficient deployment

- Enhanced security measures to mitigate AI-specific vulnerabilities

Addressing these challenges requires a purpose-built solution that combines enterprise-grade DevSecOps practices with AI-specific optimizations.

Enter NVIDIA NIM

NIM directly addresses these MLOps challenges by providing containerized microservices tailored for enterprise AI deployment, offering:

- Pre-optimized model execution on NVIDIA hardware

- Automatic hardware configuration detection for peak performance

- Support for multiple LLM runtimes

- OpenAI API specification compatibility

When a NIM is initially deployed, it examines the local hardware configuration and the optimized models available in the model registry. It then automatically selects the best version of the model for that particular hardware environment. While these technical optimizations solve the execution challenges of AI deployment, enterprise-wide implementation requires additional capabilities.
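Because NIM follows the OpenAI API specification, a deployed NIM can be called with an ordinary chat-completions request. The sketch below builds such a request using only the Python standard library. The base URL `http://localhost:8000` and the model name `meta/llama3-8b-instruct` are assumptions for illustration, not values taken from this guide; substitute whatever your deployment exposes.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str,
                       max_tokens: int = 64) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for a NIM endpoint."""
    payload = {
        "model": model,  # assumed model name; use the one your NIM serves
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)


# Example usage (requires a running NIM; URL and model are assumptions):
#   req = build_chat_request("http://localhost:8000",
#                            "meta/llama3-8b-instruct", "Say hello.")
#   print(send(req)["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it possible to inspect or log the payload before anything reaches the GPU host.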

As organizations scale their AI initiatives across departments and environments, they encounter governance, security, and distribution challenges that go beyond model execution. Enterprise organizations require a comprehensive platform to govern, secure, distribute, and control these microservices throughout their lifecycle. This is where the JFrog Platform adds significant value by integrating NVIDIA’s technical innovations with enterprise-grade DevSecOps practices.

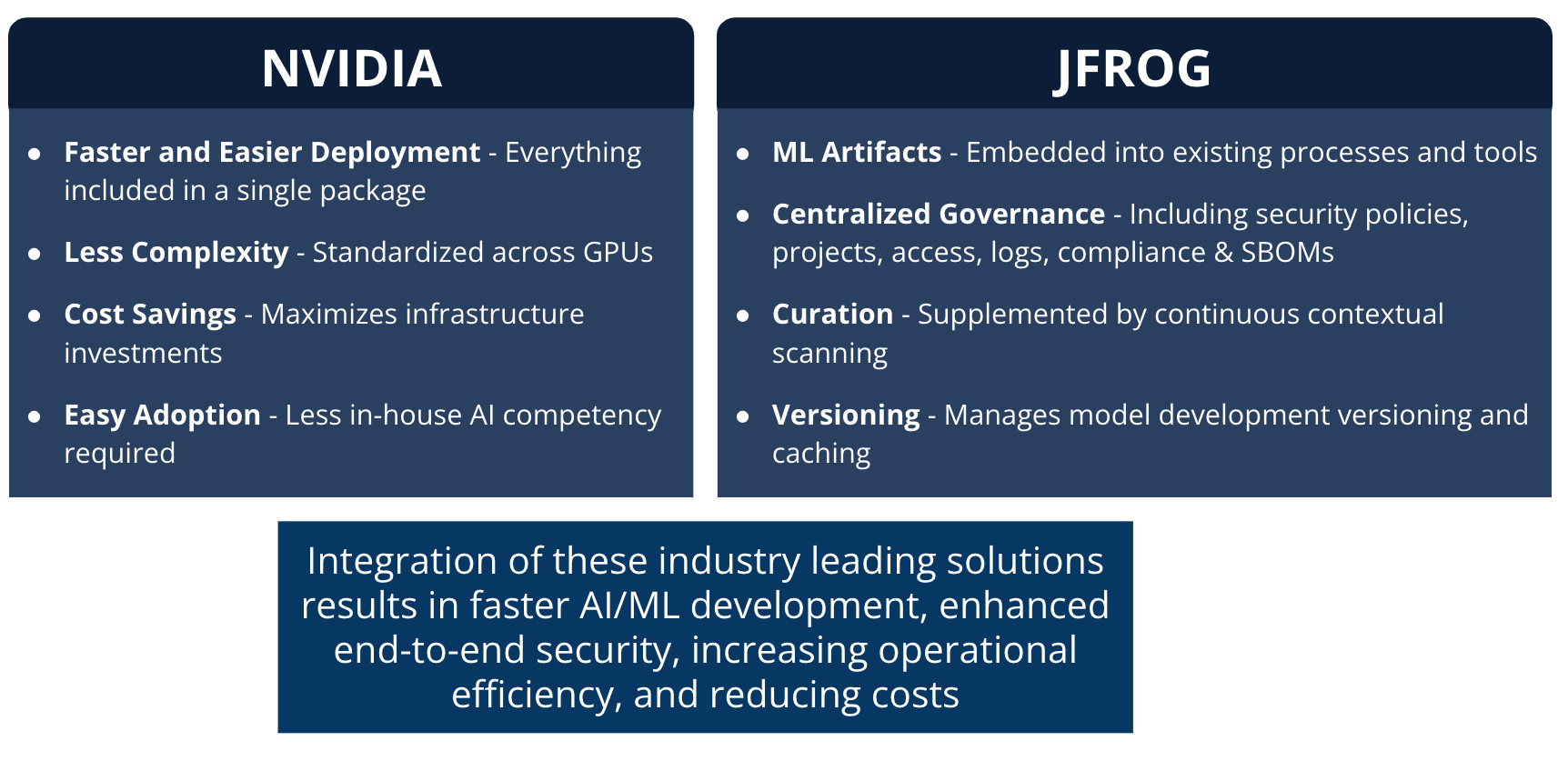

Why Integrate JFrog and NVIDIA NIM?

- Centralized AI Governance

  - Granular control over NIMs with JFrog Curation and Xray policies

  - Ensures compliance with corporate standards

  - Accelerates secure NIM adoption enterprise-wide

- Enhanced Security

  - Continuous scanning of NIM containers, models, and dependencies

  - Policy-based blocking of compromised NIMs

  - Complete impact assessment across the ML and software stack

- Seamless Distribution

  - Direct NIM access via Artifactory without NGC or internet access

  - Reduces bandwidth through centralized distribution

  - Enables air-gapped deployments for high-security environments

- Enterprise Controls

  - Single source of truth for all AI and software assets

  - Complete audit trails and version control

  - Role-based access with policy enforcement

  - Seamless DevSecOps integration

With NVIDIA NIM, enterprises gain a streamlined solution for running AI models as containerized inference microservices with optimized runtimes. The JFrog Platform extends this capability by providing enterprise-grade security, governance, and distribution—creating a complete MLOps solution that accelerates development while maintaining compliance.

Now that you understand this integration’s strategic advantages, let’s explore how to implement it in your environment.

Implementation Guide

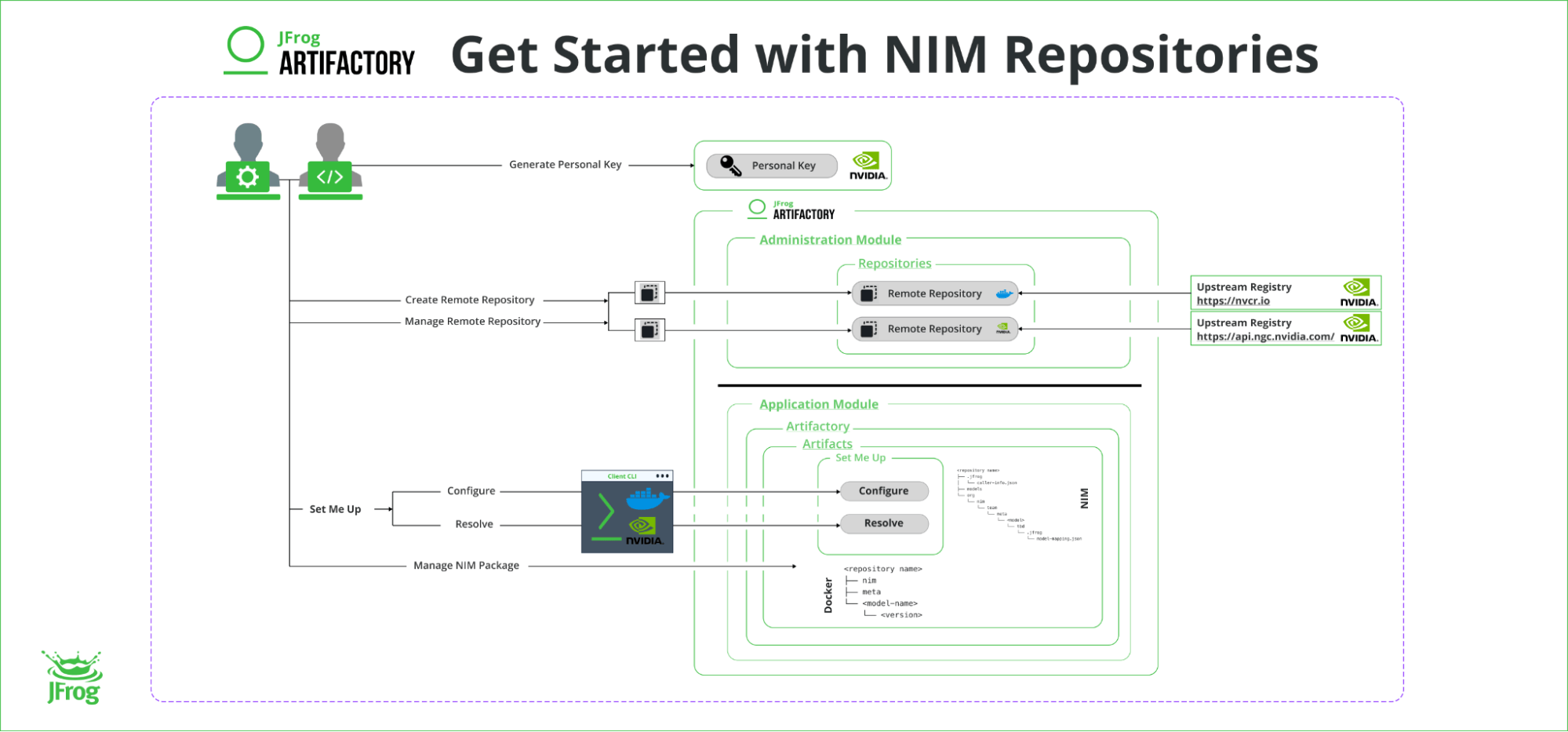

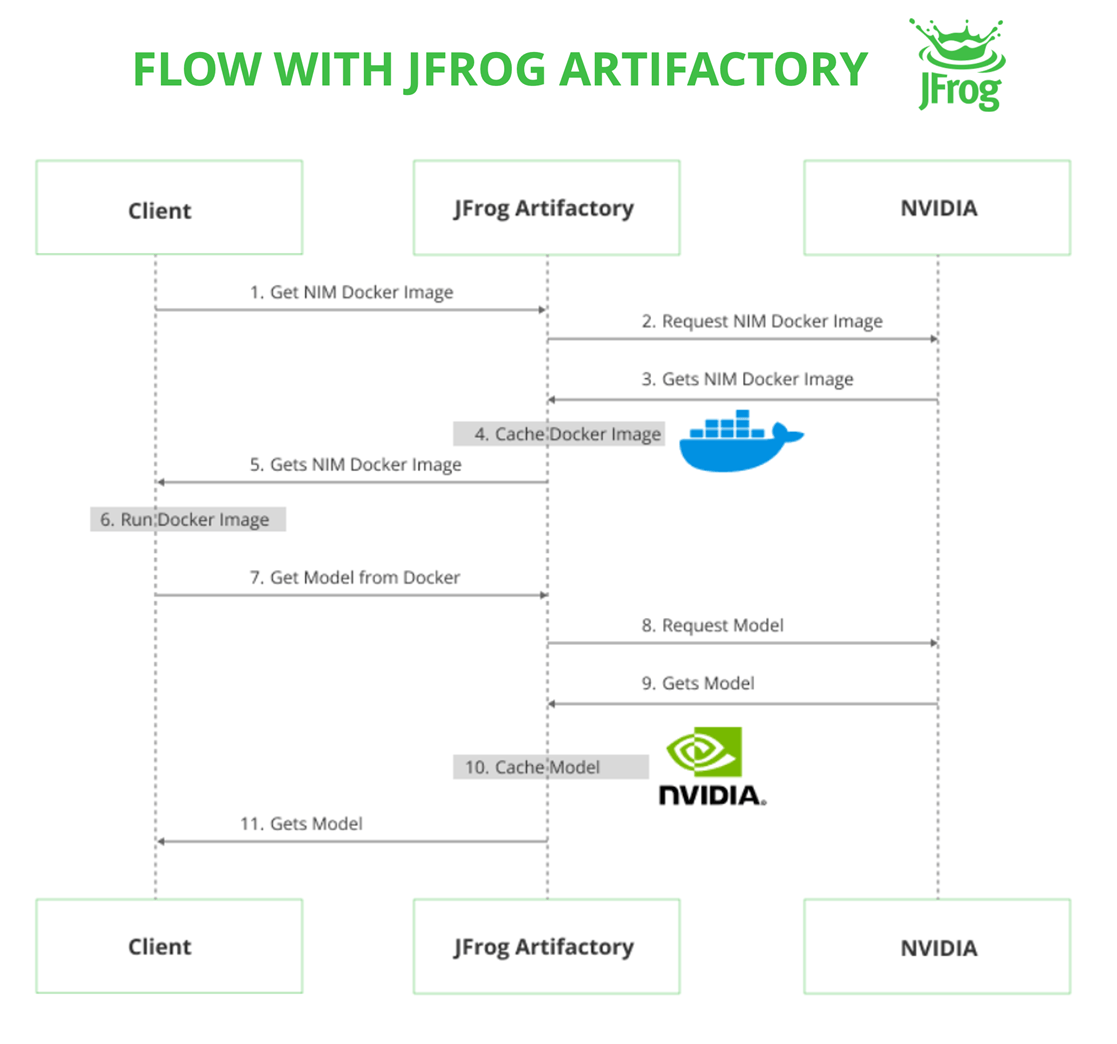

The JFrog Platform now serves as a secure proxy for NVIDIA NGC, centralizing NIM images and models within your existing artifact management workflow. Here’s how to set it up:

1. Generate an NGC personal key in NVIDIA NGC

2. Set up remote repositories in Artifactory

a. Docker repo

i. The remote URL should be set to https://nvcr.io

ii. The username should be set to “$oauthtoken”

iii. Password/access token should be set to the NGC personal key generated in step (1)

b. NIM repo that will hold the models themselves

i. The remote URL should be set to https://api.ngc.nvidia.com/

ii. The username should be left empty

iii. Password/access token should be set to the NGC personal key generated in step (1)

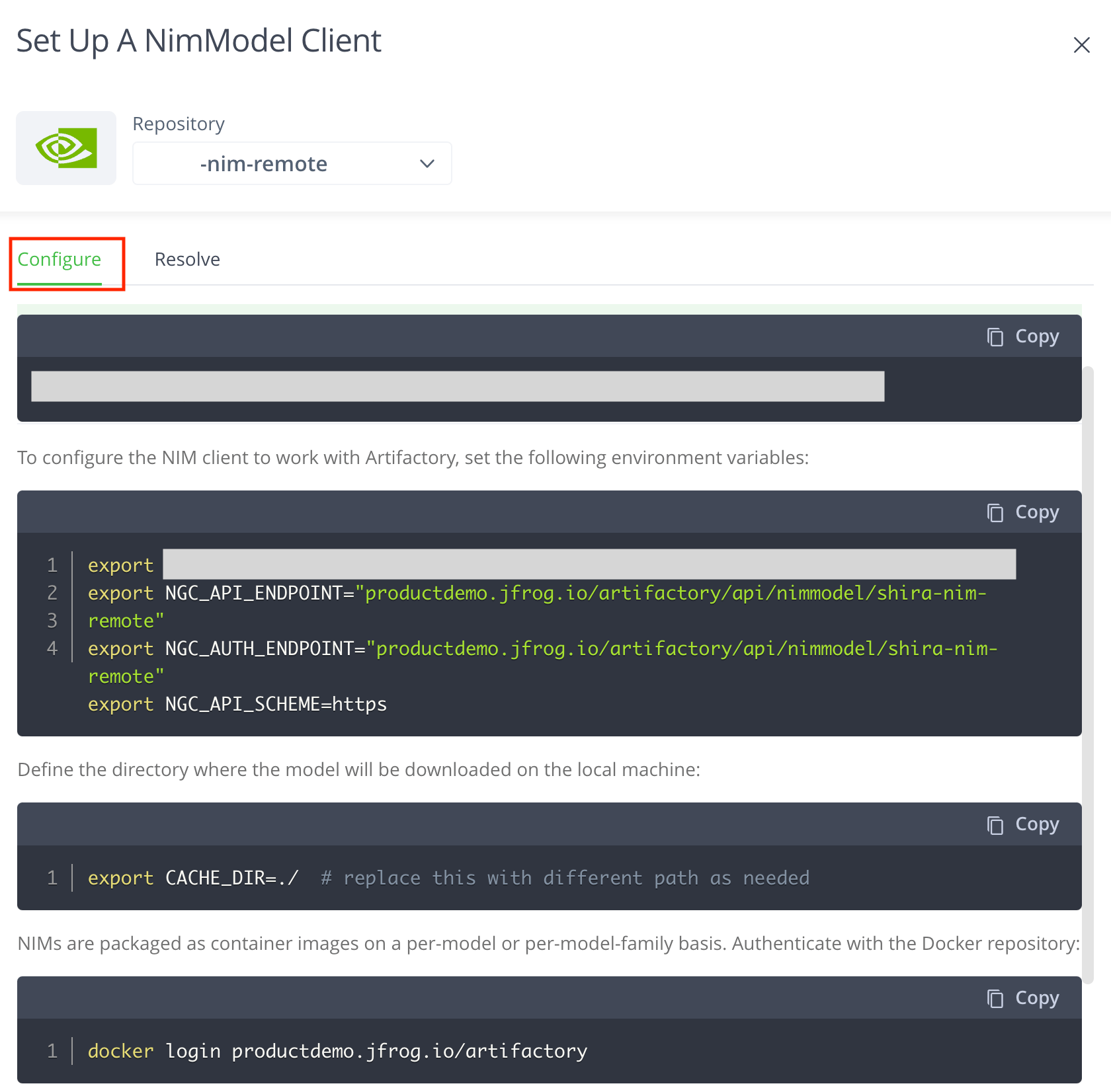
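The two remote repositories from step 2 can also be written down as data, which is handy for review or for scripting the setup. The sketch below only builds the definitions; it does not call Artifactory. The field names follow the repository configuration JSON used by Artifactory's REST API as we understand it, so verify them against the documentation for your version. The repository keys `nvidia-docker-remote` and `nvidia-nim-remote` are hypothetical, and the NIM package type is deliberately left as a parameter because this guide does not state its identifier.

```python
import json


def remote_repo_config(key: str, package_type: str, url: str,
                       username: str, ngc_key: str) -> dict:
    """Return a remote-repository definition mirroring the UI fields in step 2."""
    return {
        "key": key,
        "rclass": "remote",
        "packageType": package_type,
        "url": url,
        "username": username,
        "password": ngc_key,  # the NGC personal key from step (1)
    }


def ngc_remote_repos(ngc_key: str, nim_package_type: str) -> list:
    """Build both repositories described above (repository keys are hypothetical)."""
    docker_repo = remote_repo_config(
        key="nvidia-docker-remote",
        package_type="docker",
        url="https://nvcr.io",
        username="$oauthtoken",
        ngc_key=ngc_key,
    )
    nim_repo = remote_repo_config(
        key="nvidia-nim-remote",
        package_type=nim_package_type,  # assumption: look up the real identifier
        url="https://api.ngc.nvidia.com/",
        username="",  # left empty, as in step 2.b.ii
        ngc_key=ngc_key,
    )
    return [docker_repo, nim_repo]


# Example usage: print the definitions with the secret masked.
#   for repo in ngc_remote_repos("<ngc-personal-key>", "<nim-package-type>"):
#       print(json.dumps({**repo, "password": "****"}, indent=2))
```

Whichever way the repositories are created, keep the NGC personal key out of source control and inject it at run time.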

3. Configure the host machine

a. Click the Application tab in the JFrog Platform, and then click Artifacts under Artifactory.

b. Locate the newly created NIM repository and click Set Me Up.

c. Set up the required environment variables per the instructions.

d. Authenticate against Artifactory as your docker registry on your host machine by running:

`docker login <domain>` (where `<domain>` is your Artifactory domain, e.g. awesome.jfrog.io)

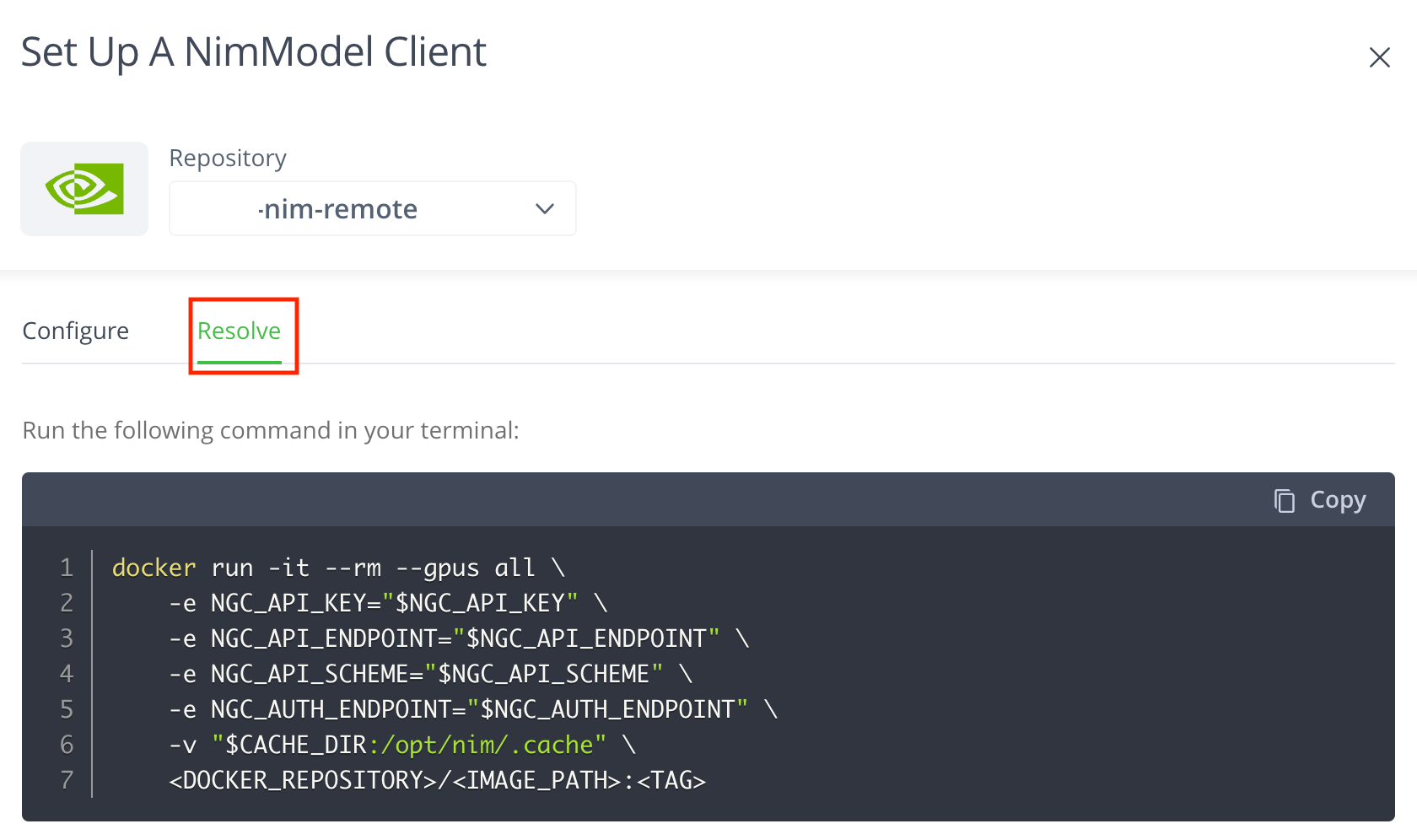

e. Run the Docker command to pull and run the NIM container.
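Steps d and e can be sketched as a small helper that assembles the Docker commands. It only builds argument lists; nothing is executed. Several details are assumptions rather than facts from this guide: the image path layout `<domain>/<repo-key>/<image>`, the repository key `nvidia-docker-remote`, the image name, port 8000, and the environment variable name `EXAMPLE_VAR`. Take the real variable names and values from the Set Me Up instructions.

```python
import shlex


def docker_login_cmd(domain: str) -> list:
    """Command for step d: authenticate Docker against Artifactory."""
    return ["docker", "login", domain]


def nim_run_cmd(domain: str, repo_key: str, image: str,
                env: dict, port: int = 8000) -> list:
    """Command for step e: pull (if needed) and run a NIM container.

    `env` holds the variables from the Set Me Up instructions.
    """
    # Assumed path layout for an image served through an Artifactory Docker repo.
    image_ref = f"{domain}/{repo_key}/{image}"
    cmd = ["docker", "run", "--rm", "--gpus", "all"]
    for name, value in sorted(env.items()):
        cmd += ["-e", f"{name}={value}"]
    cmd += ["-p", f"{port}:{port}", image_ref]
    return cmd


# Example usage (all values are placeholders):
#   print(shlex.join(docker_login_cmd("awesome.jfrog.io")))
#   print(shlex.join(nim_run_cmd("awesome.jfrog.io", "nvidia-docker-remote",
#                                "nim/example-model:latest",
#                                {"EXAMPLE_VAR": "value"})))
```

Printing the commands with `shlex.join` before running them makes it easy to confirm that the image is resolved through Artifactory rather than directly from NGC.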

Here are some images of the setup process:

1. Getting Started

2. Establishing a Workflow

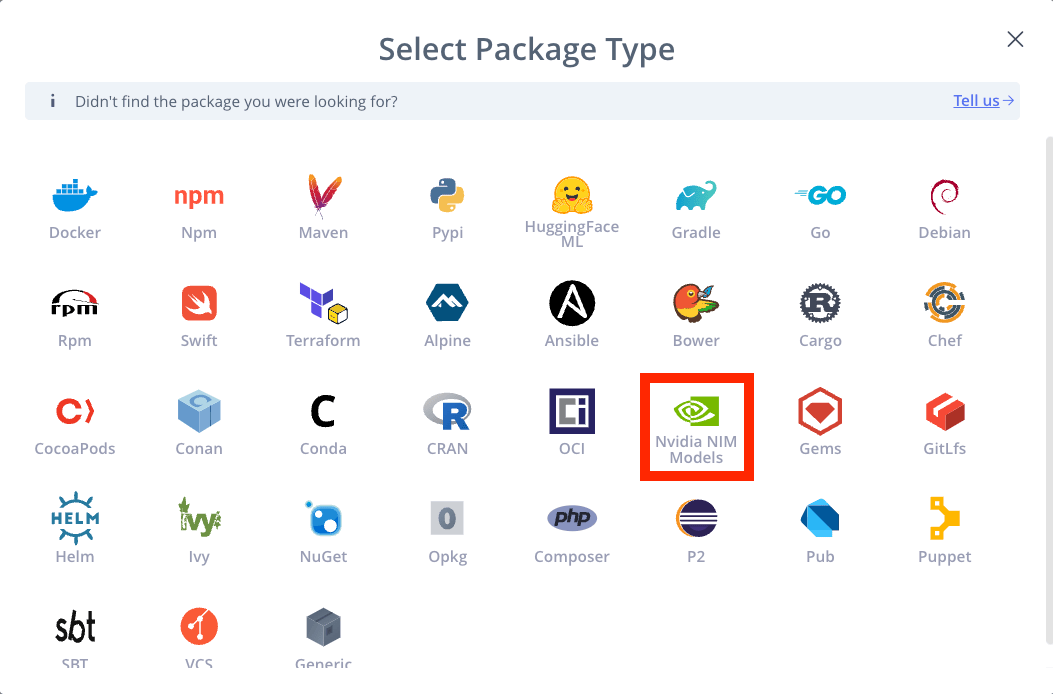

3. Package Selection

4. Configuring a NIM Model Client

Set Me Up:

5. Resolving a NIM Model Client

Key Takeaways

By integrating JFrog and NVIDIA NIM, organizations can bring AI model management into their existing software development practices, ensuring secure storage, rigorous vulnerability scanning, and efficient deployment across hybrid and multi-cloud environments.

This powerful combination empowers teams to accelerate AI-driven innovation while maintaining security, compliance, and scalability. As AI adoption continues to grow, leveraging JFrog’s robust DevSecOps platform alongside NVIDIA’s cutting-edge inference microservices will help enterprises stay ahead in an increasingly AI-driven world.

We encourage you to schedule a one-on-one demo to see how you can start optimizing your AI model deployment with JFrog and NVIDIA NIM!