JFrog Artifactory: Your Kubernetes Registry

Composing software artifacts into containerized Kubernetes apps

Containers let you package and manage your applications (especially microservices applications) independently of the specific hardware, and even of the VMs they run on. A container orchestration system like Kubernetes lets you build apps and deploy them side by side without worrying about compatibility between their various services and components. This blog post reviews the benefits and challenges of combining components from many sources into containerized applications deployed on Kubernetes, and shows how JFrog Artifactory can serve as your Kubernetes registry to meet these challenges in a polyglot environment.

Why should a containerized application run using Kubernetes?

The benefit of containerizing an app and running it with Kubernetes is that you are using a platform with a vibrant community that keeps making it easier to build scalable microservices apps. Your containerized app will include several types of components, depending on the operating system, language, and framework(s) you use. When a whole team is working on those components, things get complex quickly.

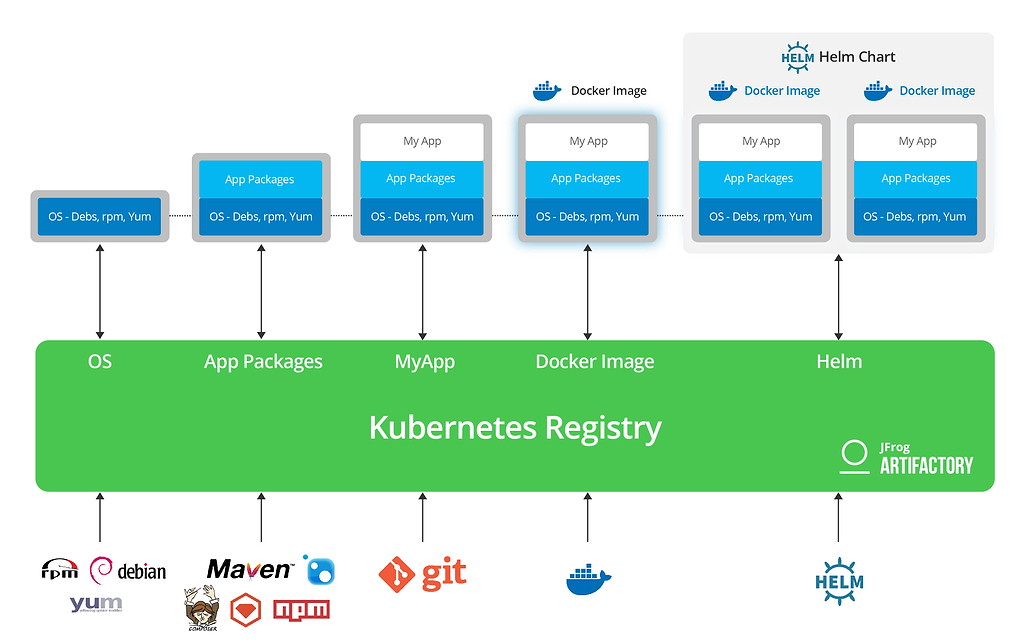

To get a sense of the complexity, let’s first look at the many types of artifacts that make up a Kubernetes application. These pieces are combined to create the app and come from multiple sources: some are built from source, while others are external dependencies downloaded from a central repository. Together, these building blocks represent the end-to-end supply chain of the Kubernetes app. While it is possible to use a separate package management solution for each type (for example, one for your Debian repository and another for your Docker registry), there are advantages to keeping everything in one central location. One is that you can track a complete build version across technologies, and traverse the dependencies between every type of artifact in your application as you measure quality during continuous integration (CI) and delivery.
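
To make “traverse the dependencies between every type of artifact” concrete, here is a minimal Python sketch, assuming a simple in-memory graph where each artifact records what it was built from. The `Artifact` and `DependencyGraph` classes, the `bill_of_materials` method, and all names and versions are hypothetical and for illustration only; this is not Artifactory’s data model or API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class Artifact:
    """One versioned building block: a Debian package, npm package, Docker image, Helm chart..."""
    kind: str     # package type, e.g. "deb", "npm", "docker", "helm"
    name: str
    version: str


@dataclass
class DependencyGraph:
    """Hypothetical in-memory record of which artifacts each artifact was built from."""
    edges: Dict[Artifact, Set[Artifact]] = field(default_factory=dict)

    def add(self, parent: Artifact, *children: Artifact) -> None:
        self.edges.setdefault(parent, set()).update(children)

    def bill_of_materials(self, root: Artifact) -> List[Artifact]:
        """Every artifact reachable from `root`, across all package types, sorted for stable output."""
        seen: Set[Artifact] = set()
        stack = [root]
        while stack:
            for child in self.edges.get(stack.pop(), ()):
                if child not in seen:
                    seen.add(child)
                    stack.append(child)
        return sorted(seen, key=lambda a: (a.kind, a.name, a.version))


def example_graph() -> Tuple[DependencyGraph, Artifact]:
    """Illustrative names and versions only: chart -> app image -> base image -> OS package."""
    chart = Artifact("helm", "shop", "1.4.0")
    web = Artifact("docker", "shop-web", "2.1.0")
    base = Artifact("docker", "base-node", "18.0.0")
    graph = DependencyGraph()
    graph.add(chart, web)
    graph.add(web, base, Artifact("npm", "express", "4.18.2"))
    graph.add(base, Artifact("deb", "libssl3", "3.0.2"))
    return graph, chart


if __name__ == "__main__":
    graph, chart = example_graph()
    for artifact in graph.bill_of_materials(chart):
        print(artifact.kind, artifact.name, artifact.version)
```

Starting from the Helm chart, the walk reaches the Docker images, the npm package, and the Debian package in one pass, which is the point of keeping all artifact types in one place with shared metadata.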

What if I have multiple Kubernetes CI pipelines?

Within your organization, different teams might use different versions of the same dependencies (in fact, that is part of the point of using containers at runtime) so that they can stay independent while releasing. Your Kubernetes cluster can run multiple application stacks side by side without conflict and without caring about each app’s internal dependencies. This separates the concerns of maintaining a running cluster, scaling applications up and down, developing new versions, and debugging application-specific issues. So with multiple Kubernetes teams working in parallel, how on earth are you going to store all of these components without adding a crazy amount of complexity?

Is there a simple way to manage Kubernetes artifacts?

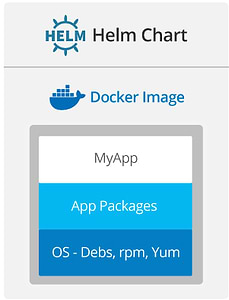

In many cases, enterprise teams (especially security-conscious ones) maintain their own Docker base images and always build `FROM scratch`. So to build the full application stack, your team needs to bring in operating system components to create the base image. On top of that, you need packages that depend on the app’s language: a Node.js app will have npm dependencies, while a Ruby app will have gems, and each of these will be tied to specific language versions. Each app-specific configuration might then be collapsed into a base image that the application development teams can reuse.
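
The layering described above can be sketched in Python, assuming each layer is recorded simply as the packages it installs. This is a deliberate simplification: real Docker layers are filesystem diffs, and the `Layer` and `compose` names, package names, and versions below are hypothetical. The sketch shows how a reusable base (OS plus language runtime) combines with an app layer, and how tracking each layer tells you where every package came from.

```python
from typing import Dict, List, NamedTuple, Tuple

PackageKey = Tuple[str, str]  # (package type, package name), e.g. ("deb", "libssl3")


class Layer(NamedTuple):
    """Simplified, hypothetical view of an image layer: the packages it installs.
    Real Docker layers are filesystem diffs; this only tracks package metadata."""
    name: str
    packages: Dict[PackageKey, str]  # package key -> version


def compose(layers: List[Layer]) -> Dict[PackageKey, Tuple[str, str]]:
    """Merge layers in build order. A later layer overrides an earlier one, much as a
    later build step replaces files from an earlier step. Returns
    package key -> (version, name of the layer that supplied it)."""
    content: Dict[PackageKey, Tuple[str, str]] = {}
    for layer in layers:
        for key, version in layer.packages.items():
            content[key] = (version, layer.name)
    return content


# Illustrative layers only.
os_layer = Layer("os", {("deb", "libssl3"): "3.0.2", ("deb", "ca-certificates"): "20230311"})
runtime_layer = Layer("node-runtime", {("deb", "nodejs"): "18.19.0"})
app_layer = Layer("app", {("npm", "express"): "4.18.2", ("deb", "libssl3"): "3.0.11"})

# The OS and runtime layers form a reusable base; the app layer goes on top.
base_image = [os_layer, runtime_layer]
app_image = compose(base_image + [app_layer])

if __name__ == "__main__":
    for (kind, name), (version, layer) in sorted(app_image.items()):
        print(f"{kind:5} {name:16} {version:10} from layer '{layer}'")
```

Here the app layer upgrades `libssl3`, and the composed view records that the final version came from the app layer rather than the base. That provenance is lost if only the final image is stored.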

Once you have the application code, you `docker build` each application layer into container images that can be deployed and run in your Kubernetes cluster, either individually or as part of a microservices application. From there, you describe the microservices application in Kubernetes YAML configuration files, which can be managed with Helm charts. The charts themselves may also be versioned, for different purposes or over time.
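
As a minimal sketch of that last step, the Python function below builds a Kubernetes `apps/v1` Deployment that pins an exact image tag and records the Helm chart name and version in labels. It assumes the standard `app.kubernetes.io/*` recommended labels and the `helm.sh/chart` label convention; the registry path, app name, and versions are hypothetical placeholders. It emits JSON, which `kubectl` accepts alongside YAML.

```python
import json
from typing import Any, Dict


def deployment_manifest(app: str, image: str, tag: str, chart: str,
                        chart_version: str, replicas: int = 2) -> Dict[str, Any]:
    """Build an apps/v1 Deployment that pins the image tag and records the chart version."""
    # Keep the selector minimal: it is immutable once the Deployment exists.
    selector = {"app.kubernetes.io/name": app}
    labels = {
        **selector,
        "app.kubernetes.io/version": tag,
        "helm.sh/chart": f"{chart}-{chart_version}",
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": dict(selector)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {"containers": [{"name": app, "image": f"{image}:{tag}"}]},
            },
        },
    }


if __name__ == "__main__":
    manifest = deployment_manifest(
        app="shop-web",
        image="registry.example.com/docker-local/shop-web",  # hypothetical registry path
        tag="2.1.0",
        chart="shop",
        chart_version="1.4.0",
    )
    print(json.dumps(manifest, indent=2))
```

Pinning the tag and labeling the chart version means anything running in the cluster can be traced back to a specific image and chart release.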

JFrog Artifactory serves as a Kubernetes registry

A successful pattern we see is to use Artifactory as your “Kubernetes Registry”: it gives you insight into your code-to-cluster process while tracking how each layer of each application relates to the others. Artifactory supports 25+ different technologies in one system, with one metadata model, one promotion flow, and strong relationships between artifacts.
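
To illustrate what “one promotion flow” across technologies can mean, here is a hypothetical Python sketch in which a build groups artifacts of several package types and is promoted to the next stage as a single unit. The stage names, the `<type>-<stage>` repository naming, and the `Build`/`promote` names are all assumptions for illustration; this is not Artifactory’s promotion API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical stage names; real pipelines define their own.
STAGES = ("dev", "staging", "prod")


@dataclass
class Build:
    """A build groups artifacts of different package types under one name and number."""
    name: str
    number: int
    artifacts: List[Tuple[str, str]]   # (package type, artifact path)
    stage: str = "dev"
    history: List[str] = field(default_factory=list)


def promote(build: Build, target: str) -> List[Tuple[str, str]]:
    """Promote every artifact in the build to `target` in one step.

    Returns the (target repository, artifact path) pairs a real system would copy
    or move. The '<type>-<stage>' repository naming is an assumption for this sketch.
    """
    if target not in STAGES:
        raise ValueError(f"unknown stage: {target!r}")
    if STAGES.index(target) != STAGES.index(build.stage) + 1:
        raise ValueError(f"cannot promote {build.stage!r} directly to {target!r}")
    moves = [(f"{kind}-{target}", path) for kind, path in build.artifacts]
    build.history.append(f"{build.stage}->{target}")
    build.stage = target
    return moves


if __name__ == "__main__":
    build = Build("shop", 42, [("helm", "shop-1.4.0.tgz"),
                               ("docker", "shop-web/2.1.0"),
                               ("npm", "express-4.18.2.tgz")])
    for repo, path in promote(build, "staging"):
        print(f"{path} -> {repo}")
```

Because the chart, the images, and the packages move together, no stage ever holds a chart that points at an image which was never promoted with it.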

Contrast this with using a Docker registry as the main repository for Kubernetes application artifacts. In that setup, you lose the ability to trace dependencies across Docker images (whether they belong to different apps, different components, or simply different versions over time). A Docker registry is necessary but not sufficient to collect and manage the artifacts for your Kubernetes app: on its own, it cannot trace an image’s content, its dependencies, or its relationships with other Docker images.
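
A typical question that needs those cross-image relationships is “which images and charts include this package version?”, for example when a vulnerability is reported. The Python sketch below answers it by inverting recorded dependency edges and walking them upward. It assumes the edges already exist (which is exactly what a plain image registry does not record); the function names and the sample data are hypothetical.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, Set, Tuple

Artifact = Tuple[str, str, str]  # (package type, name, version)


def reverse_index(edges: Dict[Artifact, Iterable[Artifact]]) -> Dict[Artifact, Set[Artifact]]:
    """Invert 'parent was built from child' edges into 'child is used by parent'."""
    used_by: Dict[Artifact, Set[Artifact]] = defaultdict(set)
    for parent, children in edges.items():
        for child in children:
            used_by[child].add(parent)
    return used_by


def affected_by(edges: Dict[Artifact, Iterable[Artifact]], target: Artifact) -> Set[Artifact]:
    """Everything that directly or transitively includes `target`, found by a
    breadth-first walk over the reverse index."""
    used_by = reverse_index(edges)
    affected: Set[Artifact] = set()
    queue = deque([target])
    while queue:
        for parent in used_by.get(queue.popleft(), ()):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected


# Illustrative data: two images share a base image; a third image uses a newer libssl3.
OLD_SSL = ("deb", "libssl3", "3.0.2")
BASE = ("docker", "base-node", "18.0.0")
WEB = ("docker", "shop-web", "2.1.0")
WORKER = ("docker", "shop-worker", "1.3.0")
CHART = ("helm", "shop", "1.4.0")
REPORT = ("docker", "report", "0.9.0")
EDGES = {
    CHART: [WEB, WORKER],
    WEB: [BASE, ("npm", "express", "4.18.2")],
    WORKER: [BASE],
    BASE: [OLD_SSL],
    REPORT: [("deb", "libssl3", "3.0.11")],
}

if __name__ == "__main__":
    for artifact in sorted(affected_by(EDGES, OLD_SSL)):
        print(*artifact)
```

The lookup is version-aware: the `report` image is not flagged because it depends on a different `libssl3` version, while the shared base image, both app images, and the Helm chart that deploys them are.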

Final tips before taking your Kubernetes journey!

If you’re running Kubernetes apps, you should know what’s running in each application from top to bottom. Docker images are a very important part of packaging the runtime versions, but only one part. Don’t forget to track and store every layer of the app, and plan how you will connect these pieces during development and release.