Create Your Software Distribution “Fast Lane” with Distribution Edges

Accelerating software distribution is a critical part of the modern DevOps stack. Today's application development practices create new challenges for distribution at scale, leading organizations to rethink their software distribution infrastructure.

JFrog Distribution enables enterprises to create fast, scalable, converged software distribution infrastructure with Distribution Edges.

In this post, we’ll explore the key use cases and deployment topologies for Distribution Edges and how they are used as part of enterprises’ distribution infrastructure.

What are Distribution Edges?

Deployed as part of your hybrid distribution topology, Distribution Edges are read-only Artifactory repositories that provide local, low-latency, governed consumption points for distributed binaries, with optimized download speeds and a built-in registry.

Distribution Edges can be deployed in any environment:

- Self-hosted: on your on-premises or cloud infrastructure

- SaaS: consumed as a managed instance on the public cloud, across multiple clouds and regions. This option suits users who do not want to manage the edge infrastructure themselves. Cloud Distribution Edges eliminate management overhead and lower TCO, with guaranteed availability, scaling, monitoring, and more handled as a service by the JFrog Platform.

Distribution Edges provide:

- Faster downloads: local, low-latency consumption points for distributed content. Optional CDN and PDN fronts further accelerate downloads and improve concurrency.

- Hybrid distribution: support for hybrid workflows that span on-prem and SaaS environments and mixed network topologies.

- Governed, secure distribution: full RBAC and audit trail, plus tracking for both public (open) and authenticated downloads.

- Configurable download restrictions: geo-based or IP blacklist/whitelist rules, plus signed URLs for time-limited download availability.

- Improved performance and resilience at scale: downloads are offloaded from the source to local consumption points.
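As a rough illustration of how an IP blacklist/whitelist decision can be evaluated, here is a minimal Python sketch using the standard `ipaddress` module. It is not JFrog's implementation or configuration format: the `is_download_allowed` function and its precedence rules (the blacklist wins, and an empty whitelist permits every address that is not blacklisted) are assumptions chosen for the example.

```python
import ipaddress
from typing import Iterable, List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


def _parse(networks: Iterable[str]) -> List[Network]:
    # strict=False tolerates host bits in entries such as "10.0.0.1/8".
    return [ipaddress.ip_network(n, strict=False) for n in networks]


def is_download_allowed(client_ip: str,
                        whitelist: Iterable[str],
                        blacklist: Iterable[str]) -> bool:
    """Hypothetical policy helper, not the JFrog configuration format.

    Blacklisted addresses are always rejected; if the whitelist is empty,
    every other address is allowed, otherwise the address must match it.
    """
    addr = ipaddress.ip_address(client_ip)
    # An IPv4 address is never "in" an IPv6 network (and vice versa).
    if any(addr in net for net in _parse(blacklist)):
        return False
    allowed = _parse(whitelist)
    if not allowed:
        return True
    return any(addr in net for net in allowed)


if __name__ == "__main__":
    WHITELIST = ["203.0.113.0/24", "2001:db8::/32"]
    BLACKLIST = ["203.0.113.66/32"]
    print(is_download_allowed("203.0.113.10", WHITELIST, BLACKLIST))  # True
    print(is_download_allowed("203.0.113.66", WHITELIST, BLACKLIST))  # False
    print(is_download_allowed("198.51.100.7", WHITELIST, BLACKLIST))  # False
```

Putting the blacklist check first means a single blocked address can be carved out of an otherwise whitelisted range.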

Use cases for Distribution Edges

Let’s review some of the key use cases and their corresponding topologies:

“Download Center” for external distribution

Many of our customers are using Distribution Edges to provide a “Download Center” for sharing software binaries externally: with the ecosystem, or with specific customers and partners.

For example:

- Publishing base images, plugins, SDKs, or OSS for public download by the general developer ecosystem.

- Distributing custom software (flavors of drivers, custom apps, etc.) to specific, authenticated partners or customers. For example, ISVs share their software with their customers, who then deploy it in their own environments.

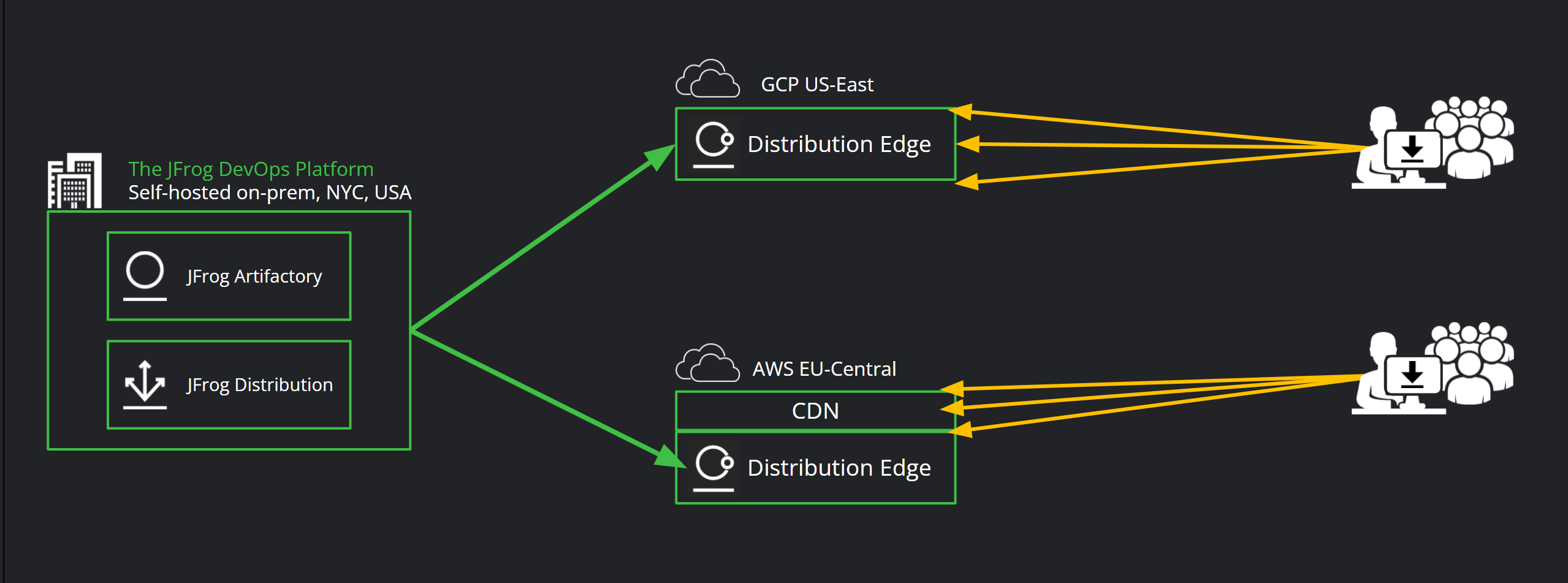

In this scenario, customers use cloud SaaS Distribution Edges: for example, one running on AWS US-East to serve their North American ecosystem and another on AWS EU-Central for traffic coming from EMEA. This ensures availability and auto-scaling with a pay-as-you-grow model, with no need for infrastructure management or over-provisioning.

They can also run one of their Download Center Distribution Edges on a different cloud, such as GCP US-East, taking a multi-cloud approach that bolsters resilience and supports disaster recovery (DR).
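The regional, multi-cloud layout described above amounts to a preference list of edges per client region. The sketch below assumes hypothetical edge names and `example.com` URLs, plus a `healthy` set supplied by some external health check; in a real deployment this selection would more likely live in DNS or a global load balancer than in client code.

```python
from typing import Dict, List, Set

# Hypothetical edge endpoints; real Edge URLs depend on your JFrog setup.
EDGES: Dict[str, str] = {
    "aws-us-east": "https://edge-us.example.com",
    "aws-eu-central": "https://edge-eu.example.com",
    "gcp-us-east": "https://edge-gcp-us.example.com",
}

# Per-region preference order: primary edge first, then DR fallbacks.
# The GCP edge is the first fallback for North America (multi-cloud DR).
ROUTES: Dict[str, List[str]] = {
    "NA": ["aws-us-east", "gcp-us-east", "aws-eu-central"],
    "EMEA": ["aws-eu-central", "aws-us-east", "gcp-us-east"],
}


def pick_edge(region: str, healthy: Set[str]) -> str:
    """Return the base URL of the first healthy edge for a client region.

    Unknown regions fall back to the NA preference list (an assumption).
    """
    for edge in ROUTES.get(region, ROUTES["NA"]):
        if edge in healthy:
            return EDGES[edge]
    raise RuntimeError(f"no healthy edge available for region {region!r}")


if __name__ == "__main__":
    all_up = set(EDGES)
    print(pick_edge("NA", all_up))              # https://edge-us.example.com
    print(pick_edge("NA", all_up - {"aws-us-east"}))  # https://edge-gcp-us.example.com
    print(pick_edge("EMEA", {"aws-eu-central"}))      # https://edge-eu.example.com
```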

Using cloud resources also makes it easier for organizations to provide external access that is separated from their internal environments, without heavy lifting around network management and access configuration.

Edges also support an optional CDN front: enabling the CloudFront CDN on top of the AWS edges further accelerates downloads for end users across the globe.

Your JFrog DevOps Platform (self-hosted or SaaS) manages the distribution workflow to the cloud edges. As builds are promoted toward release readiness, you can select the final release candidates from their repositories in the Artifactory GUI, create your Release Bundle, and distribute the packages, along with the SBOM, to the Cloud Distribution Edges.

Customers often automate this through the API, appending labels at the different CI/CD stages as builds are promoted. Once binaries carry a label showing they have reached an approved state and are ready for publishing to the Edges (e.g., a label along the lines of “RC1-Approved”), the Release Bundle and its distribution transaction are created automatically.
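The label-gated flow can be sketched as plain logic: CI stages add labels, and only builds carrying the approval label are gathered into a bundle. Everything here is hypothetical: the `Build` record, `add_label` (a stand-in for the real API call), and the bundle dictionary, which is not the actual JFrog Release Bundle payload. In a real pipeline the final step would call the JFrog REST API or CLI, whose exact commands are not shown.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

APPROVAL_LABEL = "RC1-Approved"  # label name taken from the example above


@dataclass
class Build:
    name: str
    number: str
    labels: Set[str] = field(default_factory=set)


def add_label(build: Build, label: str) -> None:
    """Stand-in for the API call a CI stage would make to label a build."""
    build.labels.add(label)


def ready_for_release(builds: List[Build],
                      label: str = APPROVAL_LABEL) -> List[Build]:
    """Keep only builds that have reached the approved state."""
    return [b for b in builds if label in b.labels]


def create_bundle_spec(bundle_name: str, version: str,
                       builds: List[Build]) -> Dict:
    """Hypothetical Release Bundle description (not the real JFrog format)."""
    return {
        "name": bundle_name,
        "version": version,
        "builds": [{"name": b.name, "number": b.number} for b in builds],
    }


builds = [Build("sdk", "41"), Build("sdk", "42"), Build("plugin", "7")]
add_label(builds[1], "tests-passed")   # intermediate CI stage
add_label(builds[1], APPROVAL_LABEL)   # final approval gate
add_label(builds[2], APPROVAL_LABEL)

spec = create_bundle_spec("download-center", "1.0.0", ready_for_release(builds))
print(spec["builds"])  # [{'name': 'sdk', 'number': '42'}, {'name': 'plugin', 'number': '7'}]
```

Keying the gate on a single, well-known label keeps the automation simple: any stage can promote a build, but only the final approval triggers publishing.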

Distribution Edges enable customers to set download restrictions on who can consume the distributed packages. These restrictions can be based on:

- Geography: for customers with export controls, or for those that direct different regions or languages to specific versions of the software.

- IP blacklist/whitelist: for controls based on IP ranges or specific addresses.

- Signed URLs: time-limited download availability. Customers use these to share certain packages with anonymous users or partners and have access revoked after a set time has passed (for entitlement, security, or other reasons).
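Signed URLs are a general technique: the server signs the resource path together with an expiry timestamp, then rejects any link whose signature doesn't match or whose expiry has passed. The sketch below shows that idea with HMAC-SHA256 from Python's standard library. It is an illustration, not the scheme JFrog uses; the `expires`/`sig` query parameters, the shared `SECRET`, and both helper functions are assumptions.

```python
import hashlib
import hmac
import time
from typing import Optional
from urllib.parse import parse_qs, urlencode, urlsplit

SECRET = b"replace-with-a-real-secret"  # hypothetical shared signing key


def _signature(path: str, expires: int, key: bytes) -> str:
    # Bind the signature to both the resource path and the expiry time.
    msg = f"{path}:{expires}".encode()
    return hmac.new(key, msg, hashlib.sha256).hexdigest()


def sign_url(base: str, path: str, ttl_seconds: int,
             key: bytes = SECRET, now: Optional[float] = None) -> str:
    """Build a link to `path` (must start with "/") valid for ttl_seconds."""
    now = time.time() if now is None else now
    expires = int(now) + ttl_seconds
    query = urlencode({"expires": expires, "sig": _signature(path, expires, key)})
    return f"{base}{path}?{query}"


def verify_url(url: str, key: bytes = SECRET,
               now: Optional[float] = None) -> bool:
    """Accept the link only if it is unexpired and its signature matches."""
    now = time.time() if now is None else now
    parts = urlsplit(url)
    params = parse_qs(parts.query)
    try:
        expires = int(params["expires"][0])
        sig = params["sig"][0]
    except (KeyError, ValueError, IndexError):
        return False  # malformed link
    if now > expires:
        return False  # link has expired
    expected = _signature(parts.path, expires, key)
    return hmac.compare_digest(sig, expected)  # constant-time comparison


url = sign_url("https://edge.example.com", "/artifacts/sdk-1.2.0.tgz",
               ttl_seconds=3600, now=1_000_000)
print(verify_url(url, now=1_000_100))  # True: within the hour
print(verify_url(url, now=1_004_000))  # False: expired
print(verify_url(url.replace("sdk-1.2.0", "sdk-9.9.9"), now=1_000_100))  # False: path tampered
```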

Software distribution between vendors in a complex supply chain

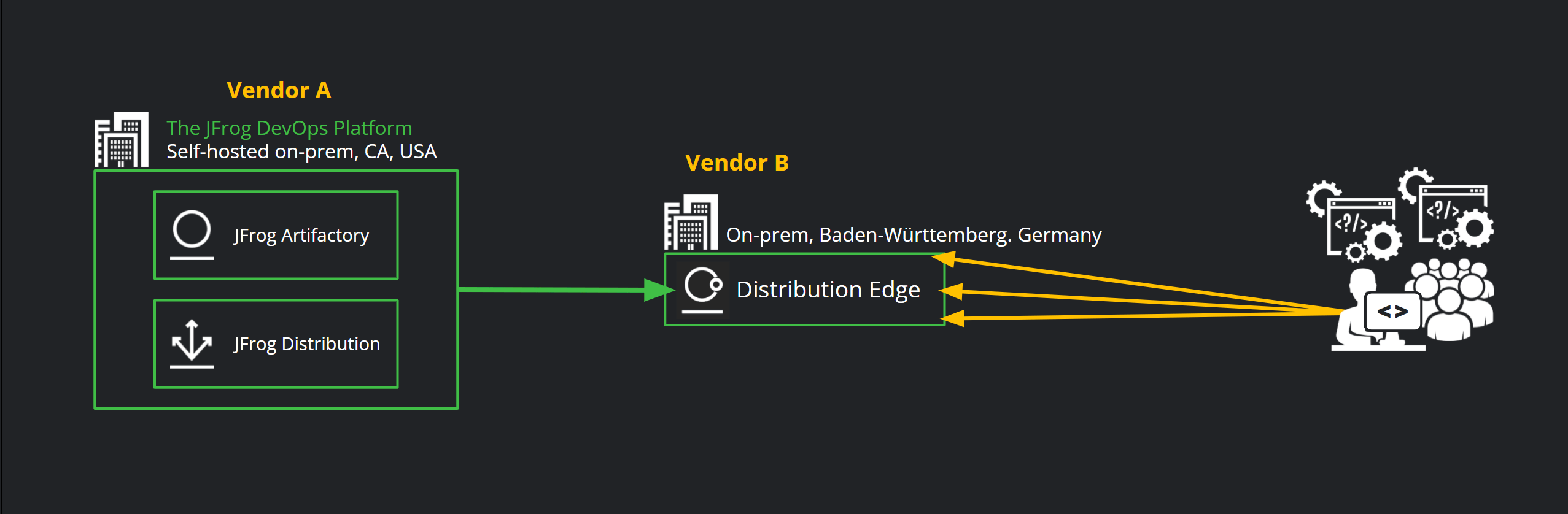

Many customers in industries with complex supply chains and product development lifecycles – such as automotive, embedded devices, healthcare, and more – use Distribution Edges as a way to collaborate and share builds with the next vendor in the PLM chain.

For example, Vendor A in the automotive product lifecycle chain could be developing builds for the tire sensor components, and then needs to distribute them externally to Vendor B for continued development and testing. Vendor B then adds the temperature sensor, analytics reporting, or other capabilities that integrate with Vendor A’s work.

In this scenario, Distribution Edges are often self-hosted and deployed in Vendor B’s own infrastructure (for IP, security, data sovereignty, and other considerations), commonly at their development site. Vendor A then triggers a distribution transaction that delivers the builds to the receiving Distribution Edge, where Vendor B’s developers consume them.

The benefits of this topology go beyond distribution speed or the fact that both distribution and consumption are RBAC-enabled (ensuring only authorized users can access the packages as the product is being developed).

Since Distribution Edges are read-only Artifactory repositories, this pattern enables direct repository-to-repository distribution, along with package integrity and SBOM visibility. Distribution Edges enable immediate ingestion of the builds by thousands of developers in the next stage of the lifecycle (Vendor B), who can start working on the most recent, approved builds right in their Artifactory instance. Without Distribution Edges, these handoffs between vendors in a complex supply chain are extremely manual and error-prone, and require many cycles of syncing between the different parties.

For example, when vendors used ShareFile or other MFT processes to “send” these binaries, the receiving end first had to receive the packages, which could take a long time to download (particularly as container images and other modern apps produce much heavier binaries). Then thousands of developers had to download these builds and upload them to their repositories before they could start working on them.

Not only does this waste cycles, time, and network capacity, it also introduces risk into the system. It’s harder to verify the authenticity of these individually downloaded and imported files, to ensure they haven’t been tampered with, or even to confirm that Vendor A hasn’t already updated and re-sent the packages in the time between the original batch and when the builds were downloaded and processed on the receiving end(s).
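The tampering risk described above is commonly addressed by comparing each file's cryptographic digest against a manifest. Here is a minimal sketch of that check with SHA-256, assuming a hypothetical manifest that maps file names to expected hex digests and arrives over a trusted channel. It shows the generic technique, not how JFrog Distribution verifies packages internally. Note that a checksum detects modification but does not prove who produced the file; that is what signatures add.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in 1 MiB chunks so large images don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_mismatches(directory: Path, manifest: Dict[str, str]) -> List[str]:
    """Return manifest entries that are missing or whose SHA-256 differs."""
    bad = []
    for name, expected in manifest.items():
        candidate = directory / name
        if not candidate.is_file() or sha256_of(candidate) != expected.lower():
            bad.append(name)
    return bad


with tempfile.TemporaryDirectory() as tmp:
    received = Path(tmp)
    (received / "tire-sensor.bin").write_bytes(b"firmware v1")
    manifest = {
        "tire-sensor.bin": hashlib.sha256(b"firmware v1").hexdigest(),
        "missing.bin": "00",  # listed in the manifest but never received
    }
    print(find_mismatches(received, manifest))  # ['missing.bin']
```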

Local caching middle-layer for accelerating production deployments

When it’s time to release into production, customers often first distribute the binaries required for the deployment sequence to Distribution Edges deployed close to, or co-located with, their production clusters (either on-prem or in the cloud).

For example, a bank operating its own data center in NYC can deploy a Distribution Edge in the same data center, next to its production environment. When a new release begins, the Release Manager first distributes the packages for the new rollout to the Edge. All production nodes point to that Distribution Edge and download the binaries and their dependencies (since the Edge is also a repository) to start the deployment sequence.

Because Distribution Edges are read-only, with full RBAC and an audit trail, the customer can meet separation-of-duties requirements. And because the Edges provide a local, low-latency download point, they greatly accelerate the deployment sequence, particularly when releases need to be rolled out across a very large infrastructure footprint.

The same middle-layer topology of Distribution Edges can also serve distribution to remote development teams across inbound-only networks. Distribution transactions are accelerated with patented network optimization capabilities, so binaries can be shared, for example, between a development team in NYC and a QA team in China, with fast downloads at the China consumption point.

See it in Action!

To see a demo of Distribution Edges and discover how you can extend your use of Artifactory to create your powerful distribution infrastructure “fast lane”, watch this recent webinar recording.

Start your free trial to try the JFrog DevOps Platform and JFrog Distribution for yourself, or schedule a demo to discuss your specific use case and architecture with one of our Solutions Architects.