Breaking Silos: Unifying DevOps and MLOps into a Cohesive Software Supply Chain – Part 1

From Separate Pipelines to a Unified Software Supply Chain

As businesses realized the potential of artificial intelligence (AI), the race began to incorporate machine learning operations (MLOps) into their commercial strategies. But integrating machine learning (ML) into the real world proved challenging, and the vast gap between development and deployment became clear. In fact, research from Gartner tells us 85% of AI and ML projects fail to reach production.

In this blog series, we’ll discuss the importance of blending DevOps best practices with MLOps, bridging the gap between traditional software development and ML to enhance an enterprise’s competitive edge and improve decision-making with data-driven insights. Part one exposes the challenges of separate DevOps and MLOps pipelines and outlines a case for integration. Our goal in this first of three posts is to help you understand exactly what’s at stake if you continue to adhere to the status quo.

Challenges of Separate Pipelines

Traditionally, DevOps and MLOps teams operate with separate workflows, tools, and objectives. Unfortunately, this trend of maintaining distinct DevOps and MLOps pipelines leads to numerous inefficiencies and redundancies that negatively impact software delivery.

1. Inefficiencies in Workflow Integration

DevOps pipelines are designed to optimize the software development lifecycle (SDLC), focusing on continuous integration, continuous delivery (CI/CD), and operational reliability. While there are certainly overlaps between the traditional SDLC and that of model development, MLOps pipelines involve unique stages like data preprocessing, model training, experimentation, and deployment, which require specialized tools and workflows. This separation creates bottlenecks when integrating ML models into traditional software applications.

For example, data scientists may work in Jupyter notebooks, while software engineers use CI/CD tools like Jenkins or GitLab CI. Integrating ML models into the overall application often requires a manual and error-prone process, as models need to be converted, validated, and deployed in a manner that fits within the existing DevOps framework.

2. Redundancies in Tooling and Resources

Both DevOps and MLOps have similar goals of automation, versioning, and deployment, but they rely on separate tools and processes. DevOps commonly leverages tools such as Docker, Kubernetes, and Terraform, while MLOps may use ML-specific tools like MLflow, Kubeflow, and TensorFlow Serving. This lack of unified tooling means that teams often duplicate efforts to achieve the same outcomes.

For instance, versioning in DevOps is typically done using source control systems like Git, while MLOps may use additional versioning for datasets and models. This redundancy leads to unnecessary overhead in terms of infrastructure, management, and cost, as both teams need to maintain different systems for essentially similar purposes—version control, reproducibility, and tracking.
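One way to picture a shared versioning scheme is to give datasets and model files the same kind of immutable, content-derived identifier that a Git commit gives code. The sketch below is a minimal illustration in Python, not a description of how Git, MLflow, or any other tool works internally; the helper name `artifact_version` and the `sha256:` prefix are assumptions made for this example.

```python
import hashlib
from pathlib import Path


def artifact_version(path: str, chunk_size: int = 1 << 20) -> str:
    """Return a content-derived version ID for a model or dataset file.

    Hypothetical helper: identical bytes always yield the same ID,
    so one scheme can cover code bundles, datasets, and model files.
    """
    digest = hashlib.sha256()
    with Path(path).open("rb") as handle:
        # Read in chunks so large model files need not fit in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return f"sha256:{digest.hexdigest()}"
```

Because the ID depends only on file contents, two teams that hash the same model file arrive at the same version string without coordinating through separate tracking systems.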

3. Lack of Synergy Between Teams

The lack of integration between DevOps and MLOps pipelines also creates silos between engineering, data science, and operations teams. These silos result in poor communication, misaligned objectives, and delayed deployments. Data scientists may struggle to get their models production-ready due to the absence of consistent collaboration with software engineers and DevOps.

Moreover, because ML models are not treated as standard software artifacts, they may bypass crucial steps of testing, security scanning, and quality assurance that are typical in a DevOps pipeline. This inconsistency can lead to quality issues, unexpected model behavior in production, and a lack of trust between teams.

4. Deployment Challenges and Slower Iteration Cycles

The disjointed state of DevOps and MLOps also affects deployment speed and flexibility. In a traditional DevOps setting, CI/CD ensures frequent and reliable software updates. However, with ML, model deployment requires retraining, validation, and sometimes even re-architecting the integration. This mismatch results in slower iteration cycles, as each pipeline operates independently, with distinct sets of validation checks and approvals.

For instance, an engineering team might be ready to release a new feature, but if an updated ML model is needed, it might delay the release due to the separate MLOps workflow, which involves retraining and extensive testing. This leads to slower time-to-market for features that rely on machine learning components.

5. Difficulty in Maintaining Consistency and Traceability

Having separate DevOps and MLOps configurations makes it difficult to maintain a consistent approach to versioning, auditing, and traceability across the entire software system. In a typical DevOps pipeline, code changes are tracked and easily audited. In contrast, ML models have additional complexities like training data, hyperparameters, and experimentation, which often reside in separate systems with different logging mechanisms.

This lack of end-to-end traceability makes troubleshooting issues in production more complicated. For example, if a model behaves unexpectedly, tracking down whether the issue lies in the training data, model version, or a specific part of the codebase can become cumbersome without a unified pipeline.
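To make the troubleshooting scenario concrete, here is a minimal sketch of what a single lineage record could look like if code, data, and model versions were tracked together. The `LineageRecord` class, its field names, and the sample values are all hypothetical; a real pipeline would populate them from its own source control and artifact systems.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class LineageRecord:
    """What went into one deployed model (all field names are illustrative)."""
    code_commit: str
    training_data_version: str
    model_version: str
    hyperparameters_hash: str


def changed_inputs(previous: LineageRecord, current: LineageRecord) -> list[str]:
    """List the lineage fields that differ between two deployments."""
    return [
        f.name
        for f in fields(LineageRecord)
        if getattr(previous, f.name) != getattr(current, f.name)
    ]


# Made-up sample values for two deployments of the same service.
last_good = LineageRecord("a1b2c3", "data-2024-05", "model-41", "hp-9f")
misbehaving = LineageRecord("a1b2c3", "data-2024-06", "model-42", "hp-9f")
print(changed_inputs(last_good, misbehaving))
```

With the sample values above, the comparison reports `training_data_version` and `model_version` as the only differences, which points the investigation at the data and the retrained model rather than at the application code.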

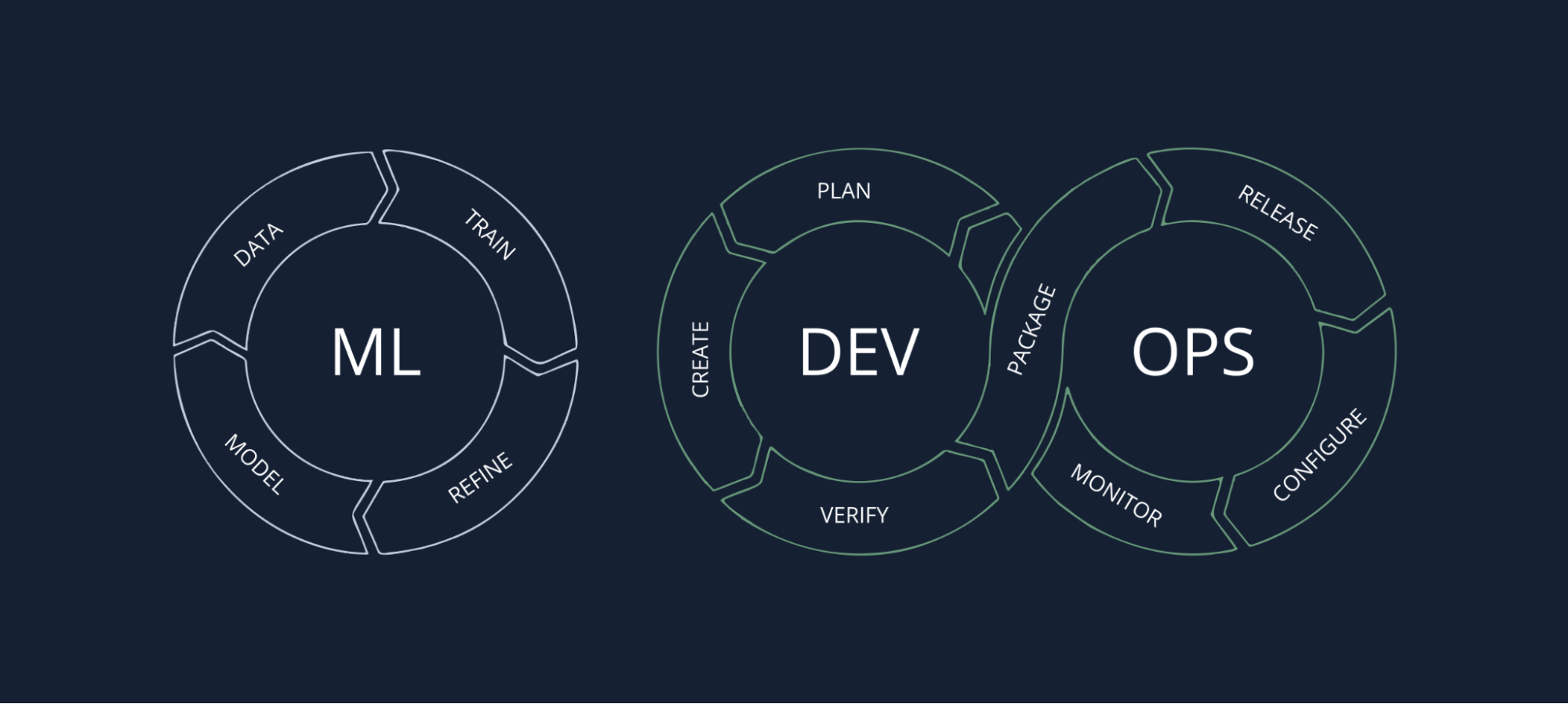

Separate ML and DevOps pipelines

The Case for Integration: Why Merge DevOps and MLOps?

As you can see, maintaining siloed DevOps and MLOps pipelines results in inefficiencies, redundancies, and a lack of collaboration between teams, leading to slower releases and inconsistent practices. Integrating these pipelines into a single, cohesive Software Supply Chain would help address these challenges by bringing consistency, reducing redundant work, and fostering better cross-team collaboration.

Shared End Goals of DevOps and MLOps

DevOps and MLOps share the same overarching goals: rapid delivery, automation, and reliability. Although their areas of focus differ—DevOps concentrates on traditional software development while MLOps focuses on machine learning workflows—their core objectives align in the following ways:

- Rapid Delivery

  - Both DevOps and MLOps strive to enable frequent, iterative releases to accelerate time-to-market. DevOps achieves this through the continuous integration and delivery of code changes, while MLOps aims to expedite the cycle of model development, training, and deployment.

  - Rapid delivery in DevOps ensures that new software features are shipped as quickly as possible. Similarly, in MLOps, the ability to deliver updated models with improved accuracy or behavior allows businesses to respond swiftly to changes in data or business needs.

- Automation

  - Automation is central to both practices as it reduces manual intervention and minimizes the potential for human error. DevOps automates testing, building, and deploying software to ensure consistency, efficiency, and reliability.

  - In MLOps, automation is equally crucial. Automating data ingestion, model training, hyperparameter tuning, and deployment allows data scientists to focus more on experimentation and improving model performance rather than dealing with repetitive tasks. Automation in MLOps also ensures reproducibility, which is critical for managing ML models in a production environment.

- Reliability

  - Both DevOps and MLOps emphasize reliability in production. DevOps uses practices like automated testing, monitoring, and infrastructure as code to maintain software stability and mitigate downtime.

  - MLOps aims to maintain the reliability of deployed models, ensuring that they perform as expected in changing environments. Practices such as model monitoring, automatic retraining, and drift detection are part of MLOps and help the ML system stay robust and reliable over time.
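As an illustration of the drift detection mentioned under Reliability, the sketch below computes a Population Stability Index (PSI) for one numeric feature. PSI is only one of several possible drift measures and is chosen here as an assumption, not because this article prescribes it; the function name, the bin count, and the `epsilon` floor are likewise illustrative.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    epsilon: float = 1e-6,
) -> float:
    """Compare two samples of one numeric feature; larger means more drift."""
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # guard against a constant feature

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for value in values:
            # Clamp so values outside the training range land in the edge bins.
            index = min(max(int((value - low) / width), 0), bins - 1)
            counts[index] += 1
        # epsilon keeps empty bins from producing log(0) or division by zero.
        return [max(count / len(values), epsilon) for count in counts]

    exp_p, act_p = proportions(expected), proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(exp_p, act_p))
```

A monitoring job could compute this between the training sample and a recent window of production inputs and raise an alert, or trigger retraining, when the score crosses a threshold the team has tuned for that feature.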

Treating ML Models as Artifacts in the Software Supply Chain

In traditional DevOps, treating all software components (binaries, libraries, configuration files) as artifacts is well established. These artifacts are versioned, tested, and promoted through different environments (e.g., staging, production) as part of a cohesive software supply chain. Applying the same approach to ML models can significantly streamline workflows and improve cross-functional collaboration. Here are four key benefits of treating ML models as artifacts:

1. Creates a Unified View of All Artifacts

Treating ML models as artifacts means integrating them into the same systems used for other software components, such as artifact repositories and CI/CD pipelines. This approach allows models to be versioned, tracked, and managed in the same way as code, binaries, and configurations. A unified view of all artifacts creates consistency, enhances traceability, and makes it easier to maintain control over the entire software supply chain.

For instance, versioning models alongside code means that when a new feature is released, the corresponding model version used for the feature is well-documented and reproducible. This reduces confusion, eliminates miscommunication, and allows teams to identify which versions of models and code work together seamlessly.

2. Streamlines Workflow Automation

Integrating ML models into the larger software supply chain ensures that the automation benefits seen in DevOps extend to MLOps as well. By automating the processes of training, validating, and deploying models, ML artifacts can move through a series of automated steps—from data preprocessing to final deployment—similar to the CI/CD pipelines used in traditional software delivery.

This integration means that when software engineers push a code change that affects the ML model, the same CI/CD system can trigger retraining, validation, and deployment of the model. By leveraging the existing automation infrastructure, organizations can achieve end-to-end delivery that includes all components—software and models—without adding unnecessary manual steps.
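The trigger logic described here can be sketched as a small decision function that a CI job might call with the list of files changed in a commit. This is a minimal sketch under stated assumptions: the directory patterns in `MODEL_PATHS` and the stage names are hypothetical and do not correspond to any particular CI system's configuration.

```python
from fnmatch import fnmatch

# Hypothetical repository layout; adjust the patterns to your own project.
MODEL_PATHS = ("training/*", "features/*", "data/schema/*")


def stages_to_run(changed_files: list[str]) -> list[str]:
    """Decide which pipeline stages a commit should trigger."""
    stages = ["build", "test"]  # every commit gets the standard CI stages
    if any(fnmatch(path, pattern) for path in changed_files for pattern in MODEL_PATHS):
        # A model-affecting change adds the ML stages to the same pipeline run.
        stages += ["retrain", "validate_model"]
    stages.append("deploy")
    return stages
```

The design choice worth noting is that the ML stages are added to the same run rather than started in a separate pipeline, so the code change and the retrained model are validated and deployed together.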

3. Enhances Collaboration Between Teams

A major challenge of maintaining separate DevOps and MLOps pipelines is the lack of cohesion between data science, engineering, and DevOps teams. Treating ML models as artifacts within the larger software supply chain fosters greater collaboration by standardizing processes and using shared tooling. When everyone uses the same infrastructure, communication improves, as there is a common understanding of how components move through development, testing, and deployment.

For example, data scientists can focus on developing high-quality models without worrying about the nuances of deployment, as the integrated pipeline will automatically take care of packaging and releasing the model artifact. Engineers, on the other hand, can treat the model as a component of the broader application, version-controlled and tested just like other parts of the software. This shared perspective enables more efficient handoffs, reduces friction between teams, and ensures alignment on project goals.

4. Improves Compliance, Security, and Governance

When models are treated as standard artifacts in the software supply chain, they can undergo the same security checks, compliance reviews, and governance protocols as other software components. DevSecOps principles—embedding security into every part of the software lifecycle—can now be extended to ML models, ensuring that they are verified, tested, and deployed in compliance with organizational security policies.

This is particularly important as models become increasingly integral to business operations. By ensuring that models are scanned for vulnerabilities, validated for quality, and governed for compliance, organizations can mitigate risks associated with deploying AI/ML in production environments.
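A promotion gate of this kind can be sketched as a function that refuses to release a model artifact until its checksum matches and every required check has passed. The `ModelArtifact` class, the check names in `REQUIRED_CHECKS`, and the message wording are assumptions made for illustration; an actual policy would come from the organization's own security and compliance requirements.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ModelArtifact:
    """A model as it sits in an artifact repository (illustrative fields)."""
    name: str
    payload: bytes
    recorded_sha256: str
    passed_checks: set[str] = field(default_factory=set)


# Hypothetical policy: the checks every artifact needs before production.
REQUIRED_CHECKS = {"vulnerability_scan", "quality_validation", "compliance_review"}


def promotion_blockers(artifact: ModelArtifact) -> list[str]:
    """Return the reasons an artifact may not be promoted; empty means go."""
    blockers = []
    if hashlib.sha256(artifact.payload).hexdigest() != artifact.recorded_sha256:
        blockers.append("checksum mismatch: artifact changed after it was recorded")
    for check in sorted(REQUIRED_CHECKS - artifact.passed_checks):
        blockers.append(f"missing check: {check}")
    return blockers
```

Returning a list of reasons instead of a bare true or false gives the pipeline something useful to log, so a blocked release tells the team exactly which check is still outstanding.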

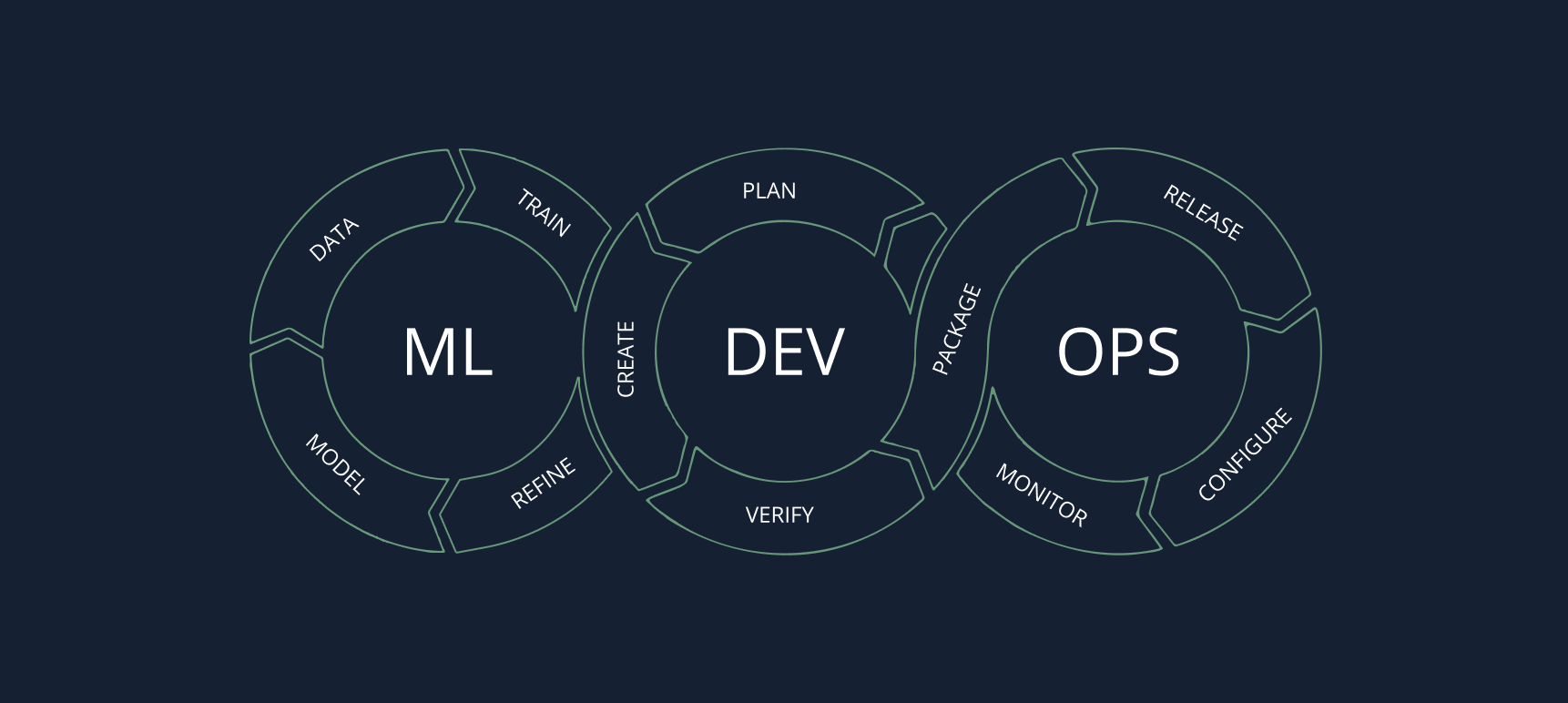

Unified ML and DevOps pipeline

Conclusion

Treating ML models as artifacts within the larger software supply chain replaces the traditional separation of DevOps and MLOps with a unified, cohesive process. This integration streamlines workflows by leveraging existing CI/CD pipelines for all artifacts, enhances collaboration by standardizing processes and infrastructure, and ensures that both code and models meet the same standards for quality, reliability, and security.

By combining DevOps and MLOps into a single Software Supply Chain, organizations can better achieve their shared goals of rapid delivery, automation, and reliability—creating an efficient and secure environment for building, testing, and deploying the entire spectrum of software, from application code to machine learning models. For more on this topic, head over to Part two of the series, where we discuss the benefits and opportunities of a unified software supply chain.