In 2021 over 22 billion records were exposed because of data breaches and numerous other malicious attacks – many of them related to utilization of software supply chain weaknesses and malpractices. Securing the software supply chain becomes a prioritized effort for every organization as well as regulators and standardization bodies across the globe. Such efforts are still being explored and examined to find the right balance between security and fast delivery.

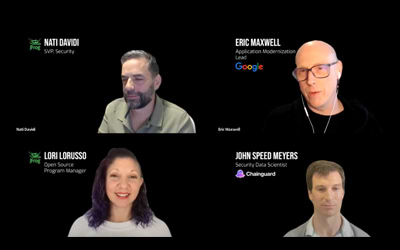

Join Nati Davidi, Senior Vice President of JFrog Security as he discusses the report findings with DORA report authors Eric Maxwell, Application Modernization Lead at Google, and John Speed Meyers, Security Data Scientist at Chainguard.

JFrog is a proud co-sponsor of Google Cloud’s DevOps Research and Assessment (DORA) Accelerate State of DevOps Report. The report is an essential, data-driven asset that examines capabilities and practices that drive software delivery as well as operational and organizational performance.

Leap Left for Security: The DORA Report Roundtable Intro

Lori Lorusso:

Good morning and good afternoon, everyone. My name is Lori Lorusso and thank you for joining us today for Leap Left for Security: the DORA Report Roundtable. So, I am the open source program manager for JFrog and I am very happy to be here today moderating our panel. To that end, I’d like to introduce our panel, so we would like to start with Eric Maxwell.

Eric Maxwell:

Hi, everybody. My name is Eric Maxwell and I lead the DevOps transformation practice at Google. I am also a member of our DORA core research team and an author of the State of DevOps Report and also, the lead author for Google’s Enterprise DevOps Guidebook. Nice to meet everybody and thanks for coming.

Lori Lorusso:

You don’t seem busy at all. Next up, we have John Speed Meyers from Chainguard. John Speed.

John Speed Meyers:

Hey. Thanks so much for having me. My name’s John Speed Meyers. I’m a security data scientist at Chainguard, a software supply chain security company. I participated as a contributor to the security section, and especially the software supply chain security aspects of the security portion, in the DORA Report this year, so glad to be here. Thank you.

Lori Lorusso:

Thank you. And last, but by no means least, Nati Davidi.

Nati Davidi:

Thank you, Lori. Hi, everyone, my name is Nati. I’ve been heading the security product division of JFrog for a bit more than a year, since the acquisition of Vdoo, a company that was focused on binary analysis. Pleasure to meet everyone.

Lori Lorusso:

Awesome. So, I would just like to remind the audience that to make this panel the most engaging that it can be, to get all of your questions answered, you have to ask them, so please use the chat feature to let us know if you have questions. We’ve got some starter ones, but we’d love to hear what your thoughts are to make this panel that much more interesting to you. So, to start, let’s put some personality behind our great panelists and let me just ask this general question. Oh, actually, let’s get started with a high-level overview of the DORA Report and then we’ll get into our panelists’ ideas about DevOps and security. So, Eric, handing it over to you.

Eric Maxwell:

Well, let’s jump to the next slide, please. I think we skipped ahead a couple slides there, if we can start with slide two. There we go. So, just wanted to start out with a brief introduction of the DORA project, for those that are not completely familiar with what we’re doing. DORA stands for DevOps Research and Assessment and we just completed our eighth year of research. Throughout the eight years, we have surveyed 33,000 individuals around the world, across all different industries, and we have taken an academically rigorous approach to examining the capabilities and the practices that allow companies to be high-performing software companies and as a result, high-performing companies in general, which we’ll talk about how we make that connection and how we get from high-performing software to high-performing organizations.

The way that we approach the research is when we look at capabilities, when we have interesting findings, we look, then, in future years to validate those findings. We also use that as an opportunity to go different directions in the research. As an example, last year we decided to double down on reliability and also security. So, this year, in 2022, we wanted to go even deeper, and so then through the project, we went even deeper on topics of reliability and supply chain security, which we will be speaking about in depth here. So, there’s a link there, that’ll take you, down at the bottom, to our DevOps site, where you can find the State of DevOps Report. So, if you don’t mind going to the next slide, one more before that. Maybe our slides are out of sync, that’s fine.

One of the outputs, then, of the research project is, for eight years, or maybe seven, because I think we skipped ’20. Well, no, actually we have put out eight reports. We put out what we call the State of DevOps Report and we publish it so that you can read it. So, again, at that URL that you see there on the screen, you could download this year’s State of DevOps Report and all of the years prior. I would start with this year’s report, but then I also do feel that it’s very interesting to go back to the prior years and to read the prior years’ reports because, like I mentioned, we do go and focus on different things throughout the different years, so there will be different interesting findings and you can see where we were before to where we are now with some of the research and the findings.

So, at a very high level, some of the things that we’ve found are that, like I mentioned, software delivery is a driver of organizational performance. This organizational performance is amplified by companies that are able to have high levels of reliability and high levels of operational performance. We also see that for many, many years now, there’s been three main drivers for software delivery performance and as a result, organizational performance, and that’s culture, reliability, and the use of cloud computing. So, we also see that one of the main things that drives organizational performance is teams that have a culture or an ethos of continuous improvement. So, rather than thinking of your journey in the scope of a large span of time, teams that focus on rolling up their sleeves and just starting to do work have the most success.

So, then to get a little bit more granular into some of the findings, with regards to security practices, what we find is that companies that have low levels of security practices have higher levels of burnout and also, companies that have high levels of security practice have greater organizational performance. We also see that technical practices are a big driver of this success. What we see from a technical practice perspective is that they are multiplicative in nature, and so they add on to each other, meaning that the sum of the different technical capabilities is not the same as the end result when you stack them on top of each other, so think things like trunk-based development and CI/CD, et cetera. In fact we see a 3.8X higher organizational performance in companies that are able to really master and have a high level of technical prowess.

We also see this year, since we tripled down on reliability, that reliability is a big driver for organizational performance. In fact, had we spoken about this topic last year or the year before, if you said to me, “Hey, Eric, what’s a main driver of organizational performance?” I would have said, “Well, software delivery.” This year, what we see through the data is that unless you have that reliability piece, the software delivery performance does not necessarily predict organizational performance, so reliability seems to be a really interesting key point here. Next slide. So, I think mine and yours were mixed up, but I already spoke about this, so we can go down to the next one there. And then I will hand off now to Nati.

Nati Davidi:

Thank you, Eric, for the opening and thank you, Lori, for accommodating it, and John for doing this great effort around DORA. Just a quick personal note, so I was relatively new to DevOps when I joined JFrog, a DevOps company, a bit more than a year ago, but I did spend many years around supply chain security, mostly from the embedded side of things and IoT and exploit mitigation and software vulnerability blocking. Suddenly, we are seeing how these two worlds are merging, and this is very much reflected in the DORA work, in the recent report, and we are also witnessing the recent emergence of bills and regulations and standards around software supply chain security that are heavily linked, as Eric mentioned, to CI/CD and software delivery. This is, for us, amazing to see how it all connects around the liquid software vision of JFrog and how to secure it all.

This is also what I would like to share here now, a little bit of insight into things that we saw around binary security, again, the space we came from. We initially started by analyzing embedded systems, real-time operating systems that have a very clear nature of being a big stack of one or more binaries that are very hard to understand, that are an aggregation of things that are coming through the supply chain in the form of binaries, things that are hard to understand, hard to analyze, hard to decompile or reverse. We see this nature of embedded also applicable to any binary, practically. Looking at the SLSA framework, which is heavily highlighted in the DORA work, we see a great alignment between the JFrog work and the JFrog approach to software supply chain security, which is not only based on security engines, but on the very basic nature of JFrog Artifactory, which is about managing software and managing the flow of the software from the developer all the way to production.

When we try to match between the two, we see there are many points where JFrog, or the solutions of JFrog, can help actually execute the SLSA framework in the real world. Now, again, the original focus of JFrog is a lot around binaries and binaries management. From a security point of view, this is a critical asset to understand and analyze, not only because of the fact that packages are coming into the software in the form of binaries from the outside, as RPMs, as containers, as firmware, it doesn’t really matter, but also because it better reflects what’s going to happen in the real world, in production. Binaries are what is being attacked by the attacker, binaries are what they see when they try to operate against production environments.

Usually, it’s not source that is running there, so analyzing the builds, the containers, the framework, the final releases, the interim releases in a deep manner to see things that are blind spots to source code analysis, to see things that are exactly what is being mapped as threats from A to H by SLSA, and understand them even when there is no metadata around the packages is exactly what we are trying to do. Today, I would like to give two examples through research that we recently published on two different aspects of the importance of analyzing binaries and seeing what’s inside.

The one is how you can find security exposures that can be utilized by attackers because of the lack of awareness around supply chain security and, on the other hand, how you can use binaries to also reduce the developer work by getting a better context of what truly needs to be fixed, versus just fixing everything that the security engines identified. So, I would like to start with the next one, if you can move to the next slide, please, this one. So, our research arm, which is, by the way, one of the biggest in our industry, does a lot of vulnerability research coming from binary analysis methodologies and the automation of it. They actually scraped the token formats of all of the major products. In some cases, they didn’t need to scrape because you could get the guidelines from the documentation of these products, and they generated patterns of these tokens and keys and ran them against eight million open source artifacts in known public repositories.

They were able to find thousands of active tokens and, clearly, reached out directly and confidentially to the maintainers first to make sure that these things were being fixed, and only then did we publish it together. This is only one among many things that we see as, let’s call it, the soft things that are not really covered by the regular [inaudible 00:14:31] approaches or by the regular runtime security approaches, and are falling in between, exactly where DevOps takes the lead, exactly where DevOps builds the infrastructure that might allow the automation of such things. So, this is one example. On the same concept, you can identify misconfigurations of infrastructure [inaudible 00:14:53], things such as Terraform, misconfigurations of applications such as Flask and Django, or of daemons and services such as Apache or NGINX. These are soft things that are not the CVEs or the zero days, these are the things that were done mistakenly, not by the developer necessarily, but by someone else along the way, and can be utilized remotely, in many cases, by the bad actors, so this is one example.
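The pattern-based scan described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not JFrog's scanner: the `TOKEN_PATTERNS` table and the `find_tokens` helper are hypothetical names, the two regular expressions cover just the commonly documented shapes of an AWS access key ID and a GitHub personal access token, and a real scan would add many more patterns plus a check of whether each hit is still active.

```python
import re

# Illustrative patterns only (an assumption of this sketch, not a complete list).
TOKEN_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
}


def find_tokens(text):
    """Return (kind, matched_string) pairs for every token-like string in text."""
    hits = []
    for kind, pattern in TOKEN_PATTERNS.items():
        hits.extend((kind, match.group(0)) for match in pattern.finditer(text))
    return hits


# A file extracted from an artifact, using AWS's documented example key ID.
sample = 'aws_key = "AKIAIOSFODNN7EXAMPLE"\nprint("hello")'
print(find_tokens(sample))
```

Matching a pattern only shows that a string looks like a token. Confirming that it is live, and disclosing it privately to the maintainer first, are separate steps that the sketch leaves out.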

If you can move to the next slide, please. On the other hand, you can see how you can utilize the supply, let’s call it for a moment, the supply chain, the journey of the software from the point it becomes a binary, to truly understand what is applicable to your piece of software. Even though Shift Left is a critical thing, you want to solve things as early as possible, it’s not really feasible to fix everything, it’s not really feasible to identify everything, and source code does not include everything. More than that, after completing the phase of developing the code and compiling it, later on in the process, new threats are coming in, so you need to make sure that the new threats are being checked against the next form of the software, which is binary.

What we did here in this research, we pulled 200 community images from DockerHub, the top ones, the highest strength ones, and we scanned them against the top 10 CVEs by [inaudible 00:16:20], which has, clearly, the highest CVSS, and then we ran what we call the applicability scanners. We are doing contextual analysis on the binaries in a way to check, for each and every CVE, if it truly fulfills the prerequisites for exploitation. I will give one example. If you have Log4j and you do use the vulnerable package, but your first-party code in your container does not call the vulnerable function, but another function which is safe, then you do have the CVE, but you are not susceptible to it.

Our argument is let’s help the developers make sure that they are safe, even if it’s temporarily being safe, they don’t need to panic and go to change everything. Through this approach of identifying only the applicable things through configuration, through code analysis, we found, on this specific group of 200 images, that 78% of the highest strength CVEs are not applicable, are not exploitable. I think it’s a great message, because part of the work that can be done as part of the SLSA work and other initiatives of how to better deal with software supply chain security is to put the developer under the spotlight and help them reduce their effort by utilizing the form of binary to analyze the context. So, these are two examples of things that can be done and they do correspond with the SLSA effort around the integrity checks of the builds and the packages, not only the source code, and also the availability, to some extent.
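The applicability idea, that a CVE only matters if its exploitation prerequisite is actually met, can be illustrated with a toy triage step. This is a sketch under strong simplifying assumptions: the only prerequisite modelled is "the vulnerable function is called", the set of called functions is taken as given (extracting it from a binary is the hard part and is not shown), and `Finding`, `is_applicable`, `triage`, and every identifier in the sample data are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    cve_id: str               # placeholder identifier, not a real CVE
    package: str              # package the scanner matched
    vulnerable_function: str  # function that must be called for exploitation


def is_applicable(finding, called_functions):
    """A finding is applicable only if the vulnerable function is actually called."""
    return finding.vulnerable_function in called_functions


def triage(findings, called_functions):
    """Split findings into (applicable, not_applicable) lists, preserving order."""
    applicable = [f for f in findings if is_applicable(f, called_functions)]
    not_applicable = [f for f in findings if not is_applicable(f, called_functions)]
    return applicable, not_applicable


findings = [
    Finding("CVE-EXAMPLE-1", "examplelog", "examplelog.lookup"),
    Finding("CVE-EXAMPLE-2", "examplezip", "examplezip.extract_all"),
]
# Functions that the first-party code in the image was observed to call.
called = {"examplelog.format", "examplezip.extract_all"}

applicable, not_applicable = triage(findings, called)
print("fix now:", [f.cve_id for f in applicable])
print("present but not applicable:", [f.cve_id for f in not_applicable])
```

Findings in the second list are still present in the image, so they belong on a watch list and should not be discarded: a later code change can make them applicable.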

If you can jump to the next slide, please, I welcome everyone, of course, to visit our website. One of the things that we are doing is to release everything freely to the community in our research at jfrog.com. Not only that, we publish unique articles on every finding: more than 120 zero days in open source libraries to date, including Cassandra database, H2 database, more than 1,600 unique malicious packages and 18,000 aggregated malicious packages, all of these things are available to the community. We also release open source [inaudible 00:18:30] for the highest profile CVEs to check if the infrastructure of the user is susceptible to the CVE. Please use this information, it’s there for everyone to utilize. With that, we can move forward, Lori. I hope I gave some nice insights, more is available on the website, as I said. Thank you.

Lori Lorusso:

Thank you. We can end the slides. So, I appreciate finding out where you’re from and how you got here. I think the one thing that both DORA and what you just talked about, Nati, have in common is community, and making sure the community is up to speed with everything that’s happening and that it’s all open source and that we give back. DORA is telling you what the state of things is, the research team is emphasizing that what the DORA Report is saying is 100% on target. So, since we learned a little bit about you and how you got to JFrog, Eric, will you tell us a little bit about how you got interested in tech, in DevOps? You’re a writer, you write a lot of things, this is amazing, you do a lot of research.

Eric Maxwell:

Thanks. Well, I guess they’re two slightly different stories. So, the reason that I got into tech is because my parents were very generous and they told me that when I graduated sixth grade, very young, that if I got straight A’s, I could have any gift that I wanted. So, I worked really hard, I got straight A’s, and I requested a dirt bike. When it came time to get my dirt bike, they got me a computer. So, after I got past the initial shock and awe and disappointment, I realized, “Well, this is my toy and this is what I should start playing with,” and so that’s how I got into technology. How did I get into DevOps? So, I had been working for startup companies for most of my career and I was working for a startup company where we had a specific product and a direction, and in typical startup company fashion, we pivoted and we decided to go a different direction.

Well, that direction took off. I’ll never forget, I got a phone call at 3:12 in the morning from my database administrator and I picked up the phone and all he said was, “Our servers are melting.” So, what we had to do was we had to figure out how do we rewrite our entire stack as a web-scale stack? How do we change all of our technologies? How do we embrace a cloud-first methodology? There were three of us on the development team and so we had to not only figure out how to re-engineer everything, but the only way that we could sleep a little bit and keep our sanity was just to learn how to start automating all the things, and so that’s what got me into this. I didn’t even really know that there was a word DevOps or there was a movement around it, it was honestly just trying to survive, and so that’s how I got started.

Lori Lorusso:

What a perfect definition of DevOps, humans came together to try and automate all of those insane processes that you had to scale up with at 3:12 in the morning. That’s insane. That’s not a notice I think anybody wants to get at any time during the day. John Speed, how about you? Give us a little bit of background.

John Speed Meyers:

I come from a research background. I was actually doing all sorts of public policy research on largely military systems, for instance, aircraft, and I realized that most of these systems are really just steel wrapped around computers. When I came to that conclusion, my research increasingly became about software security. After a job where I was actually focusing on open source software security, I recently came to Chainguard, which is a software supply chain security startup. So, applying my research and analysis skills to software supply chain security, which closely ties into DevOps since, like we discussed, so many security processes are now automated into this DevOps machine.

Lori Lorusso:

This is great. So, I think this leads us right into the first question, which is DevOps is centered around culture, and Eric, you brought this up when you were going over the DORA findings, saying that teams that had trust had low employee burnout and they were more successful with bringing on adoption of security practices. So, what, in your mind, explains why high trust teams have better software supply chain security practices? What is this whole idea of trust, and what does that mean for developers?

Eric Maxwell:

Sure. So, I think that what this model does and the idea of automating all the things, security included, is it really helps to make centralized security teams start to loosen the grip, by moving the responsibility of security from maybe post-hoc scanning or from a centralized team into the pipeline and being done through automation. In order for this to happen, what you have to do is you have to increase the collaboration between security folks and dev folks. Really, what you want to do is you want to figure out how do you increase the confidence from both sides?

Because security, the reason that they feel that they must have this iron grip on things is because they often have a low level of confidence that the developers are going to ship code that is not going to have security vulnerabilities. Oftentimes, the developers have a low level of confidence that the software that they ship will be approved by security. So, when you put this stuff into a pipeline, you’re kind of forcing collaboration, and so this collaboration actually creates more trust because you get folks talking, you get them communicating, you get them working together, and this builds confidence, which then reduces blame and as a result, has these positive effects on generative culture.

Lori Lorusso:

I love the way that you frame that, because you do have to work collaboratively together to then make sure that automations that you’re putting in place actually make sense. John Speed, did you have something that you wanted to add to that maybe?

John Speed Meyers:

I think one of the recurring ideas and themes of the DORA Reports is this idea of generative culture, here, it’s called high trust teams. What this really boils down to is, you could think of them as practices or mental attitudes about cooperating, sharing risks, using failure to improve, exploring novelty. These are not the sort of technologies that sometimes get emphasized in the DevOps narrative, but a recurring finding is that these things are actually what power a lot of software performance, including, interestingly, in this year’s report, software supply chain security practices. So, for me, at least one plausible story here is that practices like exploring novelty lead you to implement new software supply chain security practices in the first place and practices like using failure to improve, what that means is that when you actually have software supply chain security incidents, well, you take the time to do a retrospective and then implement those practices. So, it’s interesting that this cultural aspect is really an important root cause of positive software supply chain security practices.

Lori Lorusso:

Nati, do you care to add to that?

Nati Davidi:

Yeah, maybe I’m thinking out loud. I think that many times when there’s friction between entities, whether it’s even a hidden one, a natural one like you have between R&D and product, many times between security and development, the thing that makes people collaborate is believing in the same third-party entity. In this regard, I think that DevOps is the third party between developers and security, the DevOps and the data and the single source of truth, if it exists, that they can now look at and extract insights from and understand what goes wrong, and the reliability of the data and the proof points.

I think one of the things that we try to emphasize and we learn from our customers is that many times, it helps when you provide a developer with the scientific proof of why something must be fixed and let her or him do it themselves and decide themselves how to fix it, but at least they get the instruction and the alternative mitigations. On the other end, it helps being able to tell the security persona why things do not necessarily need to be fixed, through technical evidence that is not negotiable or doubtable, it’s scientific, it shows you, in our pseudo code, what’s going on. I think that this is a good starting point to create this collaboration. Clearly, if you add to it a good user experience and, at least in the initial phases of establishing these processes, also a strong persona that can mediate this discussion, this is an amazing starting point.

Lori Lorusso:

I think this follows into something that Eric already mentioned, the quote, it says, “To make meaningful improvements, teams must adopt a philosophy of continuous improvement.” So, a lot of the things that you guys have just mentioned is all about culture and style and trust within an organization. So, Eric, do you want to maybe expand on that a little bit?

Eric Maxwell:

Sure. What I’ve seen from working with customers and working with companies all around the world is that they’re really beginning to understand that this is something that is not optional, that this is a requisite transition, requisite behavior. We have many, many years of the DORA research showing us that culture is a main driver of organizational success, and we also see this year, that it’s a main driver of security success and the successful adoption of security practices. So, I think the real question is, and this is the question that I get all the time, is how do we improve culture? So, there’s some really interesting research, there was a joint venture between General Motors and Toyota Corporation. The initials or acronym is NUMMI and for the life of me, I can’t remember what that stands for, but if you look it up, NUMMI, GM, Toyota, you can read the research. What they tried to do in this joint project was to really understand how do you change culture?

So, that was the big question: how do you influence culture, how do you actually make change? What they found was that to change culture, you don’t start by changing the way that people think, you actually change the way that they behave. So, when you change the way that people work day-to-day, that’s how you positively influence and positively change culture. So, when we think about this from the context of DevOps, we always talk about a continuous journey. I said that in the beginning, that our research has shown that companies that take the approach of continuous improvement are the most successful.

So, if you take this continuous improvement, and if you think of this in the context of incremental work, what we’re doing is we’re changing the way that people work, we’re changing what they do on a day-to-day basis. We mentioned that in order to have successful security automation and pipelines, you have to get developers and security folks sitting side-by-side and working together on security policy. So, this is changing the way that people work and as a result, this has a direct impact on culture.

Lori Lorusso:

So, I think that this leads directly into this next question that I have regarding security. It’s about empowering individual teams and developers to own security, or should your CISO and your security team own security? Where do these two teams meet, do they overlap? How are they supposed to work together when everything that you’re saying is it’s all about being a community, but also, you all have different roles and obligations? So, Nati, I’d like to hand that one off to you.

Nati Davidi:

So, again, I think it’s all about ownership, and the ownership creates the collaboration instead of the tension. We, as thought leaders, need to help provide the tools and infrastructure to ease the ownership. Now, part of it is, of course, technology and part of it, as I mentioned, is data and proof points, but it’s tricky. It’s tricky because it’s not only about breaking the silos and creating ownership of the individuals at any segment of the organization, but because these silos are not only in between developers, DevOps, and security, they are also within security.

When you say supply chain security, it has so many aspects to it. It has the Shift Left aspects, the [inaudible 00:32:07] aspects, the DevSecOps, the runtime security, the typical IT security, and then you have different ownership within security. Actually, the bigger the organization is, the more complex it is to enforce or to decide on one feasible approach to it and I think it should be built bottom-up. By the way, we did it internally by taking a use case in one division and then growing it to the entire organization. But finding the way to technologically provide the ability to take decisions bottom-up, I think this is the right starting point.

Lori Lorusso:

John, do you have anything you want to add to that?

John Speed Meyers:

Glad to. I’ll mention that one of the interesting aspects of the generative culture that the DORA Reports come back to over and over is actually a sense of shared ownership, and that seems to be an element of this culture that actually leads to positive security outcomes. I’ll just point out that that cuts against a lot of basic theories of organizational management, which say that if you are sharing some sense of responsibility, it could actually happen that no one takes care of it, because it’s no one particular person’s or group’s responsibility, so I’ll just make that point. I don’t think I can resolve that today, but it’s an interesting finding.

Lori Lorusso:

Eric, did you have anything you wanted to add?

Eric Maxwell:

I always laugh to myself when this topic comes up, because for the longest time, we’ve been up at conferences for a decade-plus talking about breaking down silos and how we need to break down the silos between developers and operations, but we never really included security in that conversation in the early days. We kept security in a silo and in a lot of ways, the result of that was holding back innovation. So, I think that in today’s world, when we can do things like express our security as code, express our compliance as code, the reason that it allows greater collaboration is because we now have a common language that we can all iterate on.

So, instead of maybe some operations folks writing scripts and some compliance folks using a spreadsheet or PDFs or whatever, we all can now share in that responsibility and really just share in the collaboration, share in the understanding of what exactly is the security policy, because it’s now in a common language that everybody can read. So, I think that that’s really awesome and I do think that that common language framework is also another big driver of that confidence building that occurs.
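The "security as code" idea can be made concrete with a small sketch: the policy lives in a reviewable data structure, and the pipeline evaluates each build against it. Everything here is a hypothetical illustration, not a DORA recommendation or any specific product's format; the `POLICY` fields, the `evaluate` helper, and the shape of the build record are all assumptions.

```python
# The policy is plain data that developers and security folks can both read,
# review in a pull request, and version alongside the code it governs.
POLICY = {
    "require_signature": True,
    "blocked_severities": {"critical"},
}


def evaluate(build, policy=POLICY):
    """Return a list of human-readable policy violations for one build record."""
    violations = []
    if policy["require_signature"] and not build.get("signed", False):
        violations.append("artifact is not signed")
    for finding in build.get("findings", []):
        blocked = finding["severity"] in policy["blocked_severities"]
        # Findings with no applicability verdict are treated as applicable.
        if blocked and finding.get("applicable", True):
            violations.append(f"{finding['id']}: {finding['severity']} finding must be fixed")
    return violations


build = {
    "signed": False,
    "findings": [
        {"id": "FINDING-1", "severity": "critical", "applicable": False},
        {"id": "FINDING-2", "severity": "critical", "applicable": True},
    ],
}
for violation in evaluate(build):
    print(violation)
```

Because the policy is ordinary data under version control, a change to it goes through the same review as any other change, which is one way to get the common language described above.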

Lori Lorusso:

So, we’re talking about everyone getting on board with security. At JFrog, our slogan used to be “release fast or die,” and so it’s all about speed, continuous delivery, making updates, getting it done, getting it done fast, not holding back. But when we look at the DORA Report, over half the respondents agree that security processes slow down development, so how do we improve this? What tools, what processes can we use within organizations, not just small ones, but how do we scale up to add this layer of security without impacting release cycles and production? I’ll open that up to whoever would like to answer. I’m going to go with Eric, because my dog is barking.

Eric Maxwell:

So, from a DORA perspective, when we look at this stuff, we take an academically rigorous approach, and part of that is remaining very tool and platform agnostic. So, from a DORA perspective, we’re never going to recommend, “Hey, Tool X or Tool Y should be the tools to solve the job,” anyway, so we remain agnostic. But if we take it at a level higher than that, I really do think that, and again, not to sound like a broken record, but this idea of being able to express compliance and security as code, I think, is a really big driver of enabling this ability to move fast.

But as we know from the research, from a cultural perspective, a lot of times this has more to do with heavyweight change management controls, heavyweight release controls, and things that are deployed. So, whatever tools we’re using, if we create something that is automated, that catches problems, that resolves issues as close to the developer workstation as we possibly can, so that we can isolate those problems to the smallest number of developers, meaning that we can then change and fix those things faster, this just adds to this culture of trust and this culture of confidence.

Lori Lorusso:

Nati, do you want to add to this?

Nati Davidi:

I can think of three critical pillars to try to reduce, as much as possible, the security overhead, but just to be realistic, it’s inevitable. Security will always impact the delivery time of software; it’s now a matter of how much we can reduce it and what the right trade-off is between security and “release fast or die.” The three pillars that I think of are very much aligned with the SLSA framework and with the NIST approach to software supply chain security. The first is to set the preventive measures to make sure that potential threats are not coming in, whether known ones in external packages or ones that you create yourself in your own first-party code, so this is the preventive part.

And then the second, internal part is about continuously examining your software assets, because threats keep coming, new ones that are not known, and the state of software keeps changing, so you need to create a cadence of checking the software again and again, regardless of a specific event. And then you have the preemptive approach of how fast you can respond to Log4j and the recent OpenSSL or Apache Commons Text issues with the right automation and infrastructure.

It’s not about responding to an already existing attack, it’s about responding to an immediate threat that is about to come in. This is definitely part of DevOps, DevSecOps, and I think that dealing with all three of these aspects in an automated manner, based on reliable data, would be the best way to dramatically reduce the security overhead.

With your permission, I see here a question coming from Matt Fitz about the trade-off between the costs of securing the development and the effect of actual exploitation. It’s hard to answer, of course, because it depends on whether it’s a collective exploit that damages an entire industry or industries, like happened recently with SolarWinds or with Log4j, or one that is very carefully picked and aimed against a specific company, which is always very hard to calculate. But I think the general answer is that for the short term, the cost of establishing a true entrance of the supply chain security is very high, but for the longer term, it will necessarily save the cost of the aggregated amount of exploits that could have been aimed against the organization altogether.

Lori Lorusso:

No one wants to be the next SolarWinds. When we talk about exploits and cost, I don’t think anyone wants to be the next SolarWinds. So, I think this leads nicely into something we talked about earlier, SLSA. This survey leverages SLSA and Nati, you pointed to it and you showed how JFrog is working within the SLSA framework, but John Speed, how widely adopted, or not, are the practices associated with SLSA, and, going back to our last question, can this maybe speed up security time? If people follow this roadmap, will this help overall expedite the processes moving forward?

John Speed Meyers:

It’s a great question, Lori. One of the interesting things about the DORA survey is that it answers at least parts of exactly that for SLSA this year, with a large sample of over 1,000 software professionals. SLSA stands for Supply-chain Levels for Software Artifacts; it’s a software supply chain integrity framework, so it’s not all of software supply chain security, but an important part. There are a number of security controls, you can think of them as defensive measures, associated with SLSA, and this survey asked about a number of them. Here’s the bottom-line good news: for the majority of these practices, the majority of the developers within each practice said that that practice is either established or moderately established. So, I sometimes hear grumblings, “Software supply chain security, it’s too much of a burden to add on right now,” but the DORA Report finding suggests that actually, lots of developers are already doing this.

One caveat though: the questions were about the primary application or service that these developers were working on, so you can think of this as the best behavior, very likely, of these software developers, which makes sense, so there are presumably lots of other secondary and even tertiary applications that might not be getting the same attention. Here’s how it relates to reliability. An interesting finding from the survey is that teams with better software supply chain security practices have lower burnout. You might say, “Well, how does this happen? I thought they’re working more, so they would have higher burnout?” At least a potential link here is that it reduces the amount of unplanned work, what software professionals call fire drills. When you have exploits and you need to go remediate them, it creates a lot of work that you wouldn’t otherwise plan to do and it’s disruptive to your schedule, it’s disruptive to your software performance. So, good security practices, including software supply chain security practices, according to the survey, actually reduce that unplanned work and lead to lower burnout, so that’s at least one positive upside.

Lori Lorusso:

I think lower burnout is a very big positive upside. After dealing with a pandemic, I think everyone is a little burnt out of being burnt out. So, what you’re saying and what the report is saying is that if you at least follow this framework, that’s going to leave you in a better state than if you don’t. Nati, did you have anything to add on to SLSA, anything you see that can take it to the next level, maybe?

Nati Davidi:

First of all, I think that the fact that it does not cover the entire software supply chain challenges is a very good thing, because you cannot take it all at once anyhow, it’s a big burden, as John Speed said, and again, it’s something that should be taken gradually, step by step. But even with this initial step, looking at the A to H levels of SLSA and listening to the numbers and to the survey, and it’s great to hear that the groups that are leading the notable products are doing it better, I think that it’s still a huge challenge to even identify where you stand in terms of your A to H levels. Again, I assume that the highest end of organizations are doing it better, but again, the bigger the organization is, the more difficult it becomes, because we have huge conglomerates that buy companies that have different approaches to security and have different DevOps infrastructure in place, use different languages, use different stuff, different CI/CDs, and it’s very hard to control all of them.

I think it’s almost impossible to map the current state of all of them with these levels. I think that even taking a step back, starting with an even narrower approach, taking maybe two steps of those, and helping the community in their organizations to start doing those, not only bottom-up with one or two groups, but also trying to start to discuss them top-down, could be very helpful in engaging and starting to build this trust and this support. Because the one thing that can happen, for those that are not good enough, is that they see this map of things that they now need to implement and understand that it’s such heavy lifting that they start to say, “Oh, SLSA is not the right thing.” By the way, SLSA is the right thing, it is the best thing that’s collectively written in the tiers around software supply chain security, so we need to protect it. We need to make sure it’s not intimidating and that it is really feasible to implement.

Lori Lorusso:

Excellent. I think that it’s good to take the fear away. And then there are all those community resources out there, too, like OpenSSF, which is trying to come up with guidelines and best practices for companies, so that they can scale and they can scale with security in place using the SLSA framework, using tools and methodologies and best practices that come from people before them that maybe didn’t have such a good success rate, but I think that’s the nature of tech. Again, you don’t want to be the next SolarWinds or a company that was affected by SolarWinds, because that is scary and that could put you not just in a lot of debt, that could put you out of business. So, it’s good to see the adoption rate is high, that it’s becoming an easier lift, and that they’re not done, but it’s a good framework to start with. So, we mentioned CI/CD quite a bit, so John Speed, I’m going to throw this over to you. So, why does the link exist between CI/CD and more established software supply chain security practices? How did the two of those end up interwoven?

John Speed Meyers:

This is another finding from the DORA Report this year and I’m sure in years past, too. I’ll be clear that the survey itself doesn’t answer this directly, but I think there are some possible reasons and I’d be especially curious to hear from Eric about it, too, with his experience. But I think one of the benefits of using a centralized CI for a team and a product is that it places the checks in a centralized place, where everybody on the team and all their code is held to the same standards. Otherwise, it falls to individual developers, or even individual security teams, to implement something, unbeknownst to everyone else, relying on their own diligence, and that can be hard. So, a CI forces centralization and it forces a standard path that is the path everyone follows, so I think that’s a good theory for why, but I’m sure there are some other technical and social reasons, too.
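To make the "one standard path" point concrete, here is a minimal sketch of a gate that a shared CI pipeline could run on every change, so the same checks apply to everyone's code. The two checks (exact version pins and a denylist) and all names here are hypothetical examples, not practices the survey asked about.

```python
import re

# A requirement counts as pinned only in the exact form "name==version".
PINNED = re.compile(r"^[A-Za-z0-9_.\-]+==[A-Za-z0-9_.\-]+$")


def unpinned(requirements):
    """Return requirement strings that do not pin an exact version."""
    return [r for r in requirements if not PINNED.match(r)]


def denylisted(requirements, denylist):
    """Return package names that appear on the (hypothetical) denylist."""
    names = (r.split("==")[0].lower() for r in requirements)
    return [n for n in names if n in denylist]


def ci_gate(requirements, denylist):
    """Run every check and return a list of human-readable problems."""
    problems = [f"unpinned dependency: {r}" for r in unpinned(requirements)]
    problems += [f"denylisted dependency: {n}"
                 for n in denylisted(requirements, denylist)]
    return problems


if __name__ == "__main__":
    found = ci_gate(["requests==2.31.0", "flask"], {"evil-pkg"})
    for problem in found:
        print(problem)
    # A non-zero exit status is what makes the CI job fail.
    raise SystemExit(1 if found else 0)
```

Running the gate in CI rather than on each workstation means no one has to remember to run it, and a failure blocks the change for every contributor in the same way.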

Nati Davidi:

Go ahead.

Lori Lorusso:

No, go ahead.

Nati Davidi:

Just again, going back to the binary story, when looking at it from the angle of who is taking responsibility for different security aspects and taking, again, the very well-known Log4j as an example, you had the security teams saying, “This must be fixed, you need to identify all of your assets across the organization, you need to upgrade them in the dev environment, in production, and so on.” You had, then, the developers that saw merely the specific portion that each of them was responsible for, so they saw it in a very limited manner. And then in between, you had the DevOps leaders, who were asked to fix it, were asked to identify it across the board, were asked to upgrade it, and were also asked to be able to do it in a shorter amount of time the next time a Log4j comes in.

So, suddenly, DevOps is becoming the pivot of security for the organization, especially if you accept the argument that supply chain attacks are becoming the attack vector of the decade, so DevOps is becoming responsible for the security of the decade. And then CI/CD is a major part of DevOps, clearly, and that, of course, creates a link that cannot be separated. And then the other thing is that the form of software that is being taken from a developer through DevOps to the world is, again, many times, binaries and big images that consist of many things other than source code and therefore, someone needs to be responsible for this part, so it means taking CI/CD and utilizing it to better identify security issues, to deal with supply chain security in the right practice.
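The "identify it across the board" task described above can be sketched as a query over an inventory of what each binary or image contains. This is only an illustration: the inventory data is invented, the version comparison assumes plain dotted numeric versions, and the fixed version is passed in as a parameter rather than asserted as fact.

```python
def parse_version(version):
    """Turn '2.14.1' into (2, 14, 1); assumes purely numeric dotted versions."""
    return tuple(int(part) for part in version.split("."))


def affected(inventory, component, fixed_version):
    """List (artifact, version) pairs whose component is older than the fix."""
    fixed = parse_version(fixed_version)
    hits = []
    for artifact, components in inventory.items():
        version = components.get(component)
        if version is not None and parse_version(version) < fixed:
            hits.append((artifact, version))
    return sorted(hits)


if __name__ == "__main__":
    # Hypothetical inventory: artifact name -> {component: version}.
    inventory = {
        "billing-image": {"log4j-core": "2.14.1"},
        "web-image": {"log4j-core": "2.17.1"},
        "cli-tool": {"requests": "2.31.0"},
    }
    # The fixed version here is an example input, not an advisory.
    for artifact, version in affected(inventory, "log4j-core", "2.15.0"):
        print(f"{artifact} ships log4j-core {version}")
```

With an inventory like this kept up to date by the pipeline, the question "where are we exposed?" becomes a lookup instead of a manual search across teams.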

Lori Lorusso:

Eric, did you have anything to add onto this, from reports in the past where you’ve maybe seen this as a shift, or has this always been the case, that security and CI/CD go hand-in-hand, whether or not it was prevalent before, but is most definitely now?

Eric Maxwell:

Well, when I think of CI, I think of it as basically the backbone to everything that we’re talking about, so you can’t really have a solid DevOps environment unless you have very strong CI. So, I’ll go through the list: trunk-based development is enabled by CI, you can’t do it without CI; automated testing, you can’t do it without CI. CI leads to CD and we know that CD leads to organizational output performance. We also know that companies with high levels of reliability are 1.4 times more likely to have CI, so it impacts reliability, and we know that it impacts security.

So, there isn’t really a portion of DevOps, DevSecOps, whatever you want to call it, that doesn’t utilize CI, so that’s why I think of it as the backbone. When I’m coaching companies and when I’m working with them, I always say, “Let’s just get your build settled. Let’s get your build in a way where you can build individual components with success, you can roll back individual parts of your build without having to redo the whole thing. Let’s just start there and then we’ll start working on testing.” So, it’s of no surprise to me, actually.

Lori Lorusso:

So, I want to shift a little bit, ha ha, shift. I’m a dork, it happens. So, when we talk about the past, security was this huge thing, and not to say that it’s not huge and scary now, but it was something that was just completely like, “You do you, we’ll do our stuff and whatever.” Now, the DORA Report and the culture of DevOps is saying, “No, that’s not the case. We are all in this together.” Do you see an adoption from legacy companies being able to move more freely into this type of DevOps environment? Do you see roadblocks along the way? How is a traditional, legacy, on-prem company able to adjust to this type of new environment, where it’s not just scaling up security, it’s having to maybe change their corporate culture in a way that is going to lead to low burnout, high trust, where maybe that wasn’t the case before? I’m going to open that one up.

Eric Maxwell:

I’ll jump in first. So, I think with the research that we’ve done and we’ve shown year-over-year that software delivery, and now software delivery and operations reliability, is a major predictor for organizational success. I think that flips the narrative that a lot of companies have around, “Oh, IT is a cost [inaudible 00:52:52], it’s a necessary evil,” to, “Hey, this is actually something that we should invest in, we should invest in this internally,” because as a result, if you can have a 2.5X multiplier on the meeting or exceeding of your business goals, things like net promoter score, customer acquisition, revenue targets, that’s a very compelling story. When you’re trying to convince decision makers, they often think in terms of numbers, and so I usually lead with that conversation and say, “Hey, if we start doing these practices, not only will you have happier employees and have less burnout and have less security vulnerabilities, but oh, by the way, you’ll make more money.” They say, “That sounds like a pretty good deal, maybe I’ll do that.”

Nati Davidi:

Maybe I’ll add to it, coming from the more technical angle. Until maybe five or seven years ago, when we had discussions with specific industries like automotive or aviation or critical controllers, it was a lot about heavy on-prem solutions, closed [inaudible 00:54:07], disconnected and so on. Suddenly, you see this technological shift where you have a router controlling critical infrastructure that runs containers on it and uses high-level languages and communicates with whatever, with a very modern UI that is connected through the internet. I think this comes as a demand from the customers, who are becoming used to being more open and more communicative, even in the most sensitive industries, let’s call it that way.

That somehow forces even the biggest, slowest companies, 130-year-old traditional companies in Europe and in the States, to study things differently. One piece of evidence is that most of them today have a chief digital officer who is in charge of the transition to a more modern infrastructure and connectivity and talks about Web3 and other things. These are the leaders that we try to talk with in order to make sure that what we come with is really helpful for them, because these are cycles of 10 years. Moving a huge company with 35 subsidiaries to a modern infrastructure, to a well-controlled CI/CD with monitoring and visibility, this is a process. You need a partner and you still need to support on-prem and you still need to be multi-cloud, because most of them don’t want to be one shop. So, all of these are very big challenges and again, I think it’s a lot of community work to engage with these leaders, and part of the DORA effort and SLSA and OpenSSF is exactly where these players are joining forces to try to shape it together.

Lori Lorusso:

John Speed, did you have anything you wanted to add to that?

John Speed Meyers:

No. Thanks so much, Lori, but I’ll wait my turn.

Eric Maxwell:

Can I add one more thing real quick on this topic, and also on the topic of level of effort and whether it is worth it? So, we’ve seen in previous research, with regards to technical capabilities, that there’s a J Curve, and when we also looked at this from the context of reliability, there’s also a J Curve. We haven’t specifically studied it in relation to supply chain security, but it is a technical practice and my guess would be that it would also have a J Curve just like everything else does. So, what that means is that when you first start doing something, you actually might see a dip in ability to ship software quickly, in burnout, in team camaraderie and stability and things, but you reach an inflection point.

Once you reach that inflection point, it’s almost straight up, and that’s when you really start realizing the improvement that you get from these things. So, my encouragement is, first of all, you just have to start doing things and roll your sleeves up and do it and know that it’s a journey, and like any journey, sometimes there are bumps in the road, so expect that. But the point is, what we’ve seen from eight years of research is that if you navigate those bumps and if you just keep doing the work, you will most likely be successful.

Lori Lorusso:

I think it’s great. I think the fact that there’s research to back up the length of time, the hurdles that people face, but then the positive outcomes, in general, I think that’s what people need to see. This panel, I think, has been amazing, because we have both DevOps and security professionals, but the heavy angle is on the research, the proof of fact, the case study in and of itself that these are the things that the industry is saying, because these are the things that companies are doing to make things better. Along that line of making things better, Nati, what is JFrog doing to help address supply chain security?

Nati Davidi:

So, first of all, we do things internally, eating our own dog food with all of the solutions that we are developing, trying to first believe in ourselves and trust what we are building before suggesting it to our customers. Serving more than 7,000 customers and managing the most critical assets, the mission-critical software pieces, creates tons of obligations on us to be secure, and we have a dedicated, very big team, not just part of the security product line, but dedicated, in the chief security office, a dedicated team for analyzing the software and complying with the highest standards out there, like [inaudible 00:58:38] and others, to make sure that we are not vulnerable ourselves.

Clearly, we are doing all of these [inaudible 00:58:43] that’s very much aligned with what is shown on the SLSA project. And then we come to our customers and offer the same, and again, it depends on how modern the customer is or how early the process of implementing software supply chain security is. We choose where to start, but as Eric said, we always encourage customers to start, to first start with something. You can scan your Artifactory retrospectively or implement only one piece, but by that, you start to create the notion and to create the engagement with the customers, whether big or small.

Lori Lorusso:

Well, we are right out of time. I would like to thank Eric, John Speed, and Nati for joining us today, this has been a very informative panel. If you have not had a chance to download and read the DORA Report, I suggest you do, so you can see what we talked about for yourself. You can see the stats, you can see the graphs, you can see how implementing these small changes, these incremental changes, can have huge outcomes on your company in the most positive way. Thank you again to our panelists. I am so happy that JFrog sponsored the DORA Report, because we will be quoting it from here on out. If you look in the chat, Eric put the link to the DORA Report so that you can go ahead and get a copy of that yourselves. Again, I highly recommend it. Any closing thoughts from anyone? I just again want to say thank you.

John Speed Meyers:

No, thanks so much for hosting us, it’s really great. We appreciate it.

Eric Maxwell:

Thank you very much. I had a great time. Also, just for clarification, dora.community is the link to our publicly available community site. We have internal messaging groups that are very active and we also hold weekly video lean coffee sessions where we talk everything DevOps. Today, we’re talking about platform engineering, super hot topic. So, anyway, thank you very much everybody. I really appreciate it.

Nati Davidi:

Thank you very much. Thank you, Lori, for accommodating, and John Speed and Eric, it was a great pleasure, and I’m looking forward to continuing to work on such initiatives together. Thank you very much.

Lori Lorusso:

Thank you everyone for joining us. Everyone, have a great day and we’ll see you again.

Eric Maxwell:

Bye. Thanks so much.

Nati Davidi:

Thank you.
