Advanced Packaging Options with JFrog CLI

Webinar description:

In this webinar we’ll see how you can use JFrog CLI as a packaging tool for your binaries. In some cases, an application requires a unique layout when it is packaged. The package should include various binaries and test files with metadata, arranged in specific directories.

The various components of the package sometimes have their own versions, and they can be created by different processes. If all of those components are managed in Artifactory, JFrog CLI can be configured to pick the right components and package them together according to a defined layout.

Additional resources:

Webinar Transcript

Very cool. So, as I said, our webinar today is focused on advanced packaging options with JFrog CLI. JFrog CLI is a command-line tool that serves two main purposes. It integrates with the build integration features of the JFrog Platform, and it also enables some very useful DevOps automation, mostly around packaging and managing files. That’s going to be our focus today. I’m going to show you some of the nice things we can do and how you can use them.

So just a little bit about myself and my role at JFrog before we start. I lead the ecosystem engineering team at JFrog. We are an R&D team focused on developing and maintaining tools that integrate the JFrog Platform, which we’re going to look at soon, with the various tools and components that make up your DevOps environment: for example, CI servers, build tools, and so on. We expose APIs that can be used from automation code to integrate with the JFrog Platform, and we also maintain JFrog CLI, which is the focus of today’s webinar.

So just a few words about myself. I’m really passionate about technology, and specifically about software. I care about our sustainable future on planet Earth, and I believe software has an important role in helping us develop the technologies and capabilities needed for a better future here.

The mission of JFrog is to enable smooth delivery of software, starting from the development phase, with the developer who is writing the code. We have tools that help developers by bringing some of the capabilities of the JFrog Platform right into the IDE. We have integrations for many of the popular IDEs used by developers today: IntelliJ, Eclipse, VS Code, Visual Studio, and very soon also WebStorm and GoLand.

Beyond that, the JFrog Platform supports everything that has to do with building the software. It manages the binaries for you, scans them for security vulnerabilities, and even takes care of delivering and distributing the software to remote locations. All of that supports what we at JFrog call making software liquid: delivering software in an easy-to-maintain way with fast cycles, so that it gets from the developer to the servers on which it needs to run, or to the devices that need it, as soon as possible.

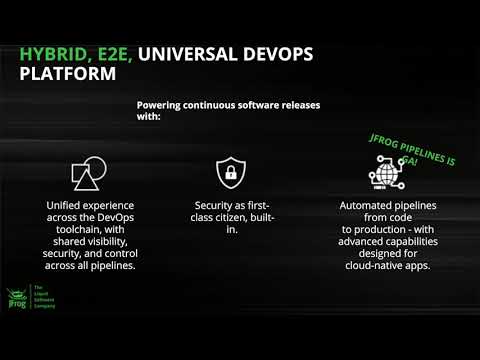

Let’s talk a little bit about the JFrog Platform at a high level, understand what it is, and then see how JFrog CLI fits in and works with the platform. The JFrog Platform was created to take all of the core JFrog products and put them under one unified UI and experience, allowing you to work smoothly with all the capabilities of the various JFrog products in one platform, with one user management system, one experience, and one UI for everything.

In addition, security is now a first-class citizen in the JFrog Platform. We leverage JFrog Xray to scan the binaries that are managed and hosted by the platform. You can configure JFrog Xray to scan everything that is uploaded to and managed by the platform, or just some of it. We now also have JFrog Pipelines as part of the JFrog Platform, which lets you create YAML-based workflows to manage all of your workflows and CI processes and control everything.

Now, let’s look at the next slide and see the JFrog Platform at a high level. On the left-hand side, you have your code repository. This could be Bitbucket, GitHub, or whatever source control you’d like to use to manage your sources. Then JFrog Artifactory manages the binaries created from your source code. It stores them in repositories with specific access privileges, and binaries or builds can move from one repository to another to support the life cycle of your software. For example, your binaries can be in a dev repository, and when they’re ready, they can move to a staging repository. It’s a very flexible structure that allows you to support any life cycle that suits your organization, and of course you can set specific access privileges for each repository to support that.

JFrog Xray has direct access to those binaries and can scan them for security vulnerabilities and licensing issues. You define policies to make sure that the software is secure and compliant, and you can integrate Xray scanning into various stages of the development, build, and delivery process.

As I mentioned earlier, even at the IDE stage, while the developer is writing code, the IDE can integrate with JFrog Xray and provide information about the specific dependencies the project is using. Xray can also be integrated as part of the CI flow, regardless of which CI engine you’re using: JFrog Pipelines, Jenkins, Azure DevOps, or anything else. You can set JFrog Xray to scan your builds to make sure they are secure, and you even have the option to fail the build or trigger other events if specific vulnerabilities are found. Once your software is ready for distribution, JFrog Distribution takes care of the heavy lifting of delivering it to remote locations.

You can set up Edge nodes in remote locations, and JFrog Distribution takes care of delivering the software to those Edge nodes. From there, the software can be deployed to the various agents and machines on which it will run. As I said, the whole workflow, everything that happens as part of the life cycle, can be controlled by JFrog Pipelines. Mission Control and Insights give you a view of the processes running in the platform, so you can monitor your builds and the important flows that run as part of the system.

Now let’s talk a little bit about JFrog CLI. JFrog CLI is a command-line component with tight integration with the JFrog Platform, and you can run it from anywhere: from your build agents or from your automation machines. It’s essentially an extension of the JFrog Platform, a way of connecting your agents and build machines with the platform that is both easy and very flexible. Because it’s a command-line tool with built-in integration with the JFrog Platform, it can be embedded anywhere. Today we’ll focus on a subset of the functionality JFrog CLI provides for creating your packages.

So JFrog CLI, in a nutshell, includes connection details storage: it can safely store the connection details of the services in the JFrog Platform. Today we’ll look specifically at how JFrog CLI works with Artifactory. It also lets you perform operations on files, or artifacts. Those files can be uploaded to JFrog Artifactory, downloaded, and moved, and you can do other things with them, like tagging artifacts so that you can later search for them, find them, and package them together into your end package. In terms of metadata, JFrog CLI provides an easy way to set metadata on artifacts, which is also something we’re going to look at today.

JFrog CLI supports file specs. A file spec is a JSON structure that specifies exactly which files, according to specific criteria, you want to perform the various operations on. JFrog CLI also integrates with package managers to create build info. We won’t focus on that today, though we may talk about it briefly at the end if we have time. Because of its tight integration with the JFrog Platform, JFrog CLI can enhance popular package managers like NuGet, Maven, Gradle, npm, Docker, and others to enrich the artifacts with more metadata, in a structure we call build info. It’s like a bill of materials, which is stored in Artifactory to provide more data about your artifacts, so you can work with builds as a higher level of abstraction rather than with individual artifacts. Just so you know, that’s also something JFrog CLI can do, and of course there is more.

So with that, let’s jump right into the demo. I think the best way to see everything in action is a live demo. I’m sharing my screen now. Most of today’s demo is in the terminal. I’ll try to demonstrate the interesting things that JFrog CLI can do. Everything I’m explaining today is covered in the online documentation, so feel free to look at the JFrog CLI documentation; we made sure everything is there. Let’s start.

So I have JFrog CLI installed in my terminal. It’s accessed using the jfrog command, and right now I’m displaying the version. Let’s start by looking at the Artifactory servers I have stored in JFrog CLI. I run jfrog rt c show: rt for Artifactory, c for config, and then show. It shows me the details of all of my Artifactory servers. Each server has its own server ID, URL, connection details, and other important information. Notice that I already have multiple servers configured, and one of them is marked as the default. That’s my local Artifactory server, which I’m running on my local machine just for the sake of this demo. That’s the one I’m going to work with.

I can, of course, change the default server and say that I want to use a different Artifactory server. But let’s stay with my local Artifactory server. Let’s start with upload, or you know what? Let’s start with search. Artifactory hosts binaries inside repositories. Here is my Artifactory server, and I can of course go to the UI. Let me just log in. In the artifact view I can see all of my repositories. I’m going to use a repository I created earlier, called generic-local. Let’s start by searching the content of this repository: jfrog rt s, s for search, and then generic-local, and it runs the search.

There are zero artifacts in this repository right now; it’s empty. So the first thing I want to do is upload artifacts to it, just to show you how it’s done. I have a few directories here: an artifacts directory and an arc directory, which includes archives. If I look at the content of the artifacts directory, you see it just includes a bunch of files that I’m going to upload to Artifactory, and there are also the archives.

In arc, I have a bunch of archives, a few zip files I created for this demonstration. I can go ahead and upload those files by specifying all my arguments and flags on the command line: jfrog rt upload, and then say, upload all of my artifacts inside this directory into my generic-local repository. I can leave it like that, or I can say, upload this into a specific folder in Artifactory, and that’s exactly what will happen. It uploaded 42 files. Then, just for the sake of this demo, I’m going to delete the whole content of my generic-local repository. It reports one, because it deleted one folder: the folder named A that I created.

I want to show you a different way to do this. JFrog CLI also supports a structure we call file specs. I created a few file specs for these demonstrations; if I go into the spec directory, you can see them. File specs are really easy to work with. Let’s look at the download spec I created, to give you a sense of what a file spec is. This is the simplest file spec I could think of for downloading files. It says: download all the files inside my generic-local repository into a local directory called Out. That’s exactly what it will do, so let’s run it: jfrog rt dl, dl for download, followed by --spec.
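Because a file spec is plain JSON, automation code can also generate it. The sketch below writes a download spec equivalent to the one just described. The repository name generic-local, the Out/ target, and the file name download-spec.json are assumptions for illustration; the files, pattern, and target keys follow the JFrog CLI file spec format.

```python
import json

# Download every file in the (assumed) generic-local repository
# into the local Out/ directory.
download_spec = {
    "files": [
        {
            "pattern": "generic-local/*",
            "target": "Out/",
        }
    ]
}

# Save the spec so it can be used with: jfrog rt dl --spec download-spec.json
with open("download-spec.json", "w") as f:
    json.dump(download_spec, f, indent=2)

print(json.dumps(download_spec, indent=2))
```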

Actually, let me go one directory up, because I want the Out directory to be created right here. jfrog rt dl --spec, then the path to the download spec inside this directory. You can give the file spec any name you want. We can see that zero files were downloaded. Let’s see why. Oh, that’s because I deleted all the content of my generic-local repository, so there’s nothing there. Let’s upload a bunch of files. I think I also have an upload spec that I created.

Yeah, let’s look at this upload spec. It’s pretty similar to the download spec we saw earlier, but it has two sections. Like the download spec, each section includes a pattern and a target. Here I’m telling JFrog CLI to pick all the files that include the letter B somewhere in their name and upload them into the generic-local repository, inside a directory named A. Then I do the same for all the files that include the letter A and upload them into a directory named B in this repository. Of course, you can use any pattern you like. So let’s run jfrog rt upload --spec and provide the upload spec. It uploads everything.
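To reason about what a two-section upload spec will do before running it, you can preview the routing locally. This sketch approximates JFrog CLI’s wildcard matching with Python’s fnmatch, which is an assumption and not the CLI’s actual matcher; the artifacts/ directory, the file names, and the generic-local repository are hypothetical. In this model, a file whose name contains both letters matches both sections.

```python
import fnmatch

# Upload spec mirroring the demo: names containing "b" go to folder a/,
# names containing "a" go to folder b/ (paths are assumptions).
upload_spec = {
    "files": [
        {"pattern": "artifacts/*b*", "target": "generic-local/a/"},
        {"pattern": "artifacts/*a*", "target": "generic-local/b/"},
    ]
}

def preview(spec, local_files):
    """Return (source, destination) pairs for every matching file.

    Approximates wildcard matching with fnmatch; destinations are flat
    (file name only), like a default upload in this model.
    """
    plan = []
    for entry in spec["files"]:
        for path in local_files:
            if fnmatch.fnmatchcase(path, entry["pattern"]):
                name = path.rsplit("/", 1)[-1]
                plan.append((path, entry["target"] + name))
    return plan

files = ["artifacts/build.log", "artifacts/data.txt", "artifacts/readme.md"]
for src, dst in preview(upload_spec, files):
    print(src, "->", dst)
```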

Now I have 78 files here. Let’s also do a search, in case you want to see exactly what was uploaded: jfrog rt s. I could use a file spec for the search too, but to keep it simple I’ll just put the name of the repository and get all the files I uploaded. Now I want to use my download spec: jfrog rt dl --spec, and provide the download spec. And now we have the downloaded files.

If you remember, I configured the download spec to download those files into a directory called Out. So let’s see what was created. You see? It maintained the same directory structure: I have an A directory and a B directory. If I look inside A, I see all the files, and the same goes for B. I could also add the flat option to the spec, or --flat to the command itself, and that would download the files without the directory structure they have in Artifactory.
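The difference between a hierarchical and a flat download can be modeled as a path computation. This is a simplified sketch under stated assumptions: the repository name is not recreated locally, and flat mode keeps only the file name. It is not JFrog CLI code, and the paths are hypothetical.

```python
import posixpath

def local_target(repo_path: str, target_dir: str, flat: bool) -> str:
    """Model where a downloaded file lands locally.

    repo_path is "<repository>/<path inside repository>". In this model the
    repository name is dropped; flat=True also drops the folder structure.
    """
    _repo, _, inner = repo_path.partition("/")
    if flat:
        inner = posixpath.basename(inner)
    return posixpath.join(target_dir, inner)

print(local_target("generic-local/a/file1.txt", "Out/", flat=False))  # Out/a/file1.txt
print(local_target("generic-local/a/file1.txt", "Out/", flat=True))   # Out/file1.txt
```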

Now let’s make things a little more interesting. Say that while uploading files, I want to tag them with properties that will later help me find the artifacts I want, or download only the files with those properties. I’m going back to my upload spec, and suppose I want to tag all the files uploaded into this directory with a specific property. So I’m adding props to my file spec here.

Let’s say I want to mark the status of those artifacts as verified. I can choose any name and any value, I can add multiple properties separated by semicolons, and I can even provide multiple values for a property by separating the values with commas. But for now, that’s all I want to do. I don’t want to tag the artifacts I’m uploading into the B directory with any properties. Now I run my upload command again, and it uploads the files, this time using the upload spec with those properties. Let’s run a plain search on generic-local to see the properties in Artifactory. Notice that the files inside the A directory now have this property.
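The props syntax described above (semicolons between properties, commas between values) can be illustrated with a small parser. This is a sketch of the format as described in the demo, not JFrog CLI’s parser; escaped separators are not handled, and the team property is hypothetical.

```python
def parse_props(props: str) -> dict[str, list[str]]:
    """Split "k1=v1;k2=v2,v3" into {"k1": ["v1"], "k2": ["v2", "v3"]}.

    ';' separates properties and ',' separates the values of one property.
    Escaped separators are not handled in this sketch.
    """
    parsed: dict[str, list[str]] = {}
    for pair in filter(None, props.split(";")):
        key, _, values = pair.partition("=")
        parsed[key.strip()] = [v.strip() for v in values.split(",") if v.strip()]
    return parsed

# Upload spec entry tagging everything uploaded to a/ with status=verified.
entry = {"pattern": "artifacts/*b*", "target": "generic-local/a/", "props": "status=verified"}
print(parse_props(entry["props"]))  # {'status': ['verified']}
print(parse_props("status=verified;team=core,infra"))
```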

Let’s see if I can find an artifact here… Here, artifacts inside the B directory don’t include those properties. I can of course search only the files inside the B directory, and then I’ll see files with no properties. That gives you a sense of what you can do with properties. Now I can use a download spec to download files not only according to their path, using wildcards as we saw earlier, but also according to their properties.

Let’s see what else we can do with file specs. Suppose I want to upload those files into a dynamic directory: instead of uploading them into a directory called A, I want to set the directory name when running the command. I can put a dollar sign and curly brackets around any variable name I want, and then provide the value when I run the command. Going back to the upload command, I add --spec and then --spec-vars with the variables I want to send. I’ll say the dir variable is not A but, let’s say, mydir. Let’s run it again. This time the files should be uploaded to a different directory. Now let’s run our search command again; I’m expecting the files to be here.

They’re not? Oh, look, it uploaded them here. Something is wrong with my spec. So the dollar sign… dir. Yeah, you see, I had double curly brackets here. Now I believe this should work. Let’s run the command again, and now let’s search again.

Oh yeah, it found them. It put them in the right directory, mydir, as you can see here. So what did I do wrong with the search command? mydir. Yeah, I should have included a slash at the end, to let JFrog CLI know that I’m searching for all the content inside the mydir folder, and not for a file named mydir.
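The ${name} variables plus --spec-vars "key1=value1;key2=value2" can be modeled as template substitution before the JSON is parsed. This sketch uses Python’s string.Template as a stand-in for the CLI’s own logic; the pattern and the mydir value are hypothetical. A variable with no provided value raises KeyError in this model.

```python
import json
from string import Template

# Upload spec text with a ${dir} variable, as in the demo (pattern is hypothetical).
SPEC_TEXT = """{
  "files": [
    {"pattern": "artifacts/*b*", "target": "generic-local/${dir}/"}
  ]
}"""

def apply_spec_vars(spec_text: str, spec_vars: str) -> dict:
    """Resolve ${name} variables from a "key1=value1;key2=value2" string.

    A local model of --spec-vars using string.Template, not the CLI's code.
    """
    values = dict(pair.split("=", 1) for pair in spec_vars.split(";") if pair)
    return json.loads(Template(spec_text).substitute(values))

spec = apply_spec_vars(SPEC_TEXT, "dir=mydir")
print(spec["files"][0]["target"])  # generic-local/mydir/
```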

As you can see, you can use variables with any names you want. But what if we still want the target to be dynamic, but this time use a portion of the source path, the path in the local directory, as part of the target path? By default, if you specify --flat=false on upload, the files are uploaded with their file system structure. But suppose you want to do something more interesting to support the exact layout you need.

Let’s look at my artifacts. Say I want to modify the file names: I want each file to still be uploaded under its name, but with something like a suffix added. Let’s try this. I’m going back to my upload file spec. Here, as you can see, I’m saying: upload into the directory I’m providing, but I would also like to use… Let’s do it like this. Okay, I hope this works.

I’m making the pattern a little more complex. I’m saying: pick up all the files, regardless of the directory they are in. This part of the pattern is the actual file name, and I want to capture it, so I’m wrapping it with parentheses. Then, in the target, I say: use this part of the file name. I do this by adding what we call a placeholder.

The number one here means the target should use the part of the pattern inside the first set of parentheses. If I had a second set of parentheses, I could reference it with the number two. For this example, I just want to pick up the file name and add an underscore and then B as a suffix. Let’s see if this works. So let’s run my upload again. I’m still providing mydir through --spec-vars.

All right. Now let’s look at all the files inside the mydir folder, so I’m running the search again: jfrog rt s on generic-local/mydir/. Look what happened: all the files were uploaded with the exact same names, but now with the underscore suffix at the end. That’s the power of placeholders. They allow you to take parts of the source pattern and use them as part of the target.
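Placeholders can be understood as capture groups: each (...) in the pattern becomes a group, and {1}, {2}, ... in the target are replaced with the captured text. The sketch below is a simplified model of that idea (only * wildcards and parentheses are handled), not JFrog CLI’s implementation. The mydir directory and the _b suffix are hypothetical values, with the ${dir} variable already resolved.

```python
import re
from typing import Optional

def wildcard_to_regex(pattern: str) -> re.Pattern:
    """Translate '*' wildcards into '.*' and keep '(' ')' as capture groups."""
    parts = []
    for ch in pattern:
        if ch == "*":
            parts.append(".*")
        elif ch in "()":
            parts.append(ch)
        else:
            parts.append(re.escape(ch))
    return re.compile("".join(parts))

def resolve_target(pattern: str, target: str, path: str) -> Optional[str]:
    """Return the target with {1}, {2}, ... filled in, or None if path doesn't match."""
    match = wildcard_to_regex(pattern).fullmatch(path)
    if match is None:
        return None
    return re.sub(r"\{(\d+)\}", lambda m: match.group(int(m.group(1))), target)

# "*/(*)": any directory, then capture the file name as group 1.
print(resolve_target("*/(*)", "generic-local/mydir/{1}_b", "artifacts/file1.txt"))
# generic-local/mydir/file1.txt_b
```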

Okay, let’s move on. What else can we use? We recently added a detailed summary for the download command. Let’s run a download… All right, I lost it. I’ll just type it again: jfrog rt dl from my generic-local repository, specifically the files inside the A directory. Let’s put all of them inside a new directory; let’s call it Out Q. And add --detailed-summary.

This also gives me a summary of all the files that were downloaded, in JSON format, which makes it very easy to pass to other processes or tools. Suppose I want to see only the target section. I’m going to use a tool I really like called jq, which is really good at parsing JSON. First, let’s see what this returns. That should return the array of all the files. Then I want only the target, so dot target.

Let’s see what I did wrong here… Let’s look at this again. So this one returned the array, and here what I need to add is… All right, I got a small mix-up with the jq syntax. I just forgot it, but basically jq lets you extract sections of the JSON output and parse them. That’s one of the live demo issues: unfortunately, they make you forget things.
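Extracting the targets from a detailed summary takes a single filter. In jq that would be `.files[].target`, and the same extraction in Python is shown below. The JSON shape here is an assumed sample (field names may differ between JFrog CLI versions, so check real output first), and the out/ paths are hypothetical.

```python
import json

# Assumed shape of a --detailed-summary result; verify against real output.
summary_text = """{
  "status": "success",
  "totals": {"success": 2, "failure": 0},
  "files": [
    {"source": "generic-local/a/file1.txt", "target": "out/a/file1.txt"},
    {"source": "generic-local/a/file2.txt", "target": "out/a/file2.txt"}
  ]
}"""

summary = json.loads(summary_text)
# Same result as: jq -r '.files[].target'
targets = [entry["target"] for entry in summary["files"]]
print("\n".join(targets))
```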

All right. Let’s look at sorting. You can also sort the files. Let’s run the search we’ve been doing: jfrog rt s generic-local. That returned all the files. But suppose I want only the most recent file. So let’s create a new file and upload it into my generic-local repository. I’m creating a new file here, and now I upload it right into the root of my generic-local repository. There we go. This is the newest file in the repository.

Now let’s use search to get only the most recent file. I run the search again, this time with --sort-by=created, descending order, and --limit=1. Oh, yeah, a typo. generic-local --sort-by=created --order-by=desc. Let’s see, what did I get wrong here? It says flag provided but not defined. Yeah: it’s not --order-by, it’s --sort-order. Sorry about that. There we go. We got only the new file.
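The combination of --sort-by=created, --sort-order=desc, and --limit=1 amounts to "sort by creation time, newest first, keep one". The sketch below models that locally on hypothetical search results. To our understanding, file specs expose the same options as sortBy, sortOrder, and limit fields, but check the file spec documentation for your CLI version.

```python
from datetime import datetime

# Hypothetical search results; real ones come from jfrog rt s.
results = [
    {"path": "generic-local/a/file1.txt", "created": "2020-05-01T10:00:00.000Z"},
    {"path": "generic-local/newfile.txt", "created": "2020-05-20T09:30:00.000Z"},
    {"path": "generic-local/b/file2.txt", "created": "2020-05-03T12:00:00.000Z"},
]

def created_at(item: dict) -> datetime:
    return datetime.strptime(item["created"], "%Y-%m-%dT%H:%M:%S.%fZ")

def sort_and_limit(items: list[dict], limit: int = 1) -> list[dict]:
    """Local model of --sort-by=created --sort-order=desc --limit=<limit>."""
    return sorted(items, key=created_at, reverse=True)[:limit]

print([item["path"] for item in sort_and_limit(results)])  # ['generic-local/newfile.txt']
```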

All right. Let’s look at a few more interesting things. I’m going to move faster now so that we have time to cover them. We can use exclusions: when downloading files, I can exclude some of the files that would otherwise match. Let’s see how. I think I already have a spec that includes this.

All right, look at that. This is similar to the spec we had earlier, but now I’ve added exclusions. The exclusions field takes a list of exclude patterns. Here I’m saying: exclude everything inside the A directory in generic-local, and also exclude everything that matches this second pattern, because I don’t want any of the README files. I’m not using a placeholder here; just download everything from generic-local except those files. Let’s run this quickly into a new directory called Out 3: jfrog rt dl --spec. That did the trick. If we look inside this directory, those files are excluded.
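The selection rule for a spec with exclusions is "matches the pattern and none of the exclusions". This sketch models it with fnmatch, which approximates but is not JFrog CLI’s matcher; the repository paths and the out3/ target are hypothetical, and the exclusion patterns mirror the ones described above.

```python
import fnmatch

spec_entry = {
    "pattern": "generic-local/*",
    "target": "out3/",
    "exclusions": ["generic-local/a/*", "*README*"],
}

def selected(paths, entry):
    """Keep paths matching the pattern and none of the exclusions (fnmatch model)."""
    return [
        p for p in paths
        if fnmatch.fnmatchcase(p, entry["pattern"])
        and not any(fnmatch.fnmatchcase(p, ex) for ex in entry.get("exclusions", []))
    ]

repo_paths = ["generic-local/a/x.zip", "generic-local/b/README.md", "generic-local/b/y.zip"]
print(selected(repo_paths, spec_entry))  # ['generic-local/b/y.zip']
```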

What else? You can work with symlinks. By default, when you upload a symlink to Artifactory, what gets uploaded is the file the symlink references. But the commands also support… let me run --help. Let’s just scroll.

Let’s start with upload, because that’s what I wanted to show. When you upload files, the symlinks option is false by default, but if you set --symlinks=true, the symlinks themselves are uploaded to Artifactory. Then, when you download, the download command figures out that what was uploaded to Artifactory is a symlink, and you have the option to validate it: to make sure the symlink you’re downloading references the right file, with the same checksum as the one that was uploaded.
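The validation idea described above can be modeled locally: when a link is stored as a link, remember where it points and a checksum of the file it references, then compare both later. This is a sketch with hypothetical function names and SHA-1 as an illustrative checksum; it does not reproduce how Artifactory actually stores symlink metadata. It needs a platform that supports os.symlink.

```python
import hashlib
import os
import tempfile

def sha1_of(path: str) -> str:
    """SHA-1 of a file's content, read in chunks."""
    digest = hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()

def record_symlink(link_path: str) -> dict:
    """Metadata kept when a link is stored as a link (hypothetical model)."""
    return {
        "dest": os.readlink(link_path),
        "dest_sha1": sha1_of(os.path.realpath(link_path)),
    }

def validate_symlink(link_path: str, recorded: dict) -> bool:
    """True if the link points where it did and the referenced file is unchanged."""
    return (
        os.readlink(link_path) == recorded["dest"]
        and sha1_of(os.path.realpath(link_path)) == recorded["dest_sha1"]
    )

with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "libdemo.so.1")
    with open(target, "wb") as f:
        f.write(b"original content")
    link = os.path.join(tmp, "libdemo.so")
    os.symlink("libdemo.so.1", link)
    meta = record_symlink(link)
    print(validate_symlink(link, meta))  # True
    with open(target, "wb") as f:
        f.write(b"modified content")
    print(validate_symlink(link, meta))  # False
```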

We don’t have a lot of time, so I’m just going to briefly mention a few more capabilities that can help you manage your files and automate everything with Artifactory. There is a nice feature called sync deletes. It lets you say: I’m going to download the content of a specific folder to the local file system, and I want the local directory to be in sync with what I downloaded from Artifactory, so delete everything in that directory that does not exist in Artifactory. You can do the same when uploading: make sure the upload destination is in sync and includes only the files you uploaded, with everything else cleaned up. Both capabilities are supported by the sync-deletes option.
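For the download direction, sync deletes boils down to "after downloading, remove every local file under the root that was not part of the download". The sketch below models that on a temporary directory; it is an illustration of the idea, not JFrog CLI’s implementation, and all paths are hypothetical.

```python
import os
import tempfile

def sync_deletes(local_root: str, downloaded: set[str]) -> list[str]:
    """Remove files under local_root that were not part of the latest download.

    `downloaded` holds '/'-separated paths relative to local_root. Returns the
    relative paths that were deleted, sorted. Illustrative model only.
    """
    removed = []
    for dirpath, _dirs, filenames in os.walk(local_root):
        for name in filenames:
            full = os.path.join(dirpath, name)
            rel = os.path.relpath(full, local_root).replace(os.sep, "/")
            if rel not in downloaded:
                os.remove(full)
                removed.append(rel)
    return sorted(removed)

with tempfile.TemporaryDirectory() as root:
    for rel in ("a/keep.txt", "a/stale.txt", "b/keep.txt"):
        full = os.path.join(root, rel)
        os.makedirs(os.path.dirname(full), exist_ok=True)
        with open(full, "w") as f:
            f.write(rel)
    print(sync_deletes(root, {"a/keep.txt", "b/keep.txt"}))  # ['a/stale.txt']
```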

You can see it here. For both the upload and download commands, sync deletes takes a root path within which the files are kept in sync. There is more functionality in JFrog CLI, of course. You can also search inside archives and download archives according to the archive entries you specify.

So I hope I managed to show you a few of the advanced packaging options that let you manage files in Artifactory through uploads and downloads. You can do the same with file specs for copy, delete, and set-props. All of the functionality I described today can be done using either file specs or command-line arguments.

Okay, so with that, let’s move to questions. If you have any, I’d be happy to answer.

We got a lot of questions, so we’ll try to answer as many as we can now. For the questions we can’t answer, we’ll reply by email. One of the questions is: regarding Artifactory supporting all types of repositories, do you have any insight into the availability of Alpine local repositories in Artifactory? Is there a specific milestone for it yet?

Okay. So you’re asking about a feature that is planned for release in Artifactory. I think it’s better that we answer this question offline, because I’m not the right person in the company to speak about future roadmap items for Artifactory. We’ll check with the relevant product managers and get back to you with an answer.

Another question: is it possible to use JFrog CLI to publish build info from Bamboo or Jenkins, and how?

Yeah, so we haven’t looked at the build info integration of JFrog CLI today, but… let me share my screen again. Many of the commands you run with JFrog CLI can receive a build name and a build number. Let’s look at the upload command, for example. It’s somewhere here. There you go: build name and build number. Similarly, many other commands accept a build name and build number, including the commands that integrate with popular package managers. The collected build info can then be published to Artifactory.

Now, both Jenkins and Bamboo support build info through the Jenkins Artifactory plugin and the Bamboo Artifactory plugin, which include built-in build info support. You can also use JFrog CLI directly inside Bamboo, but that means you’ll have to execute it yourself through the command-line interface.

For example, in a Jenkins pipeline, you can make sure JFrog CLI is installed and just run JFrog CLI commands to do whatever you want. You can do the same with Bamboo. But if you’re asking whether there is a tight integration between Jenkins or Bamboo and JFrog CLI, there isn’t. JFrog CLI is, however, used by other CI integrations that we maintain, such as the Setup JFrog CLI action for GitHub and the Azure DevOps integration. Since Jenkins and Bamboo have a built-in native integration with those capabilities in their plugins, they don’t use JFrog CLI natively. I hope that answers your question.

Okay, I see a few people asked the following question: is it possible to use JFrog CLI with a Docker repository?

Yeah, sure. Here’s how you do it; let me share the screen again. JFrog CLI supports two commands related to Docker: jfrog rt docker-push, which pushes a Docker image to Artifactory, and jfrog rt docker-pull, which pulls a Docker image from a repository in Artifactory. JFrog CLI uses the Docker client here, but it also collects and records build info for those actions. When you pull Docker images from Artifactory, all the layers of those images are recorded as build dependencies in the build info. When you push a Docker image, its layers are recorded as artifacts, and the base layers are also recorded as dependencies.

From that point, you have build info for your Docker build. The same is true for other package managers; it’s the same concept. You provide a build name and a build number when running the command, like docker-push or docker-pull, and all the build info is collected and stored locally. When you’re ready, and don’t want to add anything else to the build, you just run jfrog rt build-publish, which publishes the build info.

Okay. Maybe we have time for one more question: is there a limit to the number of files I can upload or download with JFrog CLI?

The answer is no. You are not limited in the number of files you upload or download. Let me describe how it works. When you upload files to Artifactory, you provide a pattern for collecting files from the file system. JFrog CLI walks the file system, and the files that match the pattern are uploaded to Artifactory while the walk is still going on. For downloads, JFrog CLI issues an AQL query that returns the list of files to download, and while that list is being parsed by JFrog CLI, the files start downloading. So there is no limit. The only limits you have are the disk space you’re using and the time you’re willing to wait for the operation to complete.
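The reason there is no practical file-count limit is that matching and transferring are interleaved rather than building a full list first. A Python generator captures that shape: each match is handed to the consumer as soon as the walk finds it. This is a conceptual sketch with a hypothetical fake_upload stand-in and fnmatch matching, not how JFrog CLI is implemented internally.

```python
import fnmatch
import os
import tempfile
from typing import Iterator

def matching_files(root: str, pattern: str) -> Iterator[str]:
    """Lazily yield '/'-separated paths under root that match pattern.

    Matches are produced while the directory walk is still in progress, so a
    consumer can start processing them before the walk has finished.
    """
    for dirpath, _dirs, filenames in os.walk(root):
        for name in filenames:
            rel = os.path.relpath(os.path.join(dirpath, name), root)
            rel = rel.replace(os.sep, "/")
            if fnmatch.fnmatchcase(rel, pattern):
                yield rel

def fake_upload(path: str) -> None:
    # Hypothetical stand-in for the real transfer.
    print("uploading", path)

with tempfile.TemporaryDirectory() as root:
    for rel in ("a/one.zip", "a/notes.txt", "b/two.zip"):
        full = os.path.join(root, rel)
        os.makedirs(os.path.dirname(full), exist_ok=True)
        with open(full, "w") as f:
            f.write(rel)
    for path in matching_files(root, "*.zip"):
        fake_upload(path)
```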

Let me add that everything is optimized using checksums, for both downloads and uploads. If a file already exists in Artifactory, it will not really be uploaded: the bytes won’t actually be transferred to Artifactory, and a new reference is just added at the new path in Artifactory. Downloads work almost the same way. If you download a file that already exists in the location you asked to download it to, a checksum calculation runs, and if the file is already there, it won’t be downloaded again. So everything is optimized in that sense.
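The checksum optimization can be pictured as a content-addressed store: content is kept once per checksum, and a second path with identical content only adds a reference. On the download side, a local file whose checksum already matches is skipped. This toy model merges client and server into one object and uses SHA-256 for illustration; which checksums the real tools compare, and how they negotiate them, is not covered here.

```python
import hashlib
from typing import Optional

def checksum(data: bytes) -> str:
    # SHA-256 is used here for illustration.
    return hashlib.sha256(data).hexdigest()

class ToyRepository:
    """Content-addressed toy store modeling checksum-based deploy."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}  # checksum -> content, stored once
        self.paths: dict[str, str] = {}    # repository path -> checksum
        self.bytes_transferred = 0

    def upload(self, path: str, data: bytes) -> bool:
        """Return True if bytes were transferred, False on a checksum hit."""
        digest = checksum(data)
        self.paths[path] = digest
        if digest in self.blobs:
            return False  # only a new reference to existing content
        self.blobs[digest] = data
        self.bytes_transferred += len(data)
        return True

def needs_download(local_data: Optional[bytes], remote_checksum: str) -> bool:
    """Skip the download when the local copy already has the same checksum."""
    return local_data is None or checksum(local_data) != remote_checksum

repo = ToyRepository()
print(repo.upload("generic-local/a/app.zip", b"release bits"))  # True
print(repo.upload("generic-local/b/app.zip", b"release bits"))  # False
print(repo.bytes_transferred)                                   # 12
print(needs_download(b"release bits", repo.paths["generic-local/a/app.zip"]))  # False
```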

Just before we wrap up, one announcement from us. JFrog SwampUp, our user conference, is going online, and it’s going to run June 23rd to the 24th, or June 30 to July 1st. You’ll have the opportunity to get live DevOps training, join technical sessions similar to this one, and learn about the future of DevOps and everything that is going on, from cloud-native DevOps to DevSecOps and everything in between. So yeah, just a reminder that it’s happening, and we hope to see all of you there. Check out the details online, of course, and register.

Okay. Thank you. As he mentioned, you have the link for SwampUp in your resources list, so you can learn more about it at SwampUp.com.

Thank you, Ray-yel, for the great presentation.

Thank you, everyone, for joining us today. For the questions we didn’t answer, we will reply by email today or tomorrow at the latest. That’s it from us for today. We wish you a great day, and we hope to hear from you soon. Goodbye.