Hi guys, my name is Marija Kuester, I am IT service manager by Siemens and today my colleagues and me would like to give you some overview about motivation and challenges we have setting JFrog Artifactory running as the IT service in our company. So, three years ago, we had the following situation: There were a lot of developer teams working with different development platforms, using diverse decentralized solutions for hosting their binaries. We had teams hosting the binaries on TFS platform which is in principle not designed for binaries hosting, we have teams hosting their binaries in clear case. Some teams use different shares putting their binaries there.

Some teams hosted the binaries on the local machines, and some teams found some open solutions building shadow ITs. All these solutions had to fulfill some important company requirements like how to keep binary secure, how to share binaries with other projects, how to fulfill legal requirements, how to reduce costs for administration, or how to make everything more performant and available for developers. So it was definitely necessary in our company to establish a unified central managed platform for binaries hosting, fulfilling all functional and performance requirements coming from developers, and fulfilling all security and legal requirements coming from management and reducing costs for administration. Today, we are providing a global service within Siemens making Artifactory available for developer teams in our company. We have one dedicated team administrating all Artifactory assets spread worldwide, supporting developer teams to integrate Artifactory in their development pipelines, taking care about all security and legal aspects should be fulfilled in order to manage the binaries in the proper way and being permanently in touch with JFrog getting support from their side. So here is our servicing numbers. We have activity clusters in 15 locations spread worldwide on three continents.

We are hosting 43 servers in the background and have one team taking care of whole service setup, we have one supplier: JFrog. And we are supporting more than 250 software projects and serving around 6000 developers. We have a hundred and fifty million accesses on our system per month worldwide. So what are the advantages of setting Artifactory up as an IT service? We set up Artifactory as a single binaries hub in our company. We established Siemens as an inner source hub using Artifactory. We have pretty good overview about third party binaries being used in our company. scanning them for security and license vulnerabilities, we reduce shadow ITs because we have one central service used by many projects, we cover all legal requirements for binaries hosting, we cover all security requirements for binaries hosting, we reduce cost using centralized solution. So these are all advantages we have setting Jfrog products as IT service. And my colleagues, our innovation guy Andreas Mirring, and our service architect, Imre Pe m, will talk more about the challenges we have running Artifactory as the IT service in our company, and about solutions we implemented or about to implement in order to keep our service available, performant, predictable and secure.

So thank you, and guys, it’s your turn. After this introduction to our service, and how it looks like I want to give you some hints on how to set up your own servers and what supporting technologies you can use. For this, I first want to talk about the difference between the application and the service experience. The application experience is that what you all know of Artifactory this is basically the application created to use and is good for the users. This is provided by JFrog. However, this is not all that you have as the overall experience. You also have topics like the availability of your system integrations to third party products, costs or training studies provide to your users. And this is your task to make this great. And to talk a bit about the ratio between application and service experience. This might depend on your own offering, the share of the app experience could be bigger or smaller. For example, if you consider a system which has just a small scale with a few teams, it’s a single instance.

And you have no legal requirements and only use the system out of the box. It’s more or less the app experience. But if you’re in a situation where we have quite a huge scale with systems worldwide. And you have lots of laws like export control, or FDA approvals, and an integrated workflow with third party tools like source code repository in your own company. It might not be that important how good your app really is, but how great your service is. Consider, for example, our service with quite a lot of instances worldwide and dozens of views or business units that use it. It’s really important how we set up our service and it could be the same for you. And might be the best service offering can outweigh the great applications. So keep this in mind. To support you a bit and give you some hints, I want to do now analyze some supporting tools and workflows based on some stakeholders. For this, I want to pick the developer and the project manager. Let’s first look at the developer. So a typical developer has quite a few injection points with your service which you might not often think about like build failures or downtimes, on and off boarding, so that you can use your system, some bugs that he might experience during the usage, customizations and interfaces that you have to other company assets, and the supporting and training that you offer. I want to take a look in depth at the failures and downtime. So a typical scenario would be that a user has a broken build in the archive artifacts step of a CI/CD engine and he will ask himself: “Is Artifactory not really working? How do I find this out?” and in the end, if you’re a service provider, it means that you’re guilty until proven innocent.

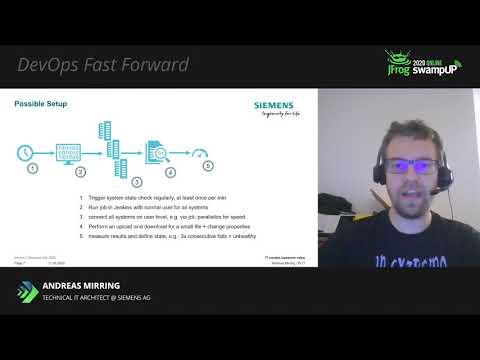

So people will always assume that it’s your fault if something isn’t working. So gather metrics and data to provide a clear picture, whether everything is up and running and everything is working fine. You have to prove this. It’s on you. Also, define the term running a system. So is it just a ping on the machine? Is this enough for your customers? Is it an HTTP 200 on the main page, do you have maybe a small sample file that you will upload and download? So when you say now the system is working? Or do we have maybe a defined service level agreement where you have a use case and say, we have to upload this artifact, we have some replications and access rate changes and because it’s customer proofed. Now, let’s quickly discuss a possible setup how you could measure these results. Typically, you should check this quite regularly I would propose at least once per minute because you always want to know the current status and you don’t want old information. You could for example, run a job in Jenkins, which uses a normal Artifactory user and which locks into all systems. In this case, we have three and you should connect as similar as the user as possible. For example, use JCI and you should also paralyze this for speed because you want to check off you can then for example, perform an update than download of a small file, compare the checksums before and after, and maybe change some properties on the system so that you interact a bit with the API’s.

Then measure all these results and compare them against the defined good state. So for example, you could say the system is always healthy if the results are fine. If you have three consecutive fails, it becomes unhealthy. If you do it like this, you will still quickly see any issues and you can ignore false positives like network flukes example. Be also sure that not only you have these results, but that you can show them an easy to understand overview to your customers like a landing page where everyone can access it and one traffic light per server which shows green or red. And also lock the results for long term analysis because you want to show that your uptime is for example, 99.9% according to the system. Another stakeholder would be the team lead or a project manager. So also they might have quite a lot of interactions with a service. For example, they often want to know the resource consumption that our projects have because they have to pay for it. They have a project onboarding in case of new projects, they want to go be up and running quickly. They have to maintain the project, for example, check permissions or add new users. And they might be an innovation driver, because they want their project to succeed. And all these want to know if you provide new features.

In this case, let’s discuss the project creation and maintenance. So if you want to provide a service, you require additional informations from your customers.

So you can do proper service delivery, like who is the owner of a repository, who will pay for it and who should be contacted in case of issues like a hacker attack, who is allowed to grant access to the project resources and Do we have any special legal requirements that we have to cover might be Xcode for export control, there might be FDA approval, there’s a lot. And you have to work together with our customer for this information, you cannot provide this on your own. But if you have these infos, it could be a baseline for future automations and easier interactions. Let’s discuss a possible setup how you could realize for example, an automatic project creation and maintenance, so a good approach would be to create a small UI for the users where they can log in and enter and request new projects so that you do not have any manual interactions. In this case, you should gather all the information, all the information that you need depends always a bit on your own setup, and you should store them on a separate DB. Do not put it just into the Artifactory because you need it globally, not just for one instance. And what you could then do if you have these informations, you could automatically create all your instances via the rest API, all the repositories, you could automatically add the replications. And you could, for example, create an Active Directory group in your corporate Active Directory, hand over the group ownership to the user that requested it so that he can now maintain all the users. And it’s not on your central servers to maintain this. And after you’ve done created the repos, you should also add them to your regular monitoring jobs because all your end users need is information like usage patterns of the repositories or the cost that they create or security issues like public anonymous rights to want to report this information to your users.

So you should also add them here. Yes, and after this short overview of the 10 possible service setups I want to hand now over to Imre. Hello, everybody. Welcome to SwampUp. My name is Imre Pem and as Marija introduced me, I’m a service architect on this Siemens Artifactory service. And in the next five minutes, I would like to introduce to you some growing pains that we experienced and some solutions that we came up with. So on the screen you can see again this map, I will show another representation of our servers, which are just pictures of servers to illustrate what problem we had once. Basically some servers became unresponsive for minutes or maybe just tens of seconds, but the strange thing was that there was no indication at all in our monitoring system what can be the problem. So clearly, our monitoring solutions – which are standard stuff like CPU and so on, storage and others like that – were not sufficient. So I would like to show you what other monitoring solutions we installed to circumvent this problem.

And we see here HTTP threads, access threads, background workers DB connections, JVM heap. These are all more things that are built on the Java Virtual Machine and being data. We read these with a JMS client, which is for our cases – you look here but there are other great clients as well that you can use. And as you can see, we now can monitor it, we can set alerts. We also have this health check, which is basically a standard script with upload some files, download some files and do standard tasks and measure its time. So this monitoring is really essential for our service at this scale, because without it, we wouldn’t be able to function as you see, we had before that sporadic problems and by the way, it took several weeks together with JFrog to find out that it was a problem running out of the HTTP thread pool that was enabled in Tomcat.

And other occasion they’re very good use this data in a great way where in one of our clusters was unresponsive for some time. And with this HTTP threaded data, we were able to find out that one of the nodes got all the real requests. As you can see, there were a high number of requests for some other nodes as well, but they were event based replication threats, and as the load balancing scheme are based on the lowest number of threads, it was a problem and of course, we just did a load balancing and solved the problem. But these experiences come with growing and it was great to have the JFrog support and also without these Java, virtual machine based monitoring, we couldn’t function. And so this was some problem that we had to overcome. I hope maybe you get some ideas from this and I would like to show you something else.

We have these servers, and they generate logs as all the servers all the time. In many applications, it’s not really a big interest. For many others, they just look at some logs maybe once a year, or they just archive them for for traceability. But for us, it’s really our daily life to dig the logs. I tell you an example. For example, one of the users come to us and say, “Hey, my files are not replicated to the remote location, what’s going on? ” And then we had to go to logs and see if he really uploaded the file, maybe just another file name mistake on his part. Maybe the replication ran on an error and failed. Maybe it’s still running so we really need to go to dig in all the logs and the user central log analytics solution. Again, it’s not a big deal. It’s not reinventing the wheel, but it’s something we couldn’t live without. And maybe your solutions will be interesting to you. We use Elastic Search, I know that there are great tools out there as well, like Splunk, like Sumo logic, but really, it enables us to function.

For example, I look at the last 15 minutes of access logs. Let’s see what’s going on. And you can see we see all the servers at once. We see all the actions of course we have the track all the fields, I just show you this because maybe I don’t want to show you some confidential data. But you can filter in those stuff and you can search stuff it really enables us to leave and quickly dig into logs. But it also enables some great insights. For example, we have this dashboard where based on these logs, we see interesting data, maybe for the management, but also to see the big picture. Like what number of requests are coming to the total of four systems.

You can see the weekends are less busy than the weekdays. You can see also the load distribution between the servers and some big numbers. It’s great to see the big picture. Or, we have this other tool other dashboard where we track the number of requests weekly on the servers. It’s a great tool to see if there are big shifts in load on our system like one of the server is getting more and more loads and it’s, for example, coming up many places like the screen server here. In this case, you might go look into the hardware, is it sufficient? Or maybe you need to increase something. And another big benefit for us is this stops errors. We report the top five errors maybe here… and it really can help us proactively find big troubles in our system. Again, we couldn’t function at this scale without these solutions. I hope it was useful for you. If you have any questions, feel free to contact me during the session or maybe later. I think my contacts are up there so thank you for watching and have a great time at SwampUp.