Migrate and Modernize: How to Upgrade Your DevOps as You Move to the Cloud

Understand how to transform your IT with AWS infrastructure and JFrog DevOps.

Join our webinar to learn how JFrog and AWS can help you transform your DevOps and infrastructure to a more nimble and secure environment. In this webinar, you will gain insight on the strategies and best practices to overcome the challenges of legacy infrastructure and older application build and deployment approaches.

In this webinar you will discover:

- Why and how to transform your IT architecture with a DevOps model.

- How to modernize your infrastructure as you transition workloads to the cloud.

- Why and how to employ microservices and containers across your environment.

- How to get unified visibility of your binary repositories across AWS and on-premises data centers.

Webinar Transcript

Good morning and good afternoon, everyone. My name is Courtney Gold, and I will be your moderator for today’s webinar. I first want to introduce the title today, it’s Migrate and Modernize with AWS, and we’ll have Bill Manning joining us today, and then we will also have, let me move it on, Junaid Kapadia. He will be the AWS partner joining our call today. So again, I want to thank you both for joining us.

Before I hand it off to both of you, I want to cover a couple of housekeeping items. Just a reminder, this is being recorded, so don’t worry if you miss something. We will be sending it out after this webinar. Secondly, don’t forget to ask any questions in the Q&A chat. We will have someone on this call to help answer those questions. I know no one wants to listen to me, so I will move it on, and you guys enjoy the webinar today. Thank you.

Courtney, thank you so much for the introduction, and everybody, welcome. I’m very excited to have Junaid as part of this discussion today. I’m Bill Manning, I’m one of the solution architects here at JFrog. Some of you have probably had interactions with me, if you’re our customers. I’ve also done a series of talks over time for JFrog in general, but like I said, I am very honored and happy to have Junaid here. Do you want to introduce yourself?

Thank you, Bill. Hello everyone, my name is Junaid Kapadia and I’m a solutions architect for AWS. I primarily focus on the startup segment, and in my career prior to Amazon, I’ve been in the IoT and healthcare tech spaces in general, both public and private cloud. It’s great to be here with Bill and JFrog, and I’m looking forward to a little chat today.

Excellent. So let’s move on to our agenda. So first of all, that was our quick introduction. Next, Junaid is going to give a quick explanation of some of the background on AWS: why the cloud, why AWS. We’ll also be going through and discussing some of the challenges and benefits of actually migrating from, say, your self-hosted on-premises installation, in this case Artifactory and our tool sets, when maybe you’re modernizing and moving your infrastructure for all your development needs up into AWS, as we see a lot these days.

And we’re going to talk about some nice ways to do that. Like, how do we move your SDLC, and how do you get that into your AWS infrastructure without missing a beat? At the end, we’ll discuss some resources that we have available on the JFrog side and also the AWS side to help with this. So without further ado, I’m going to have Junaid kick this off and let him take over for the next section.

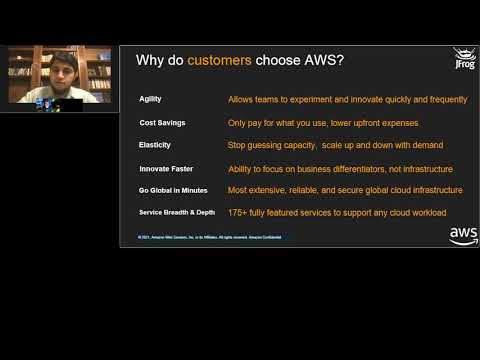

Thanks, Bill. Before we move forward, it’s actually very important for us to address why cloud, or why cloud infrastructure. So why are companies moving to the cloud is a common question. Right? And to put it simply, customers are moving to the cloud, or specifically a cloud-based infrastructure, for a variety of reasons, and if you summarize it, it would be, number one, they want to increase their IT agility. They want to attain some level of near unlimited scalability. They want to improve reliability, and of course, they want to lower cost, and they want to wrap all of that up with as much security as possible.

But at the end of the day, what do all of these buzzwords mean in terms of business value? So, moving to the cloud effectively allows customers to innovate faster. And that is because it allows the tech shops to focus their IT resources on developing applications that provide a differentiated business value that improves the customer experience for whatever product or service they’re providing instead of spending time on managing, say their infrastructure and data centers. So to summarize that again, the cloud allows businesses to innovate faster simply by removing the amount of time spent on infrastructure and even application management in the case of managed services.

So, with the business value addressed, the next question is, “Okay, which cloud provider?” And it’s important to note that when companies decide which cloud provider to select, they’re often basing their decision on a few traits, but if we focus on three of them, number one would be quality, number two, performance, and number three, the scale of the provider’s global data center infrastructure. Companies want to trust their IT assets, excuse me, their assets and their IT infrastructure to a provider that has the most advanced systems, security, processes and controls in place, and that delivers that exceptional level of performance coupled with the experience that a business would require and would expect from one of the largest corporations. And this is where AWS can help.

With cloud computing, AWS manages and maintains the technology infrastructure in a secure environment and businesses access those resources through the internet to develop and run their applications. So why do customers choose AWS? There are a variety of reasons, but in the interest of time, we’ll focus on six key tenets. Number one, and first, we’ve got agility. We said that innovating faster is one of the main business propositions of the cloud. So, AWS provides agility to its customers because you can experiment and innovate in rapid fashion by accessing a plethora of different types of services that range from compute to DevTools to databases, to networking, to serverless and more.

Second, you only pay for what you use. For example, a retail business may require fewer instances during an off peak period versus they require many more instances during a peak period. So they’ve got a sale going on. In that case, you’re going to pay much less during your off peak period and you’ll pay whatever is required during your peak period. Third, and this goes hand in hand with cost is the elasticity. So how much will you need to scale during your peak season versus what is your baseline versus when you’re not getting that much traffic? So you can automate both the scaling up and scaling down of your instances and whatever other specific service you’re using as required in terms of both scaling up and scaling down.
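To make the pay-for-what-you-use point concrete, here is a minimal arithmetic sketch in Python. Every number in it is an assumption made up for illustration: the hourly rate is not a real AWS price, and the instance counts and the 27/3 day split are a hypothetical retail month with a three-day sale. It compares scaling with demand against provisioning for the peak all month.

```python
# Hypothetical price: 10 cents per instance-hour (NOT a real AWS rate).
RATE_CENTS_PER_INSTANCE_HOUR = 10
HOURS_PER_DAY = 24


def cost_cents(instance_counts_per_hour, rate_cents=RATE_CENTS_PER_INSTANCE_HOUR):
    """Total cost in cents, given how many instances ran during each hour."""
    return sum(count * rate_cents for count in instance_counts_per_hour)


# Hypothetical 30-day month: 27 off-peak days on 4 instances, 3 sale days on 20.
off_peak = [4] * (27 * HOURS_PER_DAY)
sale = [20] * (3 * HOURS_PER_DAY)

# Elastic: the instance count follows demand hour by hour.
elastic_cents = cost_cents(off_peak + sale)

# Fixed: enough capacity for the sale is kept running all month.
fixed_cents = cost_cents([20] * (30 * HOURS_PER_DAY))

print(f"elastic: ${elastic_cents / 100:.2f}")
print(f"fixed:   ${fixed_cents / 100:.2f}")
```

Working in integer cents avoids floating-point rounding in the totals. The gap between the two figures depends entirely on how spiky the assumed demand is.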

Fourth, and we mentioned this earlier with respect to our primary business value, we want to be able to innovate faster. And AWS helps with this in a variety of ways. For example, you can spin up a server to test your MVP in a matter of a few minutes, and not worry about the underlying infrastructure, through a variety of different AWS compute services. Fifth, going global in a few minutes. So, what does that mean? Any business would like to be able to serve their customers in their specific locations. AWS at minimum has 24 geographical locations, and it has the ability to provide more than 230 security features across those locations.

Last but not least, AWS has more than 175 services that can effectively support any kind of cloud workload. That allows AWS to provide both breadth and depth. So there’s broad and deep cloud functionality addressing multiple use cases that AWS can provide. For example, you can leverage servers through EC2 instances, you can manage databases that are highly available through RDS and Aurora, and you can satisfy your compliance needs through our security and compliance tools. With respect to analytics, you can build data lakes on Amazon S3. You can run hybrid architectures and even use services that assist with migration.

So, all of this includes the AWS Marketplace, where partners like JFrog can provide their solutions to run on the AWS cloud. There’s plenty more we can talk about, but the key here is that all services are built upon compute, storage, database, and networking, and they run up and down the stack addressing the specific customer need. So you can use what provides differentiated value for you as the customer. With that being said, I’m going to hand it back over to Bill to talk more about cloud migration.

Excellent. Thank you so much for that, because really what it comes down to is that everything Junaid said was correct. I mean, these are the things that AWS really excels at. I mean [inaudible 00:08:26] largest longstanding infrastructures is cloud as service. Right? Providing all these pieces to make it easier to also offload, and to also lower the TCO. These are the things that we hear.

When we look at cloud migration, we see it more and more, especially over the past 20, 21 months. This has been a time period where we’ve seen a lot of customers come to us and say, “Hey, look, you know what? We’re looking to lower our TCO, we have more remote engineers now, we’re actually building more diversified products at larger scales and on more global infrastructure. Right? We’re looking for more compliance, or we want to iterate faster. How do we do that? How do we implement security into this?”

[inaudible 00:09:10] part of what it comes down to is, “Hey, look, we have a mandate that comes from maybe an executive or an initiative internally in the organization.” What are the major things that really cause companies to want to migrate from, say, self-hosted infrastructure that might be old and archaic? Or, like I said, there are people looking to reduce costs. And of course, one place that most people go initially is AWS. So that’s the reason why we’re having this joint partnership discussion here.

But some of the other factors behind it are understanding trends and the way that things are happening, the agility aspect of it. Right? The fact that you don’t have to maintain your infrastructure, or maybe your infrastructure is old and outdated, and you’re like, “You know what? This is too painful for us to go ahead and increase our OPEX. We don’t want to do that. We want to have somebody else maintain it for us. We want to go virtualize, because, you know what? We might start small today and we might scale tomorrow.” We’re also looking at the operational things: we want more resiliency, we want the larger geographical footprint. As Junaid said, being able to go global in minutes is pretty amazing.

I mean, you’re talking to a guy here with a lot of white on his face, who’s been in the industry for a while. I used to have to go buy servers and physically install them in locations and wake up in the middle of the night when starting some of my first companies. Now, cloud computing has been going on for more than a decade, but at the same time, there are still industries out there that are just starting to learn to trust the cloud. There’s still a level of trust that some companies might not have yet.

They’re also looking, like I said, to offload those costs. There are ways to do this. Even with things like CDN optimization, they’re looking to do more predictive cost modeling, because when you have your own infrastructure in place, it’s a lot harder to do that. And a lot of companies go through this and they have challenges that they see. I know I’ve seen it on my side, and I’m sure Junaid has seen it on his side. I mean, this is just the way it is.

I mean, coming from that older style mentality of, “We have to host it ourselves, it’s not secure, I want to have all my retention.” Right? There are all these things, and there are other models too, like the idea of, “What systems do we move?” Right? Do we want to move everything? Maybe we want to keep our Jira ticketing system hosted somewhere else, or we want to move our build infrastructure to the cloud, because, you know what? We have all this build infrastructure currently and it’s not really being utilized. So we want to have it in a central place where we have, like, a manufacturing facility for it.

Do we implement it ourselves in the cloud, or do we want it as a service that we offer to our internal customers? What kind of models are we looking for? Because there are some companies that are also like, “You know what? We’re actually going to go for a different deployment model, maybe a hybrid architecture, and keep some of the stuff on site, where our local developers hit, in our case, our Artifactory and Xray products locally. That way, there’s not a lot of cost when it comes to going out to the cloud and back.”

But then they also want to reap the benefits of having that scalable architecture in the cloud, where they might move their CI environments. They might move their build infrastructure, or they might virtualize it by using some of the tools that AWS offers for building code. Right? Or with us, we have our Pipelines product, and with Pipelines we can actually bootstrap the runtime environment. Because Pipelines runs in Kubernetes for the build-scale infrastructure, why don’t I put it there, and why don’t I utilize EKS to host that infrastructure and do my build processes?

There’s also understanding what it’s going to cost. What is the cost of my migration? When I’m resolving dependencies, in this case for our platform, do I want to have the dependencies in the cloud, do I want to have local versions, do I want to share between them? Then the other thing is, when we start thinking about the different routes that you can take, are we going to rehost it, reshape it, repurpose it? There are a lot of these questions when we start looking at that level of information. It’s really broken down into a couple of things. We have our on-premises infrastructure that is currently in place.

This is what we’ve been operational with for a while. We have this mandate: we’ve got to move to the cloud to lower costs and to increase efficiency, scalability, and global reach. And then you have to make those determinations as an organization. This is not our place, not my place, and it’s definitely not AWS’s place to make those decisions for you. But you need to understand there are methods by which we can help you do this more easily, whether you’re refactoring what you’re doing by saying, “Okay, we’re just going to take everything we did and we’re going to redo it completely up inside of AWS.”

Or in our case, maybe you want to move your current Artifactory or JFrog Platform from your self-hosted environment, and you want to rehost it inside of AWS because you want to scale more easily. Are you going to redo everything? These are things that you need to keep in mind. Are you going to go hybrid for a while? What’s the timeline? Is this a weekend project where you’re moving everybody, or is this one of those things where, you know what, we’re going to spend the next year in planning? And we’re going to talk about some of those aspects too.

Taking on this cloud journey is not for the faint of heart in a lot of cases, but there are ways to make it easier, where you only have to worry about the decision-making process and the planning process, and then you can come up with standardized ways to implement this, so that you can either do the weekend work or do a slow-scale roll from your organization up into the cloud. And so, saying that, the big thing is, how do I do it?

Now that we’ve explained some of the issues, some things to keep in mind, and the usual strategies we see out in the field, let’s talk about how I take my optimal software organization, which I’ve been running with for a decade, we’ll say, or for two years, and now we’re scaling. How do I get that from here to there? That’s what we’re going to discuss here, and these are the methodologies we’re going to go through. I’m going to do a quick recap just in case you guys … One of the things that Junaid touched upon was scale, like global reach, and getting out there.

So if you’re not familiar with all of our product lines, today we’re going to focus mostly on Xray and Artifactory, but Artifactory specifically, because for the other components here there are ways to move those also, and those are longer discussions. But of course we have Artifactory: universal binary repository manager, third-party transitive dependency management system, build management, SDLC optimization tool, giant metadata repository of everything that you produce. And we have our Xray product, so you can ensure that those third-party transitive dependencies are safe and secure and that you have compliance.

Everything from shift left at the developer level, all the way up to your final build processes, because our platform is end to end: code to cloud, developer to device, whatever you want to say. With the military, it’s compile to combat. Anything you want to say, however you define your SDLC. Those are two of the core components behind it. But also, say you do go for this global reach, right? Suddenly you’ve built a web service, and you have a series of Helm charts and Docker images that you want to go host in EKS.

And the big thing is, how do I get these out there? So we have our distribution platform, where you can build your software in Artifactory, use our distribution platform with our edge nodes, maybe set up edge nodes in other regions around the globe, and quickly create things called release bundles, which are digitally signed, consumed, immutable objects that might be your Helm charts and your Docker images, and you publish them out to the edge for deployments.

With edge nodes in these regions, suddenly you’re a global entity. Now you have the ability to do it rapidly and increase the velocity at which you can go, because change is the main thing companies are looking to do. How do we implement faster? How do we get those features out there, while still ensuring consistency and security? The edge nodes really help with this. Right? They help with the deployment scale aspect.

We have our Pipelines product, which is our CI/CD and CI orchestration tool. It’s all YAML-based, so it’s easy to use. You can templatize it. It’s built for infinite scale because when you do the build processes, you can design your images, your Docker images, that run these builds to fit your needs. They can be [Rel 00:17:32] they can be Debian-based. They can be Windows-based. You can templatize it. You can digitally sign it. So you have a blockchain-style ledger of all the things you’re producing. So you have a regulation behind it.

You can also include things like approval processes between steps, using our Artifactory processes too. With Pipelines, you can use things like promotion APIs, so you can promote your builds through the cycle and have that accountability. On top of that, say you deploy Artifactory into multiple regions. When you have multiple regions, we have our Mission Control product, and Insight, and Mission Control allows you to get the status of all the components of the system so that you can see how they’re operating.

And on top of that, we have Insight. So if you’re using any monitoring tools, we’re using a standard. That way you can keep an eye on velocity: how is my coverage in security? Are there any issues I’m running into? So you have constant monitoring and enablement. Now, that was a little product pitch, and I had to throw it in there so everybody understands all the pieces that can align when you are thinking about this, because we see a lot of customers say, “Hey, you know what? We started off simple, and we’re going into the cloud because we’re expanding as an organization.

We actually now have offices regionally all over the world. I want to make sure that all my developers are developing. I also want to make sure that I have an expedited method to deploy my services to where they need to be.” And that’s where the platform really excels, especially when hosted on a system like AWS, because it gives you that ability to scale like that and do it rapidly. I mean, when you’re building using our products, you can deploy Artifactory using our Helm charts into EKS pretty simply, and we have quick start guides. We’ll talk about these different things at the end. But let’s go to the crux of what we’re here for. Right? Methods of migration.

Now, understand that there are various ways in which you can do this. Like I said, it’s not for the faint of heart in most cases. Right? You need to make decisions, you need to make choices, and the things I’m going to show you should influence the choices you make and the methodologies that you use. Right? Like I said, whether you’re doing it over a weekend or you have a year-long migration path. There are various options available for moving from, say, your own infrastructure into the cloud, and we’re going to discuss methods such as replication, and we’ll talk about that in a minute. So there are various aspects of replication that we can discuss.

There’s also the import/export functionality that we offer as part of Artifactory: export my system and import it into my new environment. And then we have external methodologies. I put down things like DB export and rsync, but AWS actually has a series of tools that go way above and beyond that. Later on, when we get to that section, I’m going to have Junaid talk about a bunch of the services they offer that are pretty amazing and help expedite this too, because there are different methodologies, depending on the way you’re approaching this as an organization.

Look, we have 70% of the customers, almost 7,000 customers. No two customers are the same. So that’s the reason why, when we say this, we’re going to give you all the methodologies that you need to think about. It’s up to you, and remember, we’re here. We have our support team that will be there to support you during these kinds of migrations, if you have our support infrastructure and depending on your service level. This is very common for us and we’re used to it, and we can help you through some of the pitfalls that we see in a lot of cases.

Now saying that, like I said, there are things to keep in mind. I always like to start off with a cautionary tale of what things mean. Right? So of course you’ve got to plan your migration. Right? Like I said, this is one of those things where you’re taking on something, and you need to be familiar with AWS. Right? And we’ll talk about some of the resources that are available for your teams to get the knowledge they need. You also need to go ahead and work with your product teams. You need to work with your developers. You need to sit down and discuss how you want to do this. What’s the timeframe, how are we going to do this? And we’ll discuss some of those methodologies.

But also, I always recommend that before you migrate your instance, you clean it up. We have lots of good information online on how to clean up your instance and remove artifacts that don’t need to be there. Right? Remove things that you don’t want when you move to your new system, because in some cases you are lifting and shifting, but at the same time, you don’t want to bring all the baggage. Right? So this might be a time for you to go in and say, “We’ve had this running for four years in our infrastructure. We should probably go ahead and prune it a bit, because that’ll just help expedite the move.” Right?

So when you’re actually moving from one to the next, having a cleaner system could be … this could be your opportune time to do the house cleaning that you guys keep avoiding. Right? We see this, a lot of customers are like, “You know what? To be honest, we haven’t cleaned the system up probably in four or five years.” I’ll send some scripts over and they’ll go, “Oh my God, we didn’t realize we had so much stuff in here.” Right? It had been a place just to put things. Now, this is your opportune time.
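As a rough illustration of that pre-migration housekeeping, the sketch below picks cleanup candidates out of an artifact inventory in Python. It is not one of the scripts mentioned here and it does not call Artifactory: the `ArtifactRecord` shape, the example paths, the dates, and the one-year threshold are all hypothetical, and the inventory is assumed to have been exported from the instance by whatever means you already use.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class ArtifactRecord:
    """Hypothetical inventory row; not an Artifactory data structure."""
    path: str
    size_bytes: int
    last_downloaded: Optional[datetime]  # None means never downloaded


def stale_artifacts(records: List[ArtifactRecord], now: datetime,
                    max_idle_days: int) -> List[ArtifactRecord]:
    """Return artifacts never downloaded, or not downloaded within max_idle_days."""
    cutoff = now - timedelta(days=max_idle_days)
    return [r for r in records
            if r.last_downloaded is None or r.last_downloaded < cutoff]


# Made-up inventory and reference date, for illustration only.
now = datetime(2021, 11, 1)
inventory = [
    ArtifactRecord("libs-release-local/app/1.0/app-1.0.jar", 40_000_000, datetime(2017, 5, 1)),
    ArtifactRecord("libs-release-local/app/9.2/app-9.2.jar", 55_000_000, datetime(2021, 10, 20)),
    ArtifactRecord("libs-snapshot-local/tmp/test.zip", 5_000_000, None),
]

candidates = stale_artifacts(inventory, now, max_idle_days=365)
reclaimable_bytes = sum(r.size_bytes for r in candidates)

for record in candidates:
    print("review before migrating:", record.path)
print("bytes that would not need to move:", reclaimable_bytes)
```

The output is a review list rather than a delete list on purpose: deciding what is really baggage is a conversation with the teams that own those repositories.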

And lastly, goes without saying, always back up before you go. Right? Always make sure you always have a copy just in case as you’re doing this, just always back up, it’s always a good strategy, anyway. I’m sure I don’t have to say this to anybody, but at the same time, just throwing it out there. So let’s dive into the first set of ways to do things. When we do this, let’s talk about replication. Right? So, with replication … Oh, this came out all weird here. I don’t know what happened with the formatting, but we’ll go with it anyway.

So, with replication in Artifactory, there are a couple of different approaches, and I’m going to show you that in a minute after I talk about these slides. So first of all, we have straight push replication and pull replication. Right? So you’re going to have the source and the target, location A and location B. They’re self-explanatory. In the case of push replication, the source talks to the target: location A talks to location B, and location A says, “I’m going to deliver you stuff.” And it pushes the binaries from one to the other.

Now, when you set up replication, we have plenty of videos and documentation on it. But if you’re not aware of how this works, I’ll just show you quickly. I’m going to share my screen. So let me go to my window, let me go to my Safari and share this. So, if you take a look at what we have here is that anytime you have a repository definition, just the way you go in and you look at the repositories, I’ll just click any repository. You have your replication.

And in here, you can go in and you say, “I want to run my replication [inaudible 00:24:26].” I can make it event-based, so it’s real time. I can disable this and say, “I want to do it after hours.” And then I can go in and say, “Here are the locations to which I want to replicate. I want to sync deletes of the artifacts. I want to sync my properties. I want to sync my statistics.” Right? These are ways I can replicate local repositories between multiple locations.
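The options in that replication screen can also be written down as data. The Python sketch below builds one push-replication definition as a dictionary and prints it as JSON; it does not send anything. The field names are modeled on Artifactory’s replication configuration JSON as best I recall it, so treat them as an assumption and check them against the REST API documentation for your version. The repository key, the target URL, and the 2 a.m. schedule are hypothetical, and credentials are deliberately left out.

```python
import json


def build_push_replication(repo_key, target_url, *, event_based=True,
                           cron_exp="0 0 2 * * ?", sync_deletes=True,
                           sync_properties=True, sync_statistics=True):
    """Build a push-replication definition for one local repository.

    Field names are assumed, not confirmed by this transcript; verify them
    against the Artifactory REST API documentation before use.
    """
    return {
        "repoKey": repo_key,                    # local repository to replicate
        "url": target_url,                      # same repository on the target instance
        "enabled": True,
        "enableEventReplication": event_based,  # near-real-time, event-driven sync
        "cronExp": cron_exp,                    # scheduled run, e.g. after hours
        "syncDeletes": sync_deletes,
        "syncProperties": sync_properties,
        "syncStatistics": sync_statistics,
    }


# Hypothetical names: "team-a-docker-local" and the example.com host are placeholders.
payload = build_push_replication(
    "team-a-docker-local",
    "https://artifactory.example.com/artifactory/team-a-docker-local",
)
print(json.dumps(payload, indent=2))
```

Keeping the definition as data means the same function can be called once per repository and per target, which matters once the number of locations grows.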

The other way I can do things is through remote repositories, where I have a source that I want to pull from and I want to do it on a more request-based model. So I set up a remote repository in my Artifactory instance, and I can pull through. In other words, I might have location A be my self-hosted instance, and location B be the actual AWS instance. I could route my requests through the AWS instance, and it will pull the binaries out of my local source. I also might want to set up push replication in the meantime, because maybe I’m doing this as a slow roll. Right? I’m doing this over a year period.

I’ve sat down, I’ve actually met with my product teams, I’ve looked at what they have for repositories. We’ve decided that team A is the team that’s going to go first. And so maybe what I’ll do is set up my infrastructure in AWS already, keep my current version of Artifactory, take their repositories, and start push replicating the binaries from the self-hosted instance all the way up into AWS. And then when I’m done doing that and I’m ready to move over, I can kill the replication, point that team to the new build process, and have them start using AWS as the primary resource for this. Then I can go in and clean up Artifactory locally, but keep it in place, so I don’t lose those binaries.

And then I’ll talk about the next kind of replication in a minute in which you can actually keep those together and keep them working. So you can either have a combination hybrid or a backup even locally, just in case you want to have that. Right? So you have the ability to use replication as one of the methodologies, push or pull. The next thing you could talk about is, do you want to have multi-site? So maybe you want to use this. Right? So multi-site, you can either do star or mesh.

Let me talk about mesh first because, piggybacking on the previous example, maybe what I do is set up my own installation in AWS. I keep my current version in place, and then I set up a mesh topology between them. Having that mesh topology allows me to go through and say, “You know what? I’ll put a virtual repository in both. I’ll have my teams work with that, but maybe it’s a slow transition period.” But now I have the same set of binaries in both locations.

So I could have my self-hosted instance and my AWS instance, and this allows full mesh. Or maybe I want to have a source star. So this would be more push-based, where the source, my self-hosted instance, pushes to AWS. But here’s another cool thing. What if I suddenly go, “You know what? We’re going to replace this infrastructure, but when we do, we’re not just going to have a single region. We’re going to create a region in US East 1, we’re going to create one in EU Central, and then we’re going to create one in, say, APAC.” Right?

Now, maybe I set up a star-based topology, where I start off having all my local build processes set up there and I start publishing to those three other regions. Then eventually, maybe I go back to mesh, and then I have all three regions synchronized. So you have these abilities to be very flexible in your approach on how you want to use replication as a way to do this. Now, saying that, we have one more thing, and this is a new feature we just introduced, which is why I’m separating it from standard pull and push, event-based and cron-based, star-based and mesh-based.

Because one of the biggest problems we heard from our customers was, “Hey, your replication’s awesome. When you have two or three locations, that’s great. But what if I have 10 locations?” In this case, 10 regions, which is a very big thing. I mean, one of our biggest customers that we have with AWS is Riot Games. Riot Games is in 36 locations. Right? They have 36, and for them to set up this level of individual replication is arduous. The human error factor, even if you script it with the API, is tremendous.
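A quick way to see why hand-built replication gets arduous is to count the one-way replication definitions each topology needs. The Python sketch below does only that counting; it makes no Artifactory calls. The site names are illustrative stand-ins for the self-hosted instance and the three regions mentioned above, and the count is per repository, so it multiplies again by the number of repositories being replicated.

```python
from itertools import permutations


def star_links(source, targets):
    """Star topology: one source pushes to every target (one-way links)."""
    return [(source, target) for target in targets]


def mesh_links(sites):
    """Full mesh: every site pushes to every other site, in both directions."""
    return list(permutations(sites, 2))


# Illustrative site names only.
sites = ["self-hosted", "us-east-1", "eu-central-1", "ap-southeast-1"]

star = star_links(sites[0], sites[1:])   # self-hosted publishes to three regions
mesh = mesh_links(sites[1:])             # the three regions kept in sync with each other

print("star links:", len(star))
print("mesh links among 3 regions:", len(mesh))

# A full mesh of n sites needs n * (n - 1) one-way definitions per repository.
for n in (3, 10, 36):
    print(f"{n} sites -> {len(mesh_links(range(n)))} definitions per repository")
```

The quadratic growth in the mesh case is the part that makes doing this by hand, or even by script, error-prone at 10 or 36 locations.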

So we actually just recently introduced federated repositories. What are federated repositories? Federated repositories are an easy way for you to go in and create a full bidirectional mesh replication quickly. So you can have your self-hosted DC, set up your EU and your US regions, and create a circle of trust. Right? Now, we have all this online, this circle of trust between these entities. And let me share my screen again and I’ll show you, because one of the cool things about this is how easy it is to actually set this up.

So when I go in, before, we had this standard replication schema. Well, with federated repositories, by the way, you can go in and create a new federated repository. And just so you know, if you’re using your self-hosted instance, with the latest version you can now convert any repository to a federated repository right from the UI or from the API. But once you’ve actually defined a federated repository, one of the cool things about this is, how do I replicate it more expediently?

So, let’s go in and let me just say like, here’s an example of a federated Docker repository. Right? So here it is, it’s my federated Docker repo, and all I have to do is say, add repository. I click on here. I find my locations. I can click on here and say, I want to create the same repository in my New York instance. I also want to create the same repository in one of my Bangkok instances. So now, I’m going to go and hit done, and if you look, it’s going to allow me to say, I’m going to hit save, and what this is going to do is, now it’s going to go ahead and create the federated repo.

Now the federated repository, now that I’ve done that, what’s really cool is that, as soon as I hit done, I hit save, it’s automatically now taking those from that self-hosted DC and it’s immediately replicated, it created the repository in the other two locations, it’s replicating the binaries and it’s replicating the properties. It’s replicating basically a duplicate set, so it’s full bidirectional mirroring.
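The same setup can be scripted rather than clicked through. The sketch below only builds the JSON body for a federated repository definition; the field names (`rclass`, `packageType`, `members`) reflect my understanding of the Artifactory repository configuration JSON and should be checked against the current documentation. The member URLs and the helper name `federated_repo_config` are hypothetical.

```python
import json


def federated_repo_config(package_type: str, member_urls: list[str]) -> dict:
    """Build a repository configuration body with one entry per federation member."""
    return {
        "rclass": "federated",        # assumed repository class value
        "packageType": package_type,  # e.g. "docker"
        "members": [{"url": url, "enabled": "true"} for url in member_urls],
    }


# Hypothetical member instances standing in for the two locations in the demo.
config = federated_repo_config(
    "docker",
    [
        "https://newyork.example.com/artifactory/my-federated-docker",
        "https://bangkok.example.com/artifactory/my-federated-docker",
    ],
)
print(json.dumps(config, indent=2))
```

The resulting body would then be sent to the repository creation endpoint of the instance that initiates the federation.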

Now that’s as easy as it gets. So if you’re going from that, you can even do this with a single location, but if you are one of these companies that we see a lot these days, once you’ve actually set up that trust relationship, now you have bidirectional mesh, which makes moving from your self-hosted DC up into your cloud instance that much more expedient. Now, if you’re going to go, I would use this approach, even if you’re doing this over a couple of days, a couple of weeks, or maybe you have a year. We see more companies actually say, “Look, we’re not going to do it right away, we’re going to move teams.” That’s why I started with replication first.

But what if you’re one of those adventurous people, what if you’re one of those teams that are like, “We want to go ahead and we’re just going to go. You know what? We’re going to cut a week off from development. Everybody take a chill. Now let’s go ahead, and we’re going to spend a week migrating from my self-hosted. We’re going to go up, and we’re shutting the data centers down as soon as possible.”

And that’s when we have the methodologies around import/export. So Artifactory … There’s a couple of different ways. The first way we’re going to talk about is actually, how do you utilize Artifactory? Right? How do you utilize the tools? Then we’re going to talk about how you can use third-party tools, and that’s where Junaid is going to talk about some of the methodologies that are available. Now, basically you’re backing up your local instance, is what you’re doing, and then you’re moving it.

Now, I will err on the side of caution here to say, how big is your instance? You want to make sure that it’s not too substantially big, but one of the things you can do is, you can do this either at the system or at the repository level. Right? So you have options. You can do system configuration, system information, repositories, and the metadata. You can even move the repositories without the content, and then use one of the tools that Junaid is going to talk about to actually move the content. Right?

So maybe you have terabytes of data in your physical file store, how are you going to get that up into S3? Maybe S3 is what you chose as your hosting in this case. And we’ll talk about that in a bit, but you have the ability to export all the metadata minus the binaries. This is huge. So that those big files you can move over in a regular transition period. Maybe even slow move that, and then when you’re ready, you just export and then import. You have the system basically re-enabled in your system and you’re ready to go, and those are there.

Now this thing here is, the thing is [inaudible 00:33:23] you can also increase the log verbosity, so you make sure you have everything. That’s just a little detail. But the thing is that, by using these export functions, it allows you to basically snapshot your local and move it up into the cloud as easy as pie. Now, it can be a system record, or you can exclude metadata, you can exclude the content like builds and things like that. Right? You can do all the repositories or specific repositories. The metadata can also be excluded. Right?

This is the time for you to sit down and look at our documentation that we have. You can also, when you’re going ahead and you do this and you go to import, right, you just have to choose what you want to import. Right? You can change things also during that time period. You can do it as a server infrastructure folder style, or you can use it as a zip. Once again, there’s all these things, and where is that? How do I find that? So let me just show you quickly again, just so you guys know when you are looking at this, where it is located. So if I were to go ahead and look at this, I can just go to Artifactory. You can see here, we have the import things. Right? So here it is, import/export.

And on this page here, you could say, I want to export all repositories. I want to export specific repositories. Where on the server path is this information? Do I want to exclude the metadata? And below that is the import functions. You stand up your new instance. This is how you bring all that stuff into what you’re doing. Right? You can just do it this way. This is one of the major things. Now this is all around, how you do your repositories. Well, let’s go back and see the system. Right?

So one of the nice things about the system is that once again, you define your export repository path. Right? You say, I want to exclude content, metadata. You want to have compatible exports. You want to create a giant zip file. It’ll take a while. Right? You want verbosity. And we have the same thing too. So this is all here, but what if you want to script it, you don’t want to use our user interface? Well, you can actually just go to our API, and our API has all that same level of information. So you could script these functions. You can use our JFrog CLI if you want to.
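As a rough illustration of scripting the export, the sketch below prepares (but does not send) an HTTP request for a system export that leaves the binaries out. The endpoint path `/api/export/system` and the body field names are my understanding of the Artifactory REST API and should be verified against the documentation for your version; the base URL, token, and export path are placeholders, and `build_system_export_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_system_export_request(base_url, token, export_path,
                                exclude_content=True, verbose=False):
    """Prepare a POST request for a system export without sending it."""
    body = {
        "exportPath": export_path,          # directory on the server
        "includeMetadata": True,
        "createArchive": False,             # a single zip can take a long time
        "verbose": verbose,                 # more detailed logging
        "failOnError": True,
        "excludeContent": exclude_content,  # skip binaries, keep config and metadata
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/export/system",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


# Placeholder values; nothing is sent over the network here.
request = build_system_export_request(
    "https://artifactory.example.com/artifactory", "EXAMPLE_TOKEN", "/var/opt/export"
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Passing the prepared request to `urllib.request.urlopen` would actually trigger the export, so that step is deliberately left out of the sketch.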

So you have options on how you want to do a system export. This is a way for you to maybe do a slow roll of migrating binaries up, and then exporting the system and bringing it in. Or you can use this as a way for just backing up even. You can even just use it as a methodology to do backups around this. And the thing is that those are just some of the functions. I want to be cognizant of time so that we keep your interest.

But the next thing is, is that, one of the things I mentioned is, what if I want to export, or maybe I want to have it so that I don’t want to do it with you, JFrog, I don’t want to use your methods, I want to use some of the tools that are actually available from AWS, like migrating my data or migrating the binaries I have. I’m going to let Junaid talk about some of the things that are available here.

Thanks Bill. So what are native AWS services that can help with migration? So we’ve got a short list of a few here, and this isn’t necessarily an exhaustive list either. Each of these services addresses a different migration vertical. So for example, first, if you have applications you want to migrate, you can leverage AWS Application Migration Service, and it’ll make the process of migrating a complicated app much easier. And hopefully it’ll reduce the number of changes that you have to make for that migration.

Second, you’ve got the Migration Hub, which is really a tool that provides a holistic view of your overall migration status. Third, you’ve got the Application Discovery Service, where if you have too many apps or legacy applications and you need assistance in collecting information on-premises, you can leverage it by deploying it. Fourth, you’ve got AWS DMS, Database Migration Service, where, hey, if the components that JFrog provided aren’t enough, you can leverage DMS to migrate to and from on-premises and cloud databases, including but not limited to RDS, Aurora and so forth.

Fifth, you’ve got AWS SMS. That’s our Server Migration Service, which is an agentless service that you could deploy to your environment to migrate thousands of on-premises workloads. Number six, we’ve got the family of Snow devices, and Bill alluded to some of this earlier. What if you want to transfer data to S3, but you have terabytes of data and you have a certain timeline that you want to get it in? Relying on traditional internet connectivity won’t necessarily be possible for such large amounts of data. So, you can access our family of Snow devices, where you will be shipped a device that fits your specific need. You can load all your data into it, and then securely ship it back to AWS, and then it’ll magically appear on S3.
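Whether a network transfer fits the timeline is simple arithmetic. The sketch below estimates how many days a given volume would take over a given link; the 80% utilization default is an assumption for illustration, not an AWS figure, and `transfer_days` is a hypothetical helper.

```python
def transfer_days(size_tb: float, link_mbps: float, utilization: float = 0.8) -> float:
    """Estimate the days needed to move size_tb terabytes over a link_mbps link."""
    if link_mbps <= 0 or not 0 < utilization <= 1:
        raise ValueError("link speed must be positive and utilization in (0, 1]")
    total_bits = size_tb * 1e12 * 8                     # decimal terabytes to bits
    effective_bits_per_second = link_mbps * 1e6 * utilization
    return total_bits / effective_bits_per_second / 86_400  # seconds per day


# Example: 100 TB over a 1 Gbps link at the assumed utilization.
print(round(transfer_days(100, 1000), 1), "days")
```

If the estimate exceeds the migration window, shipping the data on a physical device becomes the more realistic option.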

And last but not least, we also have the AWS Transfer Family to transfer in and out of Amazon S3 as well as EFS, and it supports a variety of protocols and integrations with existing authentication systems along with DNS through Route 53, so there isn’t an impact on your customers either. And Bill, before I actually hand it back over to you, I’ll add that one of our basic recommendations when it comes to migrations is to follow a three-step process: assess, mobilize, and migrate. First, assess what is the most important workload for you to migrate, and many shops struggle through figuring this out. Sometimes they are looking at that big monolith that they want to migrate, and that might not be the first foray you want to do into the cloud. Maybe it is, maybe it isn’t, it’ll depend on your need.

Number two, you want to mobilize the resources that you need which could be, perhaps you’re doing it with your internal resources, with a certain set of applications, JFrog tools, AWS tools, or maybe you actually need support from a partner like JFrog to perform the migration. So you want to make sure you address that in your second phase, and then finally, your third phase being the migration itself, again, you could either be doing it on your own, or you could be leveraging a partner like JFrog or anyone else. At the end of the day, the choice is yours, and you have tools that are both native to AWS, as well as provided through third parties and our partners. So Bill, with that, back over to you.

And thank you for that, because actually some of the time, what we see is some of our customers are like, “You know what, we are going to do this, but what we’re going to do is, we’re going to export our system configuration. We’re going to take our database and actually move it from our self-hosted, say, maybe Postgres, and we want to move it directly into RDS.” And they might set this up in advance, so that it’s constant. Right? So that data is always being updated until they’re ready to cut over.

Same thing when they go ahead and they move there and say maybe they’re using an NFS locally, and now they want to take terabytes of data and move it into S3, using these methodologies and these tools supplied by AWS helps expedite the process. It might be one of the methods you want, maybe you don’t want to do replication, you don’t want to do the system import, you want to use a hardware based, or in this case, a software, hardware based combination. Whatever you decide you want to do, and this will allow you to do that. And the resources are there.

Speaking of resources, that’s one of the things we’re going to talk about next, because a lot of these things that we’ve discussed, there’s a lot of information that we have online. For JFrog we have our academy, we have tons of webinars, like the one you’re watching now. We also have a lot of community support behind the things that we do behind the scenes with the various providers of that level of infrastructure. Also, we’re in the AWS Marketplace and we have AWS Quick Start guides. And I know that Junaid and his team over there have a lot of resources that they have available also as part of this.

And knowing that allows you to go in and just know that this is a joint effort. Right? This is JFrog and AWS. And we have a lot of cross information between the two to help make this transition period for you that much easier, to help smooth the process, and then allow you to still retain your velocity and everything. Now saying that, we actually also have a lot of joint work we do with each other, like AWS PrivateLink and other things that we have as part of even our SaaS platform, if we host in AWS. Right? So you have those abilities.

We have a lot of other resources that are available because we’re doing a lot of work together. And the last thing I want to say is if you want to try any of this, we actually have a great 30-day trial. You can go right to the AWS Marketplace, just do a search for JFrog enterprise, Xray and pipelines artifacts. I mean, it’s a mouthful, but yes, you put it in. We’re giving a 30-day evaluation, go give it a shot. I think you’d be very happy with your choices, but this is out there today, we’ve had this for a while. Once you sign up, we’ll also maybe have a rep talk to you. If you want to talk to me, you could always send a message to our team, our sales team, and we can have a discussion.

But really go give it a shot, give it a whirl, try it out. And number one, thank you for attending, and you know what? I cannot thank you enough for being here today. It’s been an absolute pleasure. Thank you for all the foresight, the information, and just being a pleasure to work with you. You’re pretty rad.

Thank you, Bill. I appreciate it. It’s been a pleasure to be here as well, and likewise.

Excellent.

Awesome guys, that was a great, great presentation. We did have some questions come in. So I’m going to go ahead and just shoot those off to you one by one. The first question that came in was, “How can I get a quick and consistent access to remote artifacts?”

A quick consistent access? Okay. So to remote artifacts, of course, using Artifactory, we have the ability for you to go in and use remote repositories to point to third-party external sources. And once you set that up, if you watch any of our videos on basically introduction to Artifactory, it’s one of the paramount features that we offer. By having those remote repositories, what’s great is, if you have a thousand developers and developer zero brings in that binary, all 999 other developers will use the same binary itself, applying a level of consistency.

But in addition, when you use this with AWS, the great part about it is that that binary could be in your self-hosted and your AWS and then your AWS globally by using replication across the board.
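The caching behavior described in this answer can be pictured with a small simulation. This is a conceptual sketch only, not how Artifactory is implemented: the class `RemoteRepositoryCache`, the fake upstream function, and the artifact path are all hypothetical.

```python
class RemoteRepositoryCache:
    """Fetch each artifact from upstream once, then serve the cached copy."""

    def __init__(self, fetch_upstream):
        self._fetch_upstream = fetch_upstream
        self._cache = {}
        self.upstream_fetches = 0

    def get(self, path: str) -> bytes:
        if path not in self._cache:
            # First request for this path goes to the external source.
            self._cache[path] = self._fetch_upstream(path)
            self.upstream_fetches += 1
        return self._cache[path]


def fake_upstream(path: str) -> bytes:
    """Stand-in for a third-party registry."""
    return f"contents of {path}".encode("utf-8")


repo = RemoteRepositoryCache(fake_upstream)
# A thousand developers request the same binary.
results = [repo.get("libs/example-1.0.jar") for _ in range(1000)]
print(repo.upstream_fetches, len(set(results)))
```

Every developer receives byte-identical content while the external source is contacted only for the first request, which is the consistency point made above.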

Great, great answer. All right, the second question we have is, “How do I most efficiently distribute large artifacts across my organization?”

This is another part of our replication scheme that we have as part of this. If you go with our larger enterprise-level platforms, we actually have checksum-based replication. Now that is one of the things we do. So say you have three locations, A, B, and C, and you set this up as a full mesh topology, the source communicates to the target. And when it communicates to the target, it says, “Here’s a manifest of things I want to send you.”

And the other side, the target, says, “Hey, that’s great, source, I actually already have 90% of that, please send me just the variant, send me the delta between what we have.” And this allows you to actually expedite the way binaries get replicated, because you’re not resending everything, because we don’t duplicate binaries. That’s one of the big benefits in the way we actually have our Artifactory platform, and we carry that over to the replication.
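The manifest exchange described here can be sketched in a few lines. This illustrates the general idea of comparing checksums to find the delta; it is not JFrog’s actual replication protocol, and the function names and sample artifacts are hypothetical.

```python
import hashlib


def sha256_of(data: bytes) -> str:
    """Return the hex SHA-256 checksum of a blob."""
    return hashlib.sha256(data).hexdigest()


def missing_on_target(source_manifest: dict[str, str],
                      target_checksums: set[str]) -> list[str]:
    """Return the source paths whose checksums the target does not already hold."""
    return sorted(
        path for path, checksum in source_manifest.items()
        if checksum not in target_checksums
    )


# Source manifest: path -> checksum of its content.
source_manifest = {
    "app/1.0/app.jar": sha256_of(b"release 1.0"),
    "app/1.1/app.jar": sha256_of(b"release 1.1"),
    "app/latest/app.jar": sha256_of(b"release 1.1"),  # same bytes, different path
}
# The target already stores the 1.0 binary.
target_checksums = {sha256_of(b"release 1.0")}

print(missing_on_target(source_manifest, target_checksums))
```

Because the comparison is on content checksums rather than paths, the two paths that share the same bytes correspond to a single binary that needs to be transferred.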

Awesome. All right. The next question we have is, “How do I become more efficient and prevent different teams from using different libraries to do the same tasks?”

That is actually more of you than us. We actually provide the ways for you to actually segment, have it set up by teams and utilize permissions and permission targets. We now have a thing called projects where you can actually now encapsulate groups together into a project scale where you have like a project admin and a bunch of project users, but also too, you can use our projects to actually even go ahead and do cross team or cross project dependency sharing. But also, it’s more of an education portion. We have our JFrog academy where we can actually also show you how to implement.

We’ve actually made implementing Artifactory into your organization easy. And also the onboarding, by using a set of resources like our training. And also too, our product actually has Set Me Up instructions for every repository today to make it easier to onboard your developers and your tool sets, because we also have plugins for all major CI environments. So actually onboarding Artifactory is pretty easy to do. Once you get the hang of our methodologies, it becomes very ubiquitous with most organizations.

Awesome. All right, we got three more here. “What level of enhanced security should I expect to protect my organization when moving from onsite data center to cloud infrastructure with AWS?”

This actually might be more for Junaid in this case, because we actually have the software stuff to handle the third-party dependency management. But Junaid, AWS is known for its security aspects. Do you have any input here?

Yeah. When we think about security in the cloud, there are two aspects, there’s security in the cloud, and then there’s security of the cloud. So AWS will handle a good chunk of that for you and what you’re at the end of the day responsible for are the things that you host, that you run. So for example, if you’re running an AWS managed service that is already HIPAA compliant, HITRUST compliant, you don’t have to worry about those things, but you would have to worry about, “Hey, should this service be exposed to the public internet or not?” Maybe it’s not a public facing service. So you want to ensure that you’re leveraging the correct security groups and other AWS tools that are there to prevent it from being public.
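One way to check the “should this be exposed to the public internet” question is to scan security group rules for world-open ingress. The sketch below works on a plain dictionary shaped like the security group entries returned by the EC2 `describe_security_groups` call (`IpPermissions`, `IpRanges`, `Ipv6Ranges`); the sample group is invented for illustration and the helper name is hypothetical.

```python
def public_ingress_rules(security_group: dict) -> list[dict]:
    """Return the ingress rules that allow traffic from any address."""
    exposed = []
    for rule in security_group.get("IpPermissions", []):
        open_v4 = any(r.get("CidrIp") == "0.0.0.0/0" for r in rule.get("IpRanges", []))
        open_v6 = any(r.get("CidrIpv6") == "::/0" for r in rule.get("Ipv6Ranges", []))
        if open_v4 or open_v6:
            exposed.append(rule)
    return exposed


# Invented sample: HTTPS open to the world, database port limited to a private range.
sample_group = {
    "GroupId": "sg-EXAMPLE",
    "IpPermissions": [
        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}], "Ipv6Ranges": []},
        {"IpProtocol": "tcp", "FromPort": 5432, "ToPort": 5432,
         "IpRanges": [{"CidrIp": "10.0.0.0/16"}], "Ipv6Ranges": []},
    ],
}

for rule in public_ingress_rules(sample_group):
    print("publicly reachable port range:", rule["FromPort"], "-", rule["ToPort"])
```

In practice the dictionaries would come from the AWS SDK rather than being written by hand, and a finding like this is a prompt to review the rule, not proof of a misconfiguration.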

When we talk about security, there are also a variety of things with respect to compliance that come into question, I want to be able to track every kind of action that has happened by my developer, as well as by an external party. So there are services like AWS CloudTrail, which will log every single component of whatever is being done across your single account or multi account environment. And you can funnel that into say something like AWS Config and issue alerts and provide automated remediation.

So, I’m only mentioning CloudTrail here, AWS Config, security groups, but there’s a plethora of other services. At the end of the day, you wouldn’t have to worry about the physical data center security and anything that’s specific to a managed service, but you would only have to worry about whatever you open up to the public, or however you provision something that’s unique to your use case. So it’s a shared relationship, but it reduces the burden.

That was great, actually you touched upon something. A lot of companies have this elegant mentality, right, where maybe they don’t have the ability to pull in sources from third-party locations. And if they work with us, actually, one of the nice features is that we do have air gap enabled inside of our JFrog platform. So you could set up a location with an Artifactory instance, almost like a DMZ in this case, to pull in dependencies and get them into your regulated environment much easier.

And that can be done in a couple of different ways, and actually we do have some talks about that in some of the webinars on how to utilize the air gapping in our product because we’ve actually built it that way to be part of this. This actually carries over to another AWS thing is, is that, we’re also in the AWS GovCloud marketplace. So we’ve actually been approved, we’re in there, and this is where air gapping actually also comes as a substantial part of the offering that we have in conjunction with AWS.

Great. All right. Two more questions, thank you both for some great answers so far. The next one is, “What can I expect as improvements in my backup and disaster recovery if I move from on-premises to computing in the cloud, so my day to day operations are not hindered?”

Yeah. I can address some of that there. So, it depends on the type of workload you have, but the beauty of the cloud over here is that it offers you a plethora of different options. So for example, let’s say you’re talking about your database backups and you’re running MySQL on RDS. Well, if you’re running it in a multi-AZ fashion, you’ll always have another recovery point that’s actively running in a different availability zone, which is a completely different data center from the one that you’re actually running it in.

So if an availability zone gets wiped out, God forbid, due to some kind of event, then you know that you can rely on the second active copy from another location. And that’s just one example of managed services that AWS provides where you don’t have to worry about any of that. You just have to push a button and you can rest assured that everything will be replicated automatically. But then there are a bunch of other AWS services that we could provide in the comment section later, that would help you not just in terms of your day-to-day recovery, but also in terms of disaster recovery.

We have a lot of clients that like to back up things into Amazon S3 in addition to their active backup. So you’ve got situations of active, passive, passive, passive, whatever scenario you can think of, there’s a way that you could potentially do it within the cloud. Bill, if you can add to that, feel free to do so.

Absolutely. So inside of our Artifactory, inside of our platform, we have things like backup to S3. So you can actually use our product to go ahead and schedule backups that way. Another thing you can do is also set up Artifactory, set up our platform, in multi-region through full mesh topology, and we have companies that go out there and say, “I have US East and US West implementations. I want to have an active-active setup, or I want to have more DR where I might have US East as my primary, US West as my secondary and use replication to replicate between them to stay synchronized.”

And then I also have it. You have a massive amount of ability for you to go in and ensure that your uptime stays your uptime. Right? And that you don’t get bogged down by these, because using different methodologies in both ways allows you to do this, and we have it both in AWS, and we also have it inside the JFrog platform.

Wonderful. All right. The final question we have that just came in is, “If I moved to AWS, what options can I expect in regards to operating system, programming language, web application platform, database, and other services?”

That’s a really big question because that could take another hour to answer.

Yeah, definitely.

I guess that’s high level.

Yeah. There’s a variety of different options. So for example, if you need to run with respect to databases, if you need to run Oracle on AWS, you can do so, if you need to run Postgres or MySQL, you can do it in RDS fashion, which is managed, or you can do it via our native AWS service known as Amazon Aurora. So the options are there. There’s no limit to what you can do. In terms of compute, you can run traditional servers with EC2 instances, or you can containerize them on your own within those EC2 instances.

And once you realize that, “Hey, I’ve got a lot of containers and I can’t manage them on my own,” you can move to AWS orchestration services like Elastic Container Service, in which you can semi-manage the EC2 instance and leave the orchestration for the containers to AWS, or you could do completely serverless containers with AWS Fargate. So, across database, you have plenty of options, and the same would apply to other areas of networking, where you can abstract your security, as well as your networking, through different subnetting, as well as virtual private cloud capability.

And last I’ll mention, and Bill, maybe you can top it off here is, we also have a plethora of DevTools that you could use. And those DevTools could be partner DevTools, or you can use AWS native DevTools like CodePipeline, which will allow you to leverage CI/CD on the AWS cloud. Bill, anything to add there?

Yeah, and then when you use our Artifactory, we support over 30 package types right out of the box. Right? Native package types like Docker, Helm, npm, and all that we can integrate into things like code for AWS, which is also an implementation. You can also use our Xray product to make sure they’re safe and secure. You can use us in a multitude of different ways because that’s what we do. And we actually complement all the things that Junaid had mentioned, because as the binary manager, as the package manager, we have the support for this that we’re known for.

So it’s not just the support for actually building the software, but it’s also where you can store your builds so you have accountability. And that’s a major factor. So you’re looking at, between AWS and the native tools that they have, and Artifactory, and Xray, and all of the stuff we offer as a platform, you really have a massively scalable, enterprise-grade solution for building whatever you need to build, maintaining it, offering a level of safety, security, resiliency. It’s all there.

At the same time, looking at reducing cost and all the things that we discussed before, this discussion really was geared towards, yes, you have the ability to move over and start utilizing the amazing tool sets of the partnership that we have with AWS, and at the same time, do it succinctly, do it easily, and also provide an extra layer of security, resiliency, and also global scale.

Wow, wonderful answers. Great, great, amazing questions from the audience today. I know we’re wrapping up here on time. So I just want to quickly say thank you to both our amazing, wonderful expert speakers we had today. They took time out of their day to come and present to you guys. And so again, thank you to you both, and everybody else have a great rest of your day. Thank you very much. Bye-bye.

Cheers everyone. Thank you.

Thanks everyone. See you later.
